
Mish: the new successor to ReLU shaking up deep learning activation functions

2022-07-02 11:51:00 【A ship that wants to learn】

Research on activation functions has never stopped, and ReLU still dominates deep learning. Mish, however, may change that.

A paper by Diganta Misra titled “Mish: A Self Regularized Non-Monotonic Neural Activation Function” introduces a new deep learning activation function that improves accuracy over both Swish (+0.494%) and ReLU (+1.671%).

A small FastAI team used Mish in place of ReLU to break several previous accuracy records on the FastAI global leaderboard. Combining the Ranger optimizer, Mish activation, flat + cosine annealing, and a self-attention layer, they captured 12 new leaderboard records!

Six of the 12 leaderboard records. Every record was set using Mish instead of ReLU. (Highlighted in blue: the 400-epoch accuracy of 94.6 is only slightly higher than our 20-epoch accuracy of 93.8.)

As part of their own testing, a 5-epoch run on the ImageWoof dataset, they report:

Mish outperforms ReLU (P < 0.0001). (FastAI forum, @Seb)

Mish has been tested on more than 70 benchmarks, covering image classification, segmentation, and generation, and compared against 15 other activation functions.

What is Mish?

Looking directly at the Mish code is the simplest way to understand it. In short, Mish = x * tanh(ln(1 + e^x)).

For comparison, ReLU is max(0, x) and Swish is x * sigmoid(x).
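To make the three formulas concrete, here is a minimal plain-Python sketch that evaluates them at a few points. The helper names (`softplus`, `sigmoid`, `mish`, `swish`, `relu`) are chosen for illustration, and the overflow-safe forms of softplus and sigmoid are an implementation choice, not something taken from the paper.

```python
import math

def softplus(x: float) -> float:
    """ln(1 + e^x), rearranged so large |x| does not overflow."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def sigmoid(x: float) -> float:
    """1 / (1 + e^-x), evaluated without overflow for very negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def relu(x: float) -> float:
    return max(0.0, x)

def swish(x: float) -> float:
    return x * sigmoid(x)

def mish(x: float) -> float:
    return x * math.tanh(softplus(x))

# Print the three activations side by side for a few sample inputs.
for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"x={x:+.1f}  relu={relu(x):.4f}  swish={swish(x):.4f}  mish={mish(x):.4f}")
```

For negative inputs, ReLU returns exactly zero while Swish and Mish return small negative values; for large positive inputs all three approach x.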

A PyTorch implementation of Mish:
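A minimal sketch of what such a module can look like, written directly from the formula Mish = x * tanh(ln(1 + e^x)). The class name `Mish` is an assumption for illustration and the code is not copied from either repository; it relies only on the standard `torch.tanh` and `torch.nn.functional.softplus` calls.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class Mish(nn.Module):
    """Mish activation: x * tanh(softplus(x)), applied element-wise."""

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # F.softplus computes ln(1 + e^x) in a numerically stable way.
        return x * torch.tanh(F.softplus(x))

# Usage: apply the activation to a small tensor.
x = torch.tensor([-1.0, 0.0, 1.0])
y = Mish()(x)
print(y)
```

Because the module holds no parameters, it can be placed anywhere an `nn.ReLU()` would normally go.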

The Mish function in TensorFlow:

TensorFlow: x = x * tf.math.tanh(tf.math.softplus(x))

How does Mish compare with other activation functions?

The image below shows Mish's test results against several other activation functions. It summarizes as many as 73 tests across different architectures and tasks:

Why does Mish perform so well?

Being unbounded above (positive values can grow arbitrarily large) avoids the saturation that capping causes. In theory, the slight allowance for negative values permits better gradient flow, in contrast to the hard zero boundary in ReLU.
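The gradient-flow argument can be checked with a small autograd sketch. The `mish` helper below is an illustrative definition built from the formula, and the sample inputs are arbitrary; the point is only that ReLU's gradient is exactly zero for negative inputs while Mish's is not.

```python
import torch
import torch.nn.functional as F

def mish(x: torch.Tensor) -> torch.Tensor:
    # Illustrative definition taken from the formula x * tanh(softplus(x)).
    return x * torch.tanh(F.softplus(x))

# Two negative and two positive sample inputs.
x = torch.tensor([-2.0, -0.5, 0.5, 2.0], requires_grad=True)

# d(activation)/dx at each input, computed via autograd.
(relu_grad,) = torch.autograd.grad(torch.relu(x).sum(), x)
(mish_grad,) = torch.autograd.grad(mish(x).sum(), x)

print("relu grad:", relu_grad)
print("mish grad:", mish_grad)
```

For the two negative inputs the ReLU gradient is zero, so no learning signal passes through those units, whereas the Mish gradient stays nonzero there.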

Finally, and perhaps most importantly, current thinking holds that smooth activation functions let information propagate better through the neural network, which yields better accuracy and generalization.

That said, I have tested many activation functions that also satisfy many of these ideas, yet most of them failed to deliver. The main difference here is probably Mish's smoothness at almost every point on the curve.

This ability of Mish's smooth activation curve to push information through is shown in the figure below. In a simple test from the paper, more and more layers were added to a test neural network without a unifying function. As the layer depth increased, ReLU's accuracy dropped rapidly, followed by Swish. By comparison, Mish maintained accuracy better, probably because it propagates information better:

Smoother activations let information flow more deeply. Note how quickly ReLU degrades as the number of layers increases.

How do you put Mish into your own network?

PyTorch and FastAI source code for Mish is available in two places on GitHub:

1. Official Mish GitHub: https://github.com/digantamisra98/Mish

2. Unofficial Mish, using inline operations for speed: https://github.com/lessw2020/mish
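As a rough illustration of dropping Mish into an existing PyTorch model, the sketch below swaps every `nn.ReLU` layer for a Mish layer. The `Mish` class and the `replace_relu_with_mish` helper are hypothetical names written for this example and are not the API of either repository above; the toy model is likewise made up.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class Mish(nn.Module):
    """Illustrative Mish layer: x * tanh(softplus(x))."""

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return x * torch.tanh(F.softplus(x))

def replace_relu_with_mish(module: nn.Module) -> nn.Module:
    """Recursively replace every nn.ReLU child with Mish, in place."""
    for name, child in list(module.named_children()):
        if isinstance(child, nn.ReLU):
            setattr(module, name, Mish())
        else:
            replace_relu_with_mish(child)
    return module

# Toy model (hypothetical) with one top-level and one nested ReLU.
model = nn.Sequential(
    nn.Linear(8, 16),
    nn.ReLU(),
    nn.Sequential(nn.Linear(16, 16), nn.ReLU()),
    nn.Linear(16, 2),
)
replace_relu_with_mish(model)
out = model(torch.randn(4, 8))
print(model)
```

This only catches ReLU used as a layer; models that call `F.relu` inside `forward` need that call edited by hand. Recent PyTorch releases also ship a built-in `torch.nn.Mish`, which can be used in place of the hand-written class.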

Summary

ReLU has some known weaknesses, but it is generally very lightweight and cheap to compute. Mish has a stronger theoretical pedigree, and in testing it outperforms ReLU on average in both training stability and accuracy.

The added complexity is slight (on a V100 GPU, Mish adds about 1 second per epoch relative to ReLU). Given the improvements in training stability and final accuracy, the small amount of extra time seems worthwhile.

In the end, after testing a large number of new activation functions this year, Mish leads the pack, and many suspect it is likely to become the new ReLU of AI's future.

Original English article: https://medium.com/@lessw/meet-mish-new-state-of-the-art-ai-activation-function-the-successor-to-relu-846a6d93471f

边栏推荐

- 抖音海外版TikTok:正与拜登政府敲定最终数据安全协议

- 程序员成长第六篇:如何选择公司?

- Take you ten days to easily finish the finale of go micro services (distributed transactions)

- Installation of ROS gazebo related packages

- PHP 2D and multidimensional arrays are out of order, PHP_ PHP scrambles a simple example of a two-dimensional array and a multi-dimensional array. The shuffle function in PHP can only scramble one-dim

- Summary of flutter problems

- YYGH-BUG-05

- Writing contract test cases based on hardhat

- [idea] use the plug-in to reverse generate code with one click

- vant tabs组件选中第一个下划线位置异常

猜你喜欢

Amazon cloud technology community builder application window opens

![[visual studio 2019] create and import cmake project](/img/51/6c2575030c5103aee6c02bec8d5e77.jpg)

[visual studio 2019] create and import cmake project

Esp32 stores the distribution network information +led displays the distribution network status + press the key to clear the distribution network information (source code attached)

ESP32存储配网信息+LED显示配网状态+按键清除配网信息(附源码)

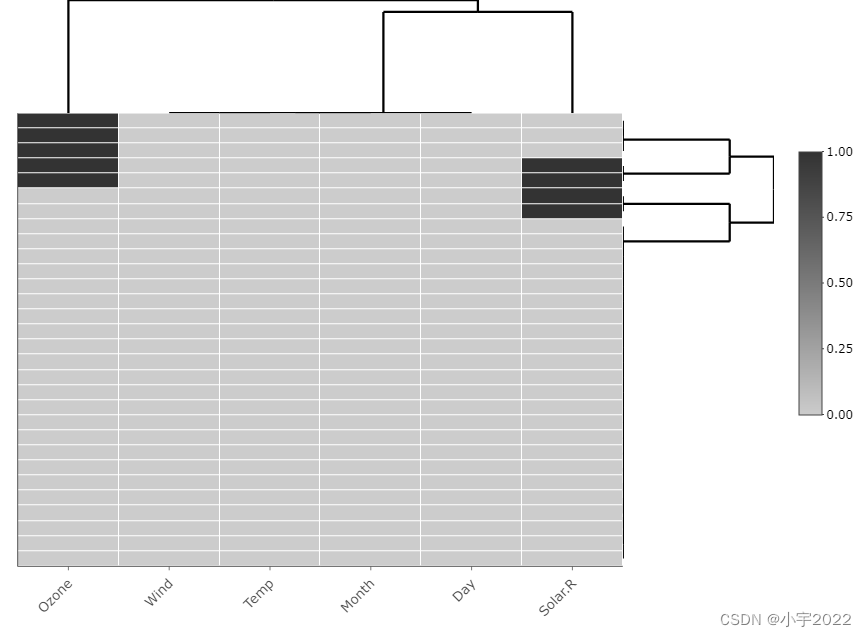

How to Visualize Missing Data in R using a Heatmap

基于Hardhat和Openzeppelin开发可升级合约(二)

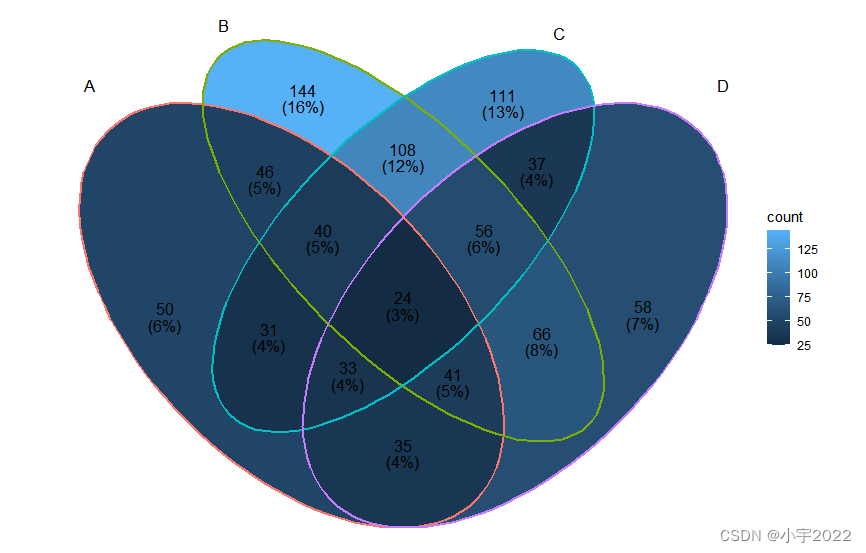

BEAUTIFUL GGPLOT VENN DIAGRAM WITH R

![[idea] use the plug-in to reverse generate code with one click](/img/b0/00375e61af764a77ea0150bf4f6d9d.png)

[idea] use the plug-in to reverse generate code with one click

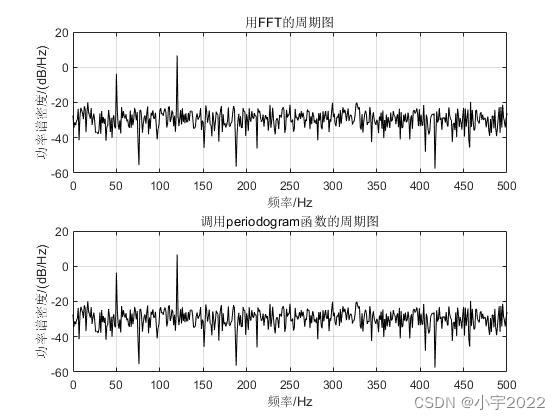

Power Spectral Density Estimates Using FFT---MATLAB

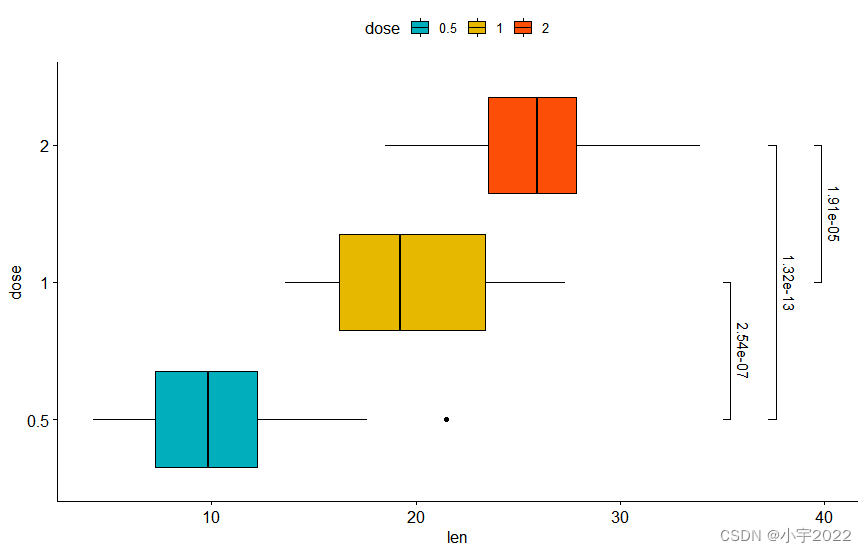

How to Add P-Values onto Horizontal GGPLOTS

随机推荐

K-Means Clustering Visualization in R: Step By Step Guide

Pyqt5+opencv project practice: microcirculator pictures, video recording and manual comparison software (with source code)

Power Spectral Density Estimates Using FFT---MATLAB

程序员成长第六篇:如何选择公司?

A white hole formed by antineutrons produced by particle accelerators

Programmer growth Chapter 6: how to choose a company?

GGPlot Examples Best Reference

R HISTOGRAM EXAMPLE QUICK REFERENCE

Webauthn - official development document

Is the stock account given by qiniu business school safe? Can I open an account?

C#基于当前时间,获取唯一识别号(ID)的方法

亚马逊云科技 Community Builder 申请窗口开启

Order by injection

Wechat applet uses Baidu API to achieve plant recognition

deepTools对ChIP-seq数据可视化

HOW TO ADD P-VALUES ONTO A GROUPED GGPLOT USING THE GGPUBR R PACKAGE

MySQL stored procedure cursor traversal result set

Implementation of address book (file version)

Thesis translation: 2022_ PACDNN: A phase-aware composite deep neural network for speech enhancement

Enter the top six! Boyun's sales ranking in China's cloud management software market continues to rise