当前位置:网站首页>In fact, the implementation of current limiting is not complicated

In fact, the implementation of current limiting is not complicated

2022-07-06 10:18:00 【CHQIUU】

Catalog

Leaky bucket algorithm (Leaky Bucket)

Token bucket algorithm (Token Bucket)

Summary

In high concurrency systems, at least three technologies can be used to protect the system : cache 、 Downgrade 、 Current limiting . This paper mainly introduces the current limiting Algorithm .

With the increase of website users , Expansion of business , The traffic scale and concurrency of our website will also continue to increase . At a certain stage, we will hope to control the traffic of the website to a certain extent . Because our business processing capacity is limited , We need to give priority to ensuring the normal operation of key businesses . Technicians have been committed to solving the problem of high concurrency completely , But so far, there is no solution that can be completely solved . We can only try to improve the performance of business processing , Do business split , Distributed , Conduct peak staggering treatment and other means . In fact, we can analyze it from every stage of the whole user request process , Adopt different schemes at different stages .

cache

Caching is easier to understand , In large and high concurrent systems , If there is no cache database, it will explode in minutes , The system will be paralyzed in an instant . Using cache can not only improve system access speed 、 Increase concurrent access , It's also about protecting databases 、 An effective way to protect the system . Large websites are usually mainly “ read ”, The use of caching is easy to think of . In large scale “ Write ” In the system , Caching also often plays a very important role . For example, accumulate some data to write in bulk , Cache queue in memory ( Production and consumption ), as well as HBase The mechanism of writing data and so on is to enhance the throughput of the system through caching or to realize the protection measures of the system . Even message middleware , You can also think of it as a distributed data cache .

Downgrade

Service degradation is when the server pressure soars , According to the current business situation and traffic, some services and pages are downgraded strategically , In order to release the server resources to ensure the normal operation of the core tasks . Demotion often specifies different levels , Face different exception levels to perform different processing . According to the way of service : You can refuse the service , Can delay service , Sometimes it can be served randomly . According to the scope of services : You can cut off a function , You can also cut down some modules . In short, service degradation needs to adopt different degradation strategies according to different business needs . The main purpose is that although the service is damaged, it is better than nothing .

Current limiting

Flow restriction can be considered as a kind of service degradation , Current limiting is to limit the input and output flow of the system to achieve the purpose of the protection system . Generally speaking, the throughput of the system can be measured , In order to ensure the stable operation of the system , Once the threshold of the required limit is reached , We need to limit the flow and take some measures to achieve the purpose of limiting the flow . such as : Delays in processing , Refuse to deal with , Or refuse to deal with part of it .

The core idea of flow restriction is to artificially discard some user requests , Do not deal with , This is equivalent to the subsequent operation of the user that is avoided from the root , Although it has a great impact on the user experience , But as long as the appropriate discard strategy is adopted , While effectively protecting the system , Minimize the impact on the user experience .

Current limiting will cause users to ( This time period is millisecond level ) System not available , In general, we measure the processing power of the system in terms of per second QPS perhaps TPS, Let's say the traffic threshold of the system per second is 1000, In theory, there's a... In a second 1001 When a request comes in , Then the request will be throttled .

Relevant concepts

PV(Page View) Traffic volume

Page views or clicks , Every time the user refreshes it is calculated once

UV(Unique View) The number of independent visitors

A computer client visiting the website is a visitor .00:00-24:00 The same client within is only calculated once

IP(Internet Protocol) Independent IP Count

00:00-24:00 Same inside IP The address is calculated once

TPS(Transactions Per Second) Transactions per second

It is the measurement unit of software test results . A transaction is a process in which a client sends a request to the server and the server responds . The client starts timing when it sends the request , End timing after receiving server response , In order to Calculation The time used and the number of transactions completed .

QPS(Query Per Second) Visits per second

Is the number of queries a server can query per second , It is a measure of how much traffic a specific query server handles in a given period of time .

QPS It's basically like TPS, But here's the difference , A visit to a page , To form a TPS; But a page request , There may be multiple requests to the server , The server requests for these , You can count in QPS In . For example, accessing a page will request the server 3 Time , A user's visit to the page will produce 1 individual “T”, produce 3 individual “Q”.

QPS To a large extent, it represents the busy degree of the system , There may be more than one disk per request IO, Network request , Multiple CPU Time slice , once QPS Over the preset threshold , You can consider increasing the capacity of the server , Avoid downtime caused by too many visits .

RT(Response Time) Response time per request

Directly determine the user experience

Current limiting algorithm

Common current limiting algorithms include : Counter 、 Leaky bucket and token bucket algorithm .

Counter algorithm

The counter is the simplest and crude algorithm . First, give the number of times an interface can be accessed in a specified time period , When the interface is accessed in this time period, record the number of times it is accessed , When the number of visits reaches its upper limit, subsequent visits are restricted . Of course, remember to empty the number of visits and re count at the end of each time period .

For example, a service can only process at most 100 A request . We can set up a 1 Seconds of sliding window , In the window 10 Lattice , Every lattice 100 millisecond , Every time 100 One millisecond move , Every time you move, you need to record the number of current service requests , Memory needs to be saved 10 Number of times .

The sample code is as follows :

/*

Interface (key)

Time unit (expire)

How many visits are allowed (limit)

Number of visits (value)

*/

if( There is... In the cache key){

value++;

if(value >= limit){

Cannot access

}

}else{

Add to cache key,value by 1

Set up key The expiration date is expire

}Leaky bucket algorithm (Leaky Bucket)

Leaky bucket algorithm is a very common current limiting Algorithm , Can be used to achieve flow shaping (Traffic Shaping) And flow control (Traffic Policing).

The main concepts of leaky bucket algorithm are as follows :

- A leaky bucket of fixed capacity , A constant constant rate of outflow of water ;

- If the bucket is empty , It doesn't need to run out of water ;

- It can flow into the leaking bucket at any rate ;

- If the incoming water drops exceed the capacity of the bucket , Then the incoming water drops overflow ( To be discarded ), And the capacity of the leaky bucket is constant .

for instance : If an interface is allowed to access in one minute 60 Time , Then from the beginning, no matter whether you are visited or not 59 Seconds only allow access 59 Time ,30 Seconds only allow access 30 Time ,10 Seconds only allow access 10 Time .

The sample code is as follows :

/*

Interface (key)

Time unit (expire)

How many visits are allowed (limit)

Decrement interval (interval)

Decreasing step size (step)

Remaining accessible times (value)

key Access time for (lastUpdateTime)

current time (nowTime)( Be careful nowTime The value of should be the time obtained by the application, not redis perhaps nginx Time of acquisition )

*/

if( There is... In the cache key){

value--;

if((nowTime-lastUpdateTime)>interval){

value=value-(nowTime-lastUpdateTime)/interval*step;

lastUpdateTime=nowTime;

}

if(value<=0){

Cannot access

}

}else{

Add to cache key, Set up value by limit;

lastUpdateTime=nowTime;

}Token bucket algorithm (Token Bucket)

The principle of token bucket algorithm is that the system will put tokens into the bucket at a constant speed , And if the request needs to be processed , You need to get a token from the bucket first , When there is no token in the bucket , Denial of service , Token bucket algorithm by issuing token , Limit the request frequency according to the issuing frequency of the token , Capacity limitation, etc .

The main concepts of token bucket algorithm are as follows :

- A bucket of fixed capacity tokens , Add tokens to the bucket at a fixed rate ;

- Hypothetical limitations 2r/s, According to 500 Add tokens to the bucket at a fixed rate of milliseconds ;

- The most storage in the barrel is b A token , When the bucket is full , The newly added token is discarded or rejected ;

- When one n Byte size packets arrive , Will be removed from bucket n A token , Then the packets are sent to the network ;

- If the token in the bucket is not enough n individual , The token will not be deleted , And the packet will be limited in flow ( Or throw away , Or wait in the buffer ).

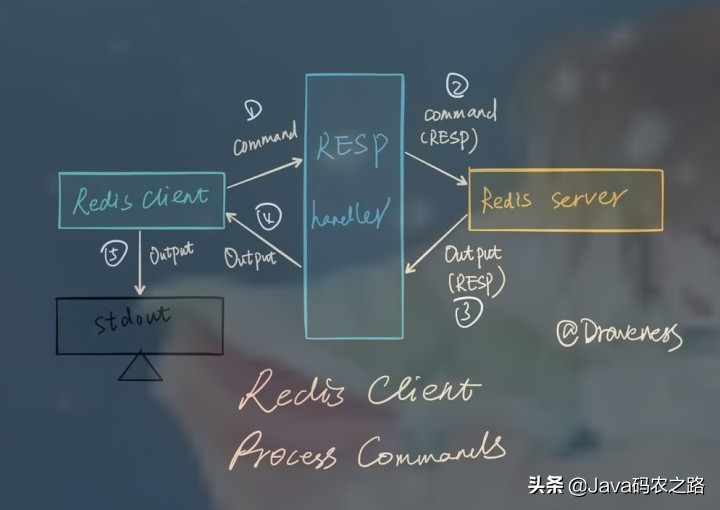

Here's the picture :

The token algorithm controls the output rate according to the rate of token release , That's the one above Departure Rate .Departure We can understand it as a message handler , Execute a business or call a RPC.

The sample code is as follows :

/*

Interface (key)

Time unit (expire)

How many visits are allowed (limit)

Decrement interval (interval)

Decreasing step size (step)

Remaining accessible times (value)

key Access time for (lastUpdateTime)

current time (nowTime)( Be careful nowTime The value of should be the time obtained by the application, not redis perhaps nginx Time of acquisition )

*/

Thread one ( The user requests this interface ):

if( There is... In the cache key){

value++;

if(value>=limit){

Cannot access

}

}else{

Add to cache key, Set up value by limit

}

Thread one ( Background scheduled tasks ):

while( In the past interval Time ){

all key Of value+step

}Compared with the leaky bucket algorithm , Token bucket algorithm can control and adjust the rate of data processing at runtime , Deal with sudden traffic . Increasing the frequency of putting tokens can improve the speed of overall data processing , The number of tokens obtained each time increases or slows down the issuing speed of tokens and the overall data processing speed . The leaky bucket algorithm is not good , Because its outflow rate is fixed , Program processing speed is also fixed .

As a whole , Token bucket algorithm is better , But the implementation is more complicated .

边栏推荐

- CAPL script pair High level operation of INI configuration file

- MySQL实战优化高手05 生产经验:真实生产环境下的数据库机器配置如何规划?

- MySQL的存储引擎

- MySQL ERROR 1040: Too many connections

- Random notes

- max-flow min-cut

- MySQL实战优化高手11 从数据的增删改开始讲起,回顾一下Buffer Pool在数据库里的地位

- C miscellaneous dynamic linked list operation

- MySQL combat optimization expert 06 production experience: how does the production environment database of Internet companies conduct performance testing?

- 高并发系统的限流方案研究,其实限流实现也不复杂

猜你喜欢

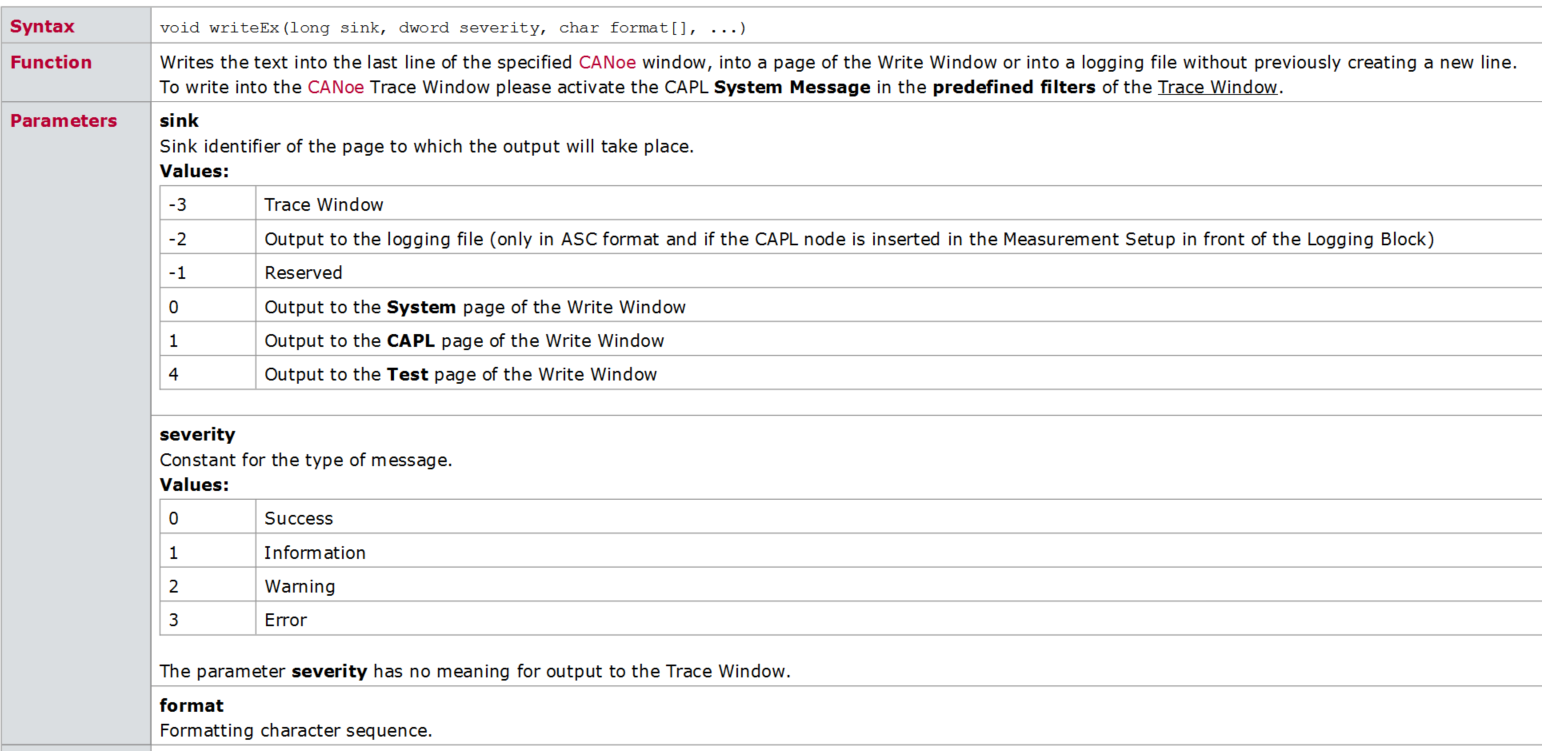

CAPL script printing functions write, writeex, writelineex, writetolog, writetologex, writedbglevel do you really know which one to use under what circumstances?

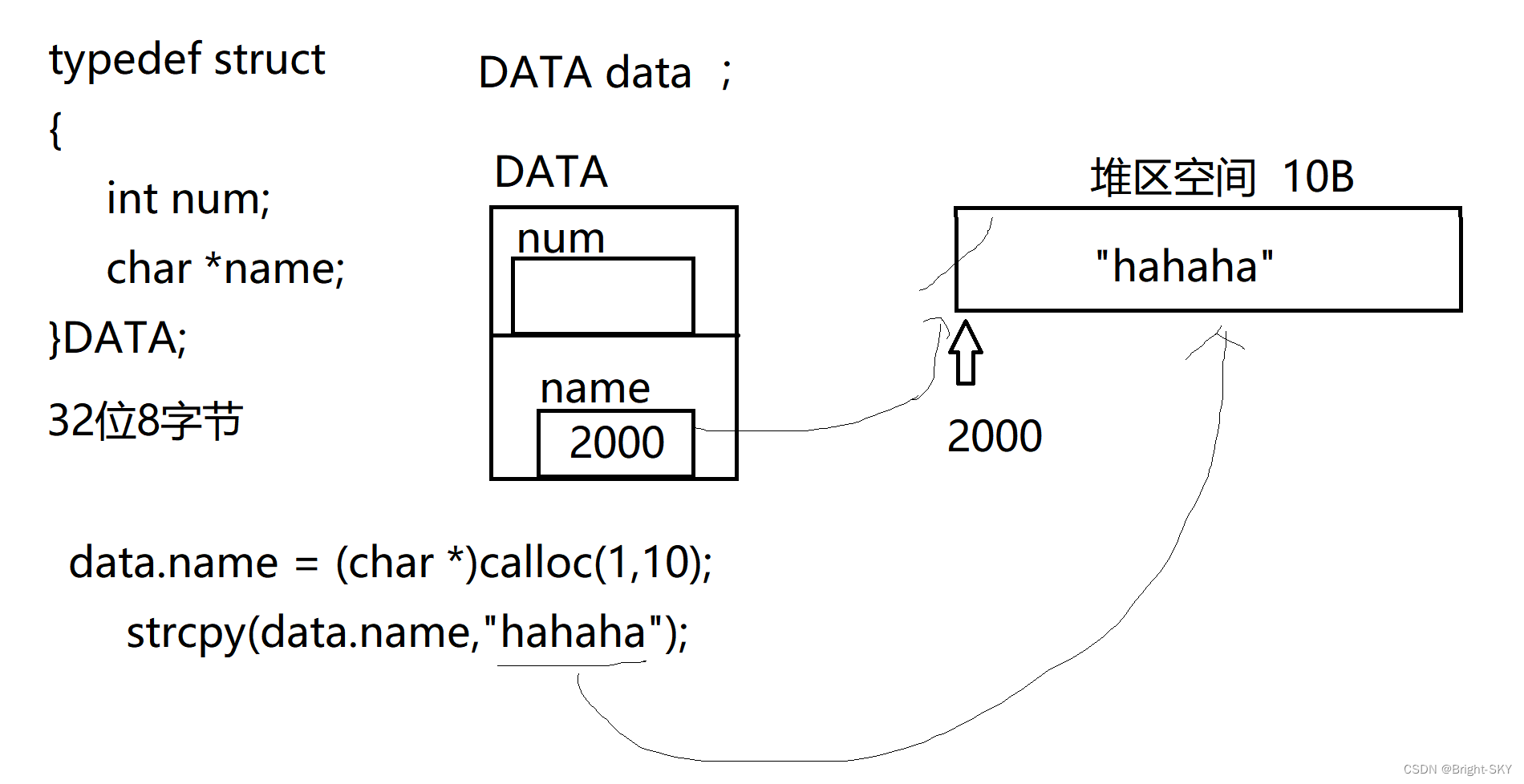

C杂讲 浅拷贝 与 深拷贝

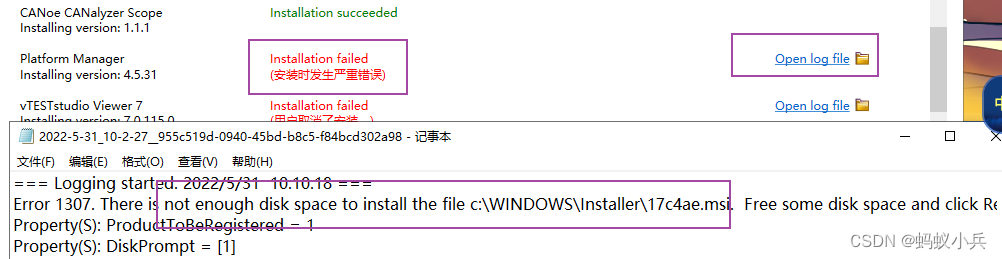

CANoe下载地址以及CAN Demo 16的下载与激活,并附录所有CANoe软件版本

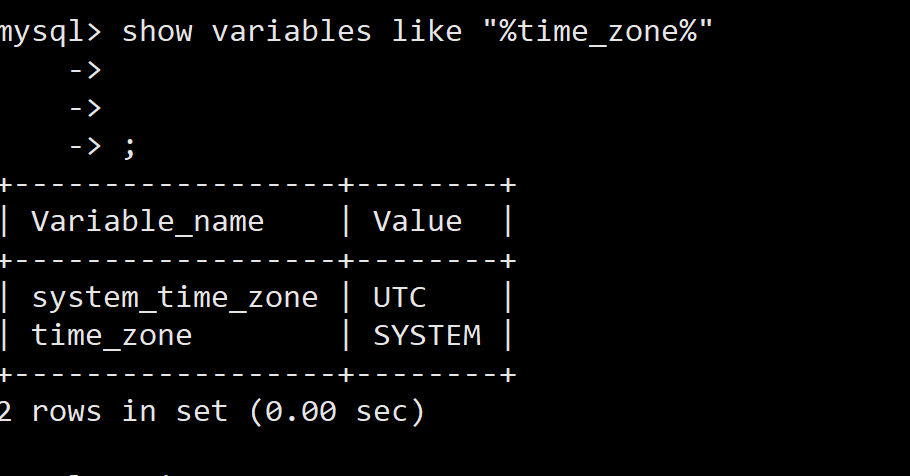

docker MySQL解决时区问题

What should the redis cluster solution do? What are the plans?

![[flask] crud addition and query operation of data](/img/fc/7a12ede31befed5f42b32b94ae5ecf.jpg)

[flask] crud addition and query operation of data

实现微信公众号H5消息推送的超级详细步骤

UEditor国际化配置,支持中英文切换

Embedded development is much more difficult than MCU? Talk about SCM and embedded development and design experience

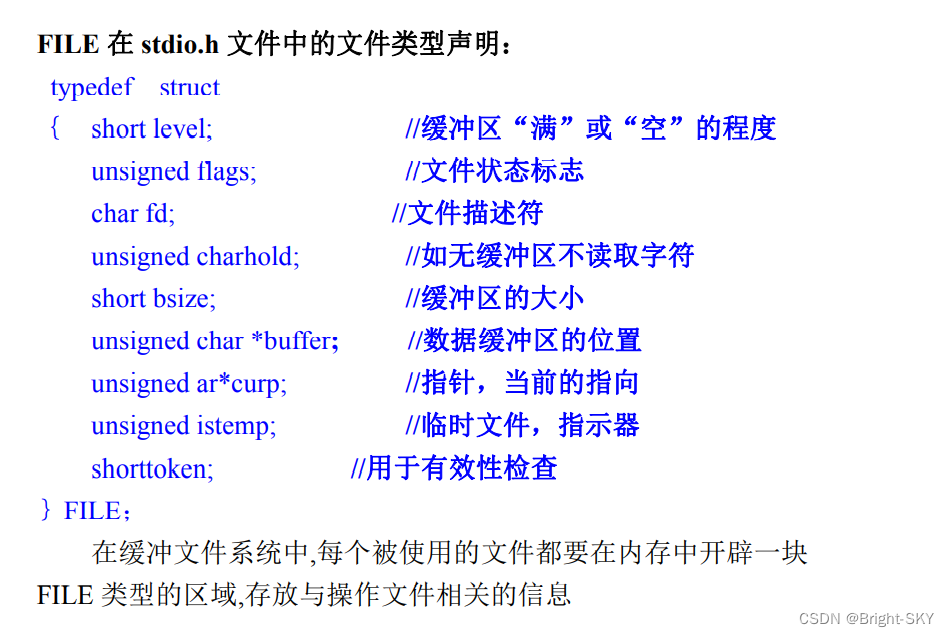

Preliminary introduction to C miscellaneous lecture document

随机推荐

Redis集群方案应该怎么做?都有哪些方案?

[one click] it only takes 30s to build a blog with one click - QT graphical tool

Zsh configuration file

South China Technology stack cnn+bilstm+attention

A new understanding of RMAN retention policy recovery window

Solve the problem of remote connection to MySQL under Linux in Windows

竞赛vscode配置指南

16 medical registration system_ [order by appointment]

Flash operation and maintenance script (running for a long time)

NLP路线和资源

The 32 year old programmer left and was admitted by pinduoduo and foreign enterprises. After drying out his annual salary, he sighed: it's hard to choose

Jar runs with error no main manifest attribute

① BOKE

Inject common SQL statement collation

max-flow min-cut

Bugku web guide

Installation de la pagode et déploiement du projet flask

简单解决phpjm加密问题 免费phpjm解密工具

Delayed note learning

再有人问你数据库缓存一致性的问题,直接把这篇文章发给他