CVPR19 - Tuning essentials: Bag of Tricks for Image Classification with Convolutional Neural Networks


2022-07-28 19:26:00 【I'm Mr. rhubarb】


Original paper

https://openaccess.thecvf.com/content_CVPR_2019/papers/He_Bag_of_Tricks_for_Image_Classification_with_Convolutional_Neural_Networks_CVPR_2019_paper.pdf

Paper-reading method

First impressions

Deep learning currently shines in computer vision. This success comes partly from innovations in network architecture and partly from better training strategies (loss functions, data preprocessing, optimization methods, etc.). However, many implementation details and tricks are either left out of papers or mentioned only in passing. This paper collects these tricks and evaluates them experimentally. Together they raise the top-1 accuracy of ResNet-50 on ImageNet from 75.3% to 79.29%. It is packed with practical content.

Getting acquainted

2. Training Procedures

This section describes the experimental setup used in the paper. I only briefly mention the relevant points here; see the original paper for the exact settings.

Baseline training strategy:
① randomly sample an image and decode it into float32 raw pixel values;
② crop randomly;
③ flip horizontally with probability 0.5;
④ randomly change saturation, contrast, and brightness;
⑤ add PCA noise.

No augmentation is used at test time. Model parameters are initialized with Xavier initialization, and training uses the NAG (Nesterov accelerated gradient) optimizer.
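The random crop (②) and flip (③) are easy to get subtly wrong, so here is a minimal sketch in plain Python. It rests on a few assumptions. The crop area fraction is drawn from [0.08, 1.0] and the aspect ratio log-uniformly from [3/4, 4/3]; these are the defaults of torchvision's `RandomResizedCrop` and, to my knowledge, match the paper's setting. The function names are hypothetical. The final resize to 224x224 is left out.

```python
import math
import random


def sample_crop_box(width, height, scale=(0.08, 1.0), ratio=(3 / 4, 4 / 3), rng=random):
    """Sample an (x, y, w, h) crop box for an Inception-style random crop.

    The area fraction is drawn uniformly from `scale` and the aspect ratio
    log-uniformly from `ratio`. After 10 failed attempts we fall back to
    the full image.
    """
    area = width * height
    log_lo, log_hi = math.log(ratio[0]), math.log(ratio[1])
    for _ in range(10):
        target_area = area * rng.uniform(*scale)
        aspect = math.exp(rng.uniform(log_lo, log_hi))
        w = int(round(math.sqrt(target_area * aspect)))
        h = int(round(math.sqrt(target_area / aspect)))
        if 0 < w <= width and 0 < h <= height:
            x = rng.randint(0, width - w)
            y = rng.randint(0, height - h)
            return x, y, w, h
    # Fallback: keep the whole image.
    return 0, 0, width, height


def random_hflip(rows, rng=random, p=0.5):
    """Flip a 2-D image (list of pixel rows) horizontally with probability p."""
    if rng.random() < p:
        return [list(reversed(row)) for row in rows]
    return rows
```

In a real pipeline, the returned box would be cut out of the decoded image and resized. The color jitter and PCA noise would then be applied to the result.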

3. Efficient Training

This section covers tricks for low numerical precision and large batch sizes. Half-precision floating point combined with a large batch size is now commonly used to speed up training. With the right tricks this comes without hurting accuracy, and some of them even improve it.

3.1 Large-batch training

A large batch size does not change the expectation of the stochastic gradient, but it reduces its variance; in other words, a large batch size reduces gradient noise. However, as the batch size increases, training converges more slowly: for the same number of epochs, the final result gets worse. The following tricks address this problem:

① Linear scaling learning rate: this one is simple. The learning rate grows linearly with the batch size. For example, if training starts with batch size 128 and learning rate 0.1, then with batch size 256 the learning rate doubles to 0.2.

② Learning rate warmup: a large learning rate at the very start of training can cause numerical instability. Training therefore starts with a small learning rate and gradually raises it to the target value. The usual strategy starts from 0 and increases the learning rate linearly to the preset value over the first few epochs. For more on warmup, see another post of mine.

③ Zero γ: a BN layer applies a learnable scale and shift, γx̂ + β. Set γ = 0 at initialization for the last BN layer of every residual block. Each residual block then outputs its input unchanged at the start of training, so the network initially behaves as if it had fewer layers and is easier to train.

④ No bias decay: weight decay (L2 regularization) is applied only to the weights of convolutional and fully connected layers. Biases and the γ and β parameters of BN layers are not regularized.
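The four tricks above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the paper's implementation:
- The function names are hypothetical.
- The base values (learning rate 0.1 at batch size 128) come from the example in item ①.
- The "1-D tensor means no weight decay" rule is a common heuristic for picking out biases and BN parameters.
- The residual block is a toy stand-in with no ReLU.

```python
def scaled_lr(batch_size, base_lr=0.1, base_batch=128):
    """① Linear scaling: the learning rate grows proportionally with batch size."""
    return base_lr * batch_size / base_batch


def warmup_lr(step, warmup_steps, target_lr):
    """② Linear warmup from 0 to target_lr over warmup_steps, then constant."""
    if step < warmup_steps:
        return target_lr * step / warmup_steps
    return target_lr


def residual_block_out(x, x_hat, gamma, beta=0.0):
    """③ Toy residual block: x + (gamma * x_hat + beta), where x_hat is the
    normalized tensor entering the block's last BN layer. With gamma = 0 and
    beta = 0 (BN's usual beta init) the block is the identity mapping."""
    return [xi + gamma * hi + beta for xi, hi in zip(x, x_hat)]


def split_weight_decay(params):
    """④ Split (name, shape) pairs into decay / no-decay groups.

    Heuristic (an assumption): 1-D tensors are biases or BN gamma/beta,
    so they are excluded from L2 regularization.
    """
    decay, no_decay = [], []
    for name, shape in params:
        (no_decay if len(shape) <= 1 else decay).append(name)
    return decay, no_decay


params = [
    ("conv1.weight", (64, 3, 7, 7)),
    ("bn1.weight", (64,)),
    ("bn1.bias", (64,)),
    ("fc.weight", (1000, 2048)),
    ("fc.bias", (1000,)),
]
decay, no_decay = split_weight_decay(params)
```

In a real framework, the two name lists would become two optimizer parameter groups: one with the usual weight decay and one with a weight decay of 0.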

LARS is effective for extremely large batch sizes (above 16k). Readers with plenty of GPUs to spare may want to look into it…

3.2 Low-precision training

Low-precision training means using FP16 (half-precision floating point) for numerical computation. Current GPUs are much faster at FP16 than at FP32 (owners of a 1080Ti or 2080Ti, shed a tear here). For example, the V100 delivers 14 TFLOPS in FP32 but over 100 TFLOPS in FP16. I won't say much about this part, since it mainly concerns those with plenty of GPUs. A hint: the 1080Ti has no fast FP16 compute…
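To see why FP16 needs care, here is a small sketch using only the standard library. Python's `struct` module can round-trip a float through IEEE 754 half precision (format code `e`). The helper `to_fp16` is hypothetical. It shows three things:
- FP16 has limited precision.
- 65504 is its largest finite value.
- Tiny gradients underflow to zero unless the loss is scaled up first.

This is why mixed-precision training usually keeps an FP32 master copy of the weights and applies loss scaling.

```python
import math
import struct


def to_fp16(x):
    """Round a Python float to IEEE 754 half precision and back.

    struct's 'e' format packs binary16; values too large to represent raise
    OverflowError, which is mapped to +/-infinity to mimic FP16 overflow.
    """
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)


# Limited precision: only 10 mantissa bits (machine epsilon 2**-10).
print(to_fp16(1.0001))           # 1.0
# Limited range: 65504 is the largest finite FP16 value.
print(to_fp16(65504.0))          # 65504.0
print(to_fp16(70000.0))          # inf
# A tiny gradient underflows to zero...
print(to_fp16(1e-8))             # 0.0
# ...unless the loss (and therefore every gradient) is scaled up first.
print(to_fp16(1e-8 * 1024) > 0)  # True
```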

The paper reports the experimental results for these tricks. Roughly speaking, some of them bring only marginal gains. My personal suggestion is to consider just warmup + linear scaling, which give the best value for the effort.

4. ResNet Architecture

I won't say much about the ResNet architecture itself (see Figure 1 of the paper); readers unfamiliar with it can also refer to my other blog post. The author discusses a few small structural tweaks.

① ResNet-B (Figure 2 of the paper): this is also the variant used in the official PyTorch implementation. It moves the downsampling in Path A from the first 1x1 convolution to the second convolution, the 3x3 one. A stride-2 1x1 convolution therefore no longer skips three quarters of the feature map.

② ResNet-C: replaces the 7x7 convolution in the input stem with three 3x3 convolutions, whose stacked receptive field is at least as large as that of the single 7x7 kernel.

③ ResNet-D: building on ResNet-B and ResNet-C, it changes the downsampling in Path B to a 2x2 average pooling (stride 2) followed by a 1x1 convolution. The shortcut path then no longer ignores three quarters of the feature map.
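Two small calculations show what these tweaks change:
- For ResNet-C, the receptive field of the stem convolutions.
- For ResNet-B/D, the fraction of input positions a downsampling layer actually reads.

This is a sketch with hypothetical helper names and simplified layer lists: square inputs, no max-pooling, and no channel counts.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of a stack of (kernel, stride) convs."""
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # each layer widens the view by (k-1) input steps
        jump *= stride             # distance in input pixels between adjacent outputs
    return rf


def fraction_read(size, kernel, stride, pad=0):
    """Fraction of a size x size input touched by a kernel x kernel window
    sliding with the given stride and zero padding."""
    touched = set()
    for i in range(-pad, size + pad - kernel + 1, stride):
        for j in range(-pad, size + pad - kernel + 1, stride):
            for di in range(kernel):
                for dj in range(kernel):
                    r, c = i + di, j + dj
                    if 0 <= r < size and 0 <= c < size:
                        touched.add((r, c))
    return len(touched) / (size * size)


# ResNet-C: 7x7/s2 stem vs. three 3x3 convs (the first one strided).
print(receptive_field([(7, 2)]))                  # 7
print(receptive_field([(3, 2), (3, 1), (3, 1)]))  # 11
print(receptive_field([(3, 1)] * 3))              # 7 (all stride 1)
# ResNet-B/D: a 1x1/s2 conv reads only a quarter of an 8x8 map...
print(fraction_read(8, 1, 2))                     # 0.25
# ...while a 3x3/s2 conv (pad 1) or a 2x2/s2 average pool covers all of it.
print(fraction_read(8, 3, 2, pad=1))              # 1.0
print(fraction_read(8, 2, 2))                     # 1.0
```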

As for the results, see the paper. Nowadays most people initialize their models from pre-trained weights, so personally I don't think these tweaks matter much. They are worth considering when training from scratch or designing a new network.

5. Training Refinements

Training refinements: here comes the main event, hahaha.

5.1 Cosine Learning Rate Decay

This trick uses a cosine decay schedule, often combined with warmup. The learning rate decays slowly at the start of training, roughly linearly in the middle, and slowly again toward the end. For details and an implementation, see another post of mine (implemented together with warmup). The paper compares warmup + cosine decay against step decay.

Judging from the results, the two reach similar accuracy, but cosine decay avoids having to tune the step schedule.
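The paper's schedule is η_t = ½ (1 + cos(tπ/T)) η. Here η is the initial learning rate, t is the current batch index, and T is the total number of batches, with the warmup stage ignored. Below is a minimal sketch with optional linear warmup in front. The function name and the convention that t and T count only post-warmup steps are my assumptions.

```python
import math


def cosine_lr(step, total_steps, base_lr, warmup_steps=0):
    """Cosine learning-rate decay, optionally preceded by linear warmup.

    After warmup: lr = 0.5 * (1 + cos(pi * t / T)) * base_lr, where t counts
    steps since warmup ended and T is the number of post-warmup steps.
    """
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    t = step - warmup_steps
    T = max(1, total_steps - warmup_steps)
    return 0.5 * (1 + math.cos(math.pi * t / T)) * base_lr


schedule = [cosine_lr(s, 100, 0.4, warmup_steps=5) for s in range(101)]
```

Unlike step decay, the only knob here is the total length of training; there are no milestones to tune.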

5.2 Label Smoothing

Label smoothing starts from the classification loss (cross-entropy). It introduces a small constant that turns the target label from a one-hot vector into a probability distribution, which helps prevent overfitting. For details and an implementation, see my other blog post.
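With K classes and true class y, the smoothed target is q_i = 1 − ε if i = y, and q_i = ε / (K − 1) otherwise. Below is a minimal sketch in plain Python. The function names and the default ε = 0.1 are assumptions for illustration.

```python
import math


def smooth_labels(y, num_classes, eps=0.1):
    """q_i = 1 - eps for the true class y and eps / (K - 1) for every other class."""
    off = eps / (num_classes - 1)
    return [1.0 - eps if i == y else off for i in range(num_classes)]


def log_softmax(logits):
    """Numerically stable log-softmax."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def cross_entropy(target_dist, logits):
    """-sum_i q_i * log softmax(logits)_i."""
    return -sum(q * lp for q, lp in zip(target_dist, log_softmax(logits)))


confident = [10.0, 0.0, 0.0]
hard_loss = cross_entropy(smooth_labels(0, 3, eps=0.0), confident)
smooth_loss = cross_entropy(smooth_labels(0, 3, eps=0.1), confident)
```

With hard labels, an extremely confident correct prediction has almost zero loss. With smoothed labels, the same prediction is still penalized, which discourages the logits from growing without bound.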

5.3 Knowledge Distillation

Knowledge distillation uses a teacher model to train a student model. The teacher is usually a high-performing pre-trained model. This kind of training lets the student improve without increasing its capacity. The implementation is fairly simple: an extra distillation loss penalizes the difference between the softmax outputs of the student and the teacher.

The temperature T (not to be confused with the teacher model) makes the softmax output smoother; see my other blog post. The student can then learn the teacher's knowledge about the label distribution from the teacher's outputs.
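As I understand the paper, the total loss is ℓ(p, softmax(z)) + T² · ℓ(softmax(r/T), softmax(z/T)). Here p is the true label distribution, z the student logits, r the teacher logits, T the temperature, and ℓ the cross-entropy. Below is a minimal plain-Python sketch of that objective. The function names are hypothetical and the default temperature is an arbitrary choice. The T² factor keeps the soft term's gradients on a comparable scale as T grows.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits / temperature (higher temperature = smoother output)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(p, q):
    """H(p, q) = -sum_i p_i * log q_i for two probability vectors."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(true_dist, student_logits, teacher_logits, temperature=4.0):
    """Hard-label loss plus temperature-scaled soft-target loss."""
    hard = cross_entropy(true_dist, softmax(student_logits))
    soft = cross_entropy(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))
    return hard + temperature ** 2 * soft


teacher = [2.0, 0.5, -1.0]
student = [0.0, 1.0, 0.0]
loss = distillation_loss([1.0, 0.0, 0.0], student, teacher)
```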

5.4 Mixup Training

Mixup is another form of data augmentation. It randomly samples two training examples (xi, yi) and (xj, yj) and forms a new example from them by linear interpolation.

The weight λ ∈ [0, 1] is drawn from a Beta distribution. During training, only the new mixed examples are used.
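The interpolation is x̂ = λ·xi + (1 − λ)·xj and ŷ = λ·yi + (1 − λ)·yj, with λ ~ Beta(α, α). Below is a minimal sketch on flat feature lists and one-hot labels. The function name and the default α = 0.2 are assumptions for illustration.

```python
import random


def mixup(x_i, y_i, x_j, y_j, alpha=0.2, rng=random):
    """Mix two examples with lam ~ Beta(alpha, alpha).

    x_* are flat feature lists and y_* are one-hot (or soft) label lists;
    the same lam is used for inputs and labels.
    """
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1 - lam) * b for a, b in zip(x_i, x_j)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y_i, y_j)]
    return x, y, lam


rng = random.Random(0)
x, y, lam = mixup([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], rng=rng)
```

In practice, one usually mixes a minibatch with a shuffled copy of itself rather than sampling pairs one at a time. The resulting soft labels are then trained with the ordinary cross-entropy against a probability distribution.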

The paper reports the combined results. My personal recommendation is cosine decay + mixup, although I haven't actually tried mixup myself, so I can't vouch for how well it works. Distillation is mostly used for model compression.

6. Transfer Learning

Finally, the author also verifies that these tricks remain effective on downstream tasks such as object detection and semantic segmentation. I won't include those figures here; please refer to the original paper.

Review

I had heard of this paper from Li Mu's team for a long time, and many of its methods are widely used in competitions, but I never took the time to read and summarize it properly. I summarized it over the past few days. It offers valuable guidance to algorithm engineers and competition players alike. I will keep reading related papers and share them here.

边栏推荐

- How many of the top ten test tools in 2022 do you master

- [深入研究4G/5G/6G专题-44]: URLLC-15-《3GPP URLLC相关协议、规范、技术原理深度解读》-9-低延时技术-3-非时隙调度Mini slot

- ardupilot软件在环仿真与在线调试

- 图书管理数据库系统设计

- SRS4.0安装步骤

- Module 8 of the construction camp

- Get to know nodejs for the first time (with cases)

- 我的第二次博客——C语言

- Share several coding code receiving verification code platforms, which will be updated in February 2022

- 用于异常检测的Transformer - InTra《Inpainting Transformer for Anomaly Detection》

猜你喜欢

BM16 delete duplicate elements in the ordered linked list -ii

Photoshop web design practical tutorial

CVPR21-无监督异常检测《CutPaste:Self-Supervised Learning for Anomaly Detection and Localization》

ICLR21(classification) - 未来经典“ViT” 《AN IMAGE IS WORTH 16X16 WORDS》(含代码分析)

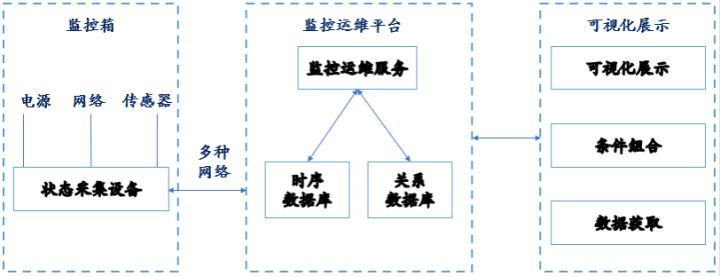

Application of time series database in monitoring operation and maintenance platform

Solve the critical path in FJSP - with Matlab source code

Photoshop responsive web design tutorial

Fundamentals of software testing and development | practical development of several tools in testing and development

Application value of MES production management system to equipment

SRS4.0安装步骤

随机推荐

Cvpr21 unsupervised anomaly detection cutpaste:self supervised learning for anomaly detection and localization

[physical application] Wake induced dynamic simulation of underwater floating wind turbine wind field with matlab code

Mid 2022 summary

How to use Qianqian listening sound effect plug-in (fierce Classic)

Nips18(AD) - 利用几何增广的无监督异常检测《Deep Anomaly Detection Using Geometric Transformations》

BM16 删除有序链表中重复的元素-II

Random finite set RFs self-study notes (6): an example of calculation with the formula of prediction step and update step

机器学习 --- 模型评估、选择与验证

As for the white box test, you have to be skillful in these skills~

剑指 Offer II 109. 开密码锁

6-20 vulnerability exploitation proftpd test

Pandownload revival tutorial

当CNN遇见Transformer《CMT:Convolutional Neural Networks Meet Vision Transformers》

Sudo rosdep init error: cannot download default

[image segmentation] vein segmentation based on directional valley detection with matlab code

Update of objects in ES6

Qt: 一个SIGNAL绑定多个SLOT

Module 8 of the construction camp

SaltStack之salt-ssh

Application of TSDB in civil aircraft industry