
Deduplication and multi-folder output in a single MapReduce job

2022-07-06 18:25:00 [Full Stack Programmer Webmaster]

Hello everyone, we meet again. I'm Full Stack Jun.

Here is a summary of a problem I ran into at a previous job.

Background: the operations team used Scribe to push logs from the Apache servers, and the same records were pushed more than once, so our ETL had to deduplicate them. A second requirement was to write the output into separate folders by business type, which makes it easy to attach each folder as a partition for later use. Each requirement is easy to handle on its own; doing both in a single MapReduce job takes a little trick.

1. Map phase. After the input record has gone through a series of processing steps, it is emitted like this:

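```java
// Pick an output-file bucket for the record. The modulus is the number of
// files for that log type; "& 0x7FFFFFFF" clears the sign bit so the
// result is never negative.
if (ttype.equals("other")) {
    file = (result.toString().hashCode() & 0x7FFFFFFF) % 400;
} else if (ttype.equals("client")) {
    file = (result.toString().hashCode() & 0x7FFFFFFF) % 260;
} else {
    file = (result.toString().hashCode() & 0x7FFFFFFF) % 60;
}

// Left half: "<type>_<bucket>" (the target file); right half: the full record.
tp = new TextPair(ttype + "_" + file, result.toString());
context.write(tp, valuet);  // valuet is an empty value; all data is in the key
```

Here `valuet` is empty; it carries no data.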

I have three types here: `other`, `client`, and `wap`, each representing the platform the log came from, and the output is split into folders by these types. `result` is the entire log record.

`file` becomes the final output file name. Hashing the record, masking off the sign bit, and taking the modulus spreads records evenly across the output files.
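To make the key construction concrete, here is a minimal standalone sketch of the map-side bucket logic, assuming plain `String` inputs instead of the mapper's `Text`/`TextPair` objects. The class `BucketKeyDemo` and its methods are hypothetical helpers for illustration, not the author's code; only the moduli 400, 260, and 60 come from the post. The mask is used instead of `Math.abs` because `Math.abs(Integer.MIN_VALUE)` is still negative.

```java
/**
 * Hypothetical helper mirroring the map-side key logic described above.
 * A real mapper would wrap bucketKey(...) and the record in a TextPair.
 */
public class BucketKeyDemo {

    // Number of output files per log type (the author's values, tuned to their data).
    static int bucketsFor(String ttype) {
        switch (ttype) {
            case "other":  return 400;
            case "client": return 260;
            default:       return 60;   // "wap" and anything else
        }
    }

    // hashCode() can be negative and Java's % keeps the sign, so clear the
    // sign bit first; the result is always in [0, bucketsFor(ttype)).
    static int bucket(String ttype, String record) {
        return (record.hashCode() & 0x7FFFFFFF) % bucketsFor(ttype);
    }

    // Left half of the TextPair: "<type>_<bucket>", e.g. "client_17".
    static String bucketKey(String ttype, String record) {
        return ttype + "_" + bucket(ttype, record);
    }

    public static void main(String[] args) {
        String[][] samples = {
            {"client", "GET /index.html 200"},
            {"client", "GET /index.html 200"},  // duplicate record: same key
            {"wap", "GET /m/home 200"},
        };
        for (String[] s : samples) {
            System.out.println(bucketKey(s[0], s[1]) + "\t" + s[1]);
        }
    }
}
```

Because the bucket depends only on the record's content, duplicate records always land in the same bucket, which is what lets the reducer see them together.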

The map output key is a `TextPair` whose left half is `ttype + "_" + file` and whose right half is `result.toString()`. This design guarantees that identical records produce identical keys while also preserving the log type. The partitioner partitions on the left half of the `TextPair` (the `<type>_<bucket>` string), which ensures that all records that will later be written to the same output file go to the same reducer. One reducer can write several output files, but one output file cannot be fed by several reducers, because each file is written by exactly one reduce task. This gives roughly 400 + 260 + 60 = 720 output files, each holding about the same amount of data. I set the job's number of reducers to 240 (about 3 output files per reducer); that number, like the moduli 400, 260, and 60, was chosen from the volume of my data to avoid data skew across reducers.

2. Deduplication in reduce:

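```java
public void reduce(TextPair key, Iterable<Text> values, Context context)
        throws IOException, InterruptedException {
    // All copies of the same record arrive as one group, so writing the key
    // once (without iterating over values) removes the duplicates.
    // Fields in the record are separated by \001 (Ctrl-A).
    rcfileCols = getRcfileCols(key.getSecond().toString().split("\001"));
    // The left half ("<type>_<bucket>") becomes the output key, and later the file name.
    context.write(key.getFirst(), rcfileCols);
}
```

There is no iteration: records with the same key form one group, so each one is output only once. Note that the comparator the job uses for grouping must not be a `FirstComparator` (which compares only the left half); it must compare the whole `TextPair` (left first, then right). My program writes its output in RCFile format.

3. Multi-folder output: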

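In the driver:

```java
job.setOutputFormatClass(WapApacheMutiOutputFormat.class);
```

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hive.serde2.columnar.BytesRefArrayWritable;
import org.apache.hadoop.io.Text;

public class WapApacheMutiOutputFormat extends RCFileMultipleOutputFormat<Text, BytesRefArrayWritable> {

    // Route each record to "<type>/<key>", e.g. key "client_17" -> "client/client_17".
    protected String generateFileNameForKeyValue(Text key, BytesRefArrayWritable value,
            Configuration conf) {
        String typedir = key.toString().split("_")[0];
        return typedir + "/" + key.toString();
    }
}
```

Here `RCFileMultipleOutputFormat` is my own class, written by extending `FileOutputFormat`; the main work is implementing its `RecordWriter`.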
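A common way to build a multiple-output `RecordWriter` is to keep one underlying writer per generated file name and open each file lazily on first use. The following is a minimal sketch of that idea in plain Java, assuming text lines instead of RCFile and a local directory instead of HDFS; `MultiFileWriterDemo` and its methods are hypothetical, not the author's `RCFileMultipleOutputFormat` or a Hadoop API. It reuses the same `<type>/<key>` naming rule as `generateFileNameForKeyValue` above.

```java
import java.io.BufferedWriter;
import java.io.Closeable;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical sketch: one reducer receives keys such as "client_17" and
 * routes each record to <baseDir>/<type>/<key>, caching one writer per file.
 */
public class MultiFileWriterDemo implements Closeable {
    private final Path baseDir;
    private final Map<String, BufferedWriter> writers = new HashMap<>();

    public MultiFileWriterDemo(Path baseDir) {
        this.baseDir = baseDir;
    }

    // Same rule as generateFileNameForKeyValue: "client_17" -> "client/client_17".
    static String fileNameForKey(String key) {
        String typedir = key.split("_")[0];
        return typedir + "/" + key;
    }

    public void write(String key, String record) throws IOException {
        String relative = fileNameForKey(key);
        BufferedWriter w = writers.get(relative);
        if (w == null) {
            // First record for this file: create the type folder and open the file once.
            Path file = baseDir.resolve(relative);
            Files.createDirectories(file.getParent());
            w = Files.newBufferedWriter(file, StandardCharsets.UTF_8);
            writers.put(relative, w);
        }
        w.write(record);
        w.newLine();
    }

    @Override
    public void close() throws IOException {
        // Flush and close every file this "reducer" opened.
        for (BufferedWriter w : writers.values()) {
            w.close();
        }
        writers.clear();
    }

    public static void main(String[] args) throws IOException {
        Path out = Files.createTempDirectory("multi-out");
        try (MultiFileWriterDemo writer = new MultiFileWriterDemo(out)) {
            writer.write("client_17", "record A");
            writer.write("client_17", "record B");
            writer.write("wap_3", "record C");
        }
        System.out.println(Files.readAllLines(out.resolve("client/client_17")));
    }
}
```

Caching the writers is what allows a single reducer to write several output files; it also explains why a file cannot be shared between reducers, since each writer lives inside one task.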

The final output is a set of deduplicated data files, split into one folder per type.

The key to understanding this approach is the design of the partitioner and the key, together with how the reduce phase sorts and groups records.
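The interplay between partitioning and grouping can be checked with a small in-memory simulation of the shuffle. This is a sketch under stated assumptions, not Hadoop code: `ShuffleDedupDemo`, `Pair`, `partition`, and `run` are hypothetical names. Partitioning uses only the left half (so one output file maps to one reducer), while a `TreeSet` ordered by the full comparator stands in for sort-and-group (so each distinct record becomes exactly one reduce call).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeSet;

public class ShuffleDedupDemo {

    // Stand-in for TextPair: left = "<type>_<bucket>", right = full record.
    static final class Pair implements Comparable<Pair> {
        final String left;
        final String right;

        Pair(String left, String right) {
            this.left = left;
            this.right = right;
        }

        // Full comparison: left first, then right (not a "first-only" comparator).
        @Override
        public int compareTo(Pair o) {
            int c = left.compareTo(o.left);
            return c != 0 ? c : right.compareTo(o.right);
        }

        @Override
        public String toString() {
            return "(" + left + ", " + right + ")";
        }
    }

    // Partition on the left half only, so every record for one file
    // reaches the same reducer.
    static int partition(Pair key, int numReducers) {
        return (key.left.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    // Returns, per reducer, the keys it would write (one per reduce call).
    static List<List<Pair>> run(List<Pair> mapOutput, int numReducers) {
        List<TreeSet<Pair>> inboxes = new ArrayList<>();
        for (int i = 0; i < numReducers; i++) {
            inboxes.add(new TreeSet<>());
        }
        // Sorting and grouping by the full pair collapses duplicates.
        for (Pair p : mapOutput) {
            inboxes.get(partition(p, numReducers)).add(p);
        }
        List<List<Pair>> emitted = new ArrayList<>();
        for (TreeSet<Pair> inbox : inboxes) {
            emitted.add(new ArrayList<>(inbox));
        }
        return emitted;
    }

    public static void main(String[] args) {
        List<Pair> mapOutput = Arrays.asList(
            new Pair("client_17", "record A"),
            new Pair("client_17", "record A"),   // duplicate from the logs
            new Pair("client_17", "record B"),   // same file, different record
            new Pair("wap_3", "record C"));
        List<List<Pair>> out = run(mapOutput, 2);
        for (int r = 0; r < out.size(); r++) {
            System.out.println("reducer " + r + ": " + out.get(r));
        }
    }
}
```

If the grouping compared only the left half, "record A" and "record B" would fall into a single reduce call, and because the reducer writes the key once without iterating over values, one of them would be lost. That is why the full-pair comparator is required.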

Copyright notice: this is an original blog post and may not be reproduced without the author's consent.

Publisher: Full Stack Programmer Webmaster. Please credit the source when reprinting: https://javaforall.cn/117394.html Original link: https://javaforall.cn

边栏推荐

- Virtual machine VirtualBox and vagrant installation

- 2022暑期项目实训(二)

- Dichotomy (integer dichotomy, real dichotomy)

- Blue Bridge Cup real question: one question with clear code, master three codes

- STM32+ENC28J60+UIP协议栈实现WEB服务器示例

- 2019 Alibaba cluster dataset Usage Summary

- 模板于泛型编程之declval

- 测试1234

- 微信为什么使用 SQLite 保存聊天记录?

- 第三季百度网盘AI大赛盛夏来袭,寻找热爱AI的你!

猜你喜欢

小程序在产业互联网中的作用

Recursive way

![Jerry is the custom background specified by the currently used dial enable [chapter]](/img/32/6c22033bda8ff1b53993bacef254cd.jpg)

Jerry is the custom background specified by the currently used dial enable [chapter]

SAP Fiori 应用索引大全工具和 SAP Fiori Tools 的使用介绍

Declval (example of return value of guidance function)

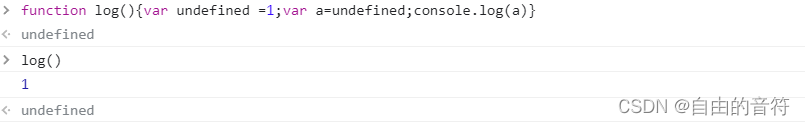

Interesting - questions about undefined

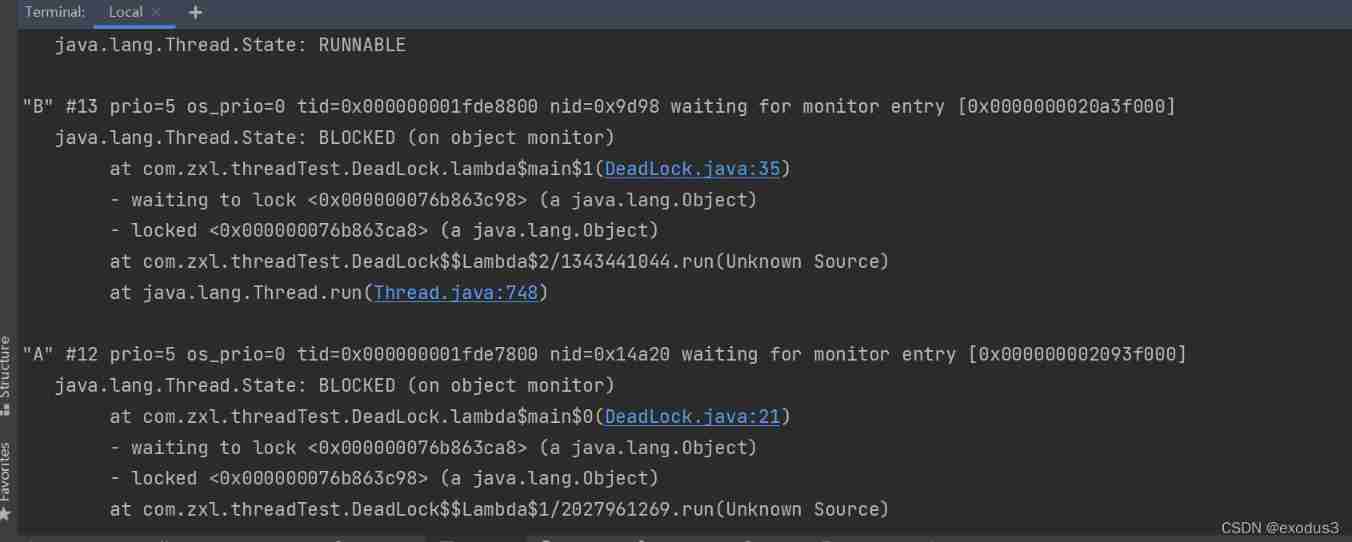

Shangsilicon Valley JUC high concurrency programming learning notes (3) multi thread lock

Splay

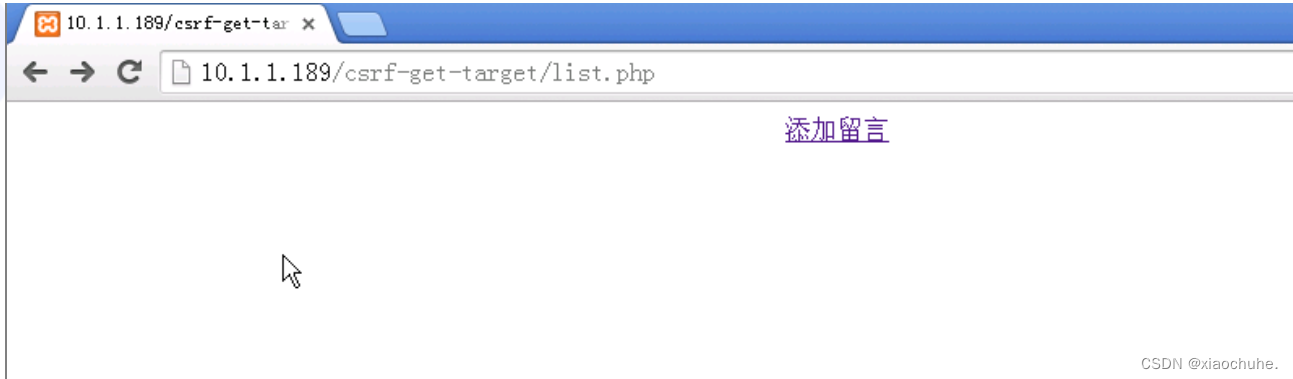

CSRF vulnerability analysis

I want to say more about this communication failure

随机推荐

传输层 拥塞控制-慢开始和拥塞避免 快重传 快恢复

UDP protocol: simple because of good nature, it is inevitable to encounter "city can play"

2019阿里集群数据集使用总结

287. Find duplicates

Four processes of program operation

Reproduce ThinkPHP 2 X Arbitrary Code Execution Vulnerability

Maixll dock camera usage

std::true_ Type and std:: false_ type

Markdown syntax for document editing (typera)

2022/02/12

重磅硬核 | 一文聊透对象在 JVM 中的内存布局,以及内存对齐和压缩指针的原理及应用

Implementation of queue

测试1234

队列的实现

文档编辑之markdown语法(typora)

FMT open source self driving instrument | FMT middleware: a high real-time distributed log module Mlog

On time and parameter selection of asemi rectifier bridge db207

2022暑期项目实训(三)

78 year old professor Huake has been chasing dreams for 40 years, and the domestic database reaches dreams to sprint for IPO

虚拟机VirtualBox和Vagrant安装