- I haven't written anything for a long time (sorry, I've been slacking off). Recently I've been looking at rents in Beijing (so expensive).

- A bit of news: I've had some free time lately, so I combined my years of personal stock-trading strategy, my basic knowledge of AI time-series algorithms, and the JavaScript I've been learning on the fly to build a simple auxiliary system as a WeChat mini program. I'll try it out first, and if it works well I'll write an article introducing it.

If you're interested, you can contact brother Liandan (WX: cyx645016617).

1 Overview

FPN is short for Feature Pyramid Network.

In object detection, as in YOLOv1, a convolutional network extracts features from the image. After several pooling layers or stride-2 convolution layers it outputs a small feature map, and object detection is then performed on that feature map.

In other words, the final detection result depends entirely on this one feature map. This approach is called single-stage object detection (here "stage" means a single backbone stage, i.e. a single feature-map scale, not the one-stage vs. two-stage distinction).

As you can imagine, this approach struggles to detect objects of very different sizes. That is why multi-stage detection algorithms appeared, and in practice they use a feature pyramid (FPN).

Put simply: the image still goes through a convolutional network. Originally only the single feature map produced after all the pooling layers was output; now a feature map is output after each pooling layer, which yields several feature maps at different scales.

These multi-scale feature maps are then fed into the feature pyramid network (FPN), and its outputs are used for object detection.
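To make "one feature map per pooling layer" concrete, here is a minimal NumPy sketch. It assumes channels-first `(C, H, W)` arrays, even spatial sizes, and plain 2x2 max pooling; the function names are hypothetical, and the convolution blocks a real backbone places between pooling steps are omitted so that only the change in scale is visible.

```python
import numpy as np

def max_pool2x2(x):
    """2x2 max pooling with stride 2 on a (C, H, W) array; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def extract_pyramid(image, num_levels):
    """Return [C1, ..., C_num_levels]: keep the output of every pooling step
    instead of only the last one, so each level is half the size of the previous."""
    maps = []
    x = image
    for _ in range(num_levels):
        x = max_pool2x2(x)
        maps.append(x)
    return maps

image = np.random.rand(3, 256, 256)
pyramid = extract_pyramid(image, num_levels=5)
print([m.shape for m in pyramid])
# [(3, 128, 128), (3, 64, 64), (3, 32, 32), (3, 16, 16), (3, 8, 8)]
```

A single-stage detector would keep only `pyramid[-1]`; the multi-stage idea is simply to keep the whole list.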

(If this isn't clear yet, just keep reading~)

2 FPN structure overview

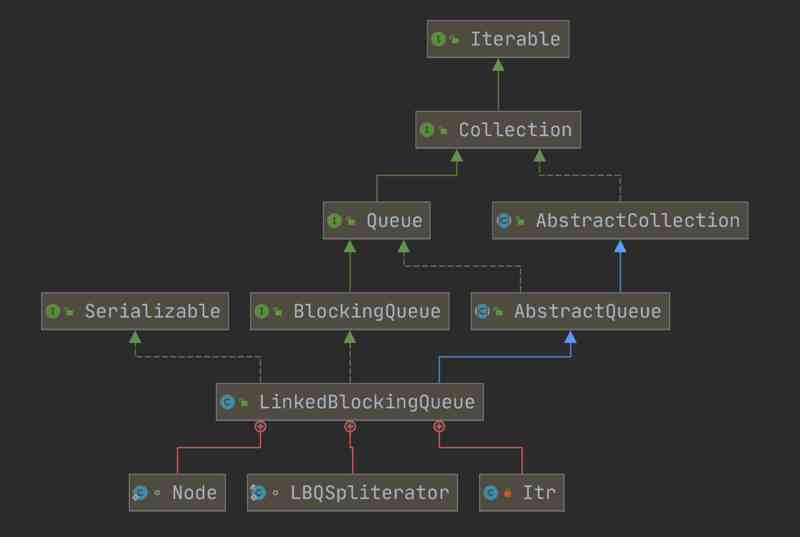

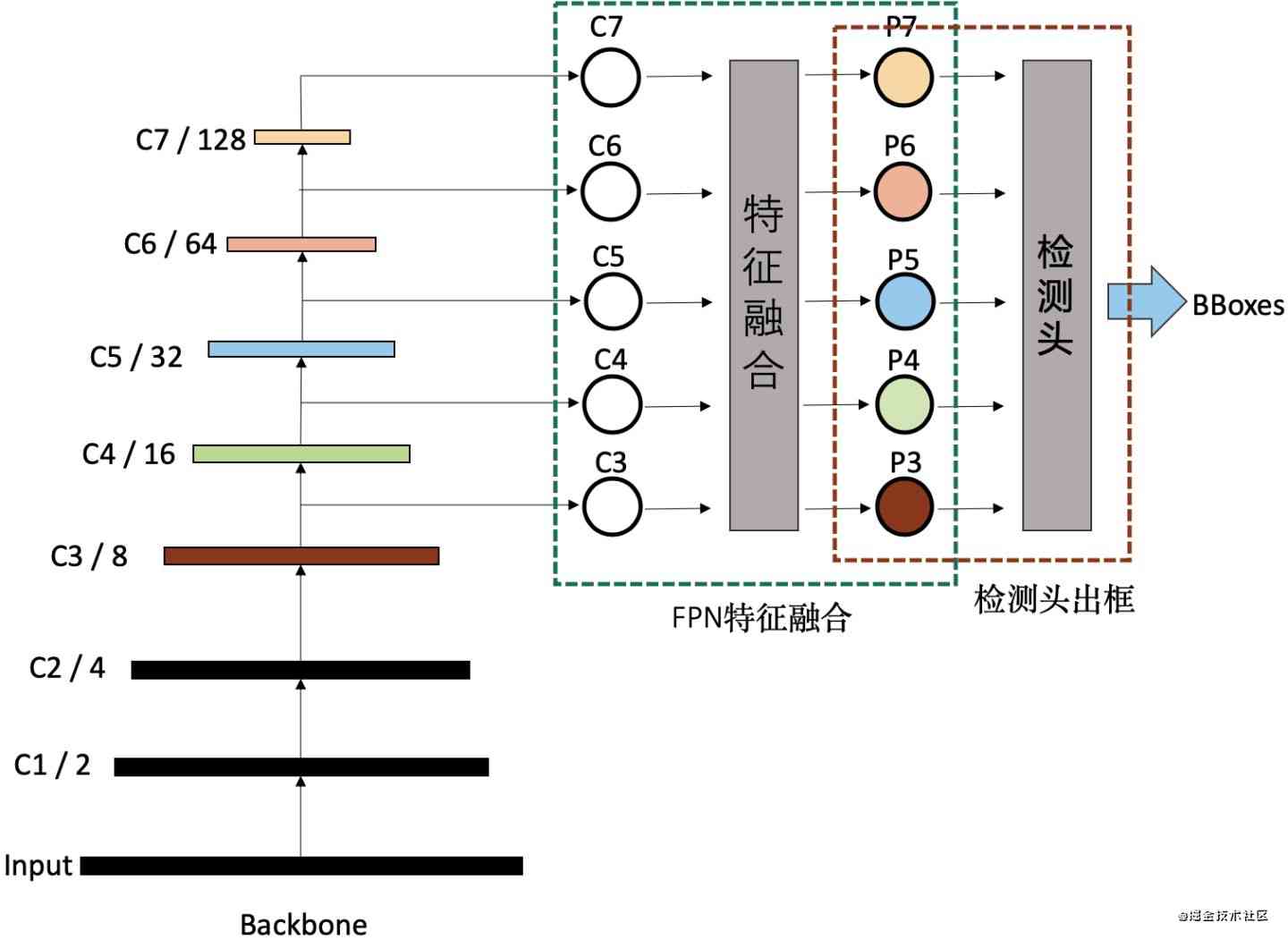

As you can see from the diagrams:

- C1, C2, ... on the left are feature maps at different scales. After one pooling layer or stride-2 convolution layer, the input image is halved in size, giving the C1 feature map; after another pooling layer it becomes the C2 feature map.

- The five feature maps C3, C4, C5, C6, C7, each at a different scale, enter the FPN for feature fusion, and a detection head then predicts candidate boxes.

- Here is my personal understanding (please correct me if I'm wrong). It is only meant to distinguish a multi-stage detection algorithm from a feature pyramid network.

- Multi-stage detection algorithm: as the figure above shows, the five feature maps P3, P4, P5, P6, P7 at different scales go into a detection head that predicts candidate boxes. The detection head is itself a detection network, but its input is several feature maps at different scales and its output is candidate boxes, which is what makes this a multi-stage detection algorithm.

- Feature pyramid network: this fuses feature maps of different scales to strengthen their representational power. It does not predict candidate boxes and should be regarded as part of feature extraction. The FPN's input is several feature maps at different scales, and its output is also several feature maps at different scales, with the same spatial sizes as the input maps.

So a multi-stage detection algorithm does not actually need the FPN structure: the C3, C4, C5, C6, C7 maps output by the convolutional network can be fed directly into the detection head, which outputs candidate boxes.
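Since each level halves the resolution, level Ck has stride 2^k relative to the input. A quick arithmetic sketch of the sizes of C1 to C7, assuming an exactly stride-2 step per level and rounding down when the size is not divisible (real backbones may round differently depending on padding):

```python
def level_sizes(input_size, levels=range(1, 8)):
    """Spatial size of C_k when every level halves the previous resolution (stride 2**k)."""
    return {k: input_size // 2 ** k for k in levels}

print(level_sizes(640))
# {1: 320, 2: 160, 3: 80, 4: 40, 5: 20, 6: 10, 7: 5}
```

So for a 640x640 input, C3 is 80x80 while C7 is only 5x5, which is why the deepest maps suit large objects and the shallow ones suit small objects.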

3 The simplest FPN structure

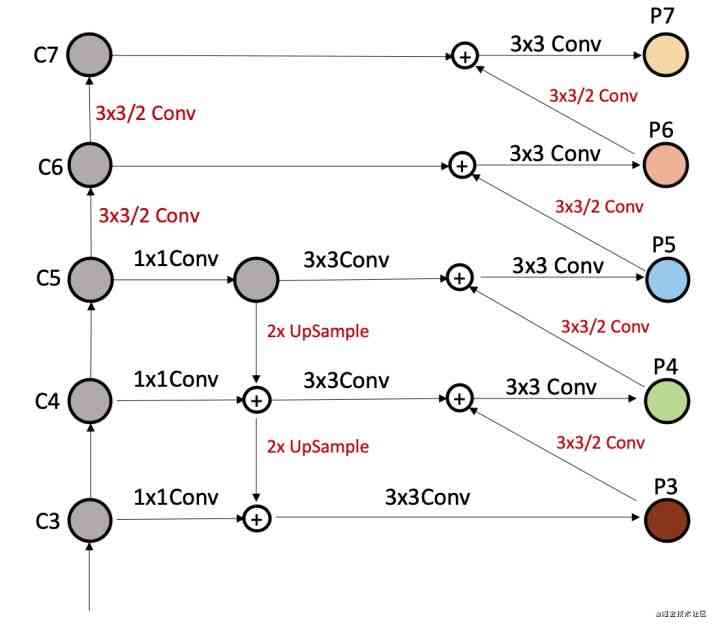

Top-down one-way fusion FPN is still the mainstream fusion scheme in current object detection models, as seen in Faster RCNN, Mask RCNN, YOLOv3, RetinaNet, Cascade RCNN and others. The top-down one-way FPN structure is shown in the figure above.

The essence of this structure: the C5 feature map is upsampled and concatenated with the C4 feature map; the concatenated map then passes through a convolution layer and a BN layer to produce the P4 feature map. P4 has the same spatial size as C4.
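A minimal NumPy sketch of this single fusion step, following the description above (upsample, concatenate, conv, BN). The assumptions are mine: nearest-neighbour 2x upsampling, a 1x1 convolution written as a channel matrix multiply, and a per-channel normalisation standing in for BN (single image, no learned scale or shift). All names are hypothetical.

```python
import numpy as np

def upsample_nearest2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) array."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def conv1x1(x, weight):
    """1x1 convolution: weight is (C_out, C_in), x is (C_in, H, W)."""
    return np.einsum("oc,chw->ohw", weight, x)

def batch_norm(x, eps=1e-5):
    """Per-channel normalisation over H and W (stand-in for BN, no learned affine)."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def fuse_top_down(c_deep, c_shallow, weight):
    """Upsample the deeper map, concatenate it with the shallower map, then conv + BN."""
    up = upsample_nearest2x(c_deep)                    # (C_deep, H, W)
    stacked = np.concatenate([up, c_shallow], axis=0)  # (C_deep + C_shallow, H, W)
    return batch_norm(conv1x1(stacked, weight))

rng = np.random.default_rng(0)
c4 = rng.standard_normal((64, 32, 32))
c5 = rng.standard_normal((128, 16, 16))
w = rng.standard_normal((64, 128 + 64)) * 0.05
p4 = fuse_top_down(c5, c4, w)
print(p4.shape)  # (64, 32, 32)
```

Here the output channel count was chosen to equal C4's, so P4 happens to match C4 in full shape; in general only the spatial size is guaranteed to match.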

Through this structure, P4 can learn the deeper semantics of C5, and P3 in turn the deeper semantics of C4. My own interpretation of why this works: deeper features carry richer semantics and therefore help prediction accuracy, but deep feature maps are small. Upsampling them and fusing them with shallow feature maps strengthens the feature representation of the shallow maps.
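Repeating that step from the deepest level downwards gives the whole top-down pass. The sketch below uses the variant from the original FPN paper instead of concatenation: a 1x1 lateral convolution projects every level to a common channel count D, and the upsampled deeper map is added element-wise. The 3x3 smoothing convolution applied to each merged map in that paper is omitted, and all names and shapes are illustrative assumptions.

```python
import numpy as np

def upsample_nearest2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) array."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def top_down_fpn(c_maps, lateral_weights):
    """c_maps: [C3, C4, C5] from shallow to deep, each level half the size of the previous.
    lateral_weights: one (D, C_i) matrix per level (1x1 lateral convolutions).
    Returns [P3, P4, P5], each with D channels and the spatial size of its input."""
    laterals = [np.einsum("oc,chw->ohw", w, c) for w, c in zip(lateral_weights, c_maps)]
    outputs = [laterals[-1]]                 # P5 is just the projected C5
    for lateral in reversed(laterals[:-1]):  # C4, then C3
        # deeper semantics flow down: add the upsampled deeper P map to this level
        outputs.insert(0, lateral + upsample_nearest2x(outputs[0]))
    return outputs

rng = np.random.default_rng(0)
channels, sizes = [32, 64, 128], [64, 32, 16]
c_maps = [rng.standard_normal((c, s, s)) for c, s in zip(channels, sizes)]
weights = [rng.standard_normal((16, c)) * 0.1 for c in channels]
p_maps = top_down_fpn(c_maps, weights)
print([p.shape for p in p_maps])  # [(16, 64, 64), (16, 32, 32), (16, 16, 16)]
```

Concatenation (as described above) or addition both let P3 see information from C5; addition keeps the channel count fixed without an extra projection after the merge.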

4 Multi-stage structure without FPN

This is the multi-stage structure without FPN: there is no fusion. Its typical representative is the well-known SSD from 2016, which uses feature maps from different stages to detect objects of different sizes.

As you can see, the feature maps output by the convolutional network are fed directly into the detection head, which outputs candidate boxes.
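A minimal sketch of "feature maps straight into the detection head", with no fusion at all. For brevity the head is a hypothetical 1x1 convolution shared across levels that predicts, for each of A anchors per position, 4 box offsets plus K class scores; sharing it requires every level to have the same channel count (assumed here). SSD itself attaches its own 3x3 convolutional predictor to each level.

```python
import numpy as np

def detection_head(feature, weight, num_classes):
    """1x1-conv head: (C, H, W) -> (H * W * A, 4 + num_classes) rows of box offsets + scores."""
    out = np.einsum("oc,chw->hwo", weight, feature)  # (H, W, A * (4 + K))
    return out.reshape(-1, 4 + num_classes)

rng = np.random.default_rng(0)
num_anchors, num_classes, channels = 3, 20, 64
weight = rng.standard_normal((num_anchors * (4 + num_classes), channels)) * 0.01
levels = [rng.standard_normal((channels, s, s)) for s in (32, 16, 8)]  # e.g. C3, C4, C5, unfused
predictions = np.concatenate([detection_head(f, weight, num_classes) for f in levels])
print(predictions.shape)  # (4032, 24)
```

The row count is 3 * (32*32 + 16*16 + 8*8) = 4032: every level contributes its own candidates, and the large shallow map contributes most of them.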

5 Simple bidirectional fusion

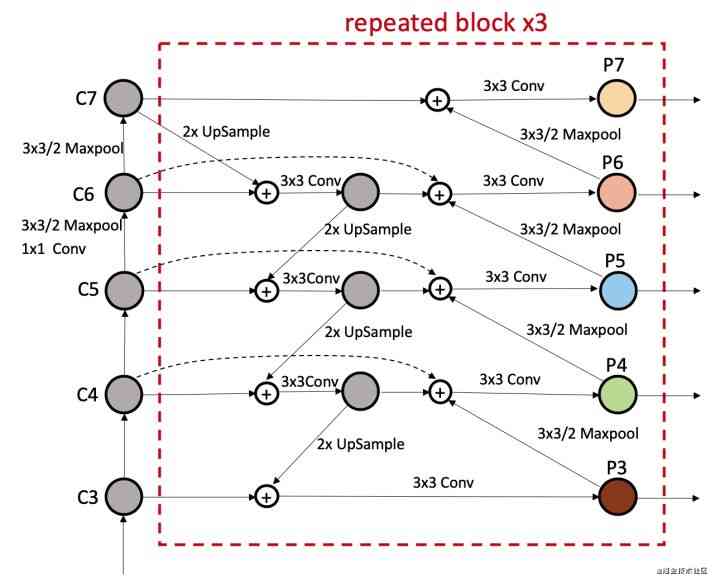

The original FPN is a one-way fusion from deep to shallow; this structure is a two-way fusion, first from deep to shallow and then from shallow to deep. PANet was the first model to propose this second, bottom-up fusion:

- PANet: Path Aggregation Network, a CVPR 2018 paper.

- Paper: https://arxiv.org/abs/1803.01534

- Title: Path Aggregation Network for Instance Segmentation

As the diagram shows, there is first the same upsampling path as in FPN, followed by a path from shallow to deep that downsamples with stride-2 convolutions. The shallow feature map P3 is downsampled by a stride-2 convolution layer to the same size as C4; the two are concatenated and passed through a 3x3 convolution layer to generate the P4 feature map.
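A NumPy sketch of one bottom-up step as described above: a stride-2 3x3 convolution shrinks the shallow map, it is concatenated with the deeper map, and a 3x3 convolution produces the new map. The naive `conv3x3` helper (padding 1, no bias) and all shapes are illustrative assumptions; note that the PANet paper itself merges the two maps by element-wise addition rather than concatenation.

```python
import numpy as np

def conv3x3(x, weight, stride=1):
    """Naive 3x3 convolution with zero padding 1. x: (C_in, H, W); weight: (C_out, C_in, 3, 3)."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    h_out, w_out = (h - 1) // stride + 1, (w - 1) // stride + 1
    out = np.zeros((weight.shape[0], h_out, w_out))
    for i in range(h_out):
        for j in range(w_out):
            patch = padded[:, i * stride:i * stride + 3, j * stride:j * stride + 3]  # (C_in, 3, 3)
            out[:, i, j] = np.einsum("ocyx,cyx->o", weight, patch)
    return out

def bottom_up_step(p_shallow, c_deep, down_weight, fuse_weight):
    """Stride-2 conv on the shallow map, concatenate with the deeper map, then a 3x3 conv."""
    down = conv3x3(p_shallow, down_weight, stride=2)  # now the same spatial size as c_deep
    stacked = np.concatenate([down, c_deep], axis=0)
    return conv3x3(stacked, fuse_weight, stride=1)

rng = np.random.default_rng(0)
p3 = rng.standard_normal((16, 32, 32))
c4 = rng.standard_normal((16, 16, 16))
down_w = rng.standard_normal((16, 16, 3, 3)) * 0.1
fuse_w = rng.standard_normal((16, 32, 3, 3)) * 0.1
p4 = bottom_up_step(p3, c4, down_w, fuse_w)
print(p4.shape)  # (16, 16, 16)
```

Using a learned stride-2 convolution instead of pooling lets the network decide what shallow detail to carry upwards.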

There are also many more complex bidirectional fusion schemes, which I won't cover in detail here.

6 BiFPN

The PANet above is the simplest bidirectional FPN, but the structure actually named BiFPN comes from another paper.

- BiFPN: proposed by a Google team in 2019.

- Paper: https://arxiv.org/abs/1911.09070

- Title: EfficientDet: Scalable and Efficient Object Detection

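The structure is not hard to understand: it makes a few small improvements on top of PANet, namely removing nodes that have only one input, adding an extra edge from the input to the output node at the same level, repeating the bidirectional block several times, and giving each input a learnable weight during fusion. The paper's main contribution, however, is EfficientDet itself, so BiFPN is only a minor one.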
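The learnable input weights are the least obvious of these changes. The EfficientDet paper calls its version "fast normalized fusion": O = sum_i(w_i * I_i) / (eps + sum_j w_j), where a ReLU keeps each w_i non-negative and eps = 0.0001. A minimal NumPy sketch, assuming the inputs were already resized to the same shape; in a real network `raw_weights` would be trainable parameters.

```python
import numpy as np

def fast_normalized_fusion(inputs, raw_weights, eps=1e-4):
    """Weighted fusion of same-shaped feature maps: sum_i w_i * I_i / (eps + sum_j w_j)."""
    w = np.maximum(np.asarray(raw_weights, dtype=float), 0.0)  # ReLU: weights stay >= 0
    fused = sum(wi * x for wi, x in zip(w, inputs))
    return fused / (eps + w.sum())

a = np.full((4, 8, 8), 1.0)
b = np.full((4, 8, 8), 3.0)
equal = fast_normalized_fusion([a, b], [1.0, 1.0])   # close to 2.0, the plain mean
skewed = fast_normalized_fusion([a, b], [1.0, -5.0]) # negative weight clipped, close to a
```

Compared with a softmax over the weights, this normalisation avoids exponentials while still keeping the result a (nearly) convex combination of the inputs.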

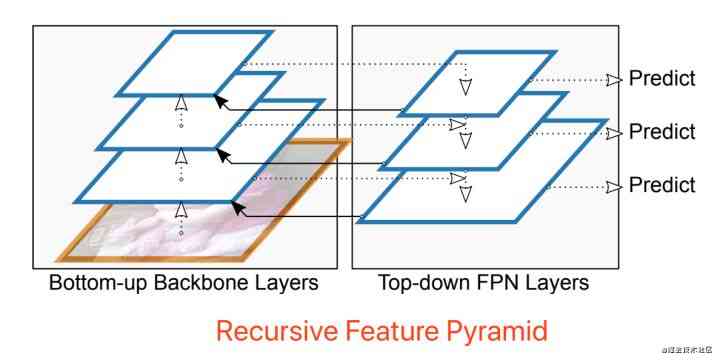

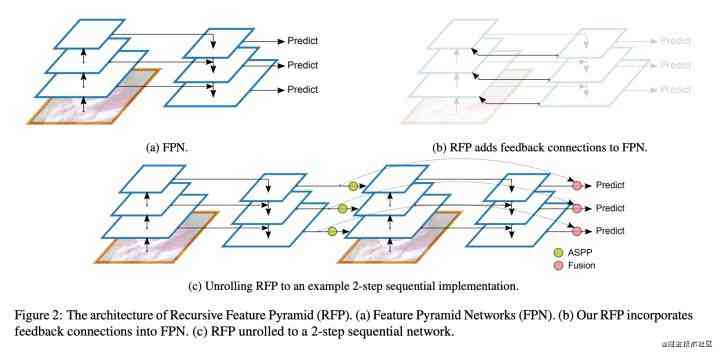

7 Recursive-FPN: recursive feature pyramid network

- Recursive-FPN: the results are impressive. DetectoRS, which uses a recursive FPN, was state of the art for object detection when it was published (2020 paper).

- Paper: https://arxiv.org/abs/2006.02334

- Title: DetectoRS: Detecting Objects with Recursive Feature Pyramid and Switchable Atrous Convolution

I have also used the RFP structure in my own object detection tasks. Although it roughly doubles the required compute, the improvement is clear: about 3 to 5 points. Let's look at the structure:

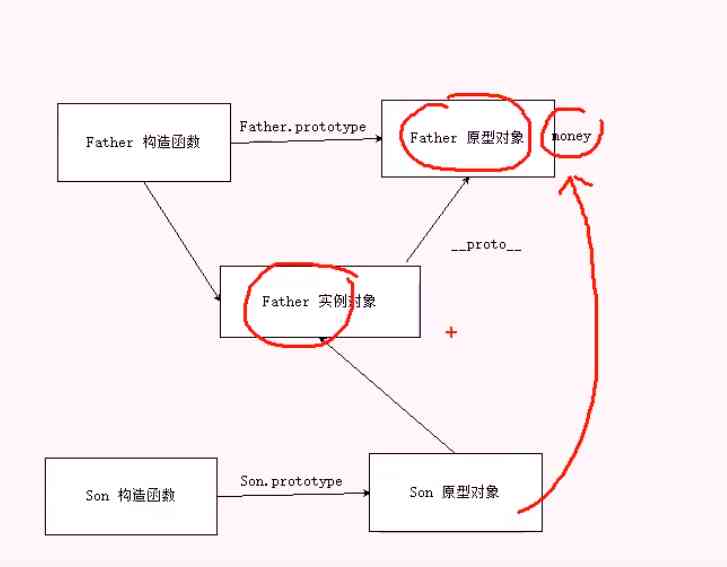

As you can see, the dotted and solid lines form a loop between the feature maps and the FPN. This is a 2-step RFP structure, meaning the FPN structure is looped through twice (with 1 step, it is just an ordinary FPN).

In other words, the P3, P4, P5 maps output by the previous FPN pass are concatenated into the corresponding stages of the convolutional network's feature extraction. After concatenation, a 3x3 convolution layer and a BN layer restore the number of channels to the required value.
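A toy NumPy sketch of the unrolled loop, following the description above rather than the exact DetectoRS design (the paper routes the feedback through an ASPP-based connecting module). Assumptions, all hypothetical: each backbone stage is a 2x2 max pool plus a 1x1 convolution, the feedback merge uses a 1x1 convolution instead of 3x3 conv + BN, and the FPN is the additive top-down pass. With `steps=1` it reduces to an ordinary backbone + FPN.

```python
import numpy as np

def max_pool2x2(x):
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def conv1x1(x, weight):
    return np.einsum("oc,chw->ohw", weight, x)

def upsample_nearest2x(x):
    return x.repeat(2, axis=1).repeat(2, axis=2)

def backbone(image, stage_weights, feedback_weights, feedback=None):
    """Each stage: pool + 1x1 conv. With feedback, the stage output is concatenated with the
    matching P map from the previous pass and projected back to its own channel count."""
    x, c_maps = image, []
    for i, w in enumerate(stage_weights):
        x = conv1x1(max_pool2x2(x), w)
        if feedback is not None:
            x = conv1x1(np.concatenate([x, feedback[i]], axis=0), feedback_weights[i])
        c_maps.append(x)
    return c_maps

def fpn(c_maps, lateral_weights):
    laterals = [conv1x1(c, w) for c, w in zip(c_maps, lateral_weights)]
    p_maps = [laterals[-1]]
    for lateral in reversed(laterals[:-1]):
        p_maps.insert(0, lateral + upsample_nearest2x(p_maps[0]))
    return p_maps

def recursive_fpn(image, params, steps=2):
    """Unroll the backbone -> FPN -> backbone loop `steps` times."""
    p_maps = None
    for _ in range(steps):
        c_maps = backbone(image, params["stage"], params["feedback"], feedback=p_maps)
        p_maps = fpn(c_maps, params["lateral"])
    return p_maps

rng = np.random.default_rng(0)
chans, d = [3, 8, 16, 32], 8
params = {
    "stage": [rng.standard_normal((chans[i + 1], chans[i])) * 0.3 for i in range(3)],
    "lateral": [rng.standard_normal((d, c)) * 0.3 for c in chans[1:]],
    "feedback": [rng.standard_normal((c, c + d)) * 0.3 for c in chans[1:]],
}
image = rng.standard_normal((3, 64, 64))
one_step = recursive_fpn(image, params, steps=1)
two_step = recursive_fpn(image, params, steps=2)
print([p.shape for p in two_step])  # [(8, 32, 32), (8, 16, 16), (8, 8, 8)]
```

The second pass reruns the whole backbone, which is consistent with the roughly doubled compute mentioned above.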