
The worse the AI performs, the higher the prize? An NYU PhD offers a bounty for tasks that make large models perform poorly

2022-07-07 04:31:00 【qubit 】

By Yi Ge, from Aofeisi. QbitAI | Official account: QbitAI

The bigger the model and the worse it performs, the larger the prize?

With a total prize pool of $250,000 (about 1.67 million RMB)?

Something this "outlandish" really did happen: a competition called the Inverse Scaling Prize has sparked heated discussion on Twitter.

The competition is jointly organized by seven researchers from New York University.

Organizer Ethan Perez says the main goal of the competition is to find out which tasks make large models show inverse scaling, and thereby to uncover problems in how large models are currently pre-trained.

The competition is now accepting submissions, and the first round of submissions closes on August 27, 2022.

Competition motivation

People seem to take it for granted that as language models get bigger, they work better and better.

However, large language models are not without flaws: they show racial, gender, and religious bias, for example, and they produce vague misinformation.

Scaling laws show that as the number of parameters, the amount of compute used, and the dataset size increase, language models get better (in terms of test loss and downstream performance).

The organizers hypothesize that some tasks follow the opposite trend: as the language model's overall test loss improves, task performance gets monotonically and predictably worse. They call this phenomenon inverse scaling, in contrast to the usual scaling laws.
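The definition above can be made concrete with a small check. This is only a sketch under stated assumptions: the function name `is_inverse_scaling`, the strict-monotonicity criterion, and all numbers are hypothetical illustrations, not the organizers' actual evaluation code or data.

```python
from typing import Sequence


def is_inverse_scaling(test_losses: Sequence[float], task_scores: Sequence[float]) -> bool:
    """Return True if the task score drops every time the test loss improves.

    Assumption: models are listed from smallest to largest, so a lower
    test loss later in the list means a better language model.
    """
    if len(test_losses) != len(task_scores) or len(test_losses) < 2:
        raise ValueError("need two equal-length series covering at least two models")
    pairs = list(zip(test_losses, task_scores))
    for (loss_a, score_a), (loss_b, score_b) in zip(pairs, pairs[1:]):
        loss_improved = loss_b < loss_a      # the larger model is a better LM
        score_worsened = score_b < score_a   # but it does worse on the task
        if not (loss_improved and score_worsened):
            return False
    return True


# Hypothetical numbers, invented purely for illustration.
losses = [3.2, 2.9, 2.6, 2.4]
inverse_scores = [0.71, 0.64, 0.55, 0.49]   # accuracy falls as loss improves
normal_scores = [0.52, 0.60, 0.68, 0.75]    # accuracy rises as loss improves

print(is_inverse_scaling(losses, inverse_scores))  # True
print(is_inverse_scaling(losses, normal_scores))   # False
```

A real evaluation would likely tolerate noise rather than demand a strict drop at every step; the strict version is used here only because it matches the word "monotonically" most directly.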

The competition aims to find more inverse scaling tasks and to analyze which types of tasks are prone to inverse scaling, especially tasks with high safety requirements.

At the same time, inverse scaling tasks will help in studying potential problems in the current language model pre-training and scaling paradigm.

As language models are increasingly deployed in real-world applications, the practical significance of this research is growing too.

Collecting inverse scaling tasks will help reduce the risk of adverse consequences from large language models and prevent harm to real users.

Debate among netizens

Some netizens, however, took a different view of the competition:

I think this is misleading, because it assumes that the model is static and stops after pre-training. This is more a problem of pre-training on standard corpora with more parameters than a problem of model size.

A software engineer named James agreed with this view:

Yes, this whole thing is a hoax. Anything a small model can learn, a large model can learn too. A small model has higher bias, so on "hot dog or not hot dog" it may score 100% correct, whereas once a large model realizes you can make a cake that looks like a hot dog, accuracy drops to 98%.

James went even further and offered a "conspiracy theory":

Maybe the whole thing is a hoax: get people to work hard and surface training data for the difficult tasks, large models absorb that experience, and large models end up better. So they don't even need to pay out the prizes and will still get a better large model.

In response, organizer Ethan Perez wrote in the comments:

To clarify, the focus of this prize is on finding never-seen or rarely-seen categories of tasks where language model pre-training leads to inverse scaling. This is just one way to use large models. There are many other settings that could lead to inverse scaling, and they are not covered by our prize.

Competition rules

Based on the task a contestant submits, the team will build a dataset containing at least 300 examples and test it with GPT-3/OPT.

Submissions will be judged by an anonymous panel.

The judges will review each submission against six criteria: strength of the inverse scaling effect, generality, novelty, reproducibility, coverage, and importance of the task. They will then award first, second, and third prizes.

The prizes are set as follows:

First prize: at most 1 winner, $100,000;

Second prize: at most 5 winners, $20,000 each;

Third prize: at most 10 winners, $5,000 each.
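As a quick arithmetic check, the three tiers above add up to the advertised $250,000 only if every prize slot is filled. The sketch below uses the figures from the list; the names `PRIZE_TIERS` and `max_payout` are hypothetical.

```python
# Prize tiers as listed above: (tier, maximum number of winners, USD per winner).
PRIZE_TIERS = [
    ("first", 1, 100_000),
    ("second", 5, 20_000),
    ("third", 10, 5_000),
]


def max_payout(tiers):
    """Total payout if every available slot in every tier is awarded."""
    return sum(count * amount for _, count, amount in tiers)


print(max_payout(PRIZE_TIERS))  # 250000
```

Because each tier is capped with "at most", $250,000 is an upper bound on the payout rather than a guaranteed amount.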

The competition opened on June 27. The first round of evaluation takes place on August 27, and the second round of evaluation begins on October 27.

Organizer Ethan Perez

Organizer Ethan Perez is a research scientist who has long focused on large language models.

Perez received a PhD in natural language processing from New York University and previously worked at DeepMind, Facebook AI Research, Mila (the Montreal Institute for Learning Algorithms), and Google.

Reference links:
1. https://github.com/inverse-scaling/prize
2. https://twitter.com/EthanJPerez/status/1541454949397041154
3. https://alignmentfund.org/author/ethan-perez/


边栏推荐

- Intel and Xinbu technology jointly build a machine vision development kit to jointly promote the transformation of industrial intelligence

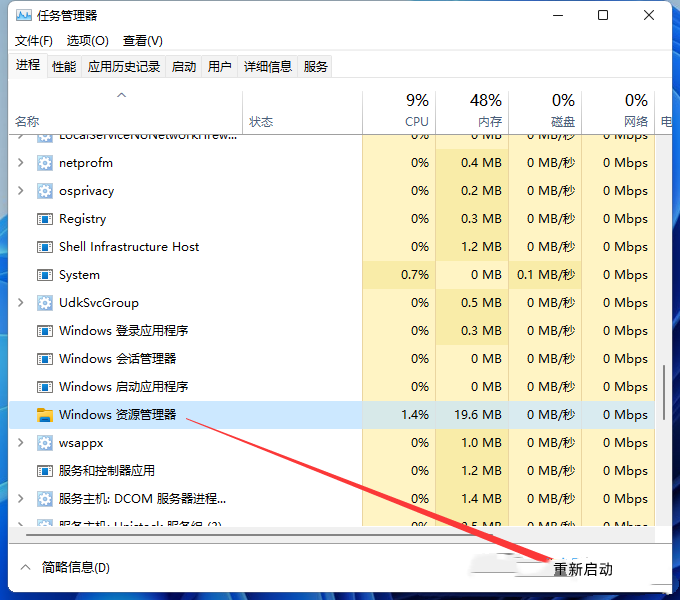

- What if the win11 screenshot key cannot be used? Solution to the failure of win11 screenshot key

- 见到小叶栀子

- 2022中青杯数学建模B题开放三孩背景下的生育政策研究思路

- Implementation of JSTL custom function library

- A series of shortcut keys for jetbrain pychar

- NanopiNEO使用开发过程记录

- 两个div在同一行,两个div不换行「建议收藏」

- 史上最全MongoDB之安全认证

- The first introduction of the most complete mongodb in history

猜你喜欢

C # use Siemens S7 protocol to read and write PLC DB block

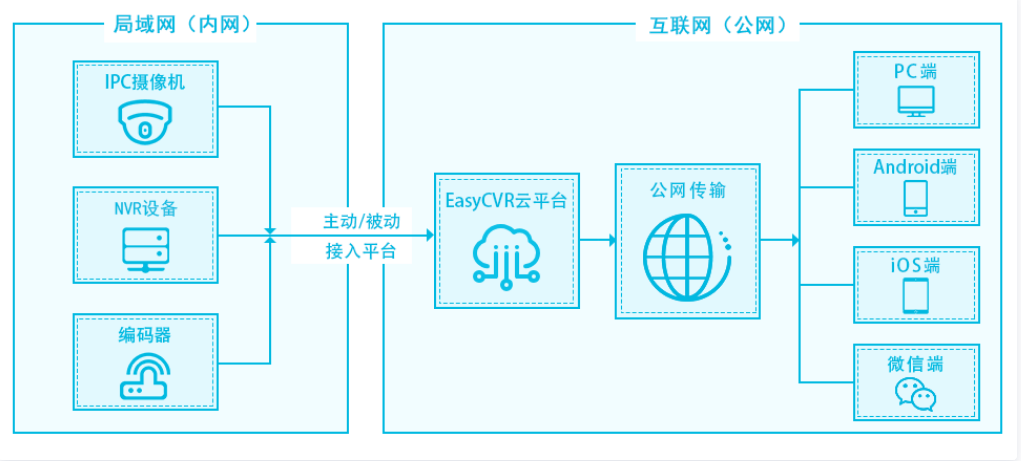

Easycvr cannot be played using webrtc. How to solve it?

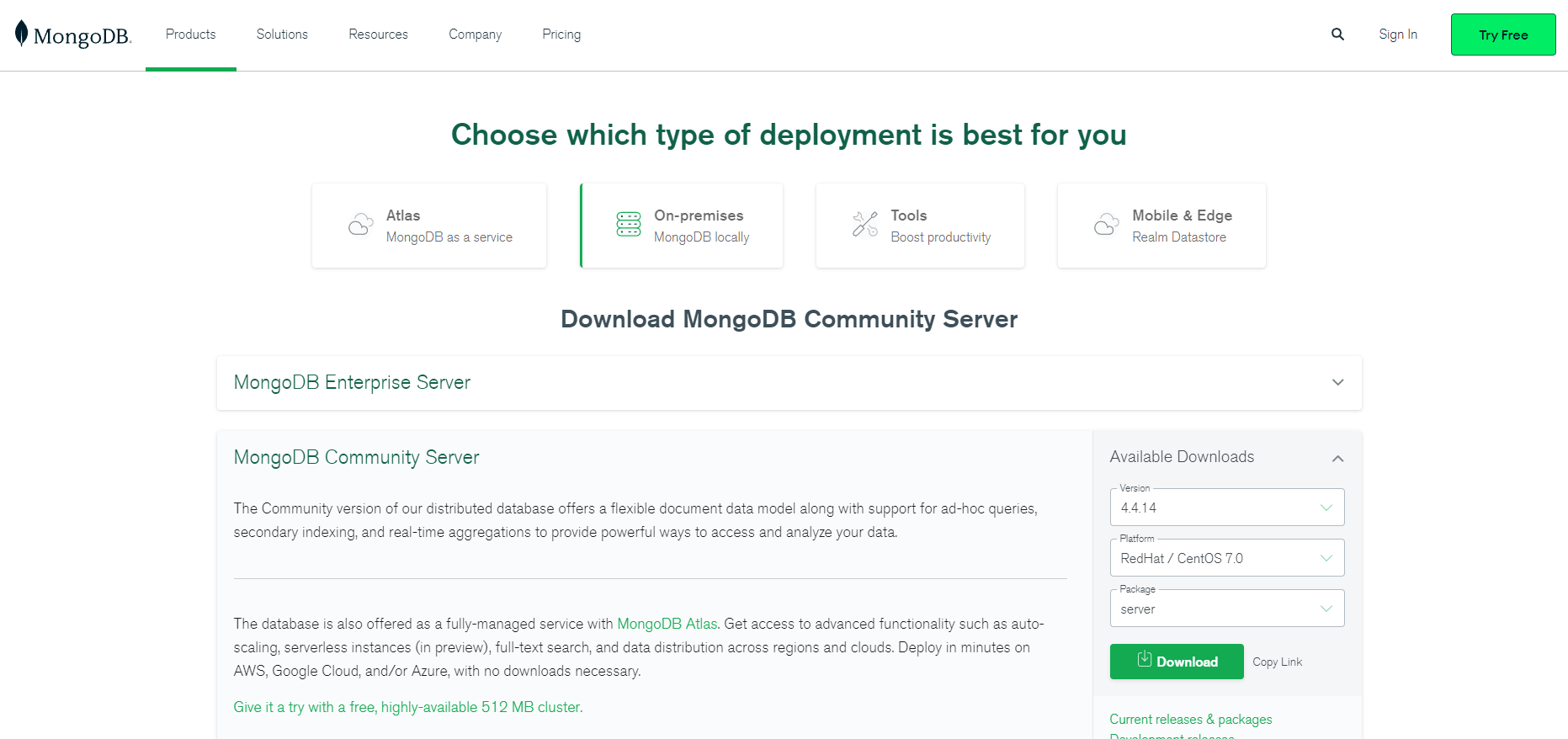

史上最全MongoDB之部署篇

What if win11 pictures cannot be opened? Repair method of win11 unable to open pictures

See Gardenia minor

Digital chemical plant management system based on Virtual Simulation Technology

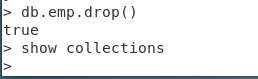

史上最全MongoDB之Mongo Shell使用

Opencv third party Library

【写给初发论文的人】撰写综述性科技论文常见问题

The easycvr platform is connected to the RTMP protocol, and the interface call prompts how to solve the error of obtaining video recording?

随机推荐

史上最全MongoDB之Mongo Shell使用

UltraEdit-32 warm prompt: right association, cancel bak file [easy to understand]

Digital chemical plant management system based on Virtual Simulation Technology

微信能开小号了,拼多多“砍一刀”被判侵权,字节VR设备出货量全球第二,今日更多大新闻在此

AI表现越差,获得奖金越高?纽约大学博士拿出百万重金,悬赏让大模型表现差劲的任务

见到小叶栀子

Win11玩绝地求生(PUBG)崩溃怎么办?Win11玩绝地求生崩溃解决方法

MySQL split method usage

Digital chemical plants realize the coexistence of advantages of high quality, low cost and fast efficiency

Break the memory wall with CPU scheme? Learn from PayPal to expand the capacity of aoteng, and the volume of missed fraud transactions can be reduced to 1/30

Mongo shell, the most complete mongodb in history

广告归因:买量如何做价值衡量?

Intel and Xinbu technology jointly build a machine vision development kit to jointly promote the transformation of industrial intelligence

The most complete deployment of mongodb in history

2022 middle school Youth Cup mathematical modeling question B fertility policy research ideas under the background of open three children

论文上岸攻略 | 如何快速入门学术论文写作

Redis source code learning (30), dictionary learning, dict.h

一度辍学的数学差生,获得今年菲尔兹奖

[written to the person who first published the paper] common problems in writing comprehensive scientific and Technological Papers

NTU notes 6422quiz review (1-3 sections)