
Installing the CUDA environment on Orin

2022-07-05 06:43:00 【Enlaihe】

There are two ways to install the CUDA environment:

1. From the command line

On an Orin that has already been flashed, run the following commands:

```shell
sudo apt update
sudo apt upgrade
sudo apt install nvidia-jetpack -y
```

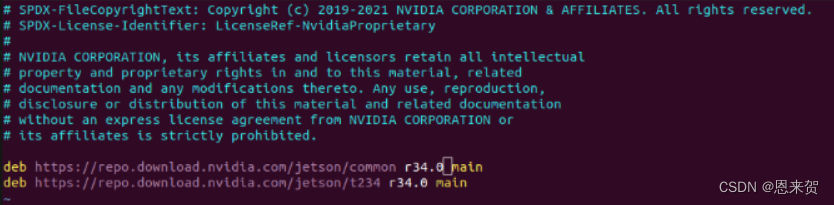

If this reports an error, check the release version in `/etc/apt/sources.list.d/nvidia-l4t-apt-source.list`; the latest is 34.1.

Change it to:

After this change the installation goes through. If you flash the device with `flash.sh` using the latest Linux for Tegra (L4T) release, no error is reported.

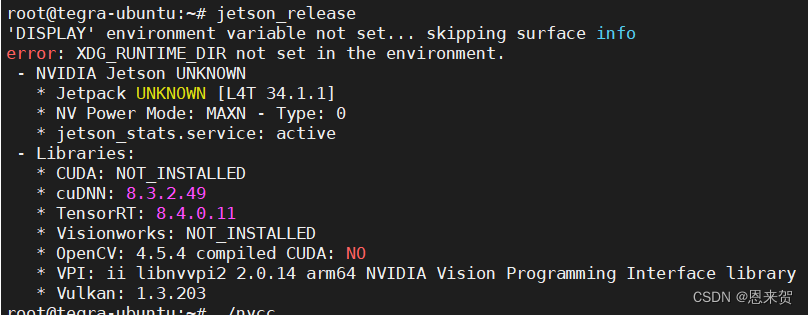
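Checking and editing the release version in that file can also be scripted. The following is a minimal bash sketch. The helper names `l4t_repo_release` and `l4t_set_repo_release` are hypothetical (not part of JetPack), and it assumes every entry has the form `deb <url> r<version> main`. Writing the real file requires root, so run the edit from a root shell (for example after `sudo -i`), then run `sudo apt update` again.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not NVIDIA tools. Assumes list entries look like:
#   deb https://repo.download.nvidia.com/jetson/<component> r<major>.<minor> main
L4T_APT_LIST=/etc/apt/sources.list.d/nvidia-l4t-apt-source.list

# Print each distinct release tag (such as r34.1) found on the "deb" lines.
l4t_repo_release() {
  local file="${1:-$L4T_APT_LIST}"
  awk '$1 == "deb" { for (i = 3; i <= NF; i++) if ($i ~ /^r[0-9]+(\.[0-9]+)*$/) print $i }' "$file" | sort -u
}

# Rewrite the release tag that follows the URL on every "deb" line.
# Usage: l4t_set_repo_release <version> [file]   (needs write access to the file)
l4t_set_repo_release() {
  local new_version="$1" file="${2:-$L4T_APT_LIST}"
  sed -E -i "s/^(deb[[:space:]]+[^[:space:]]+[[:space:]]+)r[0-9]+(\.[0-9]+)*/\1r${new_version}/" "$file"
}
```

Passing a file argument lets you try the edit on a copy of the list before touching the real one.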

a. CUDA: check whether the installation succeeded

```shell
nvcc -V
```

If this reports an error, add nvcc to the environment variables (its directory must be on `PATH`).
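A minimal bash sketch of that fix, meant to be appended to `~/.bashrc` (then open a new terminal or run `source ~/.bashrc`). It assumes the toolkit lives under `/usr/local/cuda`, the usual JetPack location; set `CUDA_HOME` first if yours differs. `prepend_path` is a hypothetical helper that avoids adding the same directory twice when `~/.bashrc` is sourced repeatedly.

```shell
# Assumption: CUDA is installed under /usr/local/cuda unless CUDA_HOME is set.
CUDA_HOME="${CUDA_HOME:-/usr/local/cuda}"

# Prepend a directory to a colon-separated variable (PATH, LD_LIBRARY_PATH)
# only if it is not already present. Uses bash indirect expansion ${!var}.
prepend_path() {
  local var="$1" dir="$2"
  local current="${!var}"
  case ":$current:" in
    *":$dir:"*) ;;                                  # already present: do nothing
    *) export "$var=$dir${current:+:$current}" ;;   # no trailing ":" when empty
  esac
}

prepend_path PATH "$CUDA_HOME/bin"               # where nvcc lives
prepend_path LD_LIBRARY_PATH "$CUDA_HOME/lib64"  # CUDA runtime libraries
```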

Output like the following indicates a correct installation:

```
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2021 NVIDIA Corporation
Built on Thu_Nov_11_23:44:05_PST_2021
Cuda compilation tools, release 11.4, V11.4.166
Build cuda_11.4.r11.4/compiler.30645359_0
```

b. cuDNN

Running `dpkg -l libcudnn8` displays the following:

```
Desired=Unknown/Install/Remove/Purge/Hold
| Status=Not/Inst/Conf-files/Unpacked/halF-conf/Half-inst/trig-aWait/Trig-pend
|/ Err?=(none)/Reinst-required (Status,Err: uppercase=bad)
||/ Name           Version             Architecture Description
+++-==============-===================-============-======================
ii  libcudnn8      8.3.2.49-1+cuda11.4 arm64        cuDNN runtime libraries
```

c. TensorRT

Running `dpkg -l tensorrt` displays the following:

```
Desired=Unknown/Install/Remove/Purge/Hold
| Status=Not/Inst/Conf-files/Unpacked/halF-conf/Half-inst/trig-aWait/Trig-pend
|/ Err?=(none)/Reinst-required (Status,Err: uppercase=bad)
||/ Name           Version             Architecture Description
+++-==============-===================-============-=====================
ii  tensorrt       8.4.0.11-1+cuda11.4 arm64        Meta package of TensorRT
```

d. OpenCV

Running `dpkg -l libopencv` displays the following:

```
Desired=Unknown/Install/Remove/Purge/Hold
| Status=Not/Inst/Conf-files/Unpacked/halF-conf/Half-inst/trig-aWait/Trig-pend
|/ Err?=(none)/Reinst-required (Status,Err: uppercase=bad)
||/ Name           Version             Architecture Description
+++-==============-===================-============-=======================
ii  libopencv      4.5.4-8-g3e4c170df4 arm64        Open Computer Vision Library
```

2. Install with SDK Manager

Download the required files in SDK Manager.

Then go through STEP 01 to STEP 04 and you are done; the process is simple.

Check the installed versions:
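Whichever installation method you used, the versions can be collected in one go. This is a minimal bash sketch, assuming the package names shown by `dpkg -l` in section 1 (`libcudnn8`, `tensorrt`, `libopencv`); `nvcc_release` is a hypothetical helper, and `dpkg-query -W -f` is used because it prints just the version field.

```shell
# Hypothetical helper: read `nvcc -V` output on stdin and print the CUDA
# release, e.g. "11.4" from "Cuda compilation tools, release 11.4, V11.4.166".
nvcc_release() {
  sed -n 's/.*release \([0-9][0-9.]*\),.*/\1/p'
}

# CUDA: only if nvcc is on PATH (see the PATH note in section 1).
if command -v nvcc >/dev/null 2>&1; then
  printf '%-10s %s\n' "CUDA" "$(nvcc -V | nvcc_release)"
fi

# Libraries: the Debian package version of each one.
# An empty value usually means the package is not installed.
if command -v dpkg-query >/dev/null 2>&1; then
  for pkg in libcudnn8 tensorrt libopencv; do
    printf '%-10s %s\n' "$pkg" "$(dpkg-query -W -f='${Version}' "$pkg" 2>/dev/null)"
  done
fi
```

Both checks are guarded, so the script simply prints less on a machine without nvcc or dpkg.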

TensorRT

TensorRT is a high-performance deep learning inference optimizer that provides low-latency, high-throughput inference deployment for deep learning applications. TensorRT can accelerate inference in hyperscale data centers, on embedded platforms, or on autonomous driving platforms. It now supports TensorFlow, Caffe, MXNet, PyTorch, and almost all other deep learning frameworks; combining TensorRT with NVIDIA GPUs enables fast, efficient inference deployment from almost any framework.

cuDNN

cuDNN is a GPU-accelerated library for deep neural networks. It emphasizes performance, ease of use, and low memory overhead. NVIDIA cuDNN can be integrated into higher-level machine learning frameworks, such as Google's TensorFlow or the popular Caffe from UC Berkeley. Its simple plug-in design lets developers focus on designing and implementing neural network models rather than tuning performance, while still getting high-performance modern parallel computing on the GPU.

CUDA

CUDA (Compute Unified Device Architecture) is a computing platform introduced by the graphics card maker NVIDIA. It is NVIDIA's general-purpose parallel computing architecture, which enables GPUs to solve complex computational problems.

The relationship between CUDA and cuDNN

Think of CUDA as a workbench with many tools on it, such as hammers and screwdrivers. cuDNN is a CUDA-based GPU acceleration library for deep learning; with it, deep learning computations can run on the GPU. It is one more tool for the job, say a wrench. However, the CUDA workbench does not come with a wrench. To run deep neural networks on CUDA you have to install cuDNN, just as you have to buy a wrench if you want to tighten a nut. Only then can the GPU do deep neural network work, and much faster than a CPU.
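Because cuDNN sits on top of CUDA, each cuDNN build targets a particular CUDA release. On JetPack this appears to be recorded in the Debian package version as a `+cuda11.4` suffix (see the `dpkg -l` listings in section 1). The bash sketch below splits such a version string; `split_cuda_build` and `built_for_cuda` are hypothetical helpers, and reading the suffix this way is an assumption about the version format, not an NVIDIA-documented rule.

```shell
# Hypothetical helper: split a Debian version such as "8.3.2.49-1+cuda11.4"
# into the library's own version and the CUDA release in its "+cuda" suffix.
# Prints "<upstream> <cuda>", using "none" when there is no "+cuda" suffix.
split_cuda_build() {
  local ver="$1"
  local upstream="${ver%%-*}"   # text before the first "-", e.g. 8.3.2.49
  local cuda="${ver##*+cuda}"   # text after the last "+cuda", e.g. 11.4
  if [ "$cuda" = "$ver" ]; then
    cuda="none"                 # pattern did not match: no CUDA suffix
  fi
  printf '%s %s\n' "$upstream" "$cuda"
}

# Succeed only if the package version's "+cuda" suffix equals the given release.
built_for_cuda() {
  local pkg_version="$1" cuda_release="$2"
  [ "$(split_cuda_build "$pkg_version" | cut -d' ' -f2)" = "$cuda_release" ]
}
```

For example, `built_for_cuda "$(dpkg-query -W -f='${Version}' libcudnn8)" 11.4 && echo "cuDNN matches CUDA 11.4"` compares the installed cuDNN package against the release reported by `nvcc -V`.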

边栏推荐

猜你喜欢

Get class files and attributes by reflection

SolidWorks template and design library are convenient for designers to call

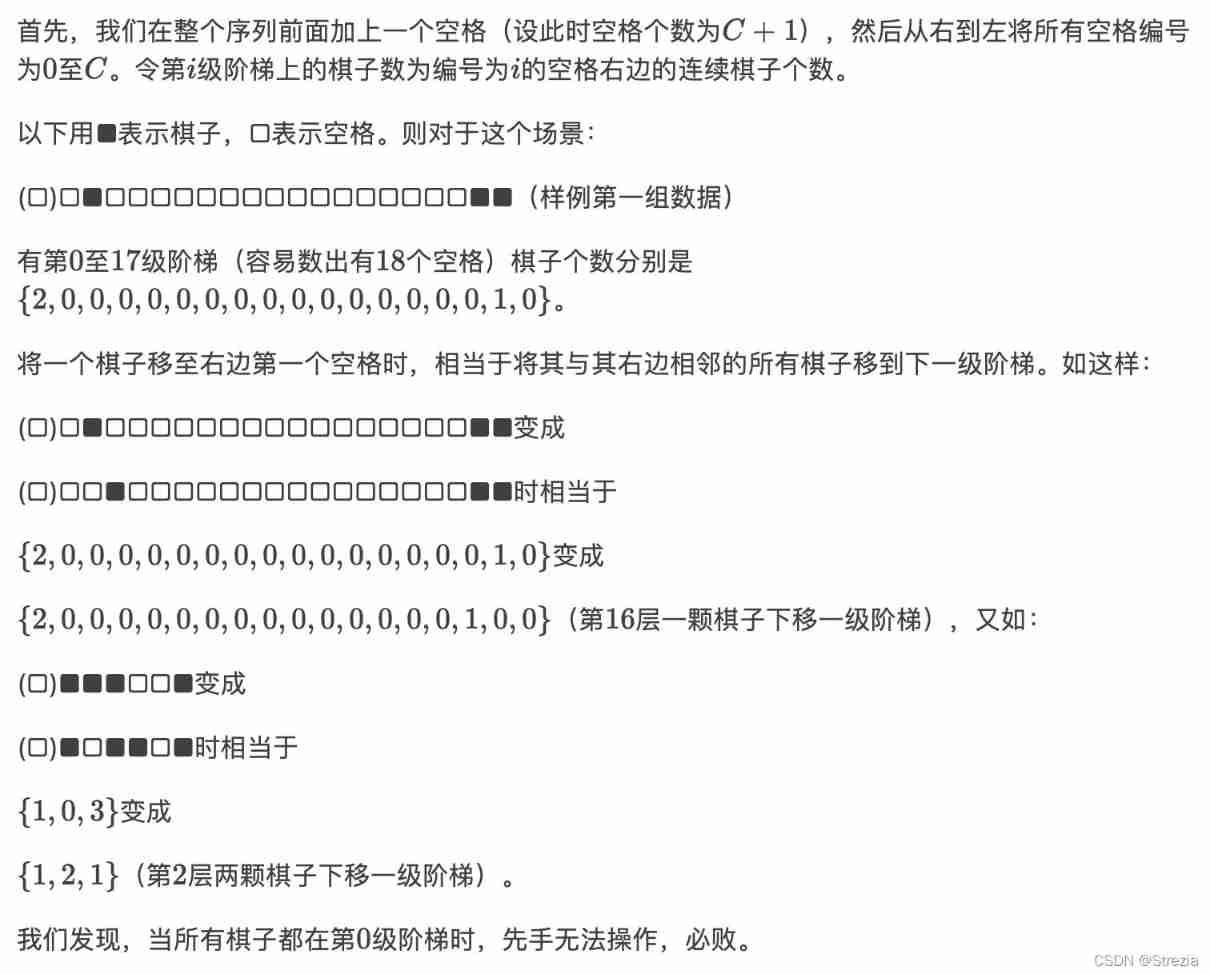

P2575 master fight

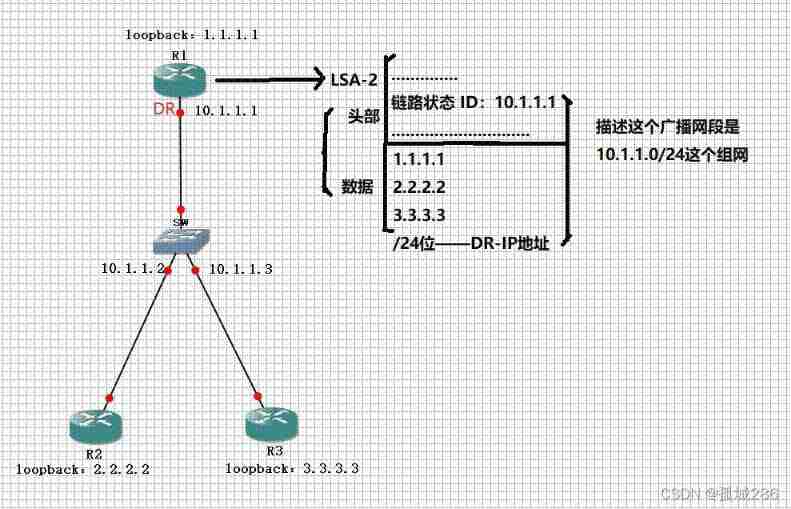

LSA Type Explanation - detailed explanation of lsa-2 (type II LSA network LSA) and lsa-3 (type III LSA network Summary LSA)

Bit of MySQL_ OR、BIT_ Count function

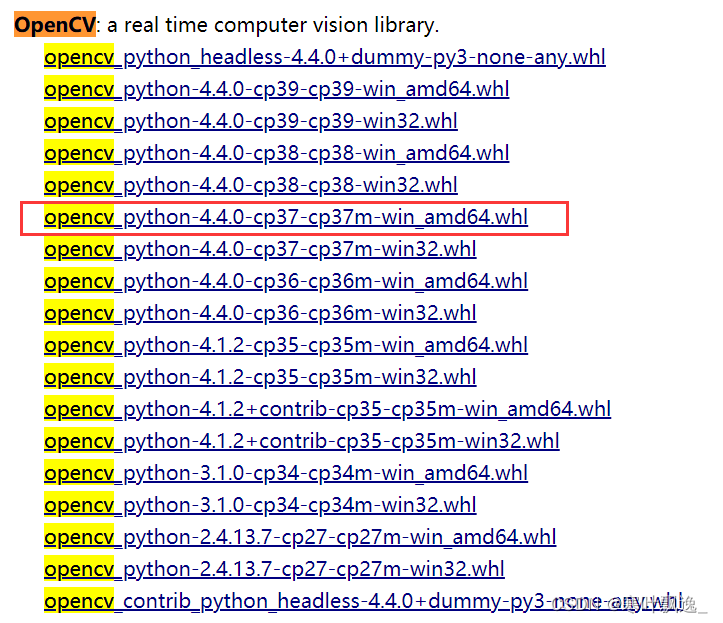

安装OpenCV--conda建立虚拟环境并在jupyter中添加此环境的kernel

Vant Weapp SwipeCell設置多個按鈕

![[QT] QT multithreading development qthread](/img/7f/661cfb00317cd2c91fb9cc23c55a58.jpg)

[QT] QT multithreading development qthread

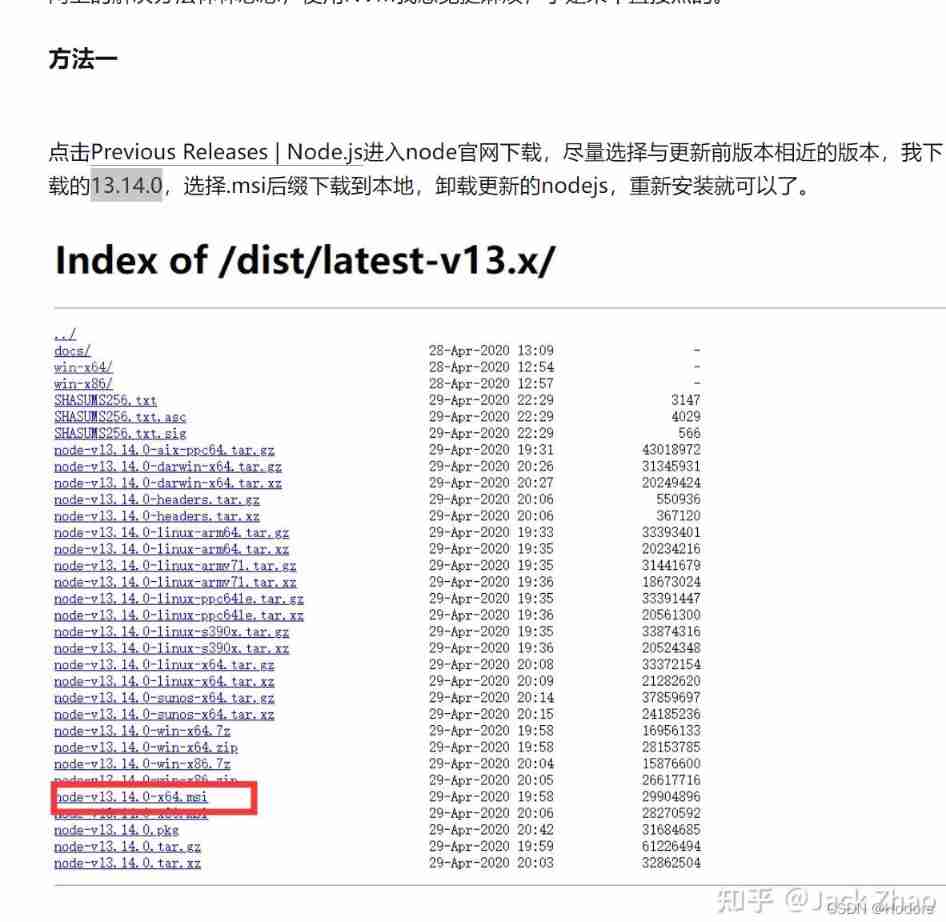

The “mode“ argument must be integer. Received an instance of Object

Day 2 document

随机推荐

Inclusion exclusion principle acwing 890 Divisible number

GDB code debugging

Edge calculation data sorting

FFmpeg build下载(包含old version)

Vant Weapp SwipeCell設置多個按鈕

Financial risk control practice -- feature derivation based on time series

Positive height system

How to make water ripple effect? This wave of water ripple effect pulls full of retro feeling

[learning] database: several cases of index failure

RecyclerView的应用

vim

5. Oracle tablespace

[learning] database: MySQL query conditions have functions that lead to index failure. Establish functional indexes

UIO driven framework

2. Addition and management of Oracle data files

4.Oracle-重做日志文件管理

Skywalking全部

Genesis builds a new generation of credit system

5.Oracle-錶空間

[moviepy] unable to find a solution for exe