当前位置:网站首页>ICML 2022 | explore the best architecture and training method of language model

ICML 2022 | explore the best architecture and training method of language model

2022-07-05 15:10:00 【Zhiyuan community】

This article introduces two articles published in ICML 2022 The paper of , Researchers are mainly from Google. Both papers are very practical analytical papers . It's different from the common papers' innovation in the model , Both papers are aimed at existing NLP The structure and training method of language model 、 Explore its advantages and disadvantages in different scenarios and summarize empirical rules .

Here, the author first collates the main experimental conclusions of the two papers :

1. The first paper found that although encoder-decoder It has occupied the absolute mainstream of machine translation , But when the model parameters are large , Design language model reasonably LM It can be compared with the traditional encoder-decoder The performance of architecture for machine translation tasks is comparable ; And LM stay zero-shot scenario 、 Better performance in small language machine translation 、 In large language machine translation, it also has off-target Fewer benefits .

2. The second paper found that I was not doing finetuning Under the circumstances ,Causal decoder LM framework +full language modeling Training in zero-shot The best performance in the task ; And there are multitasking prompt finetuning when , It is encoder-decoder framework +masked language modeling Training has the best zero-shot performance .

The paper 1:Examining Scaling and Transfer of Language Model Architectures for Machine Translation

link :https://arxiv.org/abs/2202.00528

The paper 2:What Language Model Architecture and Pretraining Objective Work Best for Zero-Shot Generalization?

边栏推荐

猜你喜欢

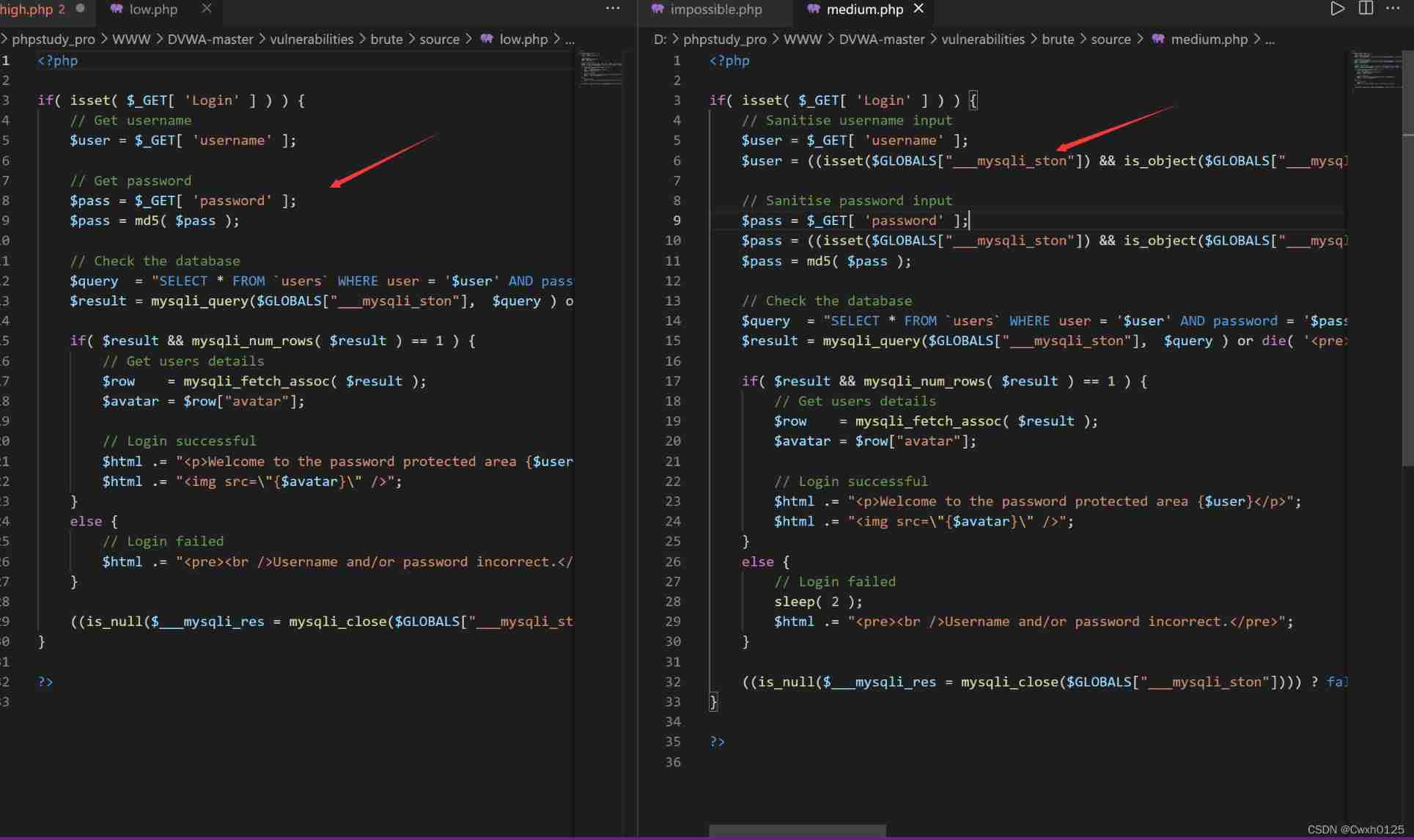

DVWA range clearance tutorial

爱可可AI前沿推介(7.5)

![1330: [example 8.3] minimum steps](/img/69/9cb13ac4f47979b498fa2254894ed1.gif)

1330: [example 8.3] minimum steps

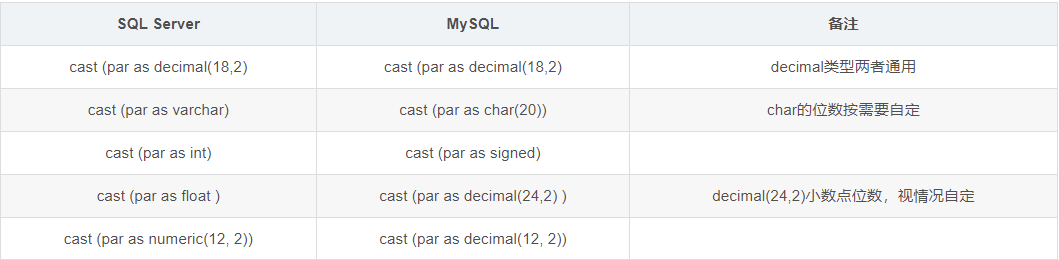

sql server学习笔记

![P1451 calculate the number of cells / 1329: [example 8.2] cells](/img/c4/c62f3464608dbd6cf776c2cd7f07f3.png)

P1451 calculate the number of cells / 1329: [example 8.2] cells

B站做短视频,学抖音死,学YouTube生?

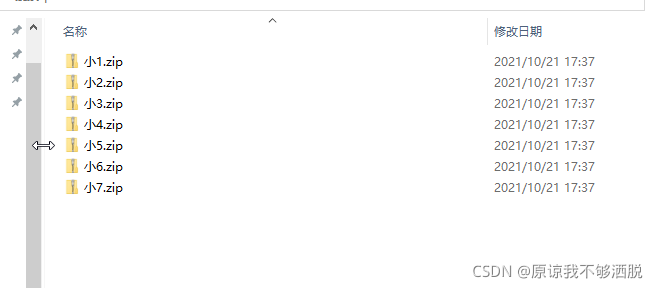

Change multiple file names with one click

亿咖通科技通过ISO27001与ISO21434安全管理体系认证

超越PaLM!北大碩士提出DiVeRSe,全面刷新NLP推理排行榜

危机重重下的企业发展,数字化转型到底是不是企业未来救星

随机推荐

Leetcode: Shortest Word Distance II

Under the crisis of enterprise development, is digital transformation the future savior of enterprises

1330:【例8.3】最少步数

PHP high concurrency and large traffic solution (PHP interview theory question)

做研究无人咨询、与学生不交心,UNC助理教授两年教职挣扎史

TS所有dom元素的类型声明

两个BI开发,3000多张报表?如何做的到?

漫画:优秀的程序员具备哪些属性?

Coding devsecops helps financial enterprises run out of digital acceleration

Using tensorboard to visualize the training process in pytoch

机器学习框架简述

Common PHP interview questions (1) (written PHP interview questions)

Easyocr character recognition

P6183 [USACO10MAR] The Rock Game S

Fr exercise topic --- comprehensive question

ICML 2022 | 探索语言模型的最佳架构和训练方法

Creation and optimization of MySQL index

Detailed explanation of usememo, memo, useref and other relevant hooks

B站做短视频,学抖音死,学YouTube生?

sql server char nchar varchar和nvarchar的区别