ByteDance open-sources a GAN model compression framework that saves up to 97.8% of computation (ICCV 2021)

2022-07-06 16:49:00 【ByteDance Technology】

Suppose you want to turn the outline of a shoe into a picture of the actual shoe.

How much computation does that take?

With the most basic Pix2Pix model, it takes 56.8G MACs.

After the Pix2Pix model is compressed with a new technique, it takes only 1.219G MACs, 1/46.6 of the original, which is a large saving in computation.

The technique used here is Online Multi-Granularity Distillation (OMGD), an algorithm that ByteDance's technical team published at ICCV 2021, a top computer vision conference.

This model compression framework targets GAN models that are too large and too computationally expensive. The code is now open source (the address is at the end of the article), together with pre-trained CycleGAN and Pix2Pix models, and the technique has already been deployed in products such as TikTok.

Compared with similar model compression algorithms, the new OMGD framework not only compresses models to a smaller size, it also preserves quality better.

For example, when turning horses into zebras:

(MACs denotes the computation consumed; the number in parentheses is the improvement factor.)

When turning summer into winter:

And when restoring segmented street scenes into photos (pay attention to the cyclist):

Experiments show that this technique can reduce a GAN model's computation to as little as 1/46 of the original, and its parameter count to as little as 1/82.

In other words, it saves 97.8% of the computation.
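The relationship between the reduction factor and the percentage saved can be checked with a few lines of Python. This is only an arithmetic sketch that uses the two Pix2Pix MACs figures quoted above; the function and variable names are made up for illustration.

```python
from typing import Tuple

# MACs figures quoted in the article for Pix2Pix (in G MACs).
ORIGINAL_MACS = 56.8
COMPRESSED_MACS = 1.219


def compression_stats(original: float, compressed: float) -> Tuple[float, float]:
    """Return (reduction factor, fraction of computation saved)."""
    factor = original / compressed
    saved = 1.0 - compressed / original
    return factor, saved


factor, saved = compression_stats(ORIGINAL_MACS, COMPRESSED_MACS)
print(f"{factor:.1f}x fewer MACs, {saved:.2%} saved")
# prints "46.6x fewer MACs, 97.85% saved"
```

The article's 97.8% is this value truncated to one decimal place.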

How OMGD works

Model compression usually relies on knowledge distillation: a bulky model with many parameters acts as the "teacher model" and supervises the optimization of a small "student model" with few parameters, so that the student acquires the teacher's knowledge without growing in size.
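As a rough illustration of the teacher-student idea, the toy below fits a two-parameter student to the outputs of a fixed teacher function with plain gradient descent. Everything in it is hypothetical (the `teacher` function, the `distill` helper, the squared-error objective); it is not OMGD's loss and not code from the OMGD repository, and the toy teacher is no larger than the student.

```python
# Toy knowledge distillation: a frozen "teacher" supervises a "student".
# Both models and all names are hypothetical, chosen only for illustration.


def teacher(x: float) -> float:
    """Stand-in for a large pre-trained model (here just a fixed function)."""
    return 2.0 * x + 1.0


def distill(inputs, steps: int = 500, lr: float = 0.05):
    """Fit a student y = w * x + b to the teacher's outputs by gradient descent."""
    w, b = 0.0, 0.0
    n = len(inputs)
    for _ in range(steps):
        grad_w = 0.0
        grad_b = 0.0
        for x in inputs:
            # Distillation error: student output minus teacher output.
            # No ground-truth label is used anywhere.
            err = (w * x + b) - teacher(x)
            grad_w += 2.0 * err * x / n
            grad_b += 2.0 * err / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = distill([-1.0, -0.5, 0.0, 0.5, 1.0])
print(f"student learned w={w:.3f}, b={b:.3f}")
```

In a real setting the student would be a much smaller network than the teacher, and the distance between their outputs would be measured on images rather than scalars.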

OMGD optimizes a single student model with two teacher models that complement each other in network width and depth. The whole pipeline looks like this:

The framework transfers concepts at different levels from the intermediate layers and the output layer, and it can be trained in a discriminator-free and ground-truth-free setting. This refines the knowledge being transferred and optimizes the online distillation scheme as a whole.
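One way to picture "different levels" of supervision is a loss with an output-layer term for each teacher plus an intermediate-layer term. The sketch below is a simplification under stated assumptions: the L1 distance, the `feat_weight` value, and the choice of which teacher supplies intermediate features are all assumptions for illustration, not the losses used in the paper.

```python
from typing import Sequence


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equally sized vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def multi_granularity_loss(
    student_out: Sequence[float],
    wide_teacher_out: Sequence[float],
    deep_teacher_out: Sequence[float],
    student_feat: Sequence[float],
    wide_teacher_feat: Sequence[float],
    feat_weight: float = 0.5,  # hypothetical weighting, not from the paper
) -> float:
    """Combine output-layer and intermediate-layer distillation terms.

    No discriminator score and no ground-truth image appear here:
    the student is supervised only by the two teachers.
    """
    # Output-layer granularity: match both teachers' generated outputs.
    output_term = l1(student_out, wide_teacher_out) + l1(student_out, deep_teacher_out)
    # Intermediate-layer granularity: match hidden features (assumed teacher).
    feature_term = l1(student_feat, wide_teacher_feat)
    return output_term + feat_weight * feature_term


loss = multi_granularity_loss(
    student_out=[0.2, 0.4],
    wide_teacher_out=[0.0, 0.4],
    deep_teacher_out=[0.2, 0.8],
    student_feat=[1.0, 1.0, 1.0],
    wide_teacher_feat=[1.0, 0.0, 1.0],
)
print(f"combined distillation loss: {loss:.4f}")
```

In practice the outputs would be images and the features would be activation maps, which may need a projection layer when the student has fewer channels than the teacher.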

Experimental data on two well-known models, Pix2Pix and CycleGAN, show that OMGD achieves the best image generation quality with the fewest parameters and the least computation.

(In the rightmost column, a smaller FID score indicates better generation quality.)

Why make large models smaller?

Engineers on the ByteDance technical team said that this is the first technical solution to compress GANs through online knowledge distillation, and that it has already been deployed in TikTok.

That's right: the entertaining special-effect props you see in TikTok all need algorithm models to run. GANs are a general-purpose method for image-related effects in particular, and this GAN compression scheme is already used in TikTok effects such as "Motion pictures":

"Dance gloves":

and "Three screen life":

However, GAN models are usually large and need a lot of computation, so deploying them on mobile phones, especially low-end devices with limited computing power, is a great challenge. A member of the OMGD R&D team said: "We measure a model's coverage, that is, how many phone models it can run smoothly on. Once a model is successfully compressed, it can cover more phones and reach more users. For example, the original model might only run on an iPhone 11, while the compressed one can also run on an iPhone 7."

Model compression is therefore a hard requirement. Making GANs usable by more people and providing more inclusive services is a direction the technology industry has long pursued.

The ByteDance technical team first invested in model compression research in 2017. Apps such as Toutiao, TikTok, Jianying and Xigua Video all use the related technology, and the team also won two tracks of the 2020 IEEE Low-Power Computer Vision Challenge (LPCV).

Before OMGD, the team usually used distillation or pruning algorithms to compress GAN models. Because these models require very high input resolution, the computation remained very large and the compression was still far from the limit.

How can more extreme compression be achieved?

After studying many existing methods from academia, the ByteDance team did not find one that suited the company's business, so they decided to do their own research. They were the first in GAN model compression to use two complementary teacher models to train the same student model, and the experiments succeeded.

Today, in practice, OMGD runs 20% to 30% faster than the previous method, and in some cases up to 80% faster.

Moreover, as an "online" compression method, OMGD greatly reduces the complexity of producing GAN models. "Online" here does not mean being connected to a network in the everyday sense; it means that the distillation process is completed in one step. "Previous GAN compression methods were carried out in several steps, for example pre-training first, then training again after compression, followed by further steps, which made the whole thing rather complicated. Our new method completes the whole process in one step, and its results are also much better than those of other methods," a team engineer said.
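The difference from a multi-stage pipeline can be sketched with a toy training loop in which a teacher and a student are updated in the same pass, so no separate pre-training stage is needed. This assumes that "one step" corresponds to joint teacher-student training; the scalar models, the `train_online` helper and the learning rate are all hypothetical and are not taken from the OMGD code.

```python
def train_online(data, steps: int = 2000, lr: float = 0.05):
    """Update a teacher and a student in the same loop (single-stage training).

    Both models are one-parameter linear maps y = w * x, purely for illustration.
    """
    w_teacher, w_student = 0.0, 0.0
    for step in range(steps):
        x, y = data[step % len(data)]

        teacher_out = w_teacher * x
        student_out = w_student * x

        # Teacher step: learns from the ground-truth target y.
        w_teacher -= lr * 2.0 * (teacher_out - y) * x

        # Student step: learns only from the teacher's current output,
        # never from y, so it improves progressively as the teacher does.
        w_student -= lr * 2.0 * (student_out - teacher_out) * x
    return w_teacher, w_student


# Toy dataset generated by y = 3x.
data = [(x, 3.0 * x) for x in (0.5, 1.0, 1.5, 2.0)]
w_teacher, w_student = train_online(data)
print(f"teacher w={w_teacher:.4f}, student w={w_student:.4f}")
```

A multi-stage alternative would first run the teacher loop to convergence, freeze it, and only then start a second loop for the student.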

This kind of model compression technology not only saves computing power and energy, it also gives users a smoother experience, helps creators find inspiration, and enriches everyday life.

Related links

Paper:

https://arxiv.org/abs/2108.06908

GitHub code and pre-trained models:

https://github.com/bytedance/OMGD

边栏推荐

- LeetCode 1558. Get the minimum number of function calls of the target array

- JS time function Daquan detailed explanation ----- AHAO blog

- 7-4 harmonic average

- 7-10 punch in strategy

- string. How to choose h and string and CString

- Solve the single thread scheduling problem of intel12 generation core CPU (II)

- LeetCode 1562. Find the latest group of size M

- Chapter 5 yarn resource scheduler

- 第6章 Rebalance详解

- Educational Codeforces Round 122 (Rated for Div. 2)

猜你喜欢

Simple records of business system migration from Oracle to opengauss database

~81 long table

Use JQ to realize the reverse selection of all and no selection at all - Feng Hao's blog

SQL quick start

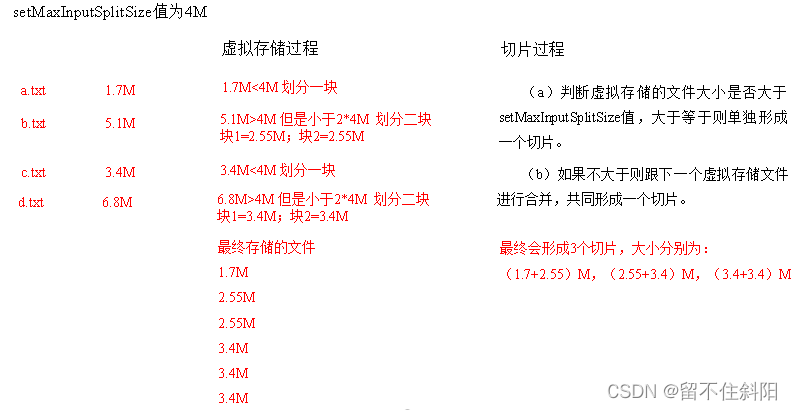

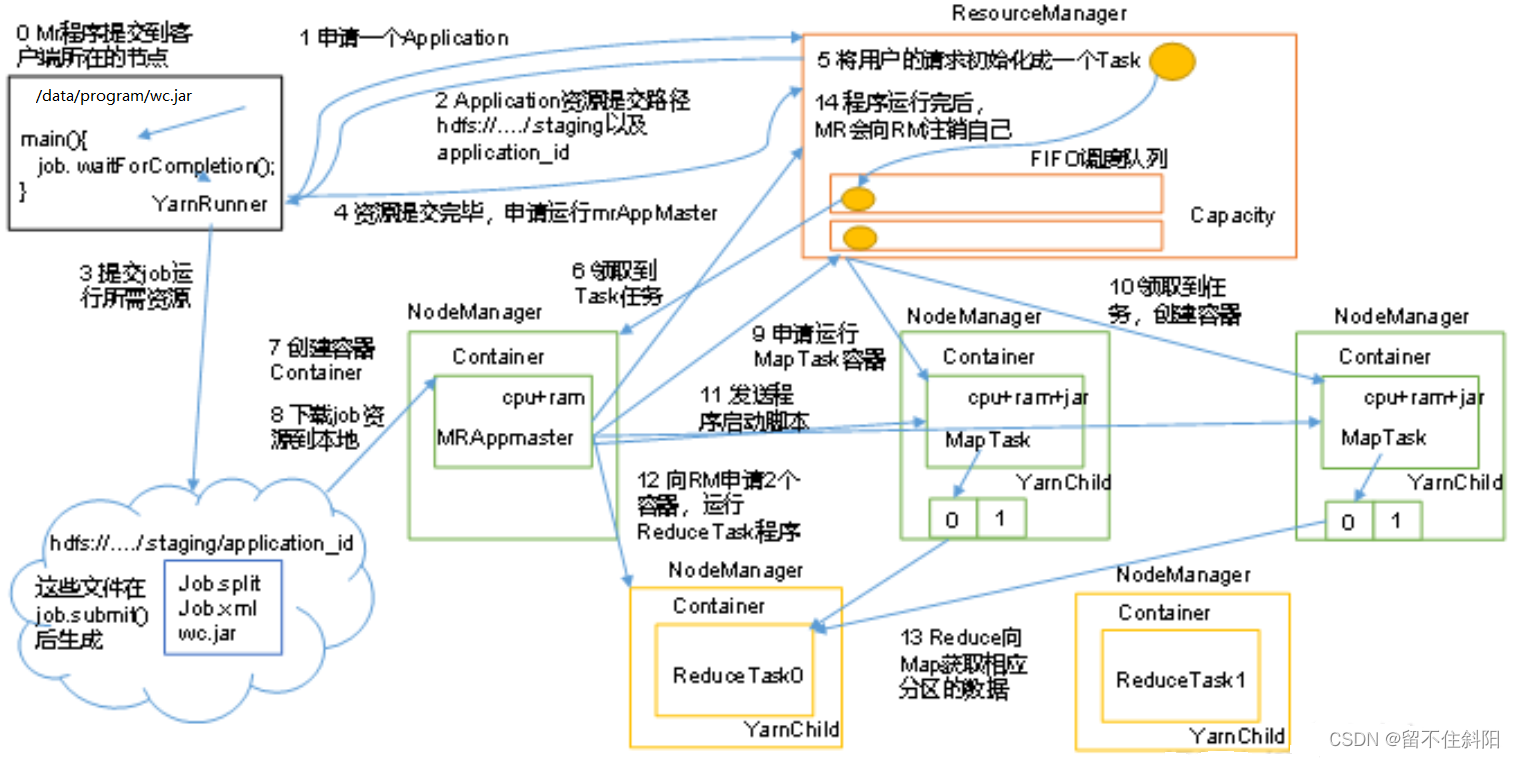

第三章 MapReduce框架原理

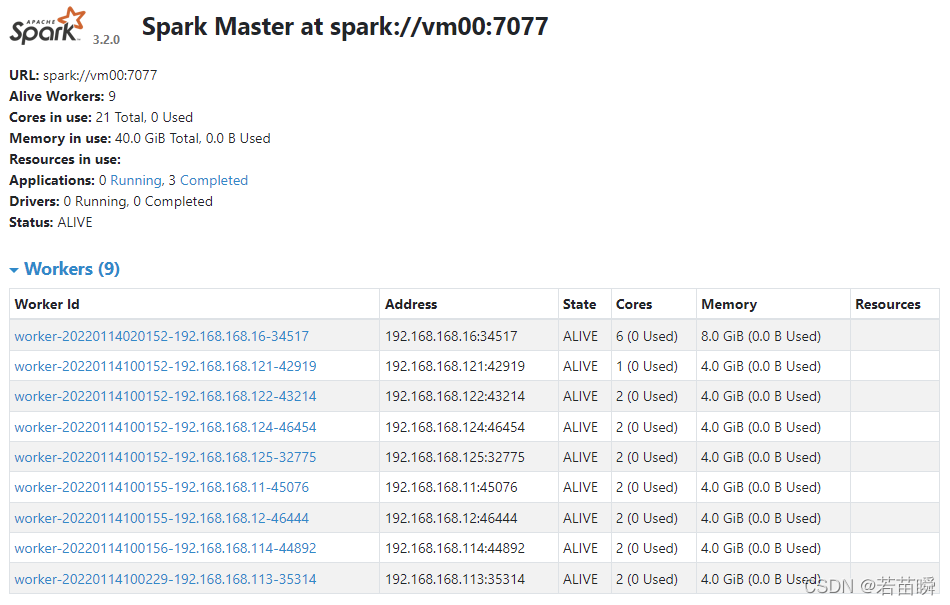

The concept of spark independent cluster worker and executor

字节跳动技术新人培训全记录:校招萌新成长指南

第五章 Yarn资源调度器

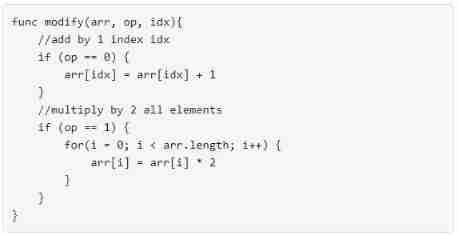

LeetCode 1558. Get the minimum number of function calls of the target array

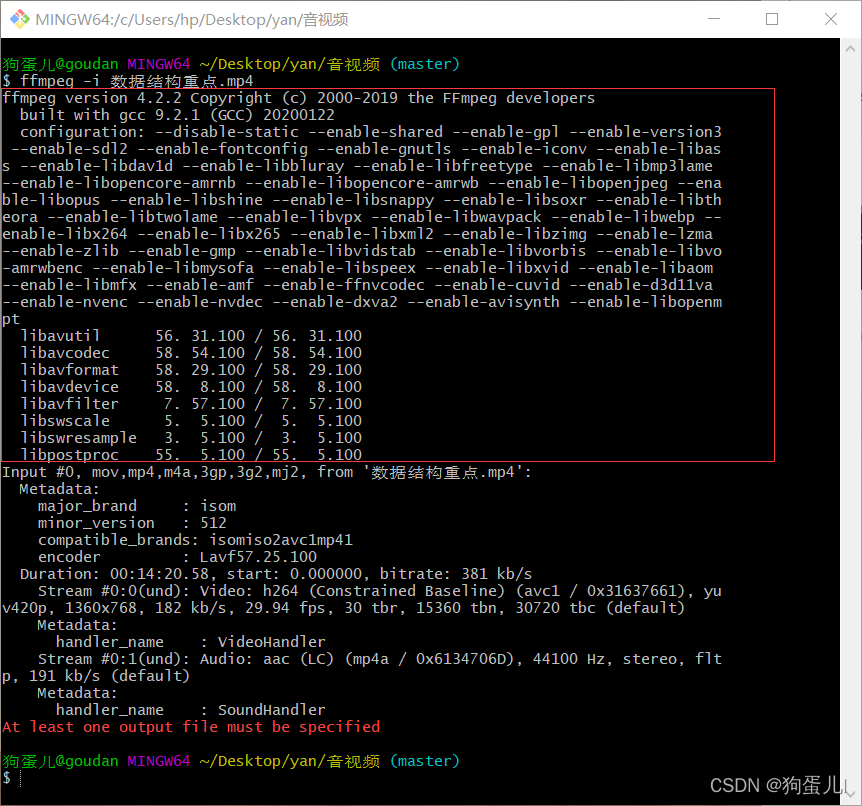

ffmpeg命令行使用

随机推荐

7-4 harmonic average

Spark独立集群Worker和Executor的概念

Native JS realizes the functions of all selection and inverse selection -- Feng Hao's blog

Summary of game theory

Eureka single machine construction

Two weeks' experience of intermediate software designer in the crash soft exam

Chapter 2 shell operation of hfds

7-5 blessing arrived

Market trend report, technological innovation and market forecast of desktop electric tools in China

音视频开发面试题

LeetCode 1641. Count the number of Lexicographic vowel strings

第2章 HFDS的Shell操作

~78 radial gradient

Chapter 5 yarn resource scheduler

Tree of life (tree DP)

力扣leetcode第 280 场周赛

Chapter 7__ consumer_ offsets topic

~87 animation

Continue and break jump out of multiple loops

LeetCode 1560. The sector with the most passes on the circular track