Data analysis - Laying the conceptual groundwork

2022-07-05 23:13:00 【Dutkig】

Three parts of data analysis

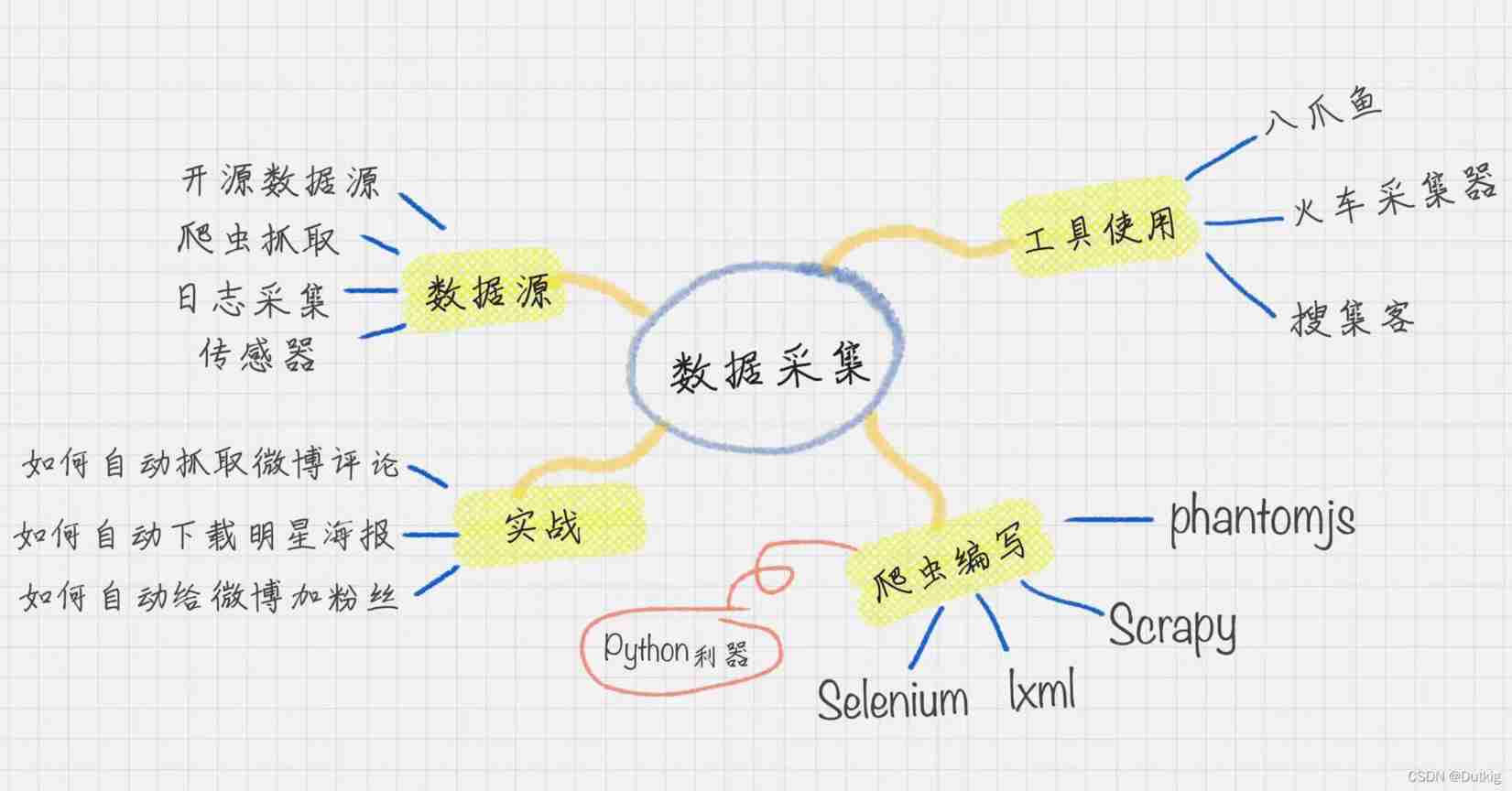

Data collection

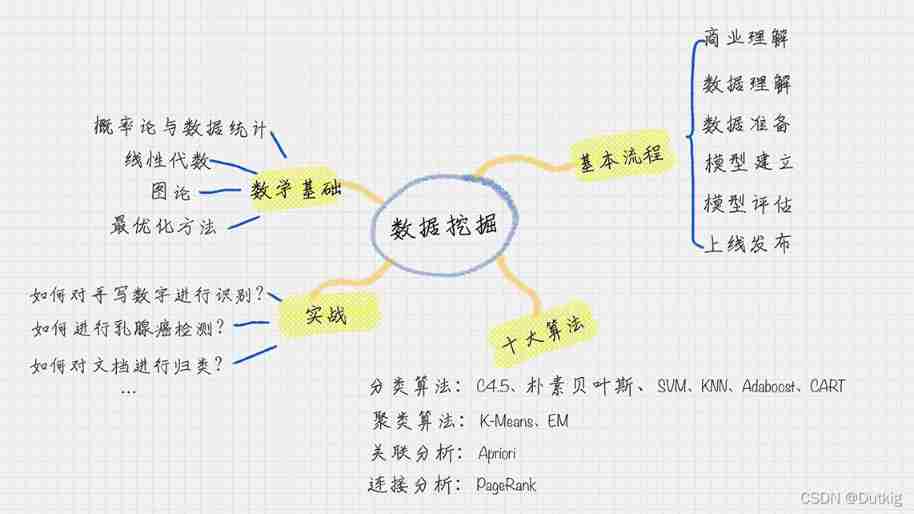

Data mining

The core of data mining is extracting the commercial value of data, which is what we usually call business intelligence (BI).

You need to understand and master the following:

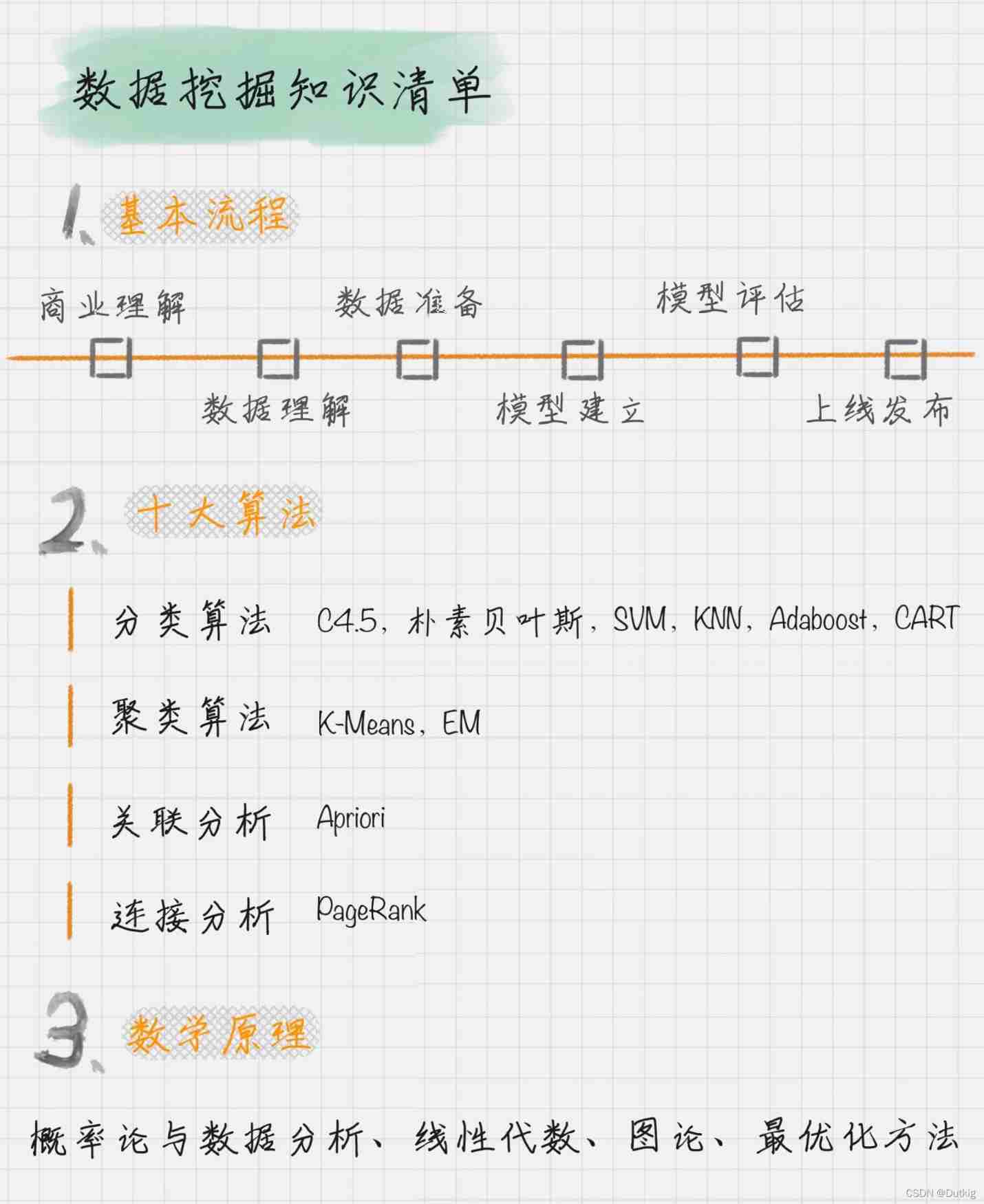

① The basic workflow

② The ten algorithms

③ Some mathematical foundations

Data visualization

This part is mainly about learning to use the relevant tools.

Two principles

- Where possible, use third-party libraries to implement your ideas.

- Choose the tools with the most users: they tend to have fewer bugs, complete documentation, and plenty of examples.

The basic flow

- Business understanding: understand the project requirements from a business perspective, so that the analysis serves the business.

- Data understanding: explore the data, including describing it and checking its quality, to form a preliminary picture of it.

- Data preparation: data cleaning and integration.

- Modeling: apply mining models and tune them to get better classification results.

- Model evaluation: evaluate the model, review every step of building it, and confirm whether it meets the business objectives.

- Deployment: release the model online.

Ten algorithms of data mining

By purpose, the ten algorithms fall into four categories:

- Classification: C4.5, Naive Bayes, SVM, KNN, AdaBoost, CART

- Clustering: K-Means, EM

- Association analysis: Apriori

- Link analysis: PageRank

First, a brief introduction to each of the 10 algorithms:

C4.5

A decision tree algorithm. It prunes the tree while building it, can handle continuous attributes, and can also handle incomplete data.

Naive Bayes

Naive Bayes is based on probability theory. To classify an unknown object, it computes the probability of each category given the object's observed features, and assigns the object to the category with the highest probability.
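The idea can be sketched for categorical features as follows. This is a minimal illustration, not a reference implementation: the function names `train` and `predict`, the weather data, and the use of add-one (Laplace) smoothing are all assumptions made for the example.

```python
from collections import Counter, defaultdict

def train(samples, labels):
    """Collect the counts needed to estimate P(class) and P(value | class)."""
    class_counts = Counter(labels)
    # value_counts[label][i][value]: how often feature i equals value within a class
    value_counts = defaultdict(lambda: defaultdict(Counter))
    # distinct_values[i]: all values seen for feature i (used for smoothing)
    distinct_values = defaultdict(set)
    for features, label in zip(samples, labels):
        for i, value in enumerate(features):
            value_counts[label][i][value] += 1
            distinct_values[i].add(value)
    return class_counts, value_counts, distinct_values

def predict(model, features):
    """Return the class with the highest (unnormalised) posterior probability."""
    class_counts, value_counts, distinct_values = model
    total = sum(class_counts.values())
    scores = {}
    for label, count in class_counts.items():
        score = count / total  # prior P(class)
        for i, value in enumerate(features):
            # Add-one smoothing (an assumption here) avoids zero probabilities
            seen = value_counts[label][i][value]
            score *= (seen + 1) / (count + len(distinct_values[i]))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical toy data: (weather, temperature) -> go outside?
samples = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"),
           ("rainy", "cool"), ("overcast", "hot"), ("overcast", "cool")]
labels = ["no", "no", "yes", "yes", "yes", "yes"]

model = train(samples, labels)
print(predict(model, ("sunny", "hot")))   # prints "no"
print(predict(model, ("rainy", "cool")))  # prints "yes"
```

The "naive" part is the multiplication inside the loop: each feature is treated as independent of the others given the class.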

SVM

A support vector machine (SVM) builds a classification model around a hyperplane that separates the classes.

KNN

In the K-nearest neighbor algorithm (K-Nearest Neighbor, KNN), each sample can be represented by its k nearest neighbors. If most of a sample's k nearest neighbors belong to category A, the sample is assigned to category A as well.
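A minimal sketch of this voting rule, assuming Euclidean distance and a simple majority vote; the function name `knn_predict` and the sample points are made up for illustration.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify query by majority vote among its k nearest training points."""
    # Pair every training point's distance to the query with its label
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    # The most common label among the k nearest neighbors wins
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical 2-D data: three points near (1, 1) and three near (6, 6)
points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
labels = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(points, labels, (2, 2)))  # prints "A"
print(knn_predict(points, labels, (6, 5)))  # prints "B"
```

There is no training step: all the work happens at prediction time, which is why KNN gets slow on large data sets unless an index structure is used.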

AdaBoost

AdaBoost builds a combined classification model during training. It is a boosting algorithm: it lets us combine multiple weak classifiers into one strong classifier, so AdaBoost is also a commonly used classification algorithm.

CART

CART stands for Classification and Regression Trees. As the name suggests, it can build two kinds of tree: a classification tree and a regression tree. Like C4.5, it is a decision tree learning method.

Apriori

Apriori is an algorithm for mining association rules. It reveals relationships between items by mining frequent itemsets, and is widely used in business mining and network security. A frequent itemset is a set of items that often appear together; an association rule suggests that there may be a strong relationship between two items.
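The frequent-itemset step can be sketched as below. This is a simplified illustration: it stops at frequent itemsets and does not derive the association rules themselves. The function name `apriori`, the shopping baskets, and the use of an absolute count as the support threshold are assumptions for the example.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {itemset: support count} for every frequent itemset."""
    transactions = [frozenset(t) for t in transactions]
    # Level 1 candidates: every single item that occurs anywhere
    candidates = {frozenset([item]) for t in transactions for item in t}
    frequent = {}
    k = 1
    while candidates:
        # Count how many transactions contain each candidate
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        level = {c: n for c, n in counts.items() if n >= min_support}
        frequent.update(level)
        k += 1
        # Join step: merge frequent (k-1)-itemsets into k-itemsets
        joined = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must itself be frequent
        candidates = {
            c for c in joined
            if all(frozenset(s) in level for s in combinations(c, k - 1))
        }
    return frequent

# Hypothetical shopping baskets
baskets = [
    {"milk", "bread"},
    {"milk", "diapers", "beer"},
    {"bread", "diapers", "beer"},
    {"milk", "bread", "diapers"},
    {"milk", "bread", "beer"},
]

for itemset, count in apriori(baskets, min_support=3).items():
    print(sorted(itemset), count)
```

The prune step is what makes the approach practical: an itemset can only be frequent if all of its subsets are frequent, so most large candidates are discarded without being counted.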

K-Means

K-Means is a clustering algorithm. Think of it this way: the goal is to divide the objects into K categories. Suppose each category has a "center point", a kind of opinion leader, which is the core of that category. To classify a new point, just compute the distance between it and each of the K center points; the point joins the category of the nearest center.
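A minimal sketch of the idea, assuming Euclidean distance, a fixed number of iterations, and a deliberately naive initialisation that takes the first k points as starting centers (real implementations usually pick starting centers more carefully). The names `assign` and `k_means` and the sample points are hypothetical.

```python
import math

def assign(point, centers):
    """Index of the center nearest to point."""
    return min(range(len(centers)), key=lambda i: math.dist(point, centers[i]))

def k_means(points, k, iterations=10):
    """Alternate between assigning points to centers and moving the centers."""
    centers = [points[i] for i in range(k)]  # naive start: first k points (assumption)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[assign(p, centers)].append(p)
        # Move each center to the mean of its cluster; keep it if the cluster is empty
        centers = [
            tuple(sum(coords) / len(cluster) for coords in zip(*cluster))
            if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
    return centers

# Hypothetical 2-D data with two obvious groups
points = [(1, 1), (9, 9), (1, 2), (2, 1), (8, 9), (9, 8)]
centers = k_means(points, k=2)

# Classifying a new point only needs the distances to the K centers
print(assign((2, 2), centers))  # prints 0
print(assign((7, 8), centers))  # prints 1
```

The `assign` function is exactly the step described in the text: measure the distance to each center and pick the nearest one.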

EM

The EM algorithm, also called the expectation-maximization algorithm, is a method for finding maximum likelihood estimates of parameters. The principle: suppose we want to estimate parameters A and B, both unknown at the start, where knowing A gives information about B and, conversely, knowing B gives information about A. We first give A an initial value and use it to estimate B, then re-estimate A from that estimate of B, and repeat until convergence.

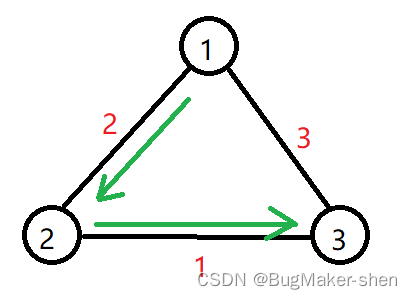
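The alternating estimation can be illustrated with the well-known two-coin problem, which is swapped in here as an example and does not come from the original text. Each trial is a series of flips from one of two biased coins, but we do not know which coin was used. The sketch assumes both coins are equally likely to be picked; the function name `em_two_coins`, the starting guesses, and the data are hypothetical.

```python
def em_two_coins(head_counts, flips_per_trial, theta_a=0.6, theta_b=0.5, iterations=20):
    """Estimate the head probabilities of two coins when the coin used per trial is hidden."""
    for _ in range(iterations):
        heads_a = tails_a = heads_b = tails_b = 0.0
        for heads in head_counts:
            tails = flips_per_trial - heads
            # E-step: how likely is it that coin A (or B) produced this trial?
            # The binomial coefficient is the same for both coins, so it cancels out.
            like_a = theta_a ** heads * (1 - theta_a) ** tails
            like_b = theta_b ** heads * (1 - theta_b) ** tails
            weight_a = like_a / (like_a + like_b)
            weight_b = 1 - weight_a
            # Credit the flips to each coin in proportion to its weight
            heads_a += weight_a * heads
            tails_a += weight_a * tails
            heads_b += weight_b * heads
            tails_b += weight_b * tails
        # M-step: re-estimate each coin's bias from its weighted counts
        theta_a = heads_a / (heads_a + tails_a)
        theta_b = heads_b / (heads_b + tails_b)
    return theta_a, theta_b

# Hypothetical data: number of heads in five trials of 10 flips each
theta_a, theta_b = em_two_coins([5, 9, 8, 4, 7], flips_per_trial=10)
print(round(theta_a, 2), round(theta_b, 2))
```

The hidden coin identity plays the role of one unknown and the coin biases play the role of the other: each is estimated from the current guess of the other, and the loop repeats until the estimates settle.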

PageRank

PageRank originated from measuring the influence of academic papers: the more often a paper is cited, the greater its influence. Google creatively applied the same idea to computing web page weights: the more pages a page links out to, the more "references" it has; the more often a page is linked to, the more times it is cited. Based on this principle, we can compute a weight for each page.
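A minimal sketch of this computation by repeated redistribution of weight. Several things here are assumptions not stated in the text: the damping factor of 0.85, the fixed iteration count, spreading the weight of pages without outgoing links evenly over all pages, and the four-page link graph. Every link target is assumed to appear as a key in `links`.

```python
def pagerank(links, damping=0.85, iterations=50):
    """links maps each page to the list of pages it links out to."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1 / n for page in pages}  # start with equal weight
    for _ in range(iterations):
        # Every page keeps a small base weight regardless of incoming links
        new_rank = {page: (1 - damping) / n for page in pages}
        for page, outgoing in links.items():
            if outgoing:
                # A page splits its weight equally among the pages it links to
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # Page without outgoing links: spread its weight over all pages
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank

# Hypothetical link graph: C is linked to by A, B and D; nobody links to D
links = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}

for page, weight in sorted(pagerank(links).items(), key=lambda item: -item[1]):
    print(page, round(weight, 3))
```

In this graph C ends up with the highest weight because three pages link to it, while D, which no page links to, keeps only the base weight.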

边栏推荐

- Go language implementation principle -- map implementation principle

- Selenium+pytest automated test framework practice

- 【Note17】PECI(Platform Environment Control Interface)

- 媒体查询:引入资源

- leecode-学习笔记

- Leetcode sword finger offer brush questions - day 21

- Fix the memory structure of JVM in one article

- 2022.02.13 - SX10-30. Home raiding II

- Krypton Factor purple book chapter 7 violent solution

- Basic knowledge of database (interview)

猜你喜欢

查看网页最后修改时间方法以及原理简介

2022 R2 mobile pressure vessel filling review simulation examination and R2 mobile pressure vessel filling examination questions

Debian 10 installation configuration

Practice of concurrent search

Registration of Electrical Engineering (elementary) examination in 2022 and the latest analysis of Electrical Engineering (elementary)

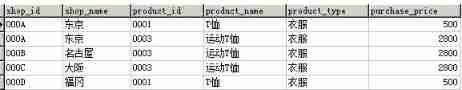

Alibaba Tianchi SQL training camp task4 learning notes

Element operation and element waiting in Web Automation

如何快速理解复杂业务,系统思考问题?

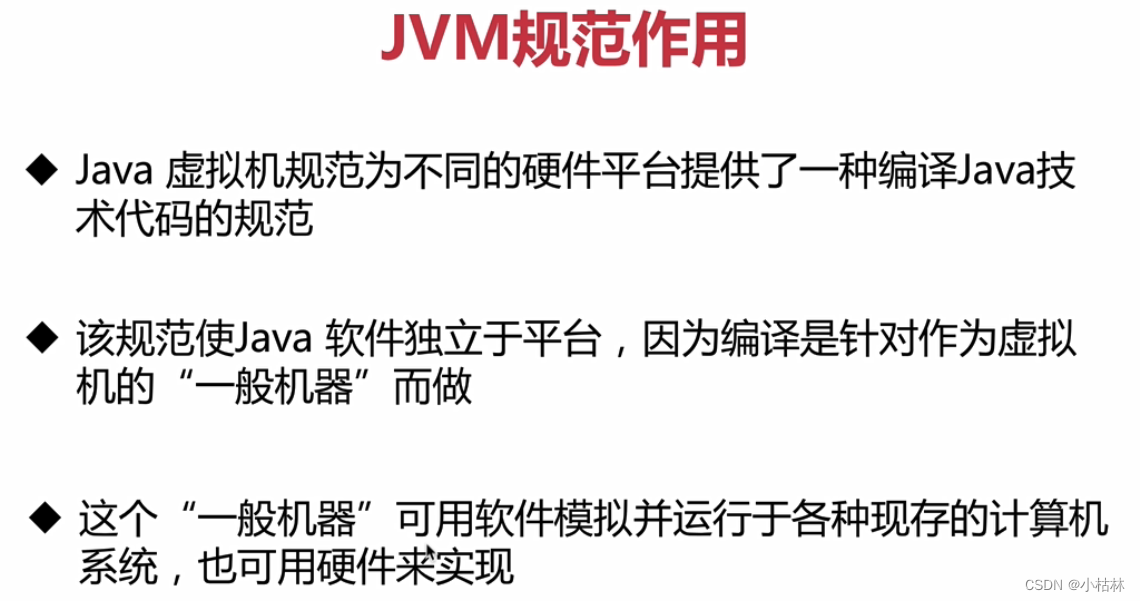

3: Chapter 1: understanding JVM specification 2: JVM specification, introduction;

终于搞懂什么是动态规划的

随机推荐

Element positioning of Web Automation

Matlab smooth curve connection scatter diagram

Alibaba Tianchi SQL training camp task4 learning notes

Debian 10 installation configuration

一文搞定JVM的内存结构

Week 17 homework

How to quickly understand complex businesses and systematically think about problems?

Mathematical formula screenshot recognition artifact mathpix unlimited use tutorial

Use of grpc interceptor

Three.JS VR看房

3 find the greatest common divisor and the least common multiple

Global and Chinese markets of tantalum heat exchangers 2022-2028: Research Report on technology, participants, trends, market size and share

Metasploit(msf)利用ms17_010(永恒之蓝)出现Encoding::UndefinedConversionError问题

Global and Chinese markets for reciprocating seal compressors 2022-2028: Research Report on technology, participants, trends, market size and share

Registration and skills of hoisting machinery command examination in 2022

一文搞定垃圾回收器

openresty ngx_ Lua regular expression

Multi sensor fusion of imu/ optical mouse / wheel encoder (nonlinear Kalman filter)

Déterminer si un arbre binaire est un arbre binaire complet

2022 G3 boiler water treatment simulation examination and G3 boiler water treatment simulation examination question bank