当前位置:网站首页>Vins technical route and code explanation

Vins technical route and code explanation

2022-06-12 08:01:00 【Wangshuailpp】

VINS technology roadmap

Write it at the front : This article integrates my own ideas , Hope for learning VINS perhaps VIO My classmates are helpful , If you think the article is a little helpful to your understanding , It can be recommended to the friends around , Of course , If you have any questions you want to communicate , Welcome to discuss at any time . Don't talk much , Text below .

VINS Code address :https://github.com/HKUST-Aerial-Robotics/VINS-Mono https://github.com/HKUST-Aerial-Robotics/VINS-Mono

https://github.com/HKUST-Aerial-Robotics/VINS-Mono

Reference documents :1.VINS-Mono A Robust and Versatile Monocular Visual-Inertial State Estimator

2.Quaternion kinematics for the error-state Kalman filter

New content :

State estimation and Kalman filter understanding and analysis

If you want to understand a piece of content alone , See the separate discussion section , Same content :

VINS Detailed explanation of theory and code 0—— Theoretical basis vernacular

VINS Detailed explanation of theory and code 1—— Framework analysis

VINS Detailed explanation of theory and code 2—— Monocular visual tracking

VINS Detailed explanation of theory and code 4—— initialization

It's been sorted out recently ORB_SLAM2 Theoretical analysis of tight coupling of visual inertia , If you want to know, you can click on the following connection :

ORB_SLAM2 Detailed explanation of visual inertial tight coupling positioning algorithm

If you just want to know ORB_SLAM2 Theoretical analysis of tight coupling of visual inertia A piece of words , See the separate discussion section , The content is the same :

Some of the key mathematical knowledge often mentioned in these blogs is summarized below , Update at any time. Welcome to read and correct :

VINS The code is mainly contained in two files , Namely feature_tracker and vins_estimate,feature_tracker Just like the name of the file , The overall function is to receive images , Use KLT Optical flow algorithm ;vins_estimate Including camera and IMU Front end preprocessing of data ( That is, the pre integration process )、 Monocular inertial joint initialization ( Online calibration process )、 Based on sliding window BA Joint optimization 、 Global graph optimization and loop detection . To really understand a SLAM frame , We must really understand the corresponding algorithm model , Then we can study its code logic , Finally, achieve the effect of complementing each other , Therefore, this explanation is mainly based on the theoretical knowledge in the paper and the codes in the two documents . The overall framework is familiar , As shown in the figure below , The first part is Measuremen Preprocessing: Observation data preprocessing , Contains image data tracking IMU Data pre integration ; The second part is Initialization: initialization , Including simple visual initialization and visual inertia joint initialization ; The third part Local Visual-Inertia BA and Relocalization: Local BA Joint optimization and relocation , Contains a sliding window based BA Optimization model ; The fourth part Global Pose Graph Optimization: Global graph optimization , Only the global pose is optimized ; The fifth part Loop detection: Loop back detection .

One .Feature_tracker In the folder

Let's start with the first part , That is, pure image processing , In the second part of the paper IV Pretreatment of point observations A Some visual front-end processing , In order to better understand the code , It is necessary to discuss the relevant contents of the paper with you .

The content of the paper is : Whenever you enter a new image , Will use KLT Sparse optical flow method for tracking , Simultaneous extraction 100-300 Corner information , My understanding is that corners are used to create images , Optical flow tracking is used to quickly locate . At the same time, the key frame is also selected here , There are mainly two strategies to eliminate key frames , They are the average parallax method and the tracking quality method . Average parallax method : If the average parallax of the current frame and the previous keyframe tracking point exceeds a set threshold , Set the current frame as the keyframe . Here's a question , It's that both rotation and translation produce parallax ( Not just translation ), When pure rotation occurs, the feature points cannot be triangulated , Can't calculate the rotation value , It is impossible to calculate the average parallax between the tracking points , To solve this problem , Short time gyro observations are used to compensate for rotation , So we can calculate the parallax , This process only applies to the calculation of the average parallax , It doesn't affect the actual rotation results .

Specific code implementation : Mainly responsible for image corner extraction and optical flow tracking , Only one main thread . Mainly three source programs , Namely feature_tracker、feature_tracker_node as well as parameters.feature_tracker_node It is the system entry of feature tracking thread ,feature_tracker Is the specific implementation of feature tracking algorithm ,parameters It is the reading and storage of equipment and other parameters .

1. feature_tracker_node.cpp System entrance

(1) main() function

step 1:readParameters(n); Read parameters , yes config->euroc->euroc_config.yaml Some configuration parameters in .

step 2: trackerData[i].readIntrinsicParameter(CAM_NAMES[i]); ad locum NUM_OF_CAM Set to constant 1, Only one camera ( Monocular ), Read camera internal parameters .

step 3: Judge whether to add fish eyes mask To remove edge noise

step 4: ros::Subscriber sub_img = n.subscribe(IMAGE_TOPIC, 100,img_callback); Subscribe to topics and publish topics , monitor IMAGE_TOPIC(/cam0/image_raw), When images are posted on this topic , Execute callback function , Here you go straight to img_callback Function to receive an image , The algorithm of front-end vision is basically in this callback function .

1) img_callback(const sensor_msgs::ImageConstPtr &img_msg) Receive image

step 1: Frequency control , Ensure that every second is processed image Not more than FREQ, Here, the average rate is controlled at 10hz within .

step 2: Deal with monocular cameras

step 2.1: trackerData[i].readImage(ptr->image.rowRange(ROW * i, ROW *(i + 1))); The read image data is stored in trackerData in , After reading, if the image is too bright or too dark (EQUALIZE=1), Use createCLAHE Image adaptive histogram equalization , If the image is normal , Set to current image . Optical flow tracking and feature point extraction are carried out when reading the image .FeatureTracker The main functions handled in the class are readImage(), This involves 3 individual img(prev_img, cur_img, forw_img) and pts(prev_pts,cur_pts, forw_pts), The two are similar . It was not easy to understand at first ,cur and forw Namely LK Two frames before and after optical flow tracking ,forw It's real “ At present ” frame ,cur It's actually the last frame , and prev Is the last published frame , It is actually after optical flow tracking ,prev and forw according to Fundamental Matrix do RANSAC To eliminate outlier With , That is to say rejectWithF() function . readImage() The processing flow of is :

① First call cv::CLAHE Histogram equalization of the image ( If EQUALIZE=1, It means too bright or too dark )

PS:CLAHE Is a histogram equalization algorithm , Can effectively enhance or improve the image ( Local ) Contrast , Thus, more image related edge information is obtained, which is conducive to segmentation , For example, in the spine cutting of the bookshelf recognition system , Use CLAHE Compared with the traditional histogram enhancement method, it can achieve a better effect of enhancing the straight line of the spine boundary , Thus, it is beneficial to the subsequent detection and extraction of the spine boundary line . It can also effectively improve AHE The problem of amplifying noise in , Although it is not widely used in practice , But the effect is really good .

② call calcOpticalFlowPyrLK() track cur_pts To forw_pts, according to status, Eliminate the points of tracking failure ( Be careful :prev, cur,forw, ids, track_cnt All must be eliminated ), There is also a inBorder Judge , The points traced to the edge of the image are also eliminated .

③ If you don't need to publish feature points , Then it's over , Put the current frame forw Assign to the previous frame cur, And then quit . If you need to publish feature points (PUB_THIS_FRAME=1), Then perform the following steps

④ First call rejectWithF() Yes prev_pts and forw_pts do ransac To eliminate outlier.( It actually calls findFundamentalMat function ), When the optical flow tracking is successful, remember to be tracked +1, The number represents the number of times tracked , The greater the numerical , That means the longer it's tracked

⑤ call setMask(), Start with the tracking point forw_pts Sort by tracking times , Then choose points in turn , Choose a point , stay mask Set the area with a certain radius around the point as 0, The points in this area will not be selected later . It's a bit like non-max suppression, But the difference is that it retains track_cnt Highest point .

⑥ stay mask Not in 0 Region , call goodFeaturesToTrack Extract new corners n_pts, adopt addPoints() function push To forw_pts in , id initialization -1,track_cnt Initialize to 1.

On the whole, it should be noted that : Optical flow tracking in ② Finish in , Corner extraction in ⑥ Finish in

step 2.2: Determine whether to display distortion .

step 2.3: Correct the feature points ( Camera model camodocal) Of the post normalized plane 3D spot ( There is no scale information at this time ,3D spot p.z=1), Pixels 2D spot , And the characteristics of id, Encapsulated into ros Of sensor_msgs::PointCloud Of message type feature_points In the example ; Encapsulate the image into cv_bridge::CvImageConstPtr Type of ptr In the example

step 3: Data for publishing messages

pub_img.publish(feature_points);

pub_match.publish(ptr->toImageMsg())

Use the processed image information as PointCloud example feature_points and Image Example ptr Message type , Publish to "feature" and "feature_img" Of topic( This step is in main Done in the function )

thus , The image data has been packaged into feature point data and image data and released , The following is to open a thread , Post a topic , Receive both messages , That's what's down here vins_esitimate What is done in the document .

The following is the specific flow chart :

Two .Vins_estimate In the folder

Let's move on to the second part , It is also the focus and difficulty of the whole framework , It is estimated that it will take a long time to learn and sum up theoretical knowledge and code logic , This part starts from the theoretical knowledge of the paper , Then attach the code to digest and understand .

The content of the paper is :

1. Paper No IV Dot B part IMU Preintegration ,

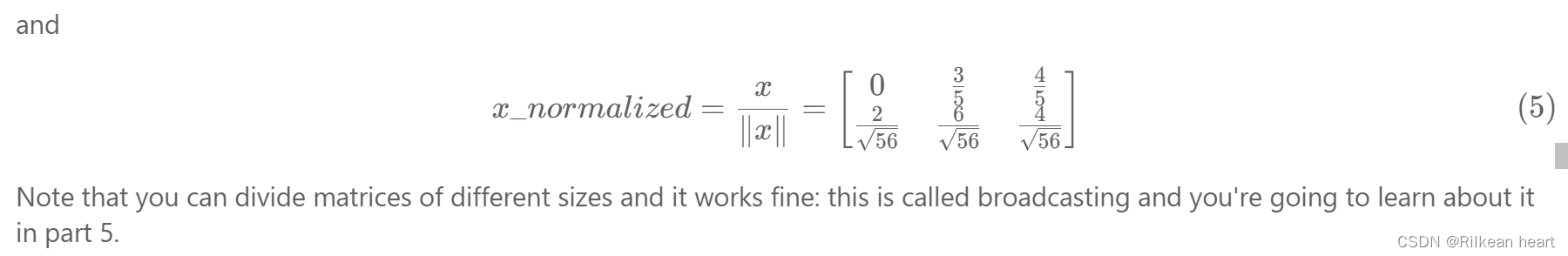

IMU The function of pre integration is to calculate IMU Observations of data ( Namely IMU The premixed value ) And the covariance matrix and Jacobian matrix of the residuals , Then we should clearly understand why we should calculate these three quantities ? Calculate why these three quantities can be coupled with visual observations ? If you can't answer now , Please think about what you have learned before , These three quantities about vision , Visual observations are used to calculate residuals ( That's the error ), Jacobian matrix of residual error is the descending direction in optimization ( That's the gradient ), Rarely mentioned covariance matrix ( But it's important. ) In fact, it is the weight corresponding to the observed value ( Because there are many observations ), Is it clear now ? For specific use , These three quantities Provide initial values for subsequent joint initialization and backend optimization IMU The constraints of . Raw gyro and accelerometer observation data :

On the left side of the first equation is the measured acceleration ( The value you can read from the accelerometer , The data with angle brackets is the data with errors ), To the right of the equation is the true value of the acceleration ( In fact, it is the exact value , What we need to get is the true value ) Plus the accelerometer offset 、 Acceleration of gravity ( Notice that in world coordinates ) And acceleration noise . The left side of the second equation is the measured value of the gyroscope , On the right side of the equation is the true value of the gyroscope plus the gyroscope bias and gyroscope noise terms . The values here are all IMU(body) Frame coordinate system . It is assumed that the noise obeys Gaussian normal distribution , The offset follows the random walk model .

With the most primitive formula above, we can calculate the next time p、v and q:

The superscript of the state quantity represents the coordinate system , Which frame of data is the subscript . The values on the left side of the equation are all in the world coordinate system (W)bk+1 The value of the frame . It is necessary to explain the derivation of the formula ,P and V The formula is simple ,q The formula of needs to be explained , In general q_w_bk+1=q_w_bk*q_bk_bk+1, Note that the multiplication here is the multiplication of quaternions , It is different from the direct multiplication of general rotation matrix , and q_bk_bk+1 That's the integral formula , Integral can be understood as q_bk_t*q_t_t1*q_t1_t2..., Alone q_bk_t*q_t_t1, Transform the multiplication of quaternions into general multiplication , The paper here uses the right multiplication rule of quaternions , take q_bk_t*q_t_t1 Transformed into 1/2*Ω(w)*dt*q_bk_t.q The formula is as follows , Formula ()R It's Hamilton right

From the whole equation, we can see the state propagation here need bk Rotation under frame , Translation and speed , When these initial states change , It needs to be redistributed IMU Observed value , That is to say, the state propagation equation should be recalculated and modified ( And in the slam In the optimization process ,bk Rotation under frame , Translation and speed are the state variables of real-time optimization update ). We want to find out at one time bk and bk+1 State propagation between , Therefore, the pre integration model is selected ( The foreign leader who first proposed pre integration was to convert the world coordinate system to calculate the change of state , In fact, the principle of both is the same , The value of the preintegration is the variation ), Multiply both sides by the world to bk Transformation of frame coordinate system , As shown in the figure below , Then, it is proposed that the right side of the equation only integrates the quantities related to acceleration and angular velocity , As formula 6:

IMU The real significance of the predicted score lies in that the result is a short period of time (tk~tk+1)IMU True rotation , Changes in displacement and velocity , and IMU The amount of change in a short time is very accurate . Here it is Just find out The formula 6 Integral value in , The value of the real preintegration is obtained , here bk Is the reference frame , Slave mode 6 It can be seen that , stay bk To bk+1 Between frames , The three estimated sub state quantities required here are only related to IMU Is related to the offset state quantity , Not related to other states ,( The state quantity is the quantity we have been optimizing , It's going to change all the time ), because IMU Of bias Will always be revised , So the pre integration above will continue to be re integrated ( It's troublesome , It's also time-consuming ), So we hope bias When it changes , We can simply recalculate the real pre integral , There is no need to repeat the integral to calculate the pre integral . Our approach is , First, according to the currently known bias Through the formula (6) Calculate the current pre integral , When the offset change here is particularly small , We can use the first-order linear expansion to adjust the preintegration , It is mentioned here that a Jacobian matrix is a first-order partial derivative ( I think everyone should know ), The following formula 12 Shown :

So to find this temporary state quantity , You have to find the two values on the right side of the equation , The first part is the original integral form ( Like a formula 6 like that ), Is the subject of the pre integral , The simplest Euler integral method is used in this paper ( Take the first place i The slope of the time value times the time difference plus i The initial value of time , You get i+1 The value of the moment ), But in the code, the author also proposed to use the median integral ( As the name suggests, the slope here is i and i+1 Midpoint (2i+1)/2 The time slope of ). The formula 7 Is the result of using Euler integral . There is a certain explanation in the front here , In limine abkbk,bbkbk Wait is zero , Rotation is unit rotation , Note that the noise is set to 0.

The second part is actually the corresponding first-order partial derivative ( For accelerometer bias and gyroscope bias ), The solution of the first-order partial derivative is introduced below , So far, we have calculated the measured value of the temporary state quantity , Then the measured value of the state quantity can be obtained .

At this point in the paper, the pre integration is already half done , That is, the solution of the measured value is completed , What's the difference ? Covariance matrix, of course , Let's focus on solving the covariance matrix , By the way, find out the Jacobian matrix of the gyro and accelerometer offset that are not found above .

How to find the covariance matrix ? How to find it from the definition of Mathematics ? We need to use SLAM Divine works in state estimation for robotic, Build a Linear Gaussian error state propagation equation , By the covariance of linear Gauss system , The covariance matrix of the equation can be derived , That is, the covariance matrix of the measurement state . That is to say, the previous formula for solving the measured value of the state is still needed 6. Notice the actual solution formula in the code 6 The median method is used , So in order to be consistent with the code , In the following solution process, I also use the median method , In order to solve the demand, we first add some dry goods :

First, we need to convert the rotation of the above four degrees of freedom into a three-dimensional state quantity , This is because the rotation of four degrees of freedom has been parameterized , Over parameterization will require the introduction of additional constraints ( Unit quaternion properties ), Therefore, the error is regarded as a disturbance definition 8:

Then the following two graphs define the pre integration process in the discrete state :

Then the following two graphs define the pre integration process in the discrete state :

Finally, the linear error state propagation model in the graph is obtained , From this we can get IMU The covariance matrix and Jacobian matrix of the pre integrated measurements , The Jacobian matrix of pre integration is directly substituted into the formula 12 More accurate propagation state values are calculated in , The covariance matrix is naturally used in back-end optimization .

It should be noted that the Jacobian matrix obtained above is the Jacobian of the pre product score , We also need to ask for one IMU Jacobian matrix of residuals ,WHAT? There are also two Jacobian matrices , No surprise, no surprise ? But this is true , So don't get up from bed and continue to play with the formula . The Jacobian matrix obtained above is used to calculate the pre integral value , The following requirements IMU Jacobian matrix of the residuals is a descending gradient in tight coupling , As mentioned earlier . Before Jacobi of the residuals , Let's talk about how the residual is calculated , The pre integration is equivalent to the measured value ( It's really worth , Because there is no more accurate value than this , Of course it's worth it ), The state to be estimated is the estimated value , So the pre integration measurement minus the state estimation is the residual , The need for estimation will be mentioned later IMU The estimates are p,v,q,ba,bg.P and q The initial value of the estimated value is better ( Visual related , You can directly use the initial value of vision ), and v,ba,bg The initial values of the estimated values of these three quantities are difficult to obtain , Because vision does not have these three initial quantities , We will use the following joint initialization to get the initial three quantities . Let's go directly to the residual formula and the state quantity to be optimized :

The residual Jacobian matrix to be solved is the first-order partial derivative of the residual to the estimated state quantity , The residual vector has three , The state vector has 2*5=10 individual . So we need to calculate the first-order partial derivative of the residual vector to the state vector . First of all, it should be pointed out that it is complicated to calculate the partial derivative for the offset , Therefore, the first-order Taylor expansion is used to calculate the pre integration , This is relatively simple . That is, the formula in the paper (12).

We need to explain why there is a coefficient in front of the error of the rotation vector 2, There is a relation between quaternion and rotation vector 2 The relation of times

(1) Jacobian matrix of rotating quaternion residuals

It can be seen that the rotating quaternion residual contains only qi,qj,big These three variables , That is, the partial derivative of a function of three variables .

(2) Jacobian matrix of velocity residuals

To be continued , In fact, you can deduce it yourself .

(3) Jacobian matrix of displacement residuals

To be continued , In fact, you can push it yourself .

Congratulations on completing all the steps of data front-end processing , Let's go directly to the initialization process !

2. Pure vision monocular initialization based on sliding window

Before introducing pure visual initialization, let's first talk about why to initialize ? What to do in initialization ? And initialization ? The reason for our initialization is that the monocular inertial close coupled system is a highly nonlinear system , First of all, monocular is unable to obtain the absolute scale in space , and IMU And there must be bias , The gravitational acceleration is also needed in the later solution ( Including size and direction ), Under speed sensitive conditions , Like drones , And accurate speed information , therefore , How to effectively calculate these quantities before processing the tightly coupled system , It is of great significance to the robustness of the whole tightly coupled system ( In fact, it can be understood as camera calibration , The internal parameters of the camera are not calibrated correctly , The camera must be inaccurate when positioning , And it's likely to hang up ). So the initialization is actually very simple , Is to calculate the absolute scale s、 Gyroscope bias bg、 Acceleration offset ba、 Acceleration of gravity G And each IMU The speed of the moment v,VINS It is emphasized that the accelerometer offset value is generally coupled with the gravitational acceleration ( That is, it is absorbed by the acceleration of gravity ), The magnitude of gravitational acceleration is much larger than its acceleration offset , And the accelerometer offset is relatively small in the initialization time , It's hard to really calculate , Therefore, the effect of accelerometer bias is ignored , No more calculations during initialization . The function of initialization is self-evident , It directly affects the robustness and positioning accuracy of the whole tightly coupled system , And initialization usually takes a long time ,VINS It will take about ten seconds ,ORB_SLAM2 combination IMU The time is set at 15 Seconds to complete initialization . Don't talk much , Go straight to the point .

Pure visual initialization in the V Dot A part , First build a sliding window , Contains a set of data frames . The use of antipolar geometry model is mentioned in the paper 5 The point method is used to solve the relative transformation of monocular camera , Including relative rotation and displacement without scale information . In fact, each monocular model basically uses epipolar geometry to solve the relative transformation of two frames in initialization , It should be noted that rotation is scale invariant ( It's actually unit rotation , There will be no scale information , Think about it carefully ?), As for why monocular has no scale information , I want to say a long word , But in fact, many people learn monocular vision SLAM None of them really understand , The source of monocular vision without scale is the polar geometry between the first two frames , To be more specific is to ask F/E perhaps H It needs to be lowered to a lower parameter ,F from 6 Dimension down to 5 dimension ,H from 9 Dimension down to 8 dimension , The dimension here is the scale , And it must be lowered . Then triangulate to get the corresponding 3d Point coordinates , There are these 3d Points and other frames in the sliding window 2d Click to proceed PNP Solve to obtain all the pose and feature points in the sliding window 3d coordinate , thus , Visual initialization is complete . Is it simple ? Of course. , After all, it's just a simple visual initialization , What is really complicated is the visual inertia joint initialization , That is, the focus and difficulty of our initialization , So the following knowledge points must be spiritually learned !

3. Visual inertia joint initialization

Visual inertia joint initialization at V Dot B part , The name of the definition given by the author here is Visual-Inertia Alignment, That is, visual inertia joint initialization ( And in the ORBSLAM2+IMU In my paper , The name defined by the author is IMU initialization, namely IMU initialization ), Why define such a noun , I think it has two meanings , First, when initializing the gyro offset, it is necessary to use IMU Rotation of measurement and rotation of visual measurement , That is to combine visual and inertial data . Second, the scale obtained here S The value of is more than IMU Of , Or visual and IMU The scale of the whole system . During the specific explanation of each initialization process , It is necessary to make a general summary , The physical definition of initialization is actually the calibration of intrinsic parameters , The definition in the mathematical model is actually a formula (6) Solution of matrix equation , And the formula (6) In fact, it comes from the most primitive PVQ The integral formula , among Q The rotation corresponds to the gyroscope , and PV Corresponding to the accelerometer , If you don't understand , It doesn't matter , After reading the following overall derivation process, I believe that you will be enlightened if you are smart .

(1) Gyro bias calibration

Rotation can be obtained in two ways , One is the measured value of gyroscope , One is visual observation . According to the normal understanding, the two must be equal in size ( Assume no error ), But there must be some errors in the actual situation , Let's look at the respective errors . The error of gyroscope has two parts: measurement noise and gyroscope bias , The noise can be ignored for the time being ( After all, it is too small ), The only visual error is observation noise ( It can also be ignored ), Therefore, the absolute value of the difference between the two is the gyro offset , All the rotations of the entire sliding window are adjusted to form a minimum error model :

The formula 15 If the first term and the second term of the first expression in are the generalized product of quaternion rotation, we can get bk+1 Camera in coordinate system from bk+1 To bk Relative rotation under ( relative bk+1), The third is bk The gyroscope in the coordinate system starts from b To bk k+1 Relative rotation under ( relative bk), The two are doing generalized product , It's from bk To bk+1 rotate , And then from bk+1 To bk rotate , It is equivalent to doing badly (OA+AO=0), The second formula is the first-order linear approximation mentioned above . Then take the least square , You can also use it SVD Decomposition and other methods . Note that after the gyro offset is obtained, the gyro offset must be substituted into the pre integration again to calculate the value of the pre integration again , Will be more accurate .

(2) Speed 、 Gravitational acceleration and scaling

Here, the author unifies the three state quantities into a state vector , As formula 16 Shown :

The speed is at bk In a coordinate system , The gravitational acceleration is in the initial camera coordinate system , As mentioned earlier , These quantities are solved by P、V The mathematical model is obtained , Consider two consecutive keyframes in a sliding window bk and bk+1, The following is the formula in the paper 17 and 19 The derivation of :

After the derivation of the formula, we will get the formula in the paper 17、18 and 19, Let's focus on why this is the case , And the derived equation of motion . First of all, why should we make such a derivation , It all depends on how the state vector is defined , The left side of the equation form we finally get must be expressed in the form of state vector , But also to meet other quantities are known ( from IMU Pre integration and visual tracking result in ), So we need to change the equation like this , To satisfy such a relationship . Then there is the final form. We can see that the final formal dimension of the state vector is (n+1)*3+3+1, The dimension of the motion equation generated by two consecutive frames is 3+3+3+1(vbkbk,vbk+1bk+1,gc0,s),gc0 Is the acceleration of gravity in the first camera coordinate system , The rest is to solve the least square problem , In this paper, the fast Cholesky decompose . If you don't understand how to solve this kind of equation with least squares, you can turn to my other blog , I hope I can help you :

(3) Gravity optimization

In fact, the magnitude and direction of gravitational acceleration have been obtained above , Why do we need to optimize gravity here ? The reason is simple , The gravity calculated here absorbs the bias of the gravitational accelerometer , Although it is not necessary to calculate the bias of the gravity accelerometer , But gravity still needs to be optimized , When it comes to optimizing gravitational acceleration , It must contain two quantities , Size and direction , That is, three dimensions , But in general, the size is known ( Set here as 9.8), So what we need to do is to optimize the direction , Is a two-dimensional vector , The following figure shows how to optimize gravity and b1,b2 The direction of the unit vector determines the model . Tangent plane optimization is suitable for fine adjustment of gravity acceleration , And just fine tune the direction .

4. Monocular vision tightly coupled back-end optimization model based on sliding window

Finally, we have talked about the real vision inertial tight coupling system ,( Insert a sentence here , It can be regarded as writing a blog and learning a SLAM Systematic experience , At the beginning, we may have a winning mentality to conquer all the knowledge points and difficulties , But in the middle of the process , There will always be more or less problems , Hindering our progress , You may want to relax your vigilance , I don't want to go into the specific formula and content , Eager to see what the end is , But I want to remind you , Including myself , The best and most valuable thing is always the process , It is the experience learned in the process that can be used for a lifetime , Just blindly rush to the end , And forget the process , You will eventually find that all you get is disappointment . If you don't want to be disappointed , Then keep up the good work , Keep cheering yourself on !) This is the focus of the whole system , All the above mentioned are actually loosely coupled , The purpose is to provide optimized initial value and state for the whole system . The tight coupling optimization model of visual inertia is presented in the VI part , The tight coupling system I understand is an effective combination of the original visual and inertial measurements , That is, data fusion before data processing ,VINS Is to integrate the state quantity in the sliding window into a state vector , As formula 21 Shown :

The first expression is the entire state vector in the sliding window , among n It's the number of frames ,m Is the total number of feature points in the sliding window , Dimension is 15*n+6+m, The second formula xk In the first k Frame image captured IMU state , Including posture , Speed , rotate , Accelerometer and gyroscope offset , The third formula is the camera external parameter ,λ Is the inverse of the depth of the feature point ( You will ask , Ask why only the inverse of the depth value of the characteristic point is used here , That is, the inverse depth , It mainly considers the stability and satisfaction of Gaussian systems , If you don't know, you can refer to Gao Bo's fourteen lectures ). From the state vector, we can see xk Only with IMU Items and Marginalization of , The depth value of the feature point is only related to camera and Marginalization of , So let's create BA The tight coupling model is based on this idea , Let's create a visual inertia BA Optimization model and robust kernel function model :

It is obvious from the formula that ,BA The optimization model is divided into three parts , Namely Marginalization( marginalized ) The residual part ( Remove the constraints of pose and feature points from the sliding window ),IMU The residual part ( Between adjacent frames in a sliding window IMU produce ) And visual cost error function ( The projection of feature points in the sliding window under the camera ), The robust kernel function is set for the cost function ( Please refer to vision for specific functions SLAM Fourteen speak ), The function of robust kernel function is to remove outlier, Visual residuals use robust kernels , and imu Residuals are not used , This is because IMU The data doesn't exist outlier. Here are three parts .

(1) IMU Residual of observed value

Consider two consecutive frames bk and bk+1 Between observations , Same as the previous pre integration model , As formula 24 Shown , Remember when IMU Do you get the covariance matrix and the observed value when pre integrating ? The observed value obtained there is the measured value , The covariance matrix is the covariance matrix here , And the formula 24 The preceding item is the predicted value , Also called an estimate , The difference between the estimated value and the measured value is the residual , In fact, the formula here 24 Is by the formula 5 Move the right side of to the left to get , Did you find out ?

(2) Visual observation residual

It is different from the re projection error defined by the traditional pinhole camera model , In this paper, we use the camera observation residual of the unit hemisphere , Is a wide field of view fish eye or omni-directional camera . The residual model of the camera is as follows 25 Shown :

The first expression is the expression of the residual , The second formula is that the back projection function of the fish eye camera converts the observed pixel coordinates into the observed value data of the unit vector ,b1 and b2 Is a set of bases on the tangent plane of this unit vector . The third formula is the re projection estimation model . Actually VINS Common pinhole camera models can also be used in the code . Here is a detail , I think it's quite important , The formula of visual residual is to normalize the error in the plane coordinates of the camera ( Instead of using pixel errors directly , Did you find out ?), The main reason is accuracy , The scale of a pixel is one pixel ( That is to say, the minimum error is 1 Pixels change ), There are no specific restrictions on the calibration of the normalized camera plane , Higher accuracy .

(3) Marginalization

This part draws lessons from blogs :http://blog.csdn.net/q597967420/article/details/76099443

sliding windowsbounding The boundary of the optimization problem pose The number of , To prevent pose And the number of features increases with time , The optimization problem is always in a limited complexity , Will not grow over time . However , take pose Removed from the windows when , Some constraints will be discarded , This will inevitably lead to a decline in the accuracy of the solution , And when MAV Do some degenerative movements ( Such as : Move at a constant speed ) when , Without the constraint of historical information, it can not be solved . therefore , When moving out of a pose or feature , The associated constraint needs to be transformed into a constraint item as prior Put it in the optimization problem . This is it. marginalization What to do .

VINS-MONO in , To handle some hovering case, Introduced a two-way marginalization, In a nutshell : If the penultimate frame is a keyframe , The oldest pose Removed from the sliding window, Edge out the visual and inertial data associated with the oldest frame , That is to say MARGIN_OLD, As part of a priori value , If the penultimate frame is not a key frame , The penultimate frame pose Removed from the sliding window, Discard the visual observations of the penultimate frame , Keep the associated IMU data , That is to say MARGIN_NEW. The key frame selection strategy is that the parallax is large enough , In the case of small motion such as hovering , It will happen frequently MARGIN_NEW, In this way, the old ones with large parallax are preserved pose. If this situation continues MARGIN_OLD Words , Visual constraint is not strong enough , State estimation will be affected by IMU Integration error affects , With large cumulative error .

The marginal part can be understood as a priori of the system , That is, the constraint before sliding the window , There will be more and more constraints in this part , The dimension and time-consuming of the matrix will become larger and larger .

Here, the whole VINS The theoretical framework is basically introduced , For later repositioning , Global pose optimization and loop detection will be discussed later , Now is there a clear idea ? If you are still confused , Then hurry to the following code practice link . Let's analyze it from the perspective of code VINS The whole second part of .

(4) Monocular visual Jacobian calculation ( Refer to Mr. He )

Vision SLAM The fourth of the fourteen lectures 162 It's mentioned on the page , In the space coordinate system 3D Point projection to the pixel coordinate system to do the least square , The Jacobian matrix of the least square model can be obtained by Gauss Newton or LM The descending gradient of the method , So as to optimize the posture and road markings , Please refer to the book for details . It should be noted that VINS There are three differences between the calculation of visual Jacobi and the book :

①VINS Zhongyou feature_tracker The transmitted pixel coordinates absorb the internal parameters K And normalized , Therefore, it is the normalized image coordinate of absorbing internal parameters .

②VINS Use in Q Rotate quaternions to optimize pose , Therefore, when calculating the Jacobian matrix corresponding to the pose, it is not exactly the same as the formula in the book .

③VINS Is based on sliding windows , The optimized pose contains... In the sliding window 11 Frame pose , But at the same time, it depends on feature points to determine which two frames are optimized , The visual residual model is determined by traversing the feature points each time , Set up i The frame is the camera that first observed the secondary feature points , be j( It's not equal to i) For other common view keyframes .

4.1 Vision j Frame residual calculation ( In fact, it can also be calculated i Frame residual , The process is basically similar )

Understanding the above three differences, we can deduce the following formula , First, the following figure shows the residual calculation model ,VINS Selection is j Frame image residual calculation model .

4.2 The whole Jacobian formula

Passed to the above j The optimized state quantities of the frame visual residual model are 7 Dimensional i Frame pose ,7 Dimensional j Frame pose ,7 Dimension's camera and IMU External parameters and 1 Is the inverse depth of the feature point of . Therefore, the Jacob to be calculated is shown in the figure below :

VINS The reference coordinates of all results of the system are world coordinates , At the same time, they all correspond to IMU The rotation of time in the world coordinate system , translation , Speed etc. , And the value of the inverse depth is relative to i The camera of the moment , So we need to tidy up 4.1 Got i The key frame of time corresponds to j Normalized camera coordinates at time Pj The re projection equation of is shown in the figure below :( What we need to ask for is Pj about i、j Time keyframes , Camera external participation i The inverse depth of time , So we need to construct such a re projection equation , To satisfy the partial derivative solution )

From the final formula we can see Pj from i,j The posture of the moment ( Rotation and translation ), Camera external parameters ( Rotation and translation ) And inverse depth representation , Now we can find Jacoby directly ( Partial derivative ).

4.3 Vision i Frame state quantity ( Displacement and rotation ) stay Pj Jacoby under

Here and visually SLAM There are discrepancies in the fourteenth lecture , But the solution principle is basically the same , The properties of rotating quaternion and difference multiplication are used . After we get the following partial derivatives, the whole J[0] You can multiply them to get .

4.4 Vision j Frame state quantity 、 Camera and IMU Between external parameters ( Displacement and rotation ) stay Pj Jacoby under

The Jacobi derivation process here is not repeated , Similar to the above process , Just give the result

4.6 Feature point your depth is Pj Jacoby under

It is easier to find partial derivative by inverse depth , It is a process of finding partial derivatives of inverse functions , After we get the following partial derivatives, the whole J[3] You can multiply them to get .

thus , The whole visual residual based on sliding window and Jacobi model are completed . Its covariance matrix is relatively simple , Directly assign values in the code , There is no need to calculate .

The system entrance of the second part is estimator_node.cpp,( The overall introduction remains to be continued )

1. estimator_node.cpp System entrance

Initialize the settings node first vins_estimator, Read parameters and set corresponding parameters at the same time , Publish corresponding topics for the nodes , Subscribe to three topics for the node , Respectively used to receive and save IMU data 、 Image feature data and original image data , In the three callback functions imu_callback、feature_callback and raw_image_callback, Whenever the subscribed node is sent by data, it will enter the corresponding callback function .

(1) receive IMU data

imu_callback Function first executes imu_buf.push(imu_msg); take IMU The data is saved to imu_buf in , At the same time con.notify_one(); Wake up acts on process The function in the thread to obtain the observation data , Here, the role of wake-up and mutex is very important. We will discuss it in detail when we really want to use it , And then predict the p、v、q value , At the same time, the latest IMU Measured value message (pvq value ), Calculated here pvq It's an estimate , Note that there is no observed noise and bias , The action is calculated with the following pre integration pvq( The observation noise and bias are considered ) Do the error to get the residual error .

(2) Receive the original image and image feature point data

feature_callback and raw_image_callback The function mainly saves the feature data and the original image data to feature_buf and image_buf in , stay feature_callback Also used. con.notify_one() And the mutex ;.

2. process() Thread processing observation data

(1) Get the observations (IMU Data and image feature point data )

Define the observation data class measurements, Contains a set of IMU A container for the combination of data and one frame of image data , What is interesting here is the use of mutexes and conditional waiting , Mutexes are used to lock the current code segment , Conditional waiting is to wait for the completion of the above two received data and be awakened , And then from imu_buf and feature_buf Extract observation data from measurements = getMeasurements(), It should be noted that the mutex used in extracting the observed data will be locked imu_buf and feature_buf Wait until the extraction is complete before releasing , That is to say, the above two callback functions cannot receive data during the extraction process , At the same time, the above two callback functions also use mutual exclusion when receiving data , lock imu_buf and feature_buf, Nor can it be extracted here imu_buf and feature_buf Data in . So the whole data acquisition process is : The callback function receives data , After receiving a group of data, wake up the thread that extracts the data , After the data extraction thread finishes extracting the data , The callback function can continue to receive data , In turn . This is the beauty of inter thread communication !

1) getMeasurements() Return observation data

The function works as the name suggests , Is to get a group IMU A container for combining data and image feature data . First of all, ensure the existence of IMU Data and image feature data , Then it is necessary to judge the image feature data and IMU Is the data aligned . The queue data structure is used here ( fifo front Is advanced data ,back It is backward data ), Two conditions need to be met to ensure data alignment , The first is the IMU The time of the last data is greater than the time of the first data of the image feature , The second is IMU The time of the first data is less than that of the image feature . If the data alignment is satisfied, the data can be taken out of the queue in an aligned manner . Here we know that the image feature data in the cache or IMU Data fetched , Can jump out of this function , Return the data .

(2) Handle IMU Data and image feature data

step 1: Handle IMU data

Traversal call send_imu(imu_msg) Will single IMU Data dt, The linear acceleration value and angular acceleration value are calculated and sent to the optimizer for processing , The optimizer calls estimator.processIMU(dt, Vector3d(dx, dy, dz), Vector3d(rx, ry,rz)); Method .

1)Estimator::processIMU(doubledt, const Vector3d &linear_acceleration, const Vector3d&angular_velocity) Handle IMU Data method

step 1 call imu Pre integration of , call push_back function , The time in the function , The acceleration and angular velocity are stored in the corresponding cache respectively , At the same time called propagation function , Calculate the corresponding state quantity 、 Covariance and Jacobian matrix

①propagate(double _dt, const Eigen::Vector3d &_acc_1, constEigen::Vector3d &_gyr_1)

Preintegrated propagation equation , In the preintegrated propagation equation propagate The midpoint integral method is used in midPointIntegration Calculate the measured value of the pre integration , The midpoint integral method mainly consists of two parts , Respectively, get the state change quantity result_delta_q,result_delta_p,result_delta_v,result_linearized_ba,result_linearized_bg And get the new covariance matrix and Jacobian matrix ( Be careful , Although the Jacobian matrix and covariance matrix are obtained , However, the residual error and the state variable of the first-order offset term have not been calculated ), Because we use the midpoint integral , So we need the last moment IMU data , Including measured acceleration and angular velocity and state change , The initial value is provided by the constructor . It should be noted that what is defined here delta_p And so on are cumulative changes , That is to say, from i The amount of change from time to time , This is the final requirement ( To correct the offset first-order term ), and result_delta_q Wait is just a temporary variable , Final residual and Jacobian matrix 、 The covariance matrix is stored in pre_integrations in , There is another function that has not been used here for the time being , It is only called during optimization , But it belongs to the content of pre integration ,evaluate Function in this function, the offset first-order correction of the state change and the calculation of the residual are carried out .

step 2 Pre integration formula (3) Errors are not considered , Provide imu Calculated current rotation , Location , Speed , As the initial value of optimization

step 2: Processing image feature data

The data coming in here is not image data , Instead, we have tracked the matched normalized plane coordinates . Store the features of the current frame in image in ,image The first element type of is the number of the feature point , The second element is the camera number , The third is the feature point coordinates , Then it directly enters the thread of processing image feature data estimator.processImage(image, img_msg->header).

1)Estimator::processImage(constmap<int, vector<pair<int, Vector3d>>> &image, conststd_msgs::Header &header) Methods of processing image feature data

First, judge whether the incoming image feature data is a key frame according to the parallax , Select discard current frame ( But keep IMU data ) Or discard the oldest frame in the sliding window .

step 1: Save the image data and time into the image frame class : First, save the data and time to the object of the image frame imageframe in (ImageFrame The object contains feature points , Time , Postures R,t, Pre integration object pre_integration, Is it a keyframe ), At the same time, save the temporary pre product score to this object ( The initial value of the temporary pre integration here is just above IMU It's calculated during pre integration ), Then the object of the image frame imageframe Save to all_image_frame In the object (imageframe The container of ), Update the initial value of temporary pre integration .

step 2: Calibrate the camera and IMU External parameters of : Then, if there are no external parameters, calibrate the external parameters , If parameters are passed, skip this step ( The default is , If it's your own equipment , It can be set to 2 Online calibration of external parameters ).

step 3: Initialize the system at the same time BA Optimize : When the solver is in an initializable state ( The initial state is initializable , If the initialization succeeds, it will be set to the uninitialized state ), Judge the present frame_count Whether to achieve WINDOW_SIZE, Make sure there is enough frame Participate in initialization , there frame_count Is the number of image frames in the sliding window , Initially initialized to 0, The total number of sliding window frames is 10. There are external parameters and the current frame timestamp is greater than the initialization timestamp 0.1 second , Do the initialization operation .

step 3.1:initialStructure() System initialization , Initialize first Vision-only SFM, Then initialize Visual-Inertial Alignment, Constitute the entire initialization process .

① Guarantee IMU Get plenty of exercise , Judge by linear acceleration , The standard deviation of the linear acceleration at the beginning ( The degree of dispersion ) Judgment guarantees IMU Get plenty of exercise , The standard deviation of acceleration is greater than 0.25 Then represent imu Fully motivate , Enough to initialize .

② Pure visual initialization , Yes SlidingWindow Image frame and camera pose in sfm problem , The solution here is Keyframes The pose and feature point coordinates of .

step 1. First, build. SFMFeature object sfm_f,SFMFeature The array contains the status of the feature points ( Whether it is triangulated ),id,2d spot ,3d Coordinates and depth , Save the feature information in the feature manager to SFMFeature object sfm_f in sfm_f.push_back(tmp_feature).

step 2. Then, by the F The matrix is restored to R、t, The main call method relativePose(relative_R, relative_T, l).relativePose Method first through FeatureManeger obtain ( Slide in window ) The first i Feature matching between frame and last frame corres, When corres When the match is large enough , Review the latest keyFrame and slidingwindow One of them keyFrame There is enough feature Matching and parallax large enough (id by l=i), Meet these two conditions , And then, between these two frames, we can recover the R,t And triangulate out 3D Characteristics of feature point, Here is the use of solveRelativeRT(corres, relative_R, relative_T),solveRelativeRT Method defined in solv_5pts.cpp Class , By the F The matrix is restored to R、t, Call directly opencv The method in , There's nothing to say , Here's what's interesting , such relativePose The resulting pose is the l The frame of , The first l The filtering of frames is from the first frame to all frames in the sliding window that meet the requirement of a large average parallax at the beginning , Here's number l The frame will be used as a reference frame to the following global SFM Use . Here we have got the feature points of the image 2d Coordinate extraction , Camera No l Rotation and translation between frame and last frame ( Note that there are no features yet 3d Point coordinates ), With this information, you can build a global SFM class GlobalSFM sfm, Call it here sfm.construct(frame_count + 1, Q, T, l,relative_R, relative_T,sfm_f,sfm_tracked_points), Here is the first l Frame as reference frame , It's going on PNP Before we solve it , It is necessary to judge that the current number of frames should be greater than the number of l frame , This guarantees the second l Frame skip PNP step , First, execute the following step l Triangulation of frame and last frame , Get the characteristic points of the common view , For the following l+1 Frame and the last frame PNP, And then use it pnp solve l+1 Frame to the last frame R_initial,P_initial, The last pose is saved in Pose in , A loop , obtain l+1,l+2…n-1 Frame pose . Jump out step 2 After the cycle , So far we have l+1,l+2…n-1 Frame pose and l+1,l+2… Frame n-1 Triangulate the feature points of the frame . Then triangulate l The frame and i frame ( In the l Frame between frame and last frame ) Between 3d coordinate ,( I don't know why I have to do it twice , Is it possible to triangulate more feature points ????), next PNP solve l-1,l-2…0 The frame and l The pose between frames has been triangulated to the corresponding feature point coordinates , Finally triangulate all other feature points . So far, the pose of all cameras in the sliding window and the position of feature points are obtained 3d coordinate . The first 6 Department is to carry out BA Optimize , It uses ceres Optimize pose and feature points , Here you can refer to the vision SLAM The content in Lecture 10 , The optimization method is the same .

step 4:visualInitialAlign Call in VisualIMUAlignment Method , Real visual inertia joint initialization ,imu Align with vision , Obtain absolute scale, etc . This method is defined in initial/initial_alignment.h in .

step 4.1:solveGyroscopeBias Calculate the gyro offset , The calculation model of the whole method is given in the paper , Use LTLD Methods to solve the least square problem ,delta_bg = A.ldlt().solve(b); here A +=tmp_A.transpose() * tmp_A,b += tmp_A.transpose() * tmp_b, In fact, it's dealing with AT*A*x=AT*b problem , The general least square problem is dealt with directly Ax=b That is to say Ax-b=0 that will do , Here is the use of LDLT Method , Multiply both sides by A The transpose of a matrix AT*A It must be reversible , So we can multiply the two sides by their inverse , The corresponding instructions are detailed in LDLT Method . After obtaining the gyro offset, save its value to the previously defined Bgs[] in , Finally, we are recalculating the pre integral .

step 4.2:LinearAlignment Calculation scale , Gravitational acceleration and velocity . The formula given in this paper is the model of two adjacent velocities , Map to the entire n+1 Of the four velocity models ,A A matrix must be a positive definite matrix ( Real symmetric matrix ), Defined in code A and b That is, the most general H and b,tmp_A and tmp_b A temporary variable between adjacent speeds . The final solution :x = A.ldlt().solve(b); And then call RefineGravity Recalculate the direction of gravitational acceleration , Get the best solution .

step 4.3: Set all the state variables by the camera coordinates C0 Convert to world coordinates W Next , The definition of the world coordinate system here is based on the direction of gravitational acceleration and the initial camera C0 The direction of the coordinate system defines R0 = Utility::ypr2R(Eigen::Vector3d{-yaw, 0, 0}) * R0; Defined rot_diff Is the coordinate rotation matrix q_wc0. The final will be Ps[i]、Rs[i] and Vs[i] By camera C0 The coordinate system is rotated to the defined world coordinates , It needs to be explained here , General SLAM The system world coordinate system is C0 Coordinate system , A world coordinate system is defined here , Then the final results are all based on the world coordinate system , If you want to compare with the truth value, you need to pay attention to the coordinate system .

2) Tight coupling optimization based on sliding window

This part is mainly about solveOdometry() and slideWindow() In the method , These two methods will be called when initialization is completed .

step 1:solveOdometry() Conduct BA Optimize , Called inside BA The way to optimize optimization()

step 1. To be continued

边栏推荐

- Connect to the database but cannot modify the data

- Principes et exemples de tâches OpenMP

- The R language converts the data of the specified data column in the dataframe data from decimal to percentage representation, and the data to percentage

- DUF:Deep Video Super-Resolution Network Using Dynamic Upsampling Filters ...阅读笔记

- MFC中窗口刷新函数详解

- Leetcode notes: Weekly contest 280

- Principle and example of OpenMP task

- Topic 1 Single_ Cell_ analysis(1)

- Conda創建虛擬環境報錯,問題解决

- Ten important properties of determinant

猜你喜欢

Vscode的Katex问题:ParseError: KaTeX Parse Error: Can‘t Use Function ‘$‘ In Math Mode At Position ...

Classic paper review: palette based photo retrieval

Pytorch installation (GPU) in Anaconda (step on pit + fill pit)

Logistic regression

离散 第一章

『Three.js』辅助坐标轴

PPP agreement

二、八、十、十六进制相互转换

Utilize user behavior data

Chapter 2 - cyber threats and attacks

随机推荐

Numerical calculation method chapter5 Direct method for solving linear equations

R language uses rstudio to save visualization results as PDF files (export--save as PDF)

Mathematical Essays: Notes on the angle between vectors in high dimensional space

MFC中窗口刷新函数详解

OpenMP task 原理与实例

Leetcode notes: biweekly contest 69

解决逆向工程Mapper重复问题

连接数据库却无法修改数据

2021.10.27-28 scientific research log

Solve mapper duplication problem in reverse engineering

Principle and example of OpenMP task

20220525 RCNN--->Faster RCNN

Leverage contextual information

CONDA reports an error when creating a virtual environment, and the problem is solved

Servlet advanced

LeetCode笔记:Weekly Contest 295

uni-app用canvas截屏并且分享好友

Conda创建虚拟环境报错,问题解决

10 lessons from the recommended system

Numerical calculation method chapter6 Iterative method for solving linear equations