[Paper reading] Semi-supervised Left Atrium Segmentation with Mutual Consistency Training

2022-07-07 05:33:00 【xiongxyowo】

[Paper] [Code] [MICCAI 21]

Abstract

Semi-supervised learning has attracted great attention in machine learning, especially for medical image segmentation, because it alleviates the heavy burden of collecting large amounts of densely annotated training data. However, most existing methods underestimate the importance of challenging regions (such as small branches or blurred edges) during training. We argue that these unlabeled regions may contain more critical information for minimizing the uncertainty of the model's predictions, and should be emphasized during training. Therefore, in this paper, we propose a novel mutual consistency network (MC-Net) for semi-supervised left atrium segmentation from 3D MR images. Specifically, MC-Net consists of one encoder and two slightly different decoders, and the prediction discrepancy between the two decoders is transformed into an unsupervised loss by our cycled pseudo-label scheme to encourage mutual consistency. This mutual consistency encourages the two decoders to produce consistent, low-entropy predictions and enables the model to gradually capture generalized features from these unlabeled challenging regions. We evaluate MC-Net on the public left atrium (LA) database, where it achieves impressive performance gains by effectively exploiting unlabeled data. MC-Net outperforms six recent semi-supervised methods for left atrium segmentation and sets a new state of the art on the LA database.

Method

The general idea of this paper is to design better pseudo-labels to improve semi-supervised performance. The process is as follows:

The first question is how to measure uncertainty. The paper argues that popular methods such as MC-Dropout require multiple inference passes during training, which adds time overhead. It therefore trades space for time by adding an auxiliary decoder $D_B$. This decoder is structurally very simple: it obtains the final result directly through repeated upsampling by interpolation. The original decoder $D_A$ is kept consistent with V-Net.

In this way, without adding many network parameters (the auxiliary decoder is very simple), the model obtains two different results from a single inference pass. The auxiliary decoder's result will obviously be worse (this can also be seen from the figure). To estimate uncertainty, we only need to compare the difference between the two results.
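The "one encoder, two decoders, one pass" idea can be illustrated with a toy 1D sketch. It is not the paper's network: the pooling encoder, the 2-tap kernel, and all function names below are hypothetical stand-ins, and it assumes decoder A upsamples with a transposed convolution while decoder B upsamples by parameter-free interpolation.

```python
def encode(signal):
    """Toy shared encoder: average-pool a 1D signal by a factor of 2."""
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal) - 1, 2)]


def decode_transposed(features, kernel=(1.0, 1.0)):
    """Toy decoder A: stride-2 transposed convolution with a 2-tap kernel.

    In a real network the kernel weights would be learned; here they are fixed.
    """
    out = []
    for f in features:
        out.extend(f * k for k in kernel)
    return out


def decode_interpolated(features):
    """Toy decoder B: parameter-free 2x upsampling by linear interpolation."""
    out = []
    for i, f in enumerate(features):
        nxt = features[i + 1] if i + 1 < len(features) else f
        out.append(f)
        out.append((f + nxt) / 2)
    return out


def forward(signal):
    """Run the encoder once and feed the same features to both decoders."""
    features = encode(signal)
    return decode_transposed(features), decode_interpolated(features)


out_a, out_b = forward([0, 0, 1, 1, 1, 1, 0, 0])
```

Both outputs have the input's length but differ near the boundaries of the structure, which is where the two decoders are expected to disagree.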

Although this approach seems very simple, it is quite clever on reflection. One decoder is strong and the other is weak. If a sample is easy, even the weak decoder head produces a good result, so the two results differ little and the uncertainty is low. For harder, more informative samples, the weak decoder head's result is poor, so the two results differ considerably and the uncertainty is higher.
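A minimal sketch of using the discrepancy between the two outputs as an uncertainty signal. It assumes per-voxel absolute difference as the measure, and the probability values are made up for illustration; the note itself only says that the two results are compared.

```python
def discrepancy_map(p_a, p_b):
    """Per-voxel absolute difference between two foreground-probability maps."""
    if len(p_a) != len(p_b):
        raise ValueError("both predictions must cover the same voxels")
    return [abs(a - b) for a, b in zip(p_a, p_b)]


def mean(values):
    return sum(values) / len(values)


# Hypothetical easy region: strong (A) and weak (B) decoders nearly agree.
easy_a = [0.95, 0.05, 0.90]
easy_b = [0.90, 0.10, 0.85]

# Hypothetical hard region (e.g. a blurred edge): the weak decoder drifts away.
hard_a = [0.80, 0.30, 0.70]
hard_b = [0.40, 0.60, 0.20]

easy_uncertainty = mean(discrepancy_map(easy_a, easy_b))
hard_uncertainty = mean(discrepancy_map(hard_a, hard_b))
```

Under this measure the hard region scores a much larger mean discrepancy than the easy one, matching the intuition that disagreement marks the uncertain areas.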

The two results are first passed through a sharpening function to remove some potential noise from the predictions. The sharpening function is defined as follows:

$$sPL=\frac{P^{1/T}}{P^{1/T}+(1-P)^{1/T}}$$

For pseudo-label supervision, the result of $D_B$ is used to supervise $D_A$, and the result of $D_A$ is used to supervise $D_B$. In this way, the strong decoder $D_A$ can learn invariant features from the weak decoder $D_B$, which reduces overfitting, while the weak decoder $D_B$ can learn the high-level features of the strong decoder $D_A$.
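The sharpening function and the cross supervision can be sketched as follows. The formula for `sharpen` is the one given above; the temperature `T = 0.1` and the use of mean squared error as the distance between a prediction and the other decoder's sharpened pseudo-label are assumptions made for illustration, since this note does not state them.

```python
def sharpen(p, temperature=0.1):
    """sPL = p^(1/T) / (p^(1/T) + (1 - p)^(1/T)) for a probability p in [0, 1]."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if temperature <= 0.0:
        raise ValueError("temperature must be positive")
    a = p ** (1.0 / temperature)
    b = (1.0 - p) ** (1.0 / temperature)
    # Note: for extremely small temperatures both terms can underflow to 0.
    return a / (a + b)


def mse(xs, ys):
    """Mean squared error between two equally long lists."""
    if len(xs) != len(ys):
        raise ValueError("inputs must have the same length")
    return sum((x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mutual_consistency_loss(p_a, p_b, temperature=0.1):
    """Cross supervision: B's sharpened output supervises A, and vice versa."""
    spl_a = [sharpen(p, temperature) for p in p_a]
    spl_b = [sharpen(p, temperature) for p in p_b]
    return mse(p_a, spl_b) + mse(p_b, spl_a)


# Hypothetical predictions: the decoders agree confidently, or contradict each other.
loss_agree = mutual_consistency_loss([0.99, 0.01], [0.99, 0.01])
loss_disagree = mutual_consistency_loss([0.9, 0.1], [0.1, 0.9])
```

Sharpening pushes probabilities away from 0.5 (for example, 0.8 moves close to 1 while 0.5 stays at 0.5), so the loss is small only when both decoders are confident and agree.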

边栏推荐

- 基于NCF的多模块协同实例

- Flink SQL 实现读写redis,并动态生成Hset key

- Leetcode 1189 maximum number of "balloons" [map] the leetcode road of heroding

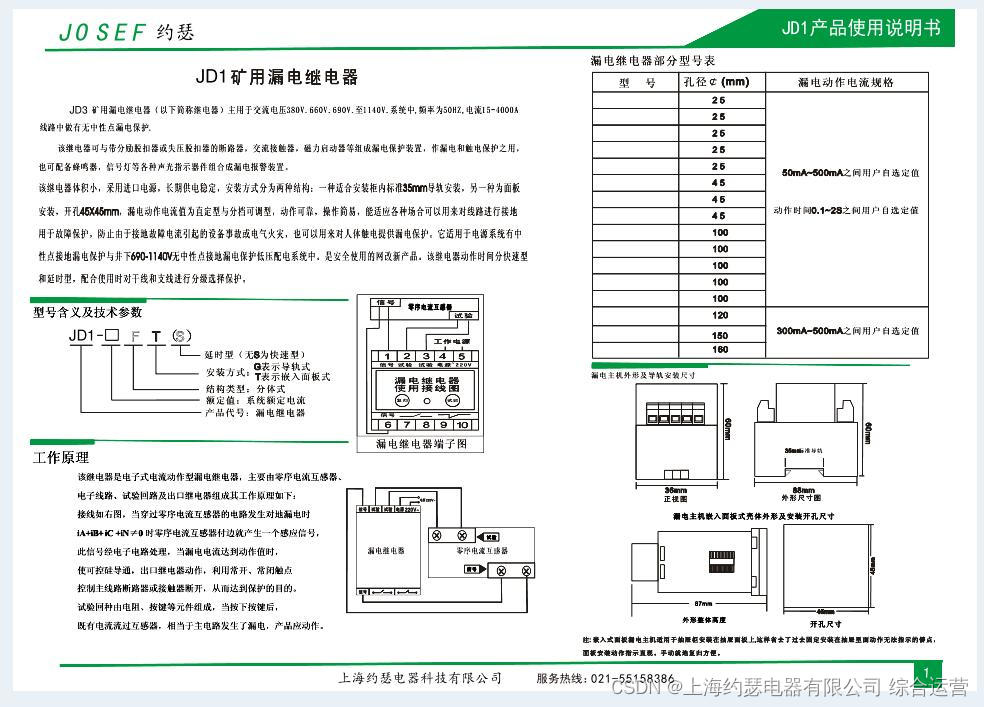

- 漏电继电器JOLX-GS62零序孔径Φ100

- Writing process of the first paper

- 论文阅读【Semantic Tag Augmented XlanV Model for Video Captioning】

- 说一说MVCC多版本并发控制器?

- K6el-100 leakage relay

- 【js组件】自定义select

- DJ-ZBS2漏电继电器

猜你喜欢

MySQL数据库学习(8) -- mysql 内容补充

Complete code of C language neural network and its meaning

CVE-2021-3156 漏洞复现笔记

Leakage relay jd1-100

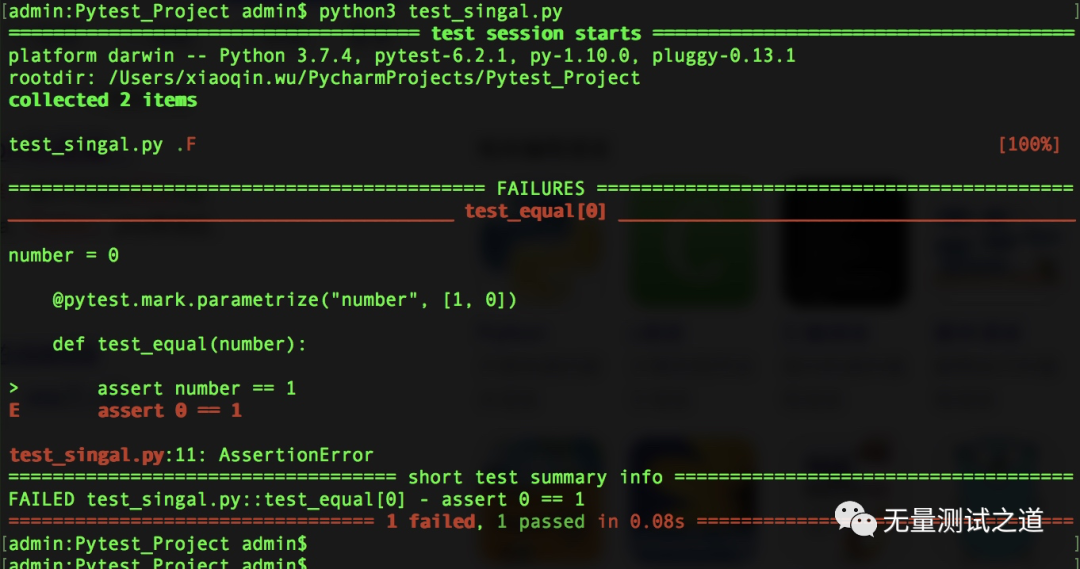

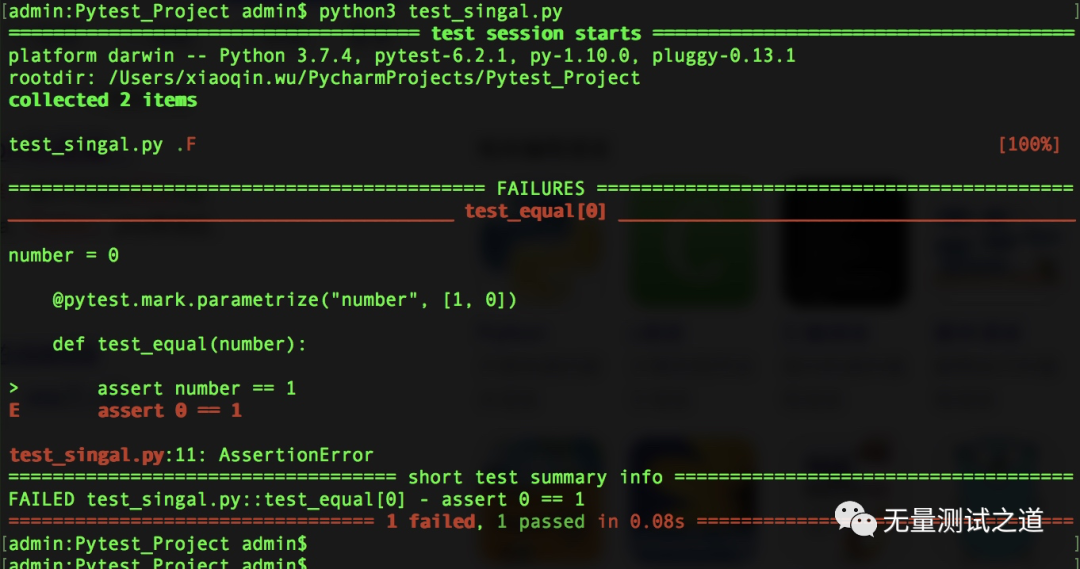

pytest测试框架——数据驱动

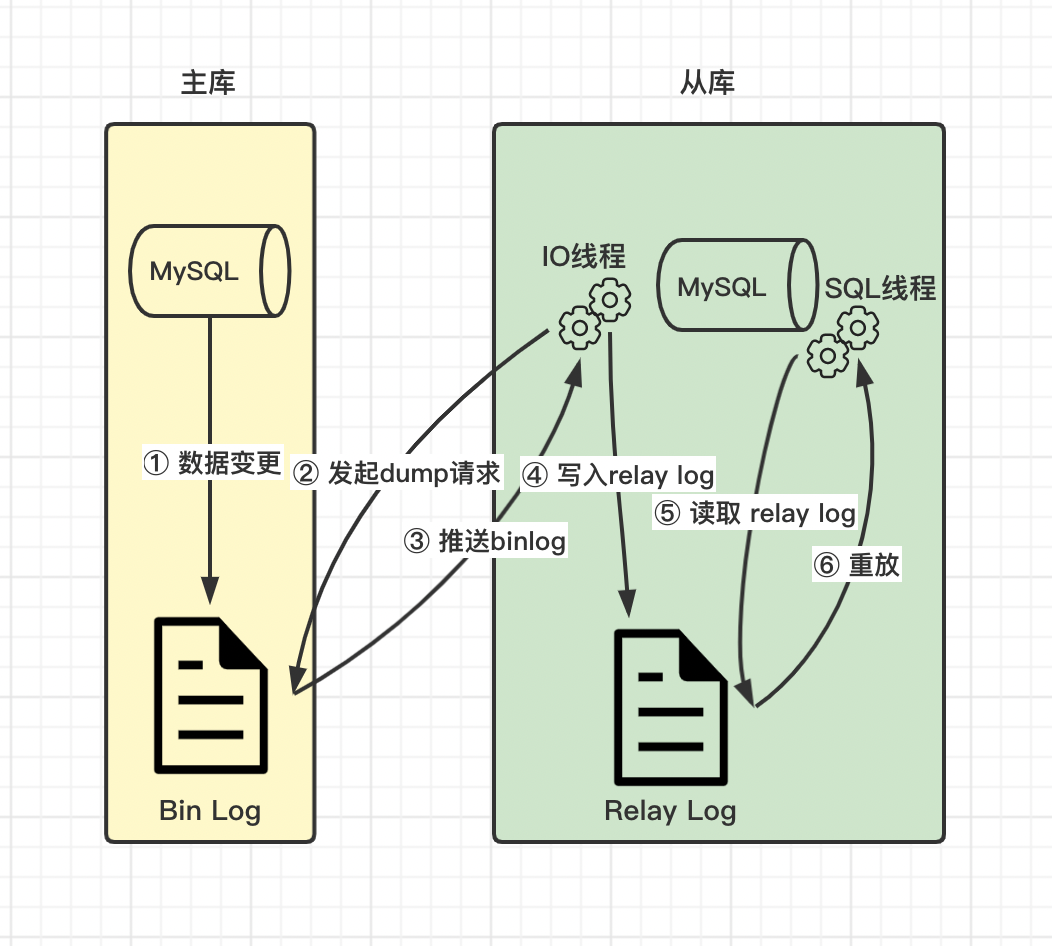

高级程序员必知必会,一文详解MySQL主从同步原理,推荐收藏

Photo selector collectionview

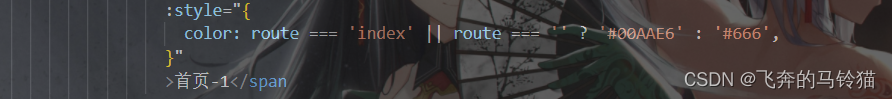

The navigation bar changes colors according to the route

Pytest testing framework -- data driven

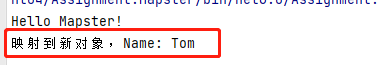

4. Object mapping Mapster

随机推荐

[PHP SPL notes]

论文阅读【Semantic Tag Augmented XlanV Model for Video Captioning】

说一说MVCC多版本并发控制器?

Linkedblockingqueue source code analysis - initialization

利用OPNET进行网络指定源组播(SSM)仿真的设计、配置及注意点

漏电继电器JD1-100

照片选择器CollectionView

DOM-节点对象+时间节点 综合案例

How digitalization affects workflow automation

How can project managers counter attack with NPDP certificates? Look here

高压漏电继电器BLD-20

Egr-20uscm ground fault relay

Photo selector collectionview

Where is NPDP product manager certification sacred?

Leakage relay jd1-100

数字化创新驱动指南

Record a pressure measurement experience summary

Complete code of C language neural network and its meaning

How does mapbox switch markup languages?

Use Zhiyun reader to translate statistical genetics books