
ResNet Explained: What Problem Does ResNet Actually Solve?

2022-08-04 07:18:00 【hot-blooded chef】

Original authors' open-source code: https://github.com/KaimingHe/deep-residual-networks

Paper: https://arxiv.org/pdf/1512.03385.pdf

1. The network degradation problem

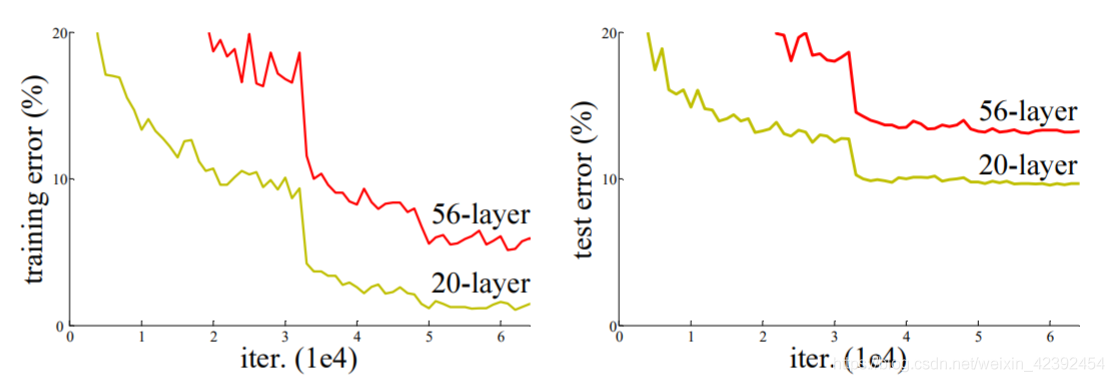

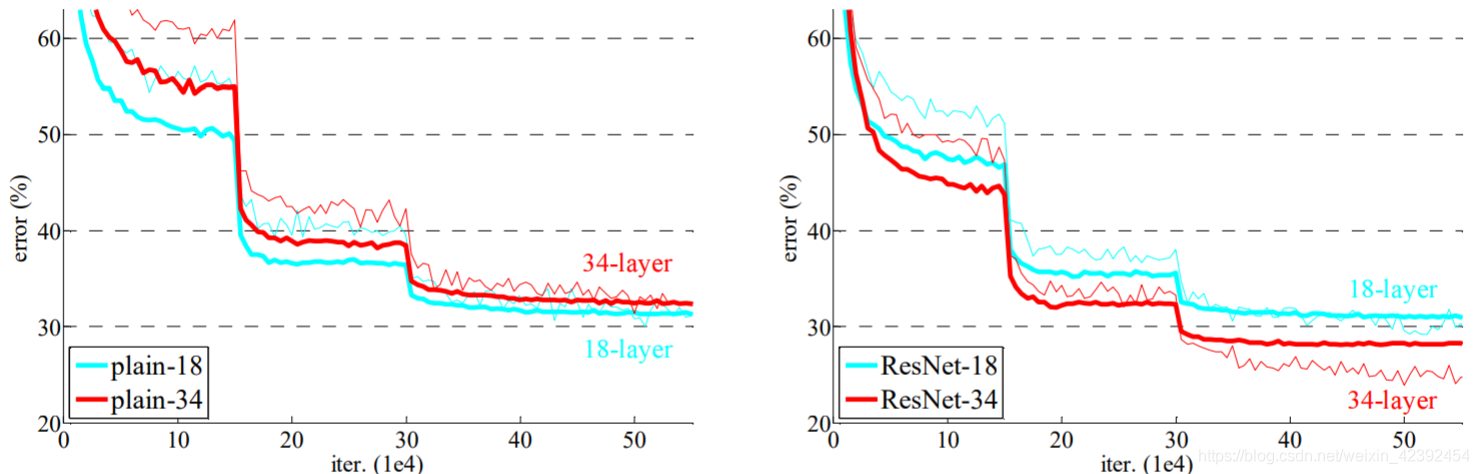

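Before ResNet, mainstream networks such as AlexNet and VGG were simple stacks of layers, and the apparent trend was that the deeper the network, the better its recognition accuracy. In practice, however, once a network reaches a certain depth, accuracy saturates and then degrades rapidly. As the paper points out, the training error rises as well, so this degradation is not caused by overfitting.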

2. Causes of network degradation

Because backpropagation applies the chain rule, the gradient that reaches an early layer is a product of per-layer factors. If those factors lie in (0, 1), the gradient shrinks layer by layer and vanishes. Conversely, if the factors are greater than 1, the gradient grows layer by layer and explodes. On this view, simply stacking layers would seem bound to degrade the network.
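Here is a toy illustration of the chain-rule argument. It assumes that every layer contributes the same scalar derivative. Real layers contribute Jacobian matrices, so the sketch only shows the trend. `backprop_scale` is a hypothetical helper.

```python
def backprop_scale(per_layer_grad: float, depth: int) -> float:
    """Product of identical per-layer gradient factors over `depth` layers (chain rule)."""
    scale = 1.0
    for _ in range(depth):
        scale *= per_layer_grad  # one multiplication per layer traversed backwards
    return scale

# Factors below 1 vanish, factors above 1 explode, exactly 1 is preserved.
for g in (0.9, 1.0, 1.1):
    print(g, [f"{backprop_scale(g, d):.3g}" for d in (10, 50, 100)])
```

With 100 layers, a factor of 0.9 leaves less than 1e-4 of the gradient, while a factor of 1.1 amplifies it more than 10,000 times.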

Vanishing and exploding gradients are caused by overly deep hidden layers. However, the paper states that this problem has largely been addressed by normalized initialization and intermediate normalization layers. So network degradation is not caused by vanishing or exploding gradients. What, then, causes it? Another paper gives an answer: "The Shattered Gradients Problem: If resnets are the answer, then what is the question?"

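The gist is that as a network gets deeper, the correlation between the backpropagated gradients becomes weaker and weaker, until the gradients approach white noise. Images are locally correlated, so it is reasonable to expect gradients to carry similar correlation if the updates they drive are to be meaningful. If the gradients are close to white noise, the updates may amount to little more than random perturbations.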

3. Residual networks

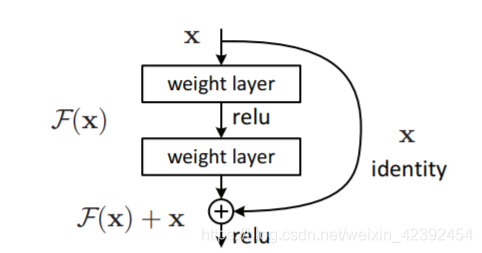

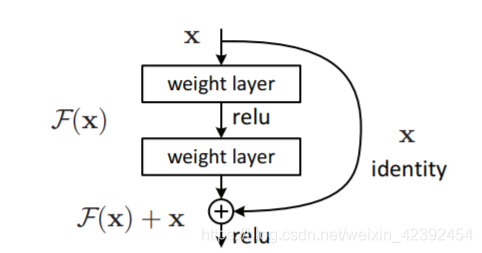

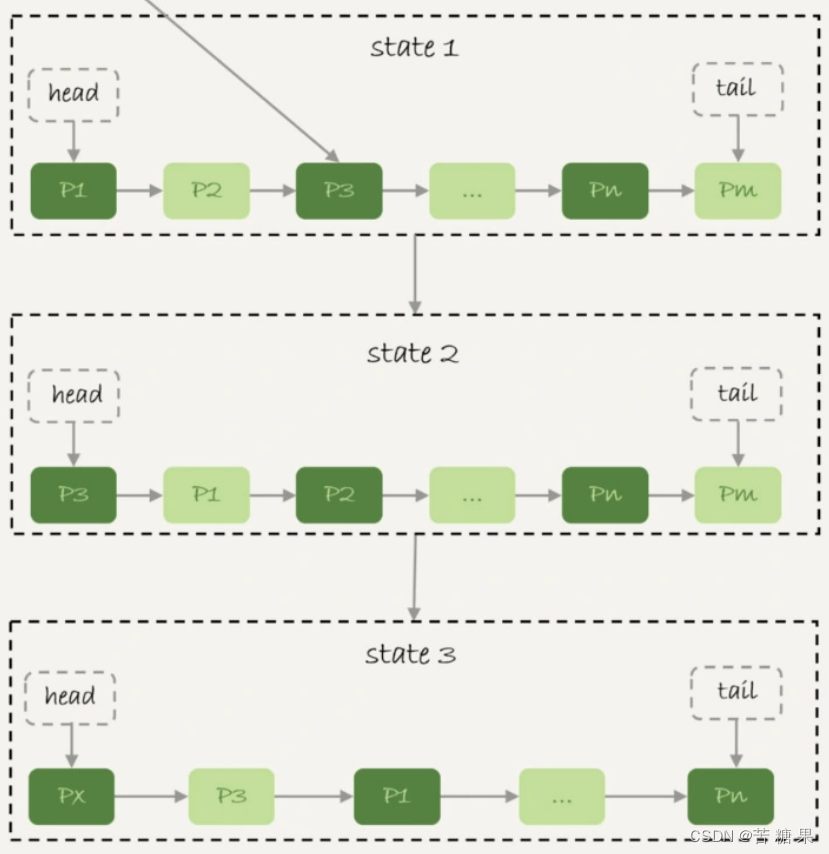

To address the degradation problem, the authors of the paper proposed the residual network. The mathematical model of a residual block is shown in the figures. The biggest difference from earlier networks is an extra identity shortcut branch. Thanks to this branch, the loss can pass gradients directly to earlier layers during backpropagation, which alleviates network degradation.
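Below is a scalar toy model of why the identity branch helps. It assumes each layer is `tanh(w * x)` with the same small weight `w = 0.1` over 30 layers. The layer form, weight and depth are illustrative assumptions, not the paper's setup.

For a residual layer $y = x + F(x)$, the local derivative is $1 + F'(x)$. When the product of local derivatives is expanded, it always contains a term equal to 1 that flows straight through the shortcuts.

```python
import math

def plain_grad(x: float, weights: list) -> float:
    """d(output)/d(input) for a plain stack of layers y = tanh(w * x)."""
    grad = 1.0
    for w in weights:
        pre = w * x
        grad *= w * (1 - math.tanh(pre) ** 2)      # local derivative of tanh(w * x)
        x = math.tanh(pre)
    return grad

def residual_grad(x: float, weights: list) -> float:
    """d(output)/d(input) for residual layers y = x + tanh(w * x)."""
    grad = 1.0
    for w in weights:
        pre = w * x
        grad *= 1 + w * (1 - math.tanh(pre) ** 2)  # the identity path contributes the "1"
        x = x + math.tanh(pre)
    return grad

weights = [0.1] * 30  # hypothetical: 30 layers, all with weight 0.1
print(plain_grad(1.0, weights), residual_grad(1.0, weights))
```

Every plain factor here is at most 0.1, so the plain gradient is at most 1e-30. Every residual factor is greater than 1. The toy model only illustrates the direct path. It says nothing about how real residual networks control the gradient's overall scale.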

When analyzing the causes of network degradation in Section 2, we saw that gradients are correlated. Using gradient correlation as the metric, the authors of that paper analyzed a range of architectures and activation functions. They found that ResNet is excellent at preserving gradient correlation: the decay of the correlation improves from $\frac{1}{\sqrt{2^L}}$ to $\frac{1}{\sqrt{L}}$, where $L$ is the depth. This is quite intuitive. In the gradient flow, part of the gradient is passed back untouched through the shortcut, and that part stays strongly correlated.
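The two decay rates quoted above can be compared numerically. This only evaluates the scaling laws $1/\sqrt{2^L}$ and $1/\sqrt{L}$ with constants omitted. It is not a simulation of real networks.

```python
import math

def plain_corr_decay(L: int) -> float:
    """Scaling of gradient correlation without shortcuts: 1 / sqrt(2^L)."""
    return 1 / math.sqrt(2 ** L)

def resnet_corr_decay(L: int) -> float:
    """Scaling of gradient correlation with shortcuts: 1 / sqrt(L)."""
    return 1 / math.sqrt(L)

for L in (1, 10, 50):
    print(L, plain_corr_decay(L), resnet_corr_decay(L))
```

At $L = 10$, the exponential rate is already down to 1/32. The $1/\sqrt{L}$ rate is still about 0.32.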

In addition, the identity shortcut adds no new parameters, only one extra element-wise addition. With GPU acceleration, this extra computation is almost negligible.
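Here is a rough count of the extra work. It assumes a 56x56 feature map with 64 channels, the size of ResNet-34's first residual stage. It compares the shortcut's element-wise additions with the multiply-accumulates of a single 3x3 convolution. Biases, BN and ReLU are ignored, and the helper names are hypothetical.

```python
def shortcut_add_ops(h: int, w: int, c: int) -> int:
    """Element-wise additions for F(x) + x on an h x w x c feature map."""
    return h * w * c

def conv_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """Multiply-accumulates of a k x k convolution producing an h x w x c_out map."""
    return h * w * c_in * c_out * k * k

adds = shortcut_add_ops(56, 56, 64)
macs = conv_macs(56, 56, 64, 64, 3)
print(adds, macs, macs // adds)  # 200704 115605504 576
```

One 3x3 convolution does 576 times more work than the shortcut addition (the ratio is $C_{in} \cdot k^2 = 64 \cdot 9$). A basic block has two such convolutions.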

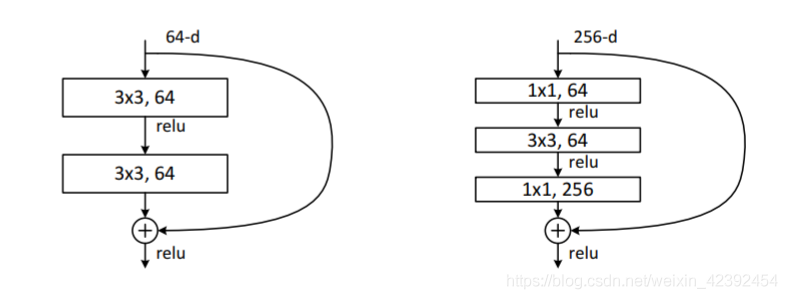

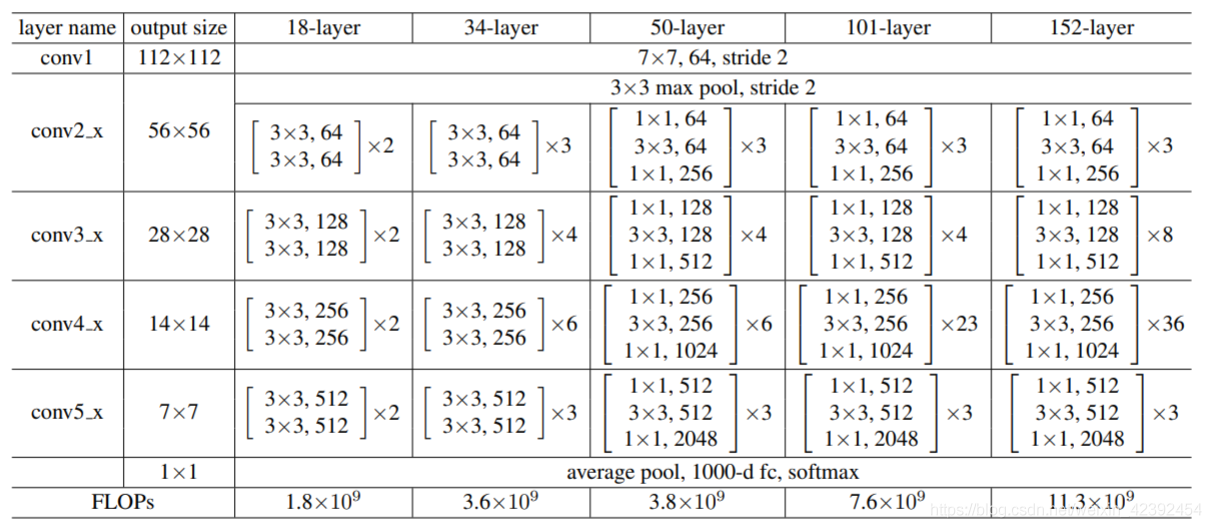

Note, however, that a residual block ends with the operation $F(x) + x$, so $F(x)$ and $x$ must have the same shape. In real networks, a 1x1 convolution can be used to change the number of channels. In the figure above, the block on the left is the one used in ResNet-34. The bottleneck block on the right is used in ResNet-50/101/152.
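Below is a minimal pure-Python sketch of why the shapes must match, and of how a 1x1 convolution fixes a channel mismatch. It builds the projection shortcut $F(x) + W_s x$ described in the paper. Feature maps are nested lists indexed `[channel][row][col]`. The helpers and the projection weights are hypothetical. Stride, which the real shortcut also uses to halve the spatial size, is left out.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # [channels][height][width]

def shape(fm: FeatureMap) -> tuple:
    return (len(fm), len(fm[0]), len(fm[0][0]))

def conv1x1(x: FeatureMap, w: List[List[float]]) -> FeatureMap:
    """1x1 convolution without bias: mixes channels independently at every pixel.
    w has shape [out_channels][in_channels]."""
    c_in, h, wd = shape(x)
    return [[[sum(w_o[c] * x[c][i][j] for c in range(c_in)) for j in range(wd)]
             for i in range(h)]
            for w_o in w]

def residual_add(fx: FeatureMap, x: FeatureMap) -> FeatureMap:
    """Element-wise F(x) + x; only defined when the shapes agree."""
    if shape(fx) != shape(x):
        raise ValueError(f"shape mismatch: {shape(fx)} vs {shape(x)}")
    return [[[a + b for a, b in zip(ra, rb)] for ra, rb in zip(ca, cb)]
            for ca, cb in zip(fx, x)]

# Hypothetical example: x has 2 channels, F(x) has 3 channels, both 2x2 spatially.
x = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]
fx = [[[0.0] * 2 for _ in range(2)] for _ in range(3)]
w_proj = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # illustrative 3x2 projection W_s
out = residual_add(fx, conv1x1(x, w_proj))      # F(x) + W_s x
print(shape(out))  # (3, 2, 2)
```

Calling `residual_add(fx, x)` directly raises `ValueError`, because 3 channels cannot be added to 2.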

The design on the right effectively reduces the number of parameters. Comparing the two (weights only, biases ignored):

- Left: 3x3x256x256 + 3x3x256x256 = 1,179,648

- Right: 1x1x256x64 + 3x3x64x64 + 1x1x64x256 = 69,632
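The two counts above can be reproduced with a small helper. It counts weights only and ignores biases. `conv_params` is a hypothetical name.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k convolution from c_in to c_out channels, biases ignored."""
    return k * k * c_in * c_out

basic = conv_params(3, 256, 256) + conv_params(3, 256, 256)
bottleneck = conv_params(1, 256, 64) + conv_params(3, 64, 64) + conv_params(1, 64, 256)
print(basic, bottleneck, round(basic / bottleneck, 1))  # 1179648 69632 16.9
```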

As we can see, the parameter count of a single residual block drops by a factor of about 17, roughly one order of magnitude. The ResNet family of networks is built by stacking such blocks.

4. Experimental results

The ResNet configurations recommended in the paper are shown above. The authors also replaced the stack of fully connected layers with global average pooling, followed by a single 1000-way fully connected layer. This has two benefits. First, it reduces the number of parameters. Second, fully connected layers are prone to overfitting and rely heavily on dropout regularization, whereas global average pooling itself acts as a regularizer and structurally prevents overfitting. In addition, global average pooling aggregates spatial information, so it is more robust to spatial translations of the input.
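Here is a minimal sketch of global average pooling on a `[channel][row][col]` nested list. For comparison only, it also computes the weight count of a VGG-style alternative that flattens a 7x7x512 map into a 4096-unit fully connected layer.

```python
from typing import List

def global_average_pool(fm: List[List[List[float]]]) -> List[float]:
    """Average each channel of a [C][H][W] feature map over its H*W positions (no weights)."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fm]

fm = [[[1.0, 3.0], [5.0, 7.0]],   # channel 0, mean 4.0
      [[0.0, 0.0], [0.0, 8.0]]]   # channel 1, mean 2.0
# Same activations, with channel 1's peak moved to a different position.
fm_shifted = [[[1.0, 3.0], [5.0, 7.0]],
              [[8.0, 0.0], [0.0, 0.0]]]
print(global_average_pool(fm), global_average_pool(fm_shifted))  # [4.0, 2.0] [4.0, 2.0]

# Weights of a flatten + fully connected alternative (7x7x512 -> 4096), biases ignored.
fc_params = 7 * 7 * 512 * 4096
print(fc_params)  # 102760448
```

Pooling has no weights at all. Moving an activation within a channel leaves the pooled output unchanged, which illustrates the translation-robustness point.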

The experimental comparison is also clear: ResNet-34 effectively alleviates the degradation seen in the plain 34-layer network. To explore even deeper networks, the authors stacked ResNet to more than 1000 layers. Networks that deep are not widely used in industry, but they are of great theoretical significance.

5. Summary

Finally, to answer our question, "What problem does ResNet actually solve?", let us look at the structure of the residual block again.

Suppose $x = 5$ and the target mapping is $H(x) = 5.1$.

- With a non-residual structure, the network mapping is $F'(5) = 5.1$.

- With a residual structure, the network mapping is $F(5) + 5 = 5.1$, i.e. $F(5) = 0.1$.

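Here $F'$ and $F$ both denote the mappings parameterized by the network. The mapping with the residual is more sensitive to changes in the output. Suppose the target changes from 5.1 to 5.2. The output of $F'$ then increases by $0.1/5.1 = 1/51 \approx 2\%$. In the residual structure, $F$ goes from 0.1 to 0.2, an increase of 100%. The latter's output change clearly has a much larger effect on weight adjustment, which is why the residual structure works better. (Adapted from: "What exactly does F(x) in ResNet (residual networks) look like?") Later experiments also supported this hypothesis: residual networks are easier to train than plain networks. So the problem ResNet solves is making deep networks easier to train.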
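The percentages in the example can be checked directly. `relative_change` is a hypothetical helper, and the residual branch is assumed to output $H(x) - x$, as above.

```python
def relative_change(old: float, new: float) -> float:
    return (new - old) / old

x = 5.0
# The plain mapping F' must output the whole target H(x).
plain = relative_change(5.1, 5.2)
# The residual mapping F only outputs H(x) - x.
residual = relative_change(5.1 - x, 5.2 - x)
print(f"{plain:.2%} {residual:.0%}")  # 1.96% 100%
```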

Finally, the author also provides ResNet implementations written in Keras and TF2.

边栏推荐

猜你喜欢

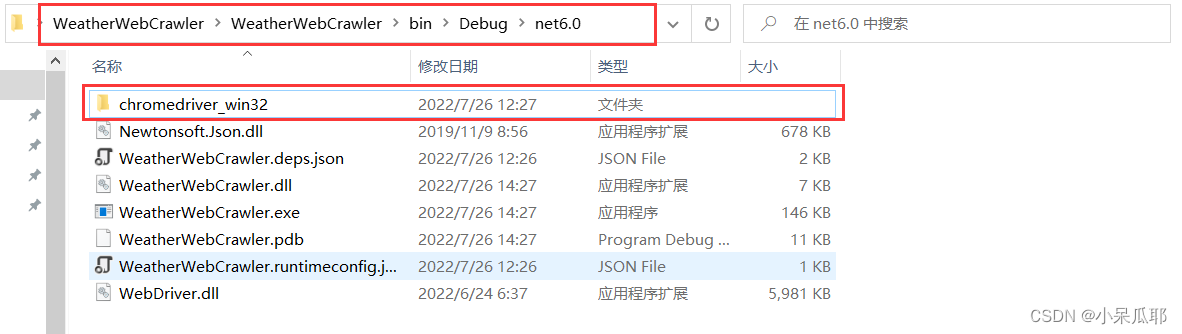

【C# - 爬虫】使用Selenium实现爬虫,获取近七天天气信息(包含完整代码)

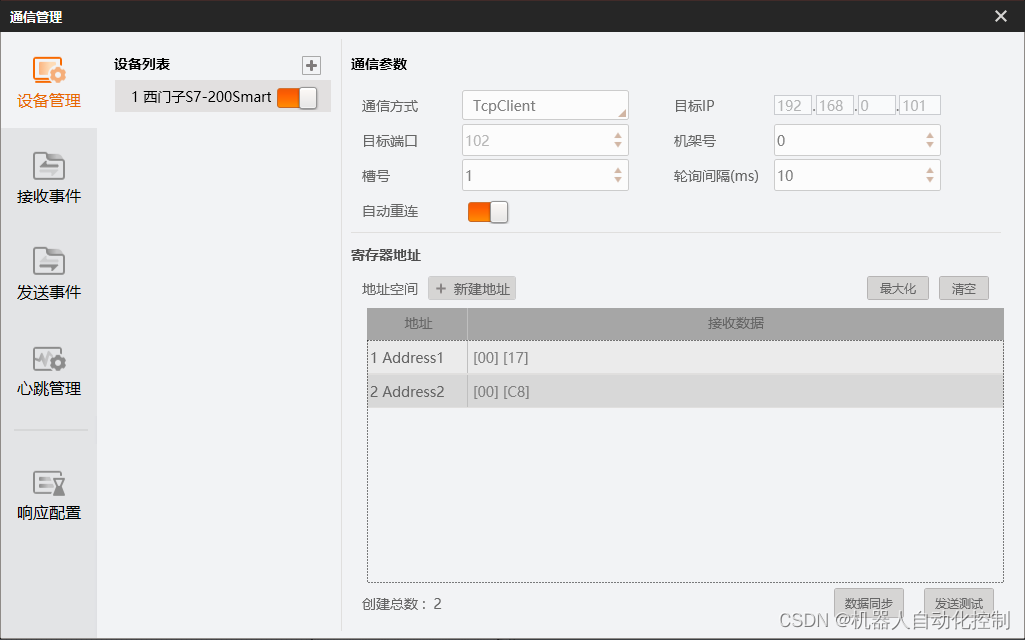

海康VisionMaster与西门子Smart 200进行S7通信

MySQL内存淘汰策略

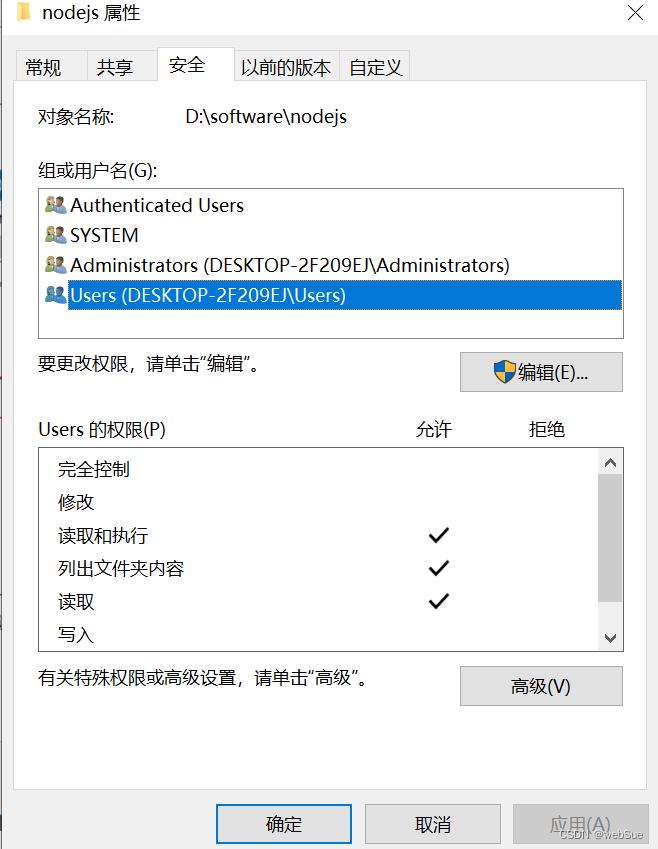

Error EPERM operation not permitted, mkdir ‘Dsoftwarenodejsnode_cache_cacach两种解决办法

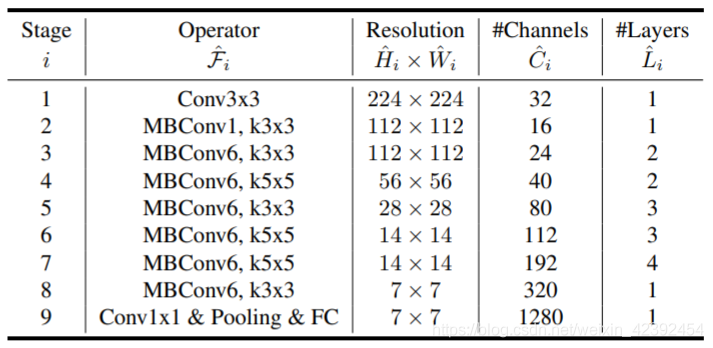

Interpretation of EfficientNet: Composite scaling method of neural network (based on tf-Kersa reproduction code)

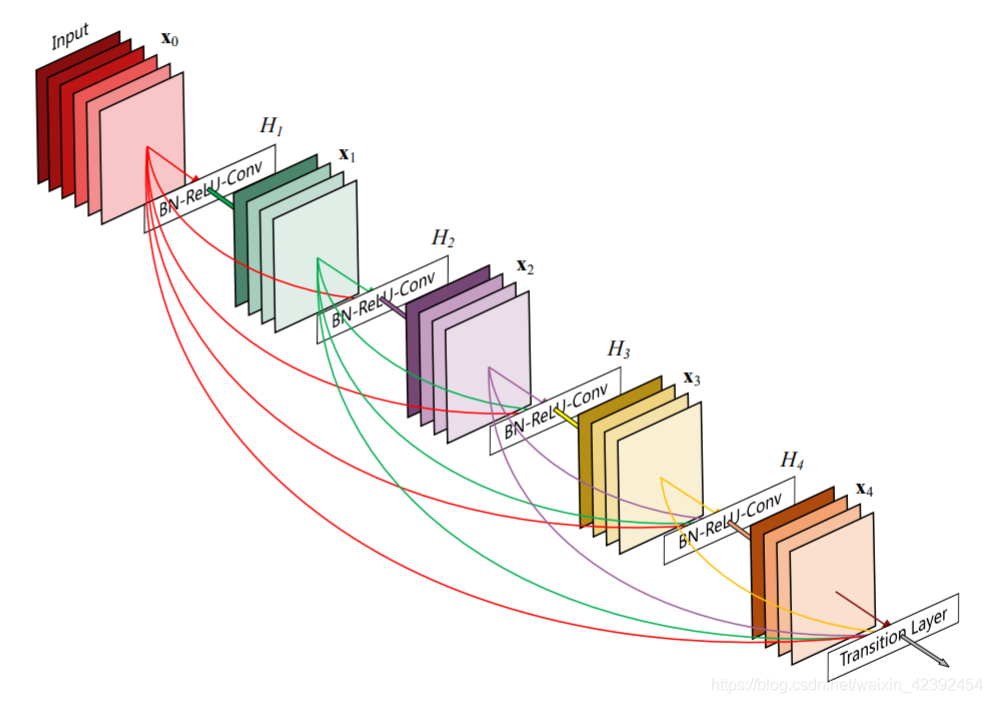

Detailed explanation of DenseNet and Keras reproduction code

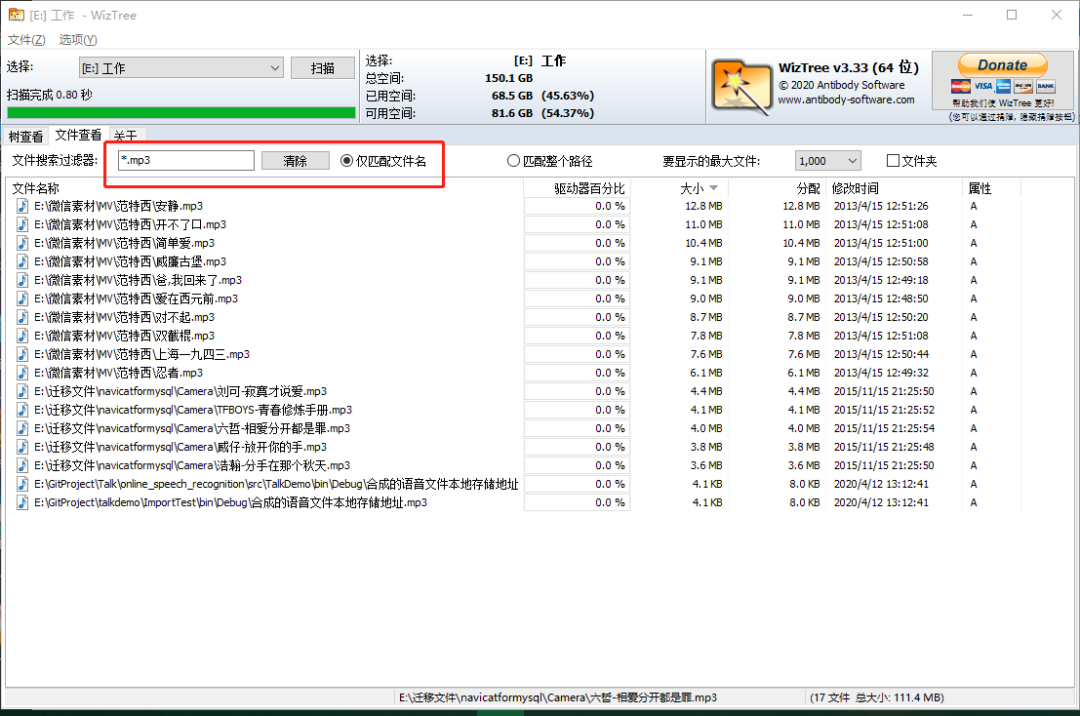

Computer software: recommend a disk space analysis tool - WizTree

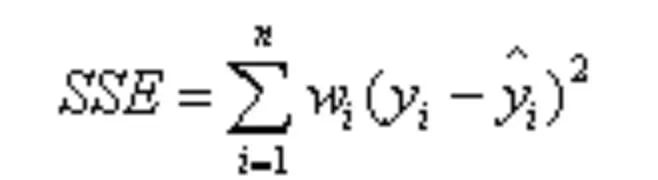

误差指标分析计算之matlab实现【开源1.0.0版】

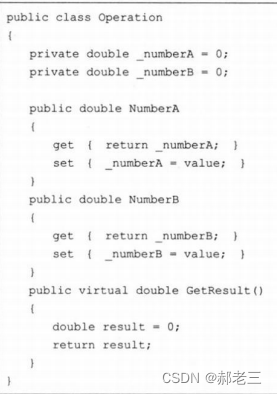

什么是多态。

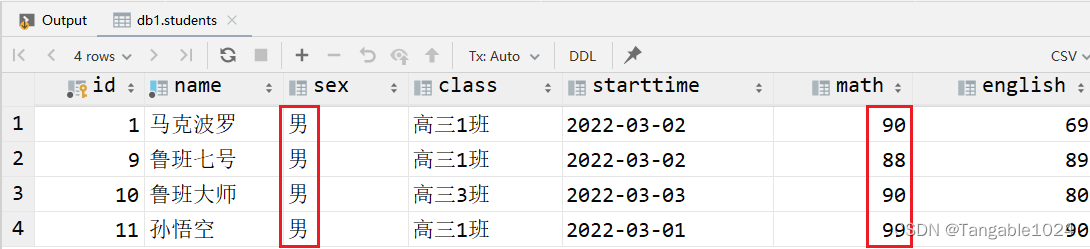

MySQL基础(DDL、DML、DQL)

随机推荐

2DCNN, 1DCNN, BP, SVM fault diagnosis and result visualization of matlab

微软电脑管家2.0公测版体验

基于爬行动物搜索RSA优化LSTM的时间序列预测

天鹰优化的半监督拉普拉斯深度核极限学习机用于分类

Time Series Forecasting Based on Reptile Search RSA Optimized LSTM

LAN技术-3iStack

Software: Recommend a domestic and very easy-to-use efficiency software uTools to everyone

What is the connection between GRNN, RBF, PNN, KELM?

关于我写的循环遍历

npm包发布与迭代

SENet detailed explanation and Keras reproduction code

matlab封闭曲线拟合 (针对一些列离散点)

搭建redis哨兵

Computer knowledge: desktop computers should choose the brand and assembly, worthy of collection

E-R图总结规范

ES6新语法:symbol,map容器

微信小程序实现活动倒计时

如何画好业务架构图。

如何用matlab做高精度计算?【第三辑】(完)

Gramm Angle field GAF time-series data into the image and applied to the fault diagnosis