
"Tips" to slim down Seurat objects

2022-07-04 13:09:00 【Xu zhougeng】

When processing single-cell data with Seurat, you will find that the Seurat object keeps growing until, before you notice, it has become a bottomless pit for memory.

For example, one of my Seurat objects occupies 22.3 Gb of memory:

old_size <- object.size(seu.obj)

format(old_size, units="Gb")

# 22.3 Gb

If I need to close RStudio partway through, then to keep my work continuous I have to save more than 20 Gb of in-memory data to disk and load it back into memory for the next analysis. Given disk read and write speeds, this can take more than 10 minutes.

And if you have to upload the data to a cloud drive or to the GEO database, the time needed to transfer more than 20 Gb is even harder to imagine.

Is there a way to slim down a Seurat object? It is simple. The bloat comes mainly from the scaling step (ScaleData), which turns the original sparse matrix into an ordinary dense matrix whose elements are all floating-point numbers, so it takes up a lot of space. As long as we clear the scaled matrix (scale.data) before saving the data, the Seurat object slims down at once.
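To get a feel for why the scaled matrix dominates, here is a minimal sketch on toy data. It does not use Seurat: the matrix dimensions, the 5% density and the variable names are assumptions chosen for illustration, and base R's scale() stands in for the scaling step. Centering each gene turns almost every zero into a non-zero value, so the result has to be stored densely as doubles.

```r
library(Matrix)

set.seed(1)

# Hypothetical toy data: 2000 genes x 500 cells, about 5% non-zero entries.
n_genes <- 2000
n_cells <- 500
counts <- rsparsematrix(n_genes, n_cells, density = 0.05)

# Scale each gene (row) to mean 0 and sd 1. scale() works column-wise,
# so transpose before and after. The result is an ordinary dense matrix.
scaled <- t(scale(t(as.matrix(counts))))

sparse_size <- object.size(counts)
dense_size <- object.size(scaled)

# Compare the memory footprint of the two representations.
print(format(sparse_size, units = "Mb"))
print(format(dense_size, units = "Mb"))
```

The dense matrix stores one 8-byte double per gene-cell pair regardless of how many entries were zero in the input, which is why its size grows with the full dimensions of the dataset.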

seu.obj@assays$RNA@scale.data <- matrix()

new_size <- object.size(seu.obj)

format(new_size, units="Gb")

# 5Gb

Clearing scale.data reduces memory usage to only about 20% of the original. But what does this saving cost? The price is that after loading the data you need to run the scaling step again to restore scale.data in the Seurat object.

all.genes <- rownames(seu.obj)

seu.obj <- ScaleData(seu.obj, features = all.genes)

This is a familiar trade-off in computer science: either trade space for time, or trade time for space.

Besides speeding up saving and loading of Seurat objects, does this trick have other uses? The scaled data mainly serves as input for principal component analysis (PCA). The subsequent nonlinear dimensionality reduction (UMAP) and clustering are based on the PCA result, not on the scaled data. So if memory runs short during an analysis, you can use the same trick to free memory, run the memory-hungry operations, and then restore scale.data afterwards.
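The trade-off can be sketched without Seurat, using a plain list as a stand-in for the object. Everything here is an assumption for illustration: the toy matrix, the helper scale_rows() and the list fields counts and scaled are hypothetical names, not part of Seurat. The derived matrix is dropped before saving and recomputed after loading, which costs computation time but shrinks the file.

```r
set.seed(1)

# Hypothetical helper: scale each row (gene) to mean 0 and sd 1.
scale_rows <- function(m) t(scale(t(m)))

# Toy stand-in for an analysis object: raw counts plus a derived scaled matrix.
counts <- matrix(rpois(200 * 100, lambda = 2), nrow = 200)
obj <- list(counts = counts, scaled = scale_rows(counts))

# Save once with the derived matrix and once without it.
full_path <- tempfile(fileext = ".rds")
slim_path <- tempfile(fileext = ".rds")
saveRDS(obj, full_path)

slim <- obj
slim$scaled <- NULL          # drop the derived data before saving
saveRDS(slim, slim_path)

# Compare file sizes on disk (in bytes).
print(file.size(full_path))
print(file.size(slim_path))

# After loading, pay the time cost: recompute what was dropped.
restored <- readRDS(slim_path)
restored$scaled <- scale_rows(restored$counts)
```

Because the scaled matrix is fully determined by the counts, nothing is lost by dropping it; the only cost is the time needed to recompute it after loading.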

边栏推荐

- Apache server access log access Log settings

- Can Console. Clear be used to only clear a line instead of whole console?

- 认知的定义

- Play Sanzi chess easily

- C語言函數

- Using nsproxy to forward messages

- DVWA range exercise 4

- 17.内存分区与分页

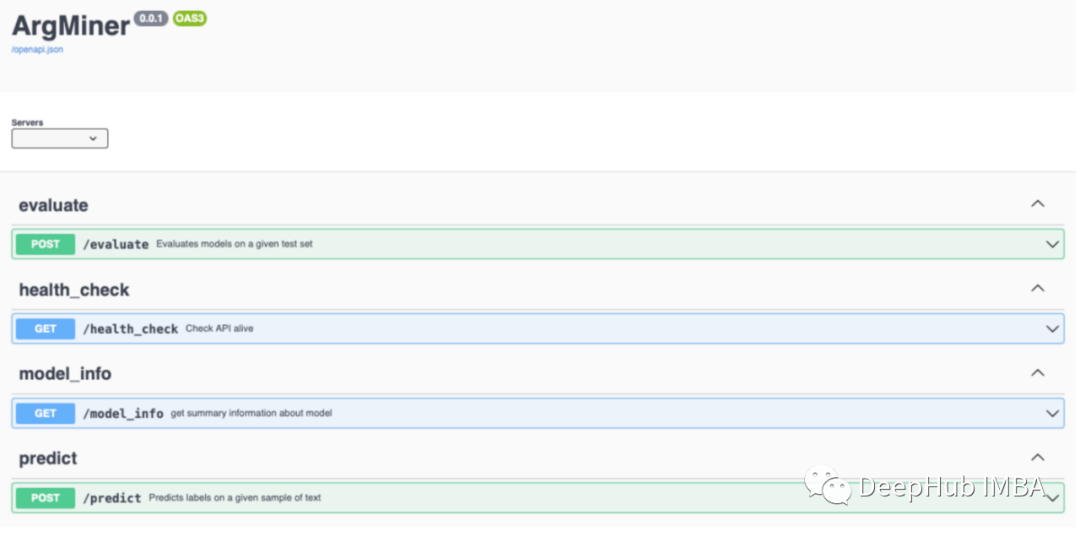

- Argminer: a pytorch package for processing, enhancing, training, and reasoning argument mining datasets

- Simple understanding of binary search

猜你喜欢

6 分钟看完 BGP 协议。

Valentine's Day confession code

Introduction to the button control elevatedbutton of the fleet tutorial (the tutorial includes the source code)

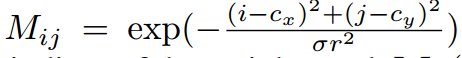

CVPR 2022 | TransFusion:用Transformer进行3D目标检测的激光雷达-相机融合

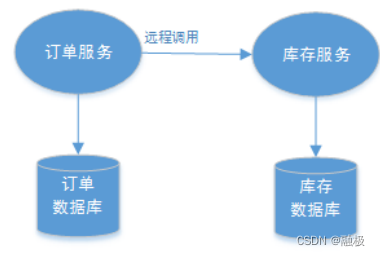

分布式事务相关概念与理论

ArgMiner:一个用于对论点挖掘数据集进行处理、增强、训练和推理的 PyTorch 的包

Is the outdoor LED screen waterproof?

轻松玩转三子棋

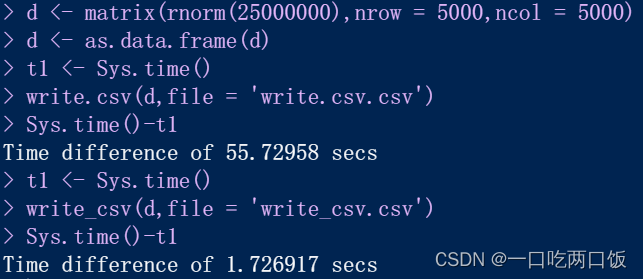

R language -- readr package reads and writes data

诸神黄昏时代的对比学习

随机推荐

Transformer principle and code elaboration (pytorch)

Sort merge sort

C language: find the palindrome number whose 100-999 is a multiple of 7

Using nsproxy to forward messages

16.内存使用与分段

七、软件包管理

[Yu Yue education] 233 pre school children's language education reference questions in the spring of 2019 of the National Open University

Interviewer: what is the difference between redis expiration deletion strategy and memory obsolescence strategy?

爬虫练习题(一)

AI painting minimalist tutorial

Implementation mode and technical principle of MT4 cross platform merchandising system (API merchandising, EA merchandising, nj4x Merchandising)

ArgMiner:一个用于对论点挖掘数据集进行处理、增强、训练和推理的 PyTorch 的包

Full arrangement (medium difficulty)

室外LED屏幕防水吗?

高效!用虚拟用户搭建FTP工作环境

Practice of retro SOAP Protocol

Agile development / agile testing experience

在 Apache 上配置 WebDAV 服务器

Master the use of auto analyze in data warehouse

【FAQ】华为帐号服务报错 907135701的常见原因总结和解决方法