
Reflections on PyTorch backpropagation

2022-07-27 07:06:00 【Mr_ health】

We implement a simple fully connected network with a single weight $w$ and a bias $b$:

$$\hat{y} = wx + b$$

The loss function is the classic L2 (mean squared error) loss over the $N$ samples:

$$L = \frac{1}{N}\sum_{i=1}^{N}(\hat{y}_i - y_i)^2$$

The code is as follows. Here the bias is set to 0, so the forward pass is just `self.w * x`:

```python
import torch
import torch.nn as nn


class network(nn.Module):
    def __init__(self):
        super().__init__()
        # A single trainable weight initialised to 1.0; no bias term (bias fixed at 0)
        self.w = nn.Parameter(torch.tensor([1.0]))

    def forward(self, x):
        return self.w * x


def gradient(x, y, w):
    # Per-sample derivative of (x*w - y)^2 with respect to w
    return 2 * x * (x * w - y)


if __name__ == '__main__':
    model = network()
    x_data = torch.tensor([1.0, 2.0, 3.0])
    y_data = torch.tensor([2.0, 4.0, 6.0])

    output = model(x_data)
    loss = (output - y_data).pow(2).mean()
    loss.backward()  # backpropagation via autograd

    # Manual backpropagation: average the per-sample gradients
    grads = gradient(x_data, y_data, model.w.detach()).mean()

    print(model.w.grad)  # gradient computed by autograd
    print(grads)         # gradient computed by hand
```

The two results agree: both are -9.3333.
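As an independent check that does not rely on autograd, here is a minimal pure-Python sketch, assuming the same data and $w = 1$. It compares the closed-form gradient with a central finite difference. `mse`, `analytic_grad` and `numeric_grad` are hypothetical helper names introduced only for this illustration.

```python
def mse(w, x, y):
    """Mean squared error of the bias-free model y_hat = w * x."""
    return sum((w * xi - yi) ** 2 for xi, yi in zip(x, y)) / len(x)


def analytic_grad(w, x, y):
    """Closed form: mean over samples of 2 * x_i * (w * x_i - y_i)."""
    return sum(2 * xi * (w * xi - yi) for xi, yi in zip(x, y)) / len(x)


def numeric_grad(w, x, y, h=1e-6):
    """Central finite difference (L(w + h) - L(w - h)) / (2h)."""
    return (mse(w + h, x, y) - mse(w - h, x, y)) / (2 * h)


x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]
print(analytic_grad(1.0, x_data, y_data))
print(numeric_grad(1.0, x_data, y_data))
```

Because the loss is quadratic in $w$, the central difference matches the closed form up to floating-point rounding, and both come out at about -9.3333.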

Derivation:
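Here is a sketch of the derivation, assuming the bias-free model and the mean squared loss used in the code:

$$
L(w) = \frac{1}{N}\sum_{i=1}^{N}(wx_i - y_i)^2,
\qquad
\frac{\partial L}{\partial w} = \frac{1}{N}\sum_{i=1}^{N} 2x_i(wx_i - y_i)
$$

With $w = 1$, $x = (1, 2, 3)$ and $y = (2, 4, 6)$, the per-sample terms $2x_i(wx_i - y_i)$ are $-2$, $-8$ and $-18$, so

$$
\frac{\partial L}{\partial w} = \frac{-2 - 8 - 18}{3} = -\frac{28}{3} \approx -9.3333
$$

The `gradient` function computes exactly these per-sample terms, and `.mean()` supplies the $1/N$ factor.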

Now let's modify the loss so that the samples carry different weights, increasing the weight of the last one. With the form below, the first two samples each get weight 1/4 and the last sample gets weight 1/2. Seen this way, the standard mean loss used above also backpropagates a weighted sum of per-sample gradients, except that every sample is weighted equally (1/3 each).
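To make the "per-sample weight" view concrete, here is a minimal pure-Python sketch, assuming the bias-free model and data from the code. `weighted_grad` is a hypothetical helper, not a PyTorch function. It returns the gradient of a weighted sum of squared errors. The standard mean loss corresponds to weights of 1/3 each, and the modified loss corresponds to weights of 1/4, 1/4 and 1/2.

```python
def weighted_grad(x, y, w, weights):
    """d/dw of sum_i weights[i] * (w * x[i] - y[i])**2 for a bias-free linear model."""
    return sum(a * 2 * xi * (w * xi - yi) for a, xi, yi in zip(weights, x, y))


x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]
w = 1.0

# Standard mean loss: every sample weighted 1/3
print(weighted_grad(x_data, y_data, w, [1 / 3, 1 / 3, 1 / 3]))
# Modified loss (loss[0:2].mean() + loss[2]) / 2: weights 1/4, 1/4, 1/2
print(weighted_grad(x_data, y_data, w, [0.25, 0.25, 0.5]))
```

Under this view, any loss built by averaging sub-means is just a particular choice of per-sample weights.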

```python
# Reuses the imports and the network class from the previous listing
model = network()
x_data = torch.tensor([1.0, 2.0, 3.0])
y_data = torch.tensor([2.0, 4.0, 6.0])

output = model(x_data)
loss = (output - y_data).pow(2)            # per-sample squared errors
loss = (loss[0:2].mean() + loss[2]) / 2    # modified loss: weights 1/4, 1/4, 1/2
loss.backward()

print(model.w.grad)
```

The result is -11.5. Now let's compute it by hand:
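A worked version of the hand calculation, assuming the same data and $w = 1$. The per-sample gradients are $g_i = 2x_i(wx_i - y_i) = (-2, -8, -18)$, and the modified loss weights them by $1/4$, $1/4$ and $1/2$:

$$
\frac{\partial L}{\partial w} = \frac{1}{2}\left(\frac{g_1 + g_2}{2} + g_3\right) = \frac{1}{4}(-2) + \frac{1}{4}(-8) + \frac{1}{2}(-18) = -0.5 - 2 - 9 = -11.5
$$

This matches the value returned by autograd.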

边栏推荐

- Interpretation of deepsort source code (IV)

- Campus news release management system based on SSM

- 工控用Web组态软件比组态软件更高效

- OpenGL development with QT (I) drawing plane graphics

- Li Hongyi 2020 deep learning and human language processing dlhlp conditional generation by RNN and attention-p22

- How to delete or replace the loading style of easyplayer streaming media player?

- Account management and authority

- 基于SSM图书借阅管理系统

- ZnS DNA QDs near infrared zinc sulfide ZnS quantum dots modified deoxyribonucleic acid dna|dna modified ZnS quantum dots

- C#时间相关操作

猜你喜欢

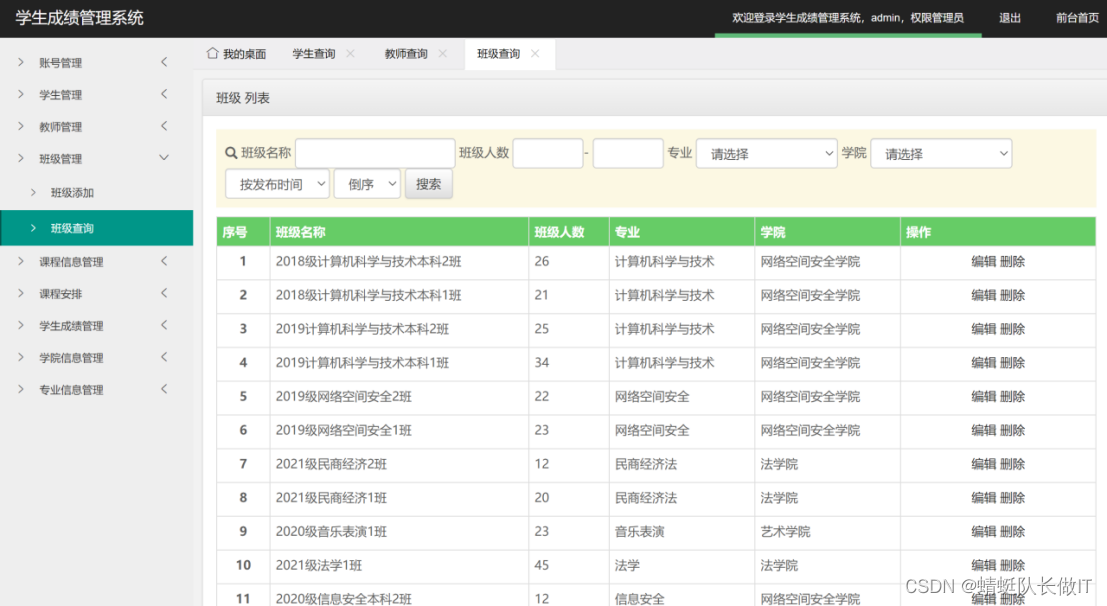

基于SSM学生成绩管理系统

O2O电商线上线下一体化模式分析

ES6的新特性(2)

Derivative, partial derivative and gradient

聊聊大火的多模态

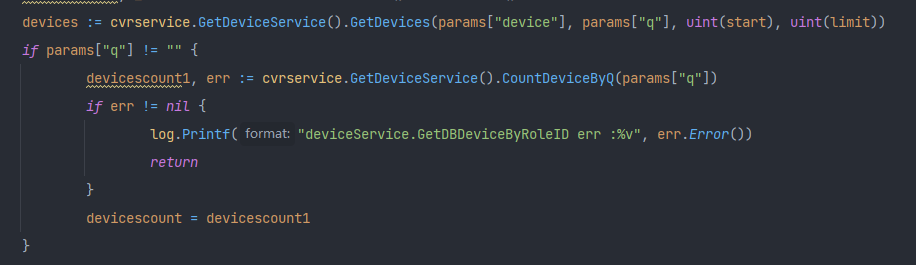

Fix the problem that the paging data is not displayed when searching the easycvr device management list page

DNA偶联PbSe量子点|近红外硒化铅PbSe量子点修饰脱氧核糖核酸DNA|PbSe-DNA QDs

Account management and authority

PNA peptide nucleic acid modified peptide suc Tyr Leu Val PNA | suc ala Pro Phe PNA 11

Express receive request parameters

随机推荐

PNA polypeptide PNA TPP | GLT ala ala Pro Leu PNA | suc ala Pro PNA | suc AAPL PNA | suc AAPM PNA

Error in running code: libboost_ filesystem.so.1.58.0: cannot open shared object file: No such file or directory

Day012 application of one-dimensional array

Vscode connection remote server development

DNA科研实验应用|环糊精修饰核酸CD-RNA/DNA|环糊精核酸探针/量子点核酸探针

C#时间相关操作

How can chrome quickly transfer a group of web pages (tabs) to another device (computer)

Cyclegan parsing

Express framework

Analysis of pix2pix principle

OpenGL development with QT (I) drawing plane graphics

CASS11.0.0.4 for AutoCAD2010-2023免狗使用方法

Web configuration software for industrial control is more efficient than configuration software

R2LIVE代码学习记录(3):对雷达特征提取

Mysql database

C语言怎么学?这篇文章给你完整答案

Variance and covariance

齐岳:巯基修饰寡聚DNA|DNA修饰CdTe/CdS核壳量子点|DNA偶联砷化铟InAs量子点InAs-DNA QDs

DNA modified zinc oxide | DNA modified gold nanoparticles | DNA coupled modified carbon nanomaterials

运行代码报错: libboost_filesystem.so.1.58.0: cannot open shared object file: No such file or directory