
Multithreading tutorial (XXVII): CPU cache and false sharing

2022-06-11 05:30:00 [Have you become a great God today]


1. CPU cache structure

Viewing the CPU cache information:

```
$ lscpu
Architecture: x86_64
CPU op-mode(s): 32-bit, 64-bit
Byte Order: Little Endian
CPU(s): 1
On-line CPU(s) list: 0
Thread(s) per core: 1
Core(s) per socket: 1
Socket(s): 1
NUMA node(s): 1
Vendor ID: GenuineIntel
CPU family: 6
Model: 142
Model name: Intel(R) Core(TM) i7-8565U CPU @ 1.80GHz
Stepping: 11
CPU MHz: 1992.002
BogoMIPS: 3984.00
Hypervisor vendor: VMware
Virtualization type: full
L1d cache: 32K
L1i cache: 32K
L2 cache: 256K
L3 cache: 8192K
NUMA node0 CPU(s): 0
```

Speed comparison

| From the CPU to | Approx. clock cycles |
|---|---|
| Register | 1 |
| L1 | 3~4 |
| L2 | 10~20 |
| L3 | 40~45 |
| Memory | 120~240 |

Registers are located inside the CPU itself, so register access is the fastest of all.

The length of a clock cycle depends on the CPU's clock frequency: at 4 GHz, one cycle is about 0.25 ns.
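To make the table concrete, here is a small sketch that converts its cycle counts into nanoseconds. It assumes the 4 GHz clock from the example above (real CPUs vary, and the i7-8565U shown by lscpu runs at a different frequency); the class and method names are illustrative only.

```java
// Converts the latency table's cycle counts into nanoseconds.
// Assumption: a fixed 4 GHz clock, as in the example in the text.
public class CycleToNanos {
    // One cycle lasts 1 / frequency; with the frequency in GHz the result is in ns.
    static double nanosPerCycle(double gigahertz) {
        return 1.0 / gigahertz;
    }

    public static void main(String[] args) {
        double ghz = 4.0;
        double ns = nanosPerCycle(ghz);
        System.out.printf("At %.1f GHz one cycle is about %.2f ns%n", ghz, ns);

        // Lower and upper cycle counts taken from the table above.
        String[] levels = {"Register", "L1", "L2", "L3", "Memory"};
        int[][] cycles = {{1, 1}, {3, 4}, {10, 20}, {40, 45}, {120, 240}};
        for (int i = 0; i < levels.length; i++) {
            System.out.printf("%-8s %6.2f ~ %6.2f ns%n",
                    levels[i], cycles[i][0] * ns, cycles[i][1] * ns);
        }
    }
}
```

At 4 GHz this puts main memory roughly 30 to 60 ns away, compared with about 1 ns for L1.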

Because the CPU is far faster than main memory, data is read from memory into the cache ahead of time (prefetched) to keep the CPU busy and improve overall efficiency.

The cache works in units of cache lines. Each cache line corresponds to a block of memory, usually 64 bytes (enough for 8 long values).
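One way to observe cache lines from Java is to sum the same 2D array in two different orders. This is a rough sketch rather than a rigorous benchmark (no JMH, no warm-up). It assumes 64-byte lines, and the array size N and class name are arbitrary choices for illustration. Row-major order walks each row sequentially, so every line brought into the cache serves eight consecutive longs; column-major order jumps to a different row array on every access and uses only 8 bytes of each line it loads.

```java
// Sums one 2D long array in row-major and column-major order.
// Timings are indicative only and depend on the machine and JIT.
public class CacheLineTraversal {
    static final int N = 2048; // 2048 x 2048 longs = 32 MB

    // Consecutive j values are adjacent in memory: one 64-byte line covers 8 of them.
    static long sumRowMajor(long[][] a) {
        long sum = 0;
        for (int i = 0; i < a.length; i++)
            for (int j = 0; j < a[i].length; j++)
                sum += a[i][j];
        return sum;
    }

    // Each step moves to another row array, so most accesses touch a new line.
    // Assumes a rectangular array (every row has a[0].length elements).
    static long sumColumnMajor(long[][] a) {
        long sum = 0;
        for (int j = 0; j < a[0].length; j++)
            for (int i = 0; i < a.length; i++)
                sum += a[i][j];
        return sum;
    }

    public static void main(String[] args) {
        long[][] a = new long[N][N];
        for (int i = 0; i < N; i++)
            for (int j = 0; j < N; j++)
                a[i][j] = i + j;

        long t0 = System.nanoTime();
        long s1 = sumRowMajor(a);
        long t1 = System.nanoTime();
        long s2 = sumColumnMajor(a);
        long t2 = System.nanoTime();

        System.out.println("row-major    sum=" + s1 + " time=" + (t1 - t0) / 1_000_000 + " ms");
        System.out.println("column-major sum=" + s2 + " time=" + (t2 - t1) / 1_000_000 + " ms");
    }
}
```

Both passes compute the same sum. On typical hardware the column-major pass is usually noticeably slower, though the exact ratio varies.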

Caching creates copies of data: the same data may be held in cache lines belonging to different cores.

To keep data consistent, when one CPU core modifies data, the entire corresponding cache line in every other core must be invalidated.

For a more detailed explanation of the CPU caching mechanism, see this blog post, which is very good.

2. False sharing

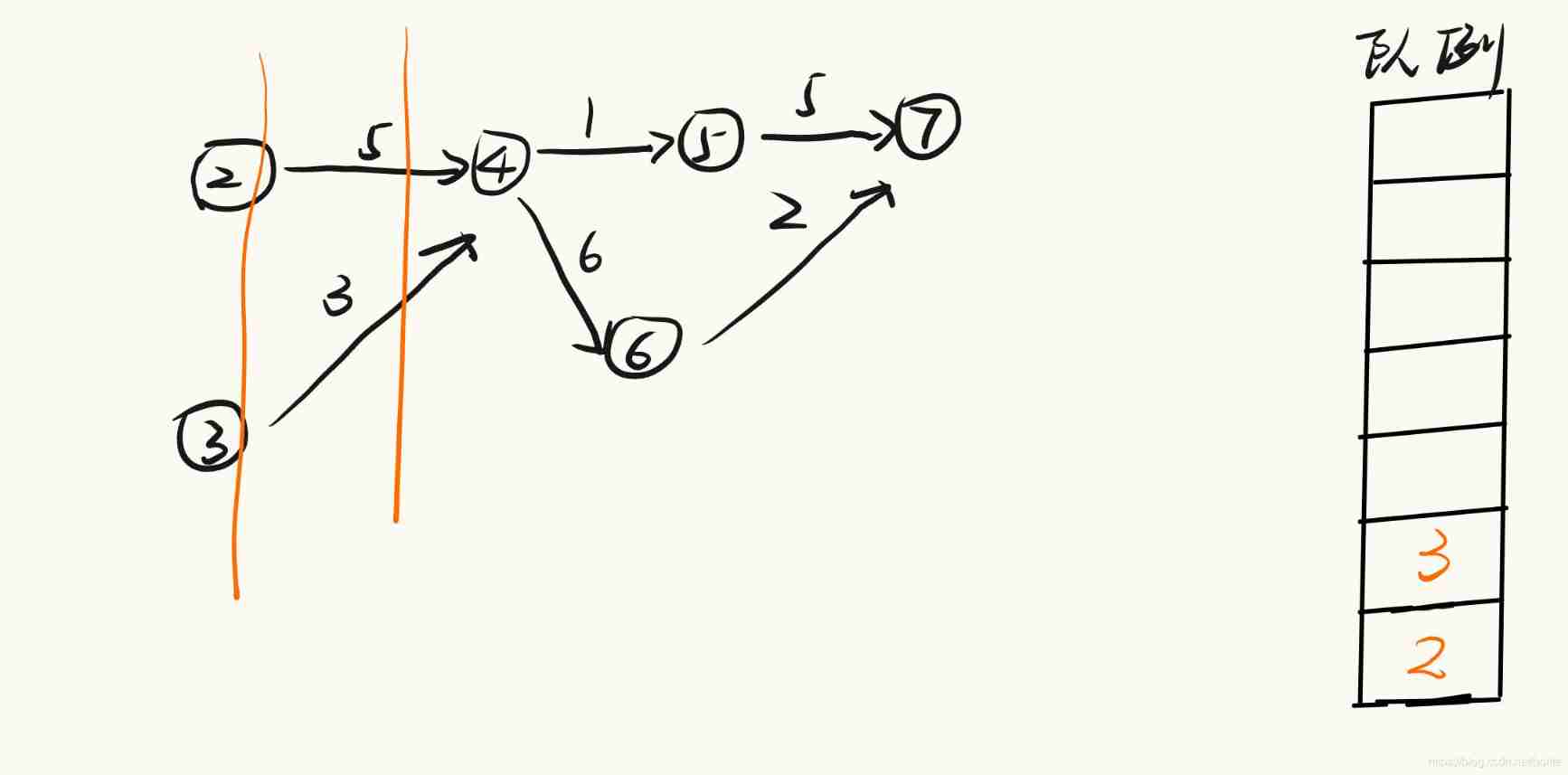

As mentioned earlier, the cache works in units of cache lines; each cache line corresponds to a block of memory, usually 64 bytes (8 longs).

If each piece of data is smaller than a cache line, for example 32 bytes (half of 64 bytes), one cache line holds two pieces of data. When either of them changes, the whole cache line is invalidated, including in other cores that only use the other piece. This is false sharing.

Take LongAdder, introduced in the previous installment, as an example: a Cell is an accumulation unit, and to improve throughput LongAdder maintains several Cells.
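For reference, this is how LongAdder (java.util.concurrent.atomic.LongAdder) is typically used. The Cells are internal, so application code only calls increment() or add() and reads the total with sum(). The method name countWithAdder is illustrative.

```java
import java.util.concurrent.atomic.LongAdder;
import java.util.stream.IntStream;

public class LongAdderDemo {
    // Increments one shared LongAdder from the common ForkJoinPool's threads.
    static long countWithAdder(int increments) {
        LongAdder adder = new LongAdder();
        // Under contention, LongAdder spreads updates over its internal Cells
        // instead of having every thread retry a CAS on a single value.
        IntStream.range(0, increments).parallel().forEach(i -> adder.increment());
        // sum() adds the base value and every Cell; the result is exact here
        // because forEach has returned and no thread is still updating.
        return adder.sum();
    }

    public static void main(String[] args) {
        System.out.println(countWithAdder(1_000_000)); // prints 1000000
    }
}
```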

Because the Cells are kept in an array, they tend to be stored contiguously in memory. One Cell takes 24 bytes (a 16-byte object header plus an 8-byte value), so one cache line can hold two Cell objects. This raises a problem:

- Core-0 wants to modify Cell[0]
- Core-1 wants to modify Cell[1]

Whichever core succeeds in its modification invalidates the other core's cache line. For example, if Core-0 accumulates Cell[0]=6000, Cell[1]=8000 into Cell[0]=6001, Cell[1]=8000, Core-1's copy of that cache line is invalidated.
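The Core-0 / Core-1 scenario can be modelled with a deliberately simplified toy. It inspects no real hardware or JVM layout and is not how a coherence protocol such as MESI is actually implemented. The hypothetical assumptions are: 64-byte lines, Cells laid out back to back from a 64-byte-aligned address, and one rule, namely that a write by one core drops that line from the other core's cache. It counts invalidations for the 24-byte layout described above and for a layout with 128 bytes of padding on each side of every Cell.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Toy model only: cache lines are just integers in a per-core set.
public class FalseSharingModel {
    static final int LINE = 64;  // assumed cache line size in bytes
    static final int CELL = 24;  // 16-byte header + 8-byte value
    static final int PAD = 128;  // padding before and after each Cell when padded

    // Byte offset where Cell[index] starts, relative to a 64-byte-aligned base.
    static int startOffset(int index, boolean padded) {
        int stride = padded ? PAD + CELL + PAD : CELL;
        return index * stride + (padded ? PAD : 0);
    }

    // Core-0 writes Cell[0] and Core-1 writes Cell[1], alternating for the given
    // number of rounds. Returns how often a write dropped the other core's line.
    static int countInvalidations(boolean padded, int rounds) {
        List<Set<Integer>> caches = new ArrayList<>();
        caches.add(new HashSet<>()); // lines cached by Core-0
        caches.add(new HashSet<>()); // lines cached by Core-1
        int invalidations = 0;
        for (int r = 0; r < rounds; r++) {
            for (int core = 0; core < 2; core++) {
                int start = startOffset(core, padded); // Core-n writes Cell[n]
                int other = 1 - core;
                // A Cell may straddle two lines, so visit every line it touches.
                for (int line = start / LINE; line <= (start + CELL - 1) / LINE; line++) {
                    caches.get(core).add(line);           // the writer now holds the line
                    if (caches.get(other).remove(line)) { // the other core's copy is invalidated
                        invalidations++;
                    }
                }
            }
        }
        return invalidations;
    }

    public static void main(String[] args) {
        System.out.println("24-byte Cells: " + countInvalidations(false, 1000)); // 1999
        System.out.println("padded Cells:  " + countInvalidations(true, 1000));  // 0
    }
}
```

With 24-byte Cells both Cells sit in line 0, so every write except the very first invalidates the other core (1999 of 2000 writes). With padding they land in lines 2 and 6 and never interfere.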

@sun.misc.Contended solves this problem. It works by adding 128 bytes of padding before and after the object or field that carries the annotation, so that when the CPU reads the objects into its cache they occupy different cache lines and no longer invalidate each other's lines.
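Note that sun.misc.Contended is the JDK 8 name. From JDK 9 onward the annotation is jdk.internal.vm.annotation.Contended, and for application classes outside the JDK it only takes effect when the JVM runs with -XX:-RestrictContended. LongAdder's own Cell class is annotated inside the JDK, so it receives the padding without any flag. Because those requirements make an annotated example awkward to run, the sketch below substitutes manual spacing: two counters in one AtomicLongArray, placed either in adjacent slots (which almost always share a 64-byte line) or in slots 0 and 16, which are 16 × 8 = 128 bytes apart. The class and method names are illustrative, and the timings are indicative only (no JMH, no warm-up).

```java
import java.util.concurrent.atomic.AtomicLongArray;

public class FalseSharingDemo {
    // Waits for a thread, turning InterruptedException into an unchecked exception.
    static void joinQuietly(Thread t) {
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    // Two threads each increment only their own slot; returns elapsed milliseconds.
    static long run(AtomicLongArray counters, int slotA, int slotB, int iterations) {
        Thread a = new Thread(() -> {
            for (int i = 0; i < iterations; i++) counters.incrementAndGet(slotA);
        });
        Thread b = new Thread(() -> {
            for (int i = 0; i < iterations; i++) counters.incrementAndGet(slotB);
        });
        long start = System.nanoTime();
        a.start();
        b.start();
        joinQuietly(a);
        joinQuietly(b);
        return (System.nanoTime() - start) / 1_000_000;
    }

    public static void main(String[] args) {
        int n = 20_000_000;
        // Slots 0 and 1: adjacent longs, normally in the same cache line.
        long adjacent = run(new AtomicLongArray(17), 0, 1, n);
        // Slots 0 and 16: 128 bytes apart, so never in the same 64-byte line.
        long spaced = run(new AtomicLongArray(17), 0, 16, n);
        System.out.println("adjacent slots:        " + adjacent + " ms");
        System.out.println("slots 128 bytes apart: " + spaced + " ms");
    }
}
```

On a multi-core machine the adjacent run is usually the slower one. On the single-vCPU VM shown in the lscpu output above, the two threads never run truly in parallel, so little difference should be expected there.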

reference :

边栏推荐

- code

- About custom comparison methods of classes and custom methods of sort functions

- 项目-智慧城市

- 微信小程序,购买商品属性自动换行,固定div个数,超出部分自动换行

- Emnlp2021 𞓜 a small number of data relation extraction papers of deepblueai team were hired

- PCB走線到底能承載多大電流

- 1. use alicloud object OSS (basic)

- Swap numbers (no temporary variables)

- 49. grouping of acronyms

- Manually splicing dynamic JSON strings

猜你喜欢

jvm调优五:jvm调优工具和调优实战

Exploration of kangaroo cloud data stack on spark SQL optimization based on CBO

推荐一款免费的内网穿透开源软件,可以在测试本地开发微信公众号使用

智能门锁为什么会这么火,米家和智汀的智能门锁怎么样呢?

Topological sorting

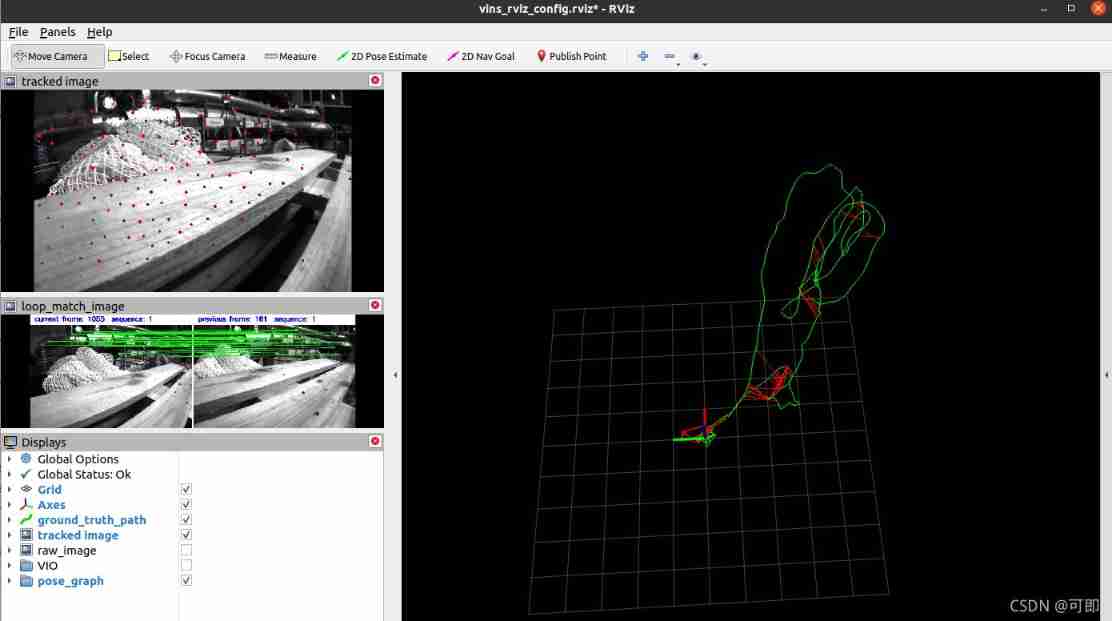

Zed2 running vins-mono preliminary test

Basics of customized view

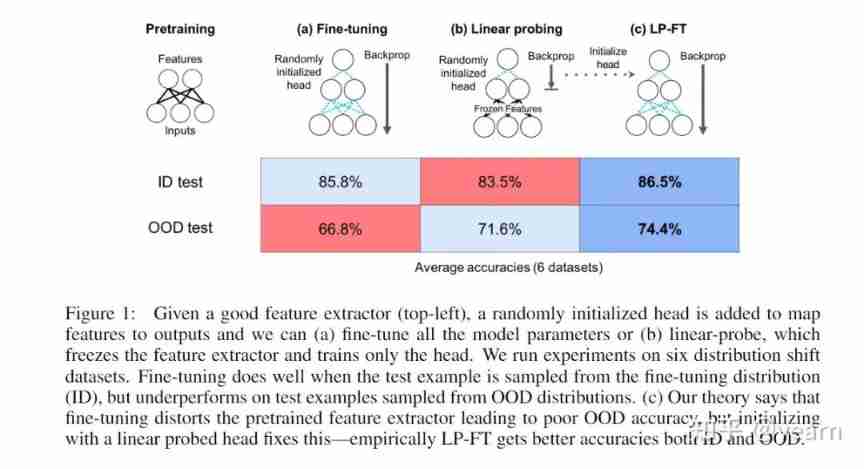

Inventory | ICLR 2022 migration learning, visual transformer article summary

AAAI2022-ShiftVIT: When Shift Operation Meets Vision Transformer

Big meal count (time complexity) -- leetcode daily question

随机推荐

创建酷炫的 CollectionViewCell 转换动画

Combing route - Compaction Technology

微信小程序text内置组件换行符不换行的原因-wxs处理换行符,正则加段首空格

Recursively process data accumulation

Oh my Zsh correct installation posture

[NIPS2021]MLP-Mixer: An all-MLP Architecture for Vision

微信自定义组件---样式--插槽

wxParse解析iframe播放视频

Zed2 camera manual

Preliminary test of running vins-fusion with zed2 binocular camera

微信小程序,购买商品属性自动换行,固定div个数,超出部分自动换行

1.使用阿里云对象OSS(初级)

27. Remove elements

IOU series (IOU, giou, Diou, CIO)

Recommend a free intranet penetration open source software that can be used in the local wechat official account under test

Es IK installation error

Thesis 𞓜 jointly pre training transformers on unpaired images and text

getBackgroundAudioManager控制音乐播放(类名的动态绑定)

袋鼠云数栈基于CBO在Spark SQL优化上的探索

工具类ObjectUtil常用的方法