当前位置:网站首页>【Opencv450】HOG+SVM 与Hog+cascade进行行人检测

【Opencv450】HOG+SVM 与Hog+cascade进行行人检测

2022-07-01 19:24:00 【十年一梦实验室】

因为从opencv3.0开始不再支持hog+cascade级联分类器。github上有人从opencv2.x导出了个hogcascade类,引入opencv4.x可以使用。

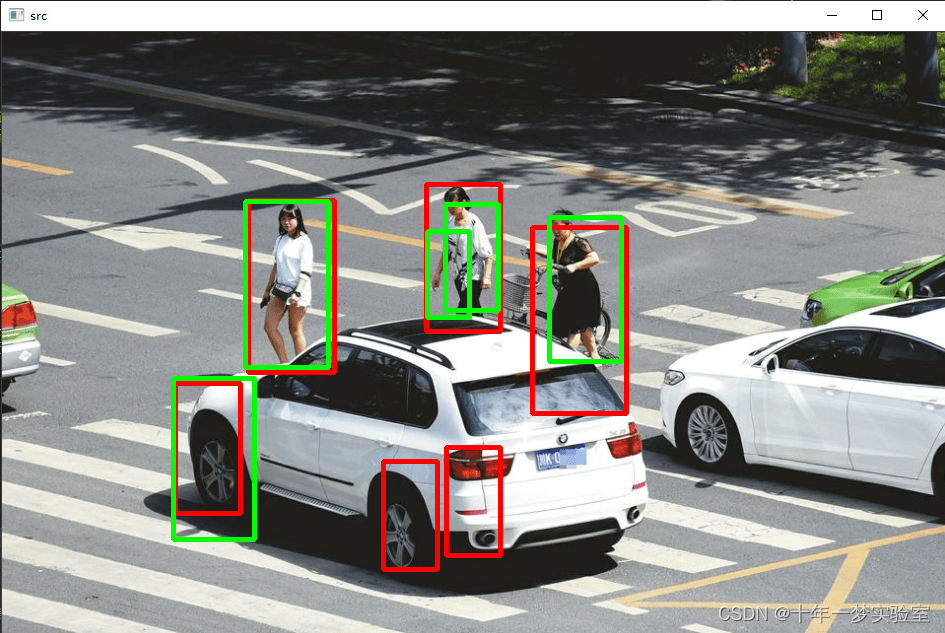

行人检测

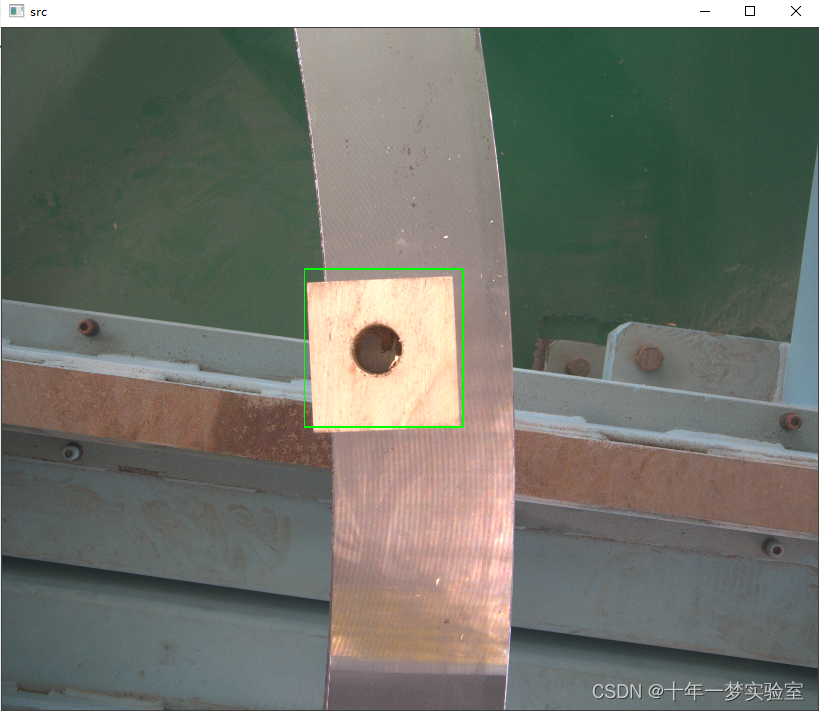

带孔木块级联分类器识别效果

主程序源码:

#include <iostream>

#include <string>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/objdetect/objdetect.hpp>

#include <opencv2/ml/ml.hpp>

#include<ctime>

#include "hogcascade.hpp"

using namespace std;

using namespace cv;

int main()

{

cout << "Red:Hog+svm------Green:Hog+cascade" << endl;

Mat src = imread("1.jpg", 1);

vector<Rect> found1, found_filtered1, found2, found_filtered2;//矩形框数组

clock_t start1, end1, start2, end2;

//方法1,Hog+svm

start1 = clock();

HOGDescriptor hog;//HOG特征检测器

hog.setSVMDetector(HOGDescriptor::getDefaultPeopleDetector());//设置SVM分类器为默认参数

hog.detectMultiScale(src, found1, 0, Size(2, 2), Size(0, 0), 1.05, 2);//对图像进行多尺度检测,检测窗口移动步长为(8,8)

end1 = (double)(1000 * (clock() - start1) / CLOCKS_PER_SEC);

//方法2.Hog+cascade

start2 = clock();

cv::HOGCascadeClassifier hogclassifier;

//CascadeClassifier* cascade = new CascadeClassifier;

hogclassifier.load("hogcascade_pedestrians.xml");//hogcascade_pedestrians.xml

//hogclassifier.load("blockhogcascade.xml"); //带孔木块 hog+cascade级联分类器

//hogclassifier.detectMultiScale(src, found2,1.05,5,0,Size(400,400),Size(800,800));//带孔木块

hogclassifier.detectMultiScale(src, found2);

end2 = (double)(1000 * (clock() - start2) / CLOCKS_PER_SEC);

cout << "Hog+svm: " << end1 << "ms" << " Hog+cascade: " << end2 << "ms" << endl;

//找出所有没有嵌套的矩形框r,并放入found_filtered中,如果有嵌套的话,则取外面最大的那个矩形框放入found_filtered中

for (int i = 0; i < found1.size(); i++)

{

Rect r = found1[i];

int j = 0;

for (; j < found1.size(); j++)

if (j != i && (r & found1[j]) == r)

break;

if (j == found1.size())

found_filtered1.push_back(r);

}

for (int i = 0; i < found2.size(); i++)

{

Rect r = found2[i];

int j = 0;

for (; j < found2.size(); j++)

if (j != i && (r & found2[j]) == r)

break;

if (j == found2.size())

found_filtered2.push_back(r);

}

//画矩形框,因为hog检测出的矩形框比实际人体框要稍微大些,所以这里需要做一些调整

for (int i = 0; i < found_filtered1.size(); i++)

{

Rect r = found_filtered1[i];

r.x += cvRound(r.width * 0.1);

r.width = cvRound(r.width * 0.8);

r.y += cvRound(r.height * 0.07);

r.height = cvRound(r.height * 0.8);

rectangle(src, r.tl(), r.br(), Scalar(0, 0, 255), 3);//红色 hog+svm

}

for (int i = 0; i < found_filtered2.size(); i++)

{

Rect r = found_filtered2[i];

r.x += cvRound(r.width * 0.1);

r.width = cvRound(r.width * 0.8);

r.y += cvRound(r.height * 0.07);

r.height = cvRound(r.height * 0.8);

rectangle(src, r.tl(), r.br(), Scalar(0, 255, 0), 3);//绿色: hog+cascade

}

//resize(src,src,Size(src.size()/3)); //带孔木块识别 缩放

imshow("src", src);

waitKey();

system("pause");

return 0;

}

hogcascade.h

/*M///

//

// IMPORTANT: READ BEFORE DOWNLOADING, COPYING, INSTALLING OR USING.

//

// By downloading, copying, installing or using the software you agree to this license.

// If you do not agree to this license, do not download, install,

// copy or use the software.

//

//

// Intel License Agreement

// For Open Source Computer Vision Library

//

// Copyright (C) 2000, Intel Corporation, all rights reserved.

// Third party copyrights are property of their respective owners.

//

// Redistribution and use in source and binary forms, with or without modification,

// are permitted provided that the following conditions are met:

//

// * Redistribution's of source code must retain the above copyright notice,

// this list of conditions and the following disclaimer.

//

// * Redistribution's in binary form must reproduce the above copyright notice,

// this list of conditions and the following disclaimer in the documentation

// and/or other materials provided with the distribution.

//

// * The name of Intel Corporation may not be used to endorse or promote products

// derived from this software without specific prior written permission.

//

// This software is provided by the copyright holders and contributors "as is" and

// any express or implied warranties, including, but not limited to, the implied

// warranties of merchantability and fitness for a particular purpose are disclaimed.

// In no event shall the Intel Corporation or contributors be liable for any direct,

// indirect, incidental, special, exemplary, or consequential damages

// (including, but not limited to, procurement of substitute goods or services;

// loss of use, data, or profits; or business interruption) however caused

// and on any theory of liability, whether in contract, strict liability,

// or tort (including negligence or otherwise) arising in any way out of

// the use of this software, even if advised of the possibility of such damage.

//

//M*/

#pragma once

#include <opencv2/opencv.hpp>

#include <string>

namespace cv

{

using std::vector;

#define CCC_CASCADE_PARAMS "cascadeParams"

#define CCC_STAGE_TYPE "stageType"

#define CCC_FEATURE_TYPE "featureType"

#define CCC_HEIGHT "height"

#define CCC_WIDTH "width"

#define CCC_STAGE_NUM "stageNum"

#define CCC_STAGES "stages"

#define CCC_STAGE_PARAMS "stageParams"

#define CCC_BOOST "BOOST"

#define CCC_MAX_DEPTH "maxDepth"

#define CCC_WEAK_COUNT "maxWeakCount"

#define CCC_STAGE_THRESHOLD "stageThreshold"

#define CCC_WEAK_CLASSIFIERS "weakClassifiers"

#define CCC_INTERNAL_NODES "internalNodes"

#define CCC_LEAF_VALUES "leafValues"

#define CCC_FEATURES "features"

#define CCC_FEATURE_PARAMS "featureParams"

#define CCC_MAX_CAT_COUNT "maxCatCount"

#define CCV_SUM_PTRS( p0, p1, p2, p3, sum, rect, step ) \

/* (x, y) */ \

(p0) = sum + (rect).x + (step) * (rect).y, \

/* (x + w, y) */ \

(p1) = sum + (rect).x + (rect).width + (step) * (rect).y, \

/* (x + w, y) */ \

(p2) = sum + (rect).x + (step) * ((rect).y + (rect).height), \

/* (x + w, y + h) */ \

(p3) = sum + (rect).x + (rect).width + (step) * ((rect).y + (rect).height)

//#define CCV_TILTED_PTRS( p0, p1, p2, p3, tilted, rect, step ) \

// /* (x, y) */ \

// (p0) = tilted + (rect).x + (step) * (rect).y, \

// /* (x - h, y + h) */ \

// (p1) = tilted + (rect).x - (rect).height + (step) * ((rect).y + (rect).height), \

// /* (x + w, y + w) */ \

// (p2) = tilted + (rect).x + (rect).width + (step) * ((rect).y + (rect).width), \

// /* (x + w - h, y + w + h) */ \

// (p3) = tilted + (rect).x + (rect).width - (rect).height \

// + (step) * ((rect).y + (rect).width + (rect).height)

#define CCALC_SUM_(p0, p1, p2, p3, offset) \

((p0)[offset] - (p1)[offset] - (p2)[offset] + (p3)[offset])

#define CCALC_SUM(rect,offset) CCALC_SUM_((rect)[0], (rect)[1], (rect)[2], (rect)[3], offset)

//#define CCC_HAAR "HAAR"

//#define CCC_RECTS "rects"

//#define CCC_TILTED "tilted"

//

//#define CCC_LBP "LBP"

#define CCC_RECT "rect"

#define CCC_HOG "HOG"

class HOGEvaluator

{

public:

static const int HOG = 2;

struct Feature

{

Feature();

float calc( int offset ) const;

void updatePtrs( const vector<Mat>& _hist, const Mat &_normSum );

bool read( const FileNode& node );

enum { CELL_NUM = 4, BIN_NUM = 9 };

Rect rect[CELL_NUM];

int featComponent; //component index from 0 to 35

const float* pF[4]; //for feature calculation

const float* pN[4]; //for normalization calculation

};

HOGEvaluator();

virtual ~HOGEvaluator();

virtual bool read( const FileNode& node );

virtual Ptr<HOGEvaluator> clone() const;

virtual int getFeatureType() const { return HOGEvaluator::HOG; }

virtual bool setImage( const Mat& image, Size winSize );

virtual bool setWindow( Point pt );

double operator()(int featureIdx) const

{

return featuresPtr[featureIdx].calc(offset);

}

virtual double calcOrd( int featureIdx ) const

{

return (*this)(featureIdx);

}

private:

virtual void integralHistogram( const Mat& srcImage, vector<Mat> &histogram, Mat &norm, int nbins ) const;

Size origWinSize;

Ptr<vector<Feature> > features;

Feature* featuresPtr;

vector<Mat> hist;

Mat normSum;

int offset;

};

class CV_EXPORTS_W HOGCascadeClassifier

{

public:

CV_WRAP HOGCascadeClassifier();

CV_WRAP HOGCascadeClassifier(const std::string& filename);

virtual ~HOGCascadeClassifier();

CV_WRAP virtual bool empty() const;

CV_WRAP bool load(const std::string& filename);

virtual bool read( const FileNode& node );

CV_WRAP void detectMultiScale(const Mat& image,

CV_OUT std::vector<Rect>& objects,

double scaleFactor = 1.1,

int minNeighbors = 3, int flags = 0,

Size minSize = Size(),

Size maxSize = Size());

CV_WRAP void detectMultiScale( const Mat& image, vector<Rect>& objects,

vector<int>& rejectLevels,

vector<double>& levelWeights,

double scaleFactor=1.1,

int minNeighbors=3, int flags=0,

Size minSize=Size(),

Size maxSize=Size(),

bool outputRejectLevels=false );

class CV_EXPORTS MaskGenerator

{

public:

virtual ~MaskGenerator() {}

virtual cv::Mat generateMask(const cv::Mat& src)=0;

virtual void initializeMask(const cv::Mat& /*src*/) {};

};

void setMaskGenerator(Ptr<MaskGenerator> maskGenerator);

Ptr<MaskGenerator> getMaskGenerator();

protected:

Ptr<MaskGenerator> maskGenerator;

virtual int runAt( Ptr<HOGEvaluator>& feval, Point pt, double& weight );

virtual bool detectSingleScale( const Mat& image, int stripCount, Size processingRectSize,

int stripSize, int yStep, double factor, vector<Rect>& candidates,

vector<int>& rejectLevels, vector<double>& levelWeights, bool outputRejectLevels=false);

friend class HOGCascadeClassifierInvoker;

friend int HOGpredictOrdered( HOGCascadeClassifier& cascade, Ptr<HOGEvaluator> &featureEvaluator, double& weight);

friend int HOGpredictOrderedStump( HOGCascadeClassifier& cascade, Ptr<HOGEvaluator> &featureEvaluator, double& weight);

class Data

{

public:

struct CV_EXPORTS DTreeNode

{

int featureIdx;

float threshold; // for ordered features only

int left;

int right;

};

struct CV_EXPORTS DTree

{

int nodeCount;

};

struct CV_EXPORTS Stage

{

int first;

int ntrees;

float threshold;

};

bool read(const FileNode &node);

bool isStumpBased;

int stageType;

int featureType;

int ncategories;

Size origWinSize;

vector<Stage> stages;

vector<DTree> classifiers;

vector<DTreeNode> nodes;

vector<float> leaves;

vector<int> subsets;

};

Data data;

Ptr<HOGEvaluator> featureEvaluator;

};

inline HOGEvaluator::Feature :: Feature()

{

rect[0] = rect[1] = rect[2] = rect[3] = Rect();

pF[0] = pF[1] = pF[2] = pF[3] = 0;

pN[0] = pN[1] = pN[2] = pN[3] = 0;

featComponent = 0;

}

inline float HOGEvaluator::Feature :: calc( int _offset ) const

{

float res = CCALC_SUM(pF, _offset);

float normFactor = CCALC_SUM(pN, _offset);

res = (res > 0.001f) ? (res / ( normFactor + 0.001f) ) : 0.f;

return res;

}

inline void HOGEvaluator::Feature :: updatePtrs( const vector<Mat> &_hist, const Mat &_normSum )

{

int binIdx = featComponent % BIN_NUM;

int cellIdx = featComponent / BIN_NUM;

Rect normRect = Rect( rect[0].x, rect[0].y, 2*rect[0].width, 2*rect[0].height );

const float* featBuf = (const float*)_hist[binIdx].data;

size_t featStep = _hist[0].step / sizeof(featBuf[0]);

const float* normBuf = (const float*)_normSum.data;

size_t normStep = _normSum.step / sizeof(normBuf[0]);

CCV_SUM_PTRS( pF[0], pF[1], pF[2], pF[3], featBuf, rect[cellIdx], featStep );

CCV_SUM_PTRS( pN[0], pN[1], pN[2], pN[3], normBuf, normRect, normStep );

}

inline int HOGpredictOrdered( HOGCascadeClassifier& cascade, Ptr<HOGEvaluator> &_featureEvaluator, double& sum )

{

int nstages = (int)cascade.data.stages.size();

int nodeOfs = 0, leafOfs = 0;

HOGEvaluator& featureEvaluator = (HOGEvaluator&)*_featureEvaluator;

float* cascadeLeaves = &cascade.data.leaves[0];

HOGCascadeClassifier::Data::DTreeNode* cascadeNodes = &cascade.data.nodes[0];

HOGCascadeClassifier::Data::DTree* cascadeWeaks = &cascade.data.classifiers[0];

HOGCascadeClassifier::Data::Stage* cascadeStages = &cascade.data.stages[0];

for( int si = 0; si < nstages; si++ )

{

HOGCascadeClassifier::Data::Stage& stage = cascadeStages[si];

int wi, ntrees = stage.ntrees;

sum = 0;

for( wi = 0; wi < ntrees; wi++ )

{

HOGCascadeClassifier::Data::DTree& weak = cascadeWeaks[stage.first + wi];

int idx = 0, root = nodeOfs;

do

{

HOGCascadeClassifier::Data::DTreeNode& node = cascadeNodes[root + idx];

double val = featureEvaluator(node.featureIdx);

idx = val < node.threshold ? node.left : node.right;

}

while( idx > 0 );

sum += cascadeLeaves[leafOfs - idx];

nodeOfs += weak.nodeCount;

leafOfs += weak.nodeCount + 1;

}

if( sum < stage.threshold )

return -si;

}

return 1;

}

inline int HOGpredictOrderedStump( HOGCascadeClassifier& cascade, Ptr<HOGEvaluator> &_featureEvaluator, double& sum )

{

int nodeOfs = 0, leafOfs = 0;

HOGEvaluator& featureEvaluator = (HOGEvaluator&)*_featureEvaluator;

float* cascadeLeaves = &cascade.data.leaves[0];

HOGCascadeClassifier::Data::DTreeNode* cascadeNodes = &cascade.data.nodes[0];

HOGCascadeClassifier::Data::Stage* cascadeStages = &cascade.data.stages[0];

int nstages = (int)cascade.data.stages.size();

for( int stageIdx = 0; stageIdx < nstages; stageIdx++ )

{

HOGCascadeClassifier::Data::Stage& stage = cascadeStages[stageIdx];

sum = 0.0;

int ntrees = stage.ntrees;

for( int i = 0; i < ntrees; i++, nodeOfs++, leafOfs+= 2 )

{

HOGCascadeClassifier::Data::DTreeNode& node = cascadeNodes[nodeOfs];

double value = featureEvaluator(node.featureIdx);

sum += cascadeLeaves[ value < node.threshold ? leafOfs : leafOfs + 1 ];

}

if( sum < stage.threshold )

return -stageIdx;

}

return 1;

}

}

hogcascade.cpp

/*M///

//

// IMPORTANT: READ BEFORE DOWNLOADING, COPYING, INSTALLING OR USING.

//

// By downloading, copying, installing or using the software you agree to this license.

// If you do not agree to this license, do not download, install,

// copy or use the software.

//

//

// Intel License Agreement

// For Open Source Computer Vision Library

//

// Copyright (C) 2000, Intel Corporation, all rights reserved.

// Third party copyrights are property of their respective owners.

//

// Redistribution and use in source and binary forms, with or without modification,

// are permitted provided that the following conditions are met:

//

// * Redistribution's of source code must retain the above copyright notice,

// this list of conditions and the following disclaimer.

//

// * Redistribution's in binary form must reproduce the above copyright notice,

// this list of conditions and the following disclaimer in the documentation

// and/or other materials provided with the distribution.

//

// * The name of Intel Corporation may not be used to endorse or promote products

// derived from this software without specific prior written permission.

//

// This software is provided by the copyright holders and contributors "as is" and

// any express or implied warranties, including, but not limited to, the implied

// warranties of merchantability and fitness for a particular purpose are disclaimed.

// In no event shall the Intel Corporation or contributors be liable for any direct,

// indirect, incidental, special, exemplary, or consequential damages

// (including, but not limited to, procurement of substitute goods or services;

// loss of use, data, or profits; or business interruption) however caused

// and on any theory of liability, whether in contract, strict liability,

// or tort (including negligence or otherwise) arising in any way out of

// the use of this software, even if advised of the possibility of such damage.

//

//M*/

#include "hogcascade.hpp"

#include <cassert>

namespace cv

{

using std::string;

class HOGCascadeClassifierInvoker : public ParallelLoopBody

{

public:

HOGCascadeClassifierInvoker( HOGCascadeClassifier& _cc, Size _sz1, int _stripSize, int _yStep, double _factor,

vector<Rect>& _vec, vector<int>& _levels, vector<double>& _weights, bool outputLevels, const Mat& _mask, Mutex* _mtx)

{

classifier = &_cc;

processingRectSize = _sz1;

stripSize = _stripSize;

yStep = _yStep;

scalingFactor = _factor;

rectangles = &_vec;

rejectLevels = outputLevels ? &_levels : 0;

levelWeights = outputLevels ? &_weights : 0;

mask = _mask;

mtx = _mtx;

}

void operator()(const Range& range) const

{

Ptr<HOGEvaluator> evaluator = classifier->featureEvaluator->clone();

Size winSize(cvRound(classifier->data.origWinSize.width * scalingFactor), cvRound(classifier->data.origWinSize.height * scalingFactor));

int y1 = range.start * stripSize;

int y2 = min(range.end * stripSize, processingRectSize.height);

for( int y = y1; y < y2; y += yStep )

{

for( int x = 0; x < processingRectSize.width; x += yStep )

{

if ( (!mask.empty()) && (mask.at<uchar>(Point(x,y))==0)) {

continue;

}

double gypWeight;

int result = classifier->runAt(evaluator, Point(x, y), gypWeight);

if( rejectLevels )

{

if( result == 1 )

result = -(int)classifier->data.stages.size();

if( classifier->data.stages.size() + result < 4 )

{

mtx->lock();

rectangles->push_back(Rect(cvRound(x*scalingFactor), cvRound(y*scalingFactor), winSize.width, winSize.height));

rejectLevels->push_back(-result);

levelWeights->push_back(gypWeight);

mtx->unlock();

}

}

else if( result > 0 )

{

mtx->lock();

rectangles->push_back(Rect(cvRound(x*scalingFactor), cvRound(y*scalingFactor),

winSize.width, winSize.height));

mtx->unlock();

}

if( result == 0 )

x += yStep;

}

}

}

HOGCascadeClassifier* classifier;

vector<Rect>* rectangles;

Size processingRectSize;

int stripSize, yStep;

double scalingFactor;

vector<int> *rejectLevels;

vector<double> *levelWeights;

Mat mask;

Mutex* mtx;

};

bool HOGEvaluator::Feature :: read( const FileNode& node )

{

FileNode rnode = node[CCC_RECT];

FileNodeIterator it = rnode.begin();

it >> rect[0].x >> rect[0].y >> rect[0].width >> rect[0].height >> featComponent;

rect[1].x = rect[0].x + rect[0].width;

rect[1].y = rect[0].y;

rect[2].x = rect[0].x;

rect[2].y = rect[0].y + rect[0].height;

rect[3].x = rect[0].x + rect[0].width;

rect[3].y = rect[0].y + rect[0].height;

rect[1].width = rect[2].width = rect[3].width = rect[0].width;

rect[1].height = rect[2].height = rect[3].height = rect[0].height;

return true;

}

HOGEvaluator::HOGEvaluator()

{

features = Ptr<vector<Feature> >(new vector<Feature>());

}

HOGEvaluator::~HOGEvaluator()

{

}

bool HOGEvaluator::read( const FileNode& node )

{

features->resize(node.size());

featuresPtr = &(*features)[0];

FileNodeIterator it = node.begin(), it_end = node.end();

for(int i = 0; it != it_end; ++it, i++)

{

if(!featuresPtr[i].read(*it))

return false;

}

return true;

}

Ptr<HOGEvaluator> HOGEvaluator::clone() const

{

Ptr<HOGEvaluator> ret = Ptr<HOGEvaluator>(new HOGEvaluator);

ret->origWinSize = origWinSize;

ret->features = features;

ret->featuresPtr = &(*ret->features)[0];

ret->offset = offset;

ret->hist = hist;

ret->normSum = normSum;

return ret;

}

bool HOGEvaluator::setImage( const Mat& image, Size winSize )

{

int rows = image.rows + 1;

int cols = image.cols + 1;

origWinSize = winSize;

if( image.cols < origWinSize.width || image.rows < origWinSize.height )

return false;

hist.clear();

for( int bin = 0; bin < Feature::BIN_NUM; bin++ )

{

hist.push_back( Mat(rows, cols, CV_32FC1) );

}

normSum.create( rows, cols, CV_32FC1 );

integralHistogram( image, hist, normSum, Feature::BIN_NUM );

size_t featIdx, featCount = features->size();

for( featIdx = 0; featIdx < featCount; featIdx++ )

{

featuresPtr[featIdx].updatePtrs( hist, normSum );

}

return true;

}

bool HOGEvaluator::setWindow(Point pt)

{

if( pt.x < 0 || pt.y < 0 ||

pt.x + origWinSize.width >= hist[0].cols-2 ||

pt.y + origWinSize.height >= hist[0].rows-2 )

return false;

offset = pt.y * ((int)hist[0].step/sizeof(float)) + pt.x;

return true;

}

void HOGEvaluator::integralHistogram(const Mat &img, vector<Mat> &histogram, Mat &norm, int nbins) const

{

CV_Assert( img.type() == CV_8U || img.type() == CV_8UC3 );

int x, y, binIdx;

Size gradSize(img.size());

Size histSize(histogram[0].size());

Mat grad(gradSize, CV_32F);

Mat qangle(gradSize, CV_8U);

AutoBuffer<int> mapbuf(gradSize.width + gradSize.height + 4);

int* xmap = (int*)mapbuf + 1;

int* ymap = xmap + gradSize.width + 2;

const int borderType = (int)BORDER_REPLICATE;

for( x = -1; x < gradSize.width + 1; x++ )

xmap[x] = borderInterpolate(x, gradSize.width, borderType);

for( y = -1; y < gradSize.height + 1; y++ )

ymap[y] = borderInterpolate(y, gradSize.height, borderType);

int width = gradSize.width;

AutoBuffer<float> _dbuf(width*4);

float* dbuf = _dbuf;

Mat Dx(1, width, CV_32F, dbuf);

Mat Dy(1, width, CV_32F, dbuf + width);

Mat Mag(1, width, CV_32F, dbuf + width*2);

Mat Angle(1, width, CV_32F, dbuf + width*3);

float angleScale = (float)(nbins/CV_PI);

for( y = 0; y < gradSize.height; y++ )

{

const uchar* currPtr = img.data + img.step*ymap[y];

const uchar* prevPtr = img.data + img.step*ymap[y-1];

const uchar* nextPtr = img.data + img.step*ymap[y+1];

float* gradPtr = (float*)grad.ptr(y);

uchar* qanglePtr = (uchar*)qangle.ptr(y);

for( x = 0; x < width; x++ )

{

dbuf[x] = (float)(currPtr[xmap[x+1]] - currPtr[xmap[x-1]]);

dbuf[width + x] = (float)(nextPtr[xmap[x]] - prevPtr[xmap[x]]);

}

cartToPolar( Dx, Dy, Mag, Angle, false );

for( x = 0; x < width; x++ )

{

float mag = dbuf[x+width*2];

float angle = dbuf[x+width*3];

angle = angle*angleScale - 0.5f;

int bidx = cvFloor(angle);

angle -= bidx;

if( bidx < 0 )

bidx += nbins;

else if( bidx >= nbins )

bidx -= nbins;

qanglePtr[x] = (uchar)bidx;

gradPtr[x] = mag;

}

}

integral(grad, norm, grad.depth());

float* histBuf;

const float* magBuf;

const uchar* binsBuf;

int binsStep = (int)( qangle.step / sizeof(uchar) );

int histStep = (int)( histogram[0].step / sizeof(float) );

int magStep = (int)( grad.step / sizeof(float) );

for( binIdx = 0; binIdx < nbins; binIdx++ )

{

histBuf = (float*)histogram[binIdx].data;

magBuf = (const float*)grad.data;

binsBuf = (const uchar*)qangle.data;

memset( histBuf, 0, histSize.width * sizeof(histBuf[0]) );

histBuf += histStep + 1;

for( y = 0; y < qangle.rows; y++ )

{

histBuf[-1] = 0.f;

float strSum = 0.f;

for( x = 0; x < qangle.cols; x++ )

{

if( binsBuf[x] == binIdx )

strSum += magBuf[x];

histBuf[x] = histBuf[-histStep + x] + strSum;

}

histBuf += histStep;

binsBuf += binsStep;

magBuf += magStep;

}

}

}

HOGCascadeClassifier::HOGCascadeClassifier()

{

}

HOGCascadeClassifier::HOGCascadeClassifier(const std::string& filename)

{

load(filename);

}

HOGCascadeClassifier::~HOGCascadeClassifier()

{

}

bool HOGCascadeClassifier::empty() const

{

return data.stages.empty();

}

bool HOGCascadeClassifier::load(const std::string& filename)

{

data = Data();

featureEvaluator.release();

FileStorage fs(filename, FileStorage::READ);

if( !fs.isOpened() )

return false;

if( read(fs.getFirstTopLevelNode()) )

return true;

fs.release();

return false;

}

bool HOGCascadeClassifier::Data::read(const FileNode &root)

{

static const float THRESHOLD_EPS = 1e-5f;

// load stage params

string stageTypeStr = (string)root[CCC_STAGE_TYPE];

if( stageTypeStr == CCC_BOOST )

stageType = 0;

else

return false;

string featureTypeStr = (string)root[CCC_FEATURE_TYPE];

if( featureTypeStr == CCC_HOG )

featureType = HOGEvaluator::HOG;

else

return false;

origWinSize.width = (int)root[CCC_WIDTH];

origWinSize.height = (int)root[CCC_HEIGHT];

CV_Assert( origWinSize.height > 0 && origWinSize.width > 0 );

isStumpBased = (int)(root[CCC_STAGE_PARAMS][CCC_MAX_DEPTH]) == 1 ? true : false;

// load feature params

FileNode fn = root[CCC_FEATURE_PARAMS];

if( fn.empty() )

return false;

ncategories = fn[CCC_MAX_CAT_COUNT];

int subsetSize = (ncategories + 31)/32,

nodeStep = 3 + ( ncategories>0 ? subsetSize : 1 );

// load stages

fn = root[CCC_STAGES];

if( fn.empty() )

return false;

stages.reserve(fn.size());

classifiers.clear();

nodes.clear();

FileNodeIterator it = fn.begin(), it_end = fn.end();

for( int si = 0; it != it_end; si++, ++it )

{

FileNode fns = *it;

Stage stage;

stage.threshold = (float)fns[CCC_STAGE_THRESHOLD] - THRESHOLD_EPS;

fns = fns[CCC_WEAK_CLASSIFIERS];

if(fns.empty())

return false;

stage.ntrees = (int)fns.size();

stage.first = (int)classifiers.size();

stages.push_back(stage);

classifiers.reserve(stages[si].first + stages[si].ntrees);

FileNodeIterator it1 = fns.begin(), it1_end = fns.end();

for( ; it1 != it1_end; ++it1 ) // weak trees

{

FileNode fnw = *it1;

FileNode internalNodes = fnw[CCC_INTERNAL_NODES];

FileNode leafValues = fnw[CCC_LEAF_VALUES];

if( internalNodes.empty() || leafValues.empty() )

return false;

DTree tree;

tree.nodeCount = (int)internalNodes.size()/nodeStep;

classifiers.push_back(tree);

nodes.reserve(nodes.size() + tree.nodeCount);

leaves.reserve(leaves.size() + leafValues.size());

if( subsetSize > 0 )

subsets.reserve(subsets.size() + tree.nodeCount*subsetSize);

FileNodeIterator internalNodesIter = internalNodes.begin(), internalNodesEnd = internalNodes.end();

for( ; internalNodesIter != internalNodesEnd; ) // nodes

{

DTreeNode node;

node.left = (int)*internalNodesIter; ++internalNodesIter;

node.right = (int)*internalNodesIter; ++internalNodesIter;

node.featureIdx = (int)*internalNodesIter; ++internalNodesIter;

if( subsetSize > 0 )

{

for( int j = 0; j < subsetSize; j++, ++internalNodesIter )

subsets.push_back((int)*internalNodesIter);

node.threshold = 0.f;

}

else

{

node.threshold = (float)*internalNodesIter; ++internalNodesIter;

}

nodes.push_back(node);

}

internalNodesIter = leafValues.begin(), internalNodesEnd = leafValues.end();

for( ; internalNodesIter != internalNodesEnd; ++internalNodesIter ) // leaves

leaves.push_back((float)*internalNodesIter);

}

}

return true;

}

bool HOGCascadeClassifier::read(const FileNode& root)

{

if( !data.read(root) )

return false;

// load features

featureEvaluator = Ptr<HOGEvaluator>(new HOGEvaluator);

FileNode fn = root[CCC_FEATURES];

if( fn.empty() )

return false;

return featureEvaluator->read(fn);

}

bool HOGCascadeClassifier::detectSingleScale( const Mat& image, int stripCount, Size processingRectSize,

int stripSize, int yStep, double factor, vector<Rect>& candidates,

vector<int>& levels, vector<double>& weights, bool outputRejectLevels )

{

if( !featureEvaluator->setImage( image, data.origWinSize ) )

return false;

Mat currentMask;

if (!maskGenerator.empty()) {

currentMask=maskGenerator->generateMask(image);

}

vector<Rect> candidatesVector;

vector<int> rejectLevels;

vector<double> levelWeights;

Mutex mtx;

if( outputRejectLevels )

{

parallel_for_(Range(0, stripCount), HOGCascadeClassifierInvoker( *this, processingRectSize, stripSize, yStep, factor,

candidatesVector, rejectLevels, levelWeights, true, currentMask, &mtx));

levels.insert( levels.end(), rejectLevels.begin(), rejectLevels.end() );

weights.insert( weights.end(), levelWeights.begin(), levelWeights.end() );

}

else

{

parallel_for_(Range(0, stripCount), HOGCascadeClassifierInvoker( *this, processingRectSize, stripSize, yStep, factor,

candidatesVector, rejectLevels, levelWeights, false, currentMask, &mtx));

}

candidates.insert( candidates.end(), candidatesVector.begin(), candidatesVector.end() );

return true;

}

int HOGCascadeClassifier::runAt( Ptr<HOGEvaluator>& evaluator, Point pt, double& weight )

{

assert( data.featureType == HOGEvaluator::HOG );

if( !evaluator->setWindow(pt) )

return -1;

if( data.isStumpBased )

{

return HOGpredictOrderedStump( *this, evaluator, weight );

}

else

{

return HOGpredictOrdered( *this, evaluator, weight );

}

}

void HOGCascadeClassifier::detectMultiScale( const Mat& image, vector<Rect>& objects,

vector<int>& rejectLevels,

vector<double>& levelWeights,

double scaleFactor, int minNeighbors,

int flags, Size minObjectSize, Size maxObjectSize,

bool outputRejectLevels )

{

const double GROUP_EPS = 0.2;

CV_Assert( scaleFactor > 1 && image.depth() == CV_8U );

if( empty() )

return;

objects.clear();

// TODO

if (!maskGenerator.empty()) {

maskGenerator->initializeMask(image);

}

if( maxObjectSize.height == 0 || maxObjectSize.width == 0 )

maxObjectSize = image.size();

Mat grayImage = image;

if( grayImage.channels() > 1 )

{

Mat temp;

cvtColor(grayImage, temp, COLOR_BGR2GRAY);

grayImage = temp;

}

Mat imageBuffer(image.rows + 1, image.cols + 1, CV_8U);

vector<Rect> candidates;

for( double factor = 1; ; factor *= scaleFactor )

{

Size originalWindowSize = data.origWinSize;

Size windowSize( cvRound(originalWindowSize.width*factor), cvRound(originalWindowSize.height*factor) );

Size scaledImageSize( cvRound( grayImage.cols/factor ), cvRound( grayImage.rows/factor ) );

Size processingRectSize( scaledImageSize.width - originalWindowSize.width, scaledImageSize.height - originalWindowSize.height );

if( processingRectSize.width <= 0 || processingRectSize.height <= 0 )

break;

if( windowSize.width > maxObjectSize.width || windowSize.height > maxObjectSize.height )

break;

if( windowSize.width < minObjectSize.width || windowSize.height < minObjectSize.height )

continue;

Mat scaledImage( scaledImageSize, CV_8U, imageBuffer.data );

resize( grayImage, scaledImage, scaledImageSize, 0, 0, INTER_LINEAR );

int yStep = 4;

int stripCount, stripSize;

const int PTS_PER_THREAD = 1000;

stripCount = ((processingRectSize.width/yStep)*(processingRectSize.height + yStep-1)/yStep + PTS_PER_THREAD/2)/PTS_PER_THREAD;

stripCount = std::min(std::max(stripCount, 1), 100);

stripSize = (((processingRectSize.height + stripCount - 1)/stripCount + yStep-1)/yStep)*yStep;

if( !detectSingleScale( scaledImage, stripCount, processingRectSize, stripSize, yStep, factor, candidates,

rejectLevels, levelWeights, outputRejectLevels ) )

break;

}

objects.resize(candidates.size());

std::copy(candidates.begin(), candidates.end(), objects.begin());

if( outputRejectLevels )

{

groupRectangles( objects, rejectLevels, levelWeights, minNeighbors, GROUP_EPS );

}

else

{

groupRectangles( objects, minNeighbors, GROUP_EPS );

}

}

void HOGCascadeClassifier::detectMultiScale( const Mat& image, vector<Rect>& objects,

double scaleFactor, int minNeighbors,

int flags, Size minObjectSize, Size maxObjectSize)

{

vector<int> fakeLevels;

vector<double> fakeWeights;

detectMultiScale( image, objects, fakeLevels, fakeWeights, scaleFactor,

minNeighbors, flags, minObjectSize, maxObjectSize, false );

}

void HOGCascadeClassifier::setMaskGenerator(Ptr<MaskGenerator> _maskGenerator)

{

maskGenerator=_maskGenerator;

}

Ptr<HOGCascadeClassifier::MaskGenerator> HOGCascadeClassifier::getMaskGenerator()

{

return maskGenerator;

}

}参考:

https://github.com/Schmetzler/opencv3_CascadeHOG https://github.com/Schmetzler/opencv3_CascadeHOG

https://github.com/Schmetzler/opencv3_CascadeHOG

https://github.com/watersink/hog https://github.com/watersink/hog

https://github.com/watersink/hog

边栏推荐

- 喜马拉雅自研网关架构演进过程

- 《软件工程导论(第六版)》 张海藩 复习笔记

- 走进如心小镇,数智化变革连接“未来社区”

- 写博客文档

- PLC模拟量输入 模拟量转换FB S_ITR(三菱FX3U)

- 考虑关系的图卷积神经网络R-GCN的一些理解以及DGL官方代码的一些讲解

- Getting started with fastdfs

- 关联线探究,如何连接流程图的两个节点

- Iframe parent-child page communication

- STC 32-bit 8051 single chip microcomputer development example tutorial three program compilation setting and download

猜你喜欢

《软件工程导论(第六版)》 张海藩 复习笔记

8K HDR!| Hevc hard solution for chromium - principle / Measurement Guide

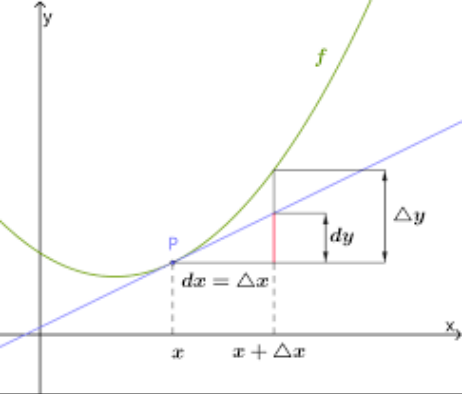

math_ Use differentiation to calculate approximate value

小鸟逃票登机,如何反思,应如何解决,飞机为何怕小鸟?

Détection des cibles - série Yolo

RichView TRVDocParameters 页面参数设置

GaussDB(for MySQL) :Partial Result Cache,通过缓存中间结果对算子进行加速

Big factories are wolves, small factories are dogs?

Simple but modern server dashboard dashdot

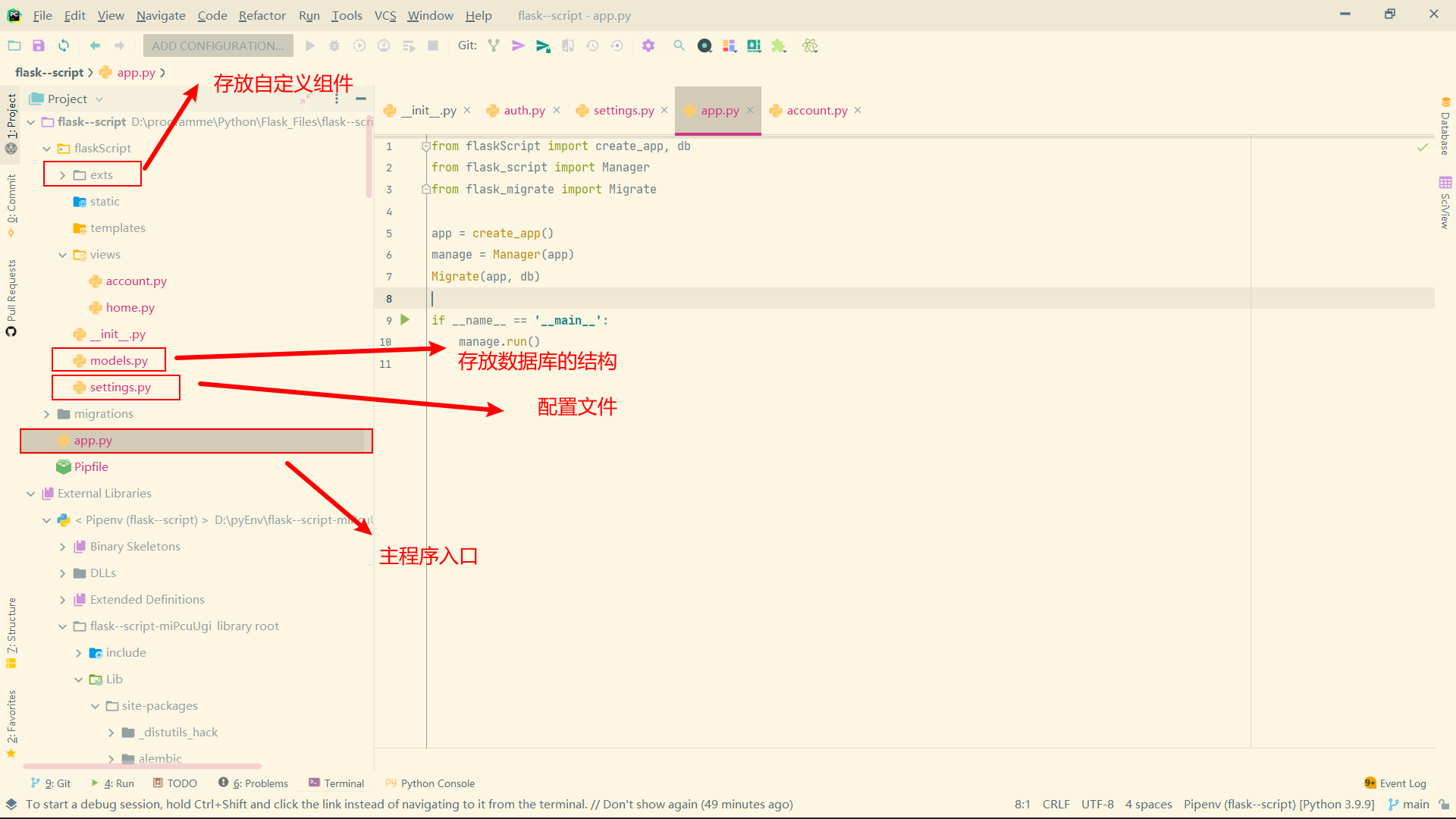

Common components of flask

随机推荐

朋友圈社区程序源码分享

【C语言】详解 memset() 函数用法

8K HDR!|为 Chromium 实现 HEVC 硬解 - 原理/实测指南

leetcode刷题:栈与队列07(滑动窗口最大值)

Stack overflow 2022 developer survey: where is the industry going?

Win11 how to hide the taskbar? Win11 method to hide the taskbar

Practical project notes (I) -- creation of virtual machine

想得到股票开户的优惠链接,如何得知?在线开户是安全么?

Internship: gradually moving towards project development

人脸识别系统 —— OpenCV人脸检测

图片拼图微信小程序源码_支持多模板制作和流量主

Big factories are wolves, small factories are dogs?

C#聯合halcon應用——大華相機采集類

Flask 常用组件

薛定谔的日语学习小程序源码

After adding cocoapods successfully, the header file cannot be imported or an error is reported in not found file

PHP获取微信小程序和小程序商店外链地址

Uniapp uses Tencent map to select points without window monitoring to return users' location information. How to deal with it

Problems encountered in installing MySQL in docker Ubuntu container

运动捕捉系统原理