
In depth: Information diffusion prediction based on sequential hypergraph neural networks

2022-06-11 19:38:00 【Aitime theory】


Diffusion cascade prediction is key to understanding how information spreads on social networks. Most methods focus on the order or structure of the infected users within a single cascade, so they ignore global dependencies among users and cascades, which limits predictive performance. Current strategies that introduce the social network capture only the social homophily between users, which is not enough to describe their preferences.

To address these problems, we propose a new information diffusion prediction method called the Memory-enhanced Sequential Hypergraph Attention Network (MS-HGAT).

Specifically, to learn users' global dependencies, we exploit not only their friendships but also their interactions at the cascade level. In addition, to capture user preferences dynamically, we divide the diffusion hypergraph into a series of subgraphs by timestamp, build hypergraph attention networks to learn the sequential hypergraphs, and connect them with a gated fusion strategy.

A memory-enhanced embedding lookup module is also proposed. It maps the learned user representations into a cascade-specific embedding space, which highlights the sequential information inside the cascade.

In this issue of the AI TIME PhD studio, we invited Sun Ling, a direct-entry PhD student (class of 2019) at Xi'an Jiaotong University, to present the talk "Information Diffusion Prediction Based on Sequential Hypergraph Neural Networks".

Sun Ling :

A direct-entry PhD student (class of 2019) at Xi'an Jiaotong University, supervised by Professor Rao Yuan. Main research interests: information diffusion modeling for social media, and misinformation detection and intervention. Related work has been published at international conferences such as AAAI, IJCAI, and ACL.

Research background

Information diffusion prediction

Given: historical diffusion data (cascade trees, which are complex and hard to obtain, or cascade sequences)

Task:

● At the micro level :

Research motivation

Representation learning based methods:

Existing methods all assume a fixed propagation pattern, but information diffusion in real scenarios may not follow predefined patterns. Current methods have two main problems:

1. Most studies ignore users' global dependencies in the process of information consumption.

Our solution: mine the global friendship relations and the global diffusion interaction relations at the same time.

Different users in these networks may interact with each other through cascades.

2. Few models can learn the dynamic relationship between users and cascades in depth.

Our solution: build a series of hypergraphs to dynamically learn the interactions between users and cascades within specific time intervals.

A hyperedge in a hypergraph can contain multiple nodes. We construct a series of hypergraphs to represent users' global-level interactions in different time intervals and to learn their dynamic preferences.
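The idea of one hypergraph per time interval can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `build_sequential_hypergraphs`, the input layout, and the choice of interval boundaries are all assumptions made for the example. Each cascade becomes one hyperedge, and each hyperedge holds the users who joined that cascade within the interval.

```python
from bisect import bisect_left
from collections import defaultdict


def build_sequential_hypergraphs(cascades, boundaries):
    """Split cascades into one hypergraph per time interval.

    cascades:   dict mapping cascade id -> list of (user, timestamp) events.
    boundaries: sorted upper bounds of the intervals (hypothetical choice);
                interval i holds timestamps t with
                boundaries[i-1] < t <= boundaries[i].
    Returns a list of dicts, one per interval, mapping each hyperedge
    (cascade id) to the set of users that joined it in that interval.
    """
    graphs = [defaultdict(set) for _ in boundaries]
    for cascade_id, events in cascades.items():
        for user, timestamp in events:
            idx = bisect_left(boundaries, timestamp)
            # Events later than the last boundary are dropped in this sketch.
            if idx < len(boundaries):
                graphs[idx][cascade_id].add(user)
    return [dict(g) for g in graphs]


cascades = {
    "c1": [("u1", 1), ("u2", 2), ("u3", 6)],
    "c2": [("u2", 3), ("u4", 7)],
}
graphs = build_sequential_hypergraphs(cascades, boundaries=[5, 10])
```

In this toy input, user `u2` belongs to two hyperedges in the first interval, which is the kind of cross-cascade interaction an ordinary pairwise graph would not express directly.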

MS-HGAT Model

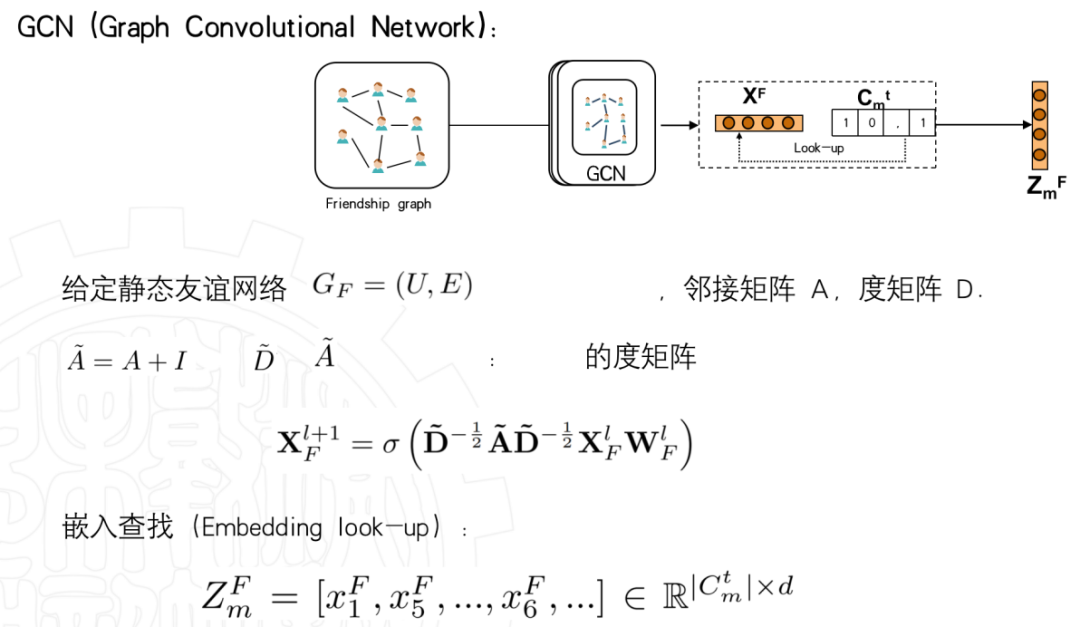

The first part statically learns from the user relationship network.

The second part uses a series of diffusion hypergraphs to learn users' dynamic interaction behavior. The memory-enhanced embedding lookup module stores the statically and dynamically learned user representations in memory blocks for later lookup.

MS-HGAT

Module 1: Learning static user dependencies

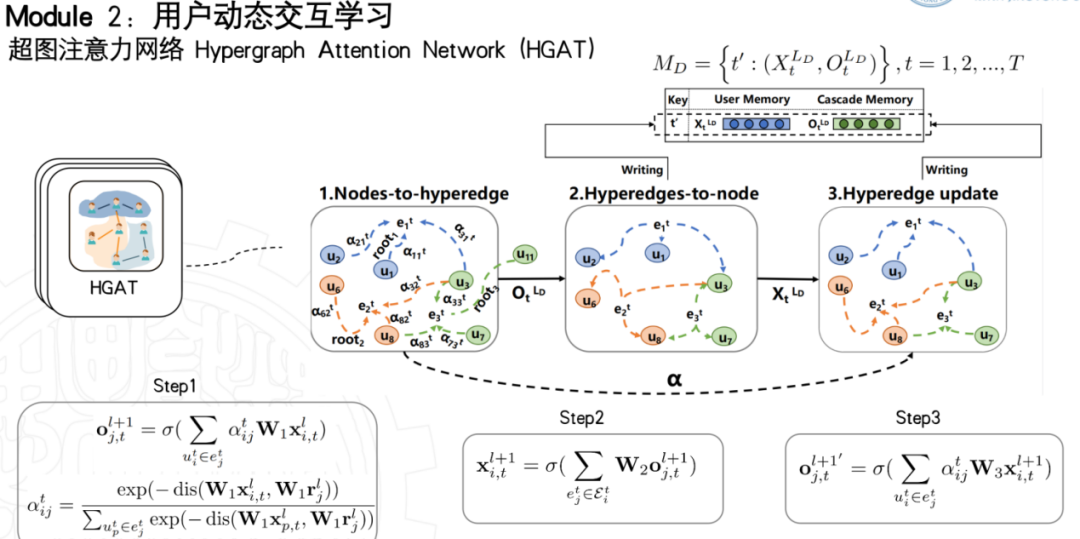

The second module is the main innovation of this study. It learns users' dynamic interactions.

In the first step, because users 1, 2, and 3 take part in the same hyperedge, attention coefficients are computed from the distance between each of these three users and the root user of the hyperedge, and the weighted aggregation of the users gives the representation of the hyperedge.

The second step aggregates the hyperedges back to the nodes. The first step aggregated nodes into edges and learned the features of the three cascades. The second step returns those edge features, learned at the global level, to the nodes, so that we finally learn each user's interaction preferences. Because each hypergraph captures only short-term user interaction behavior, this step uses plain mean aggregation instead of computing additional attention.

The third step is an update: nodes are aggregated into edges once more to update the hyperedge representations, which are then stored in the memory module.

The memory module mainly stores the features of users and hyperedges so that they can be looked up later.
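The three steps above can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the authors' implementation: the attention score is taken to be the negative squared Euclidean distance to the root user, learned projection matrices and nonlinearities are omitted, and all function names are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(vectors, weights):
    dim = len(vectors[0])
    return [sum(w * v[d] for v, w in zip(vectors, weights)) for d in range(dim)]


def nodes_to_edge(members, root, emb):
    # Assumption: users closer to the root (smaller distance) get more weight.
    scores = [-sum((a - b) ** 2 for a, b in zip(emb[u], emb[root]))
              for u in members]
    return weighted_sum([emb[u] for u in members], softmax(scores))


def edges_to_node(user, hyperedges, edge_emb):
    # Plain mean over the hyperedges the user takes part in (no attention).
    own = [edge_emb[e] for e, (_, members) in hyperedges.items()
           if user in members]
    return weighted_sum(own, [1.0 / len(own)] * len(own))


def hgat_layer(hyperedges, emb):
    """hyperedges: dict edge id -> (root user, list of member users)."""
    # Step 1: nodes -> hyperedges, attention-weighted by distance to the root.
    edge_emb = {e: nodes_to_edge(m, r, emb) for e, (r, m) in hyperedges.items()}
    # Step 2: hyperedges -> nodes, mean aggregation.
    users = {u for _, members in hyperedges.values() for u in members}
    new_emb = dict(emb)
    new_emb.update({u: edges_to_node(u, hyperedges, edge_emb) for u in users})
    # Step 3: nodes -> hyperedges again, giving the updated edge representations.
    updated = {e: nodes_to_edge(m, r, new_emb)
               for e, (r, m) in hyperedges.items()}
    return new_emb, updated


emb = {"u1": [0.0, 0.0], "u2": [1.0, 0.0], "u3": [3.0, 0.0]}
hyperedges = {"c1": ("u1", ["u1", "u2", "u3"])}
user_emb, edge_emb = hgat_layer(hyperedges, emb)
```

The two returned dictionaries correspond to what the text says the memory module stores: user features and hyperedge features.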

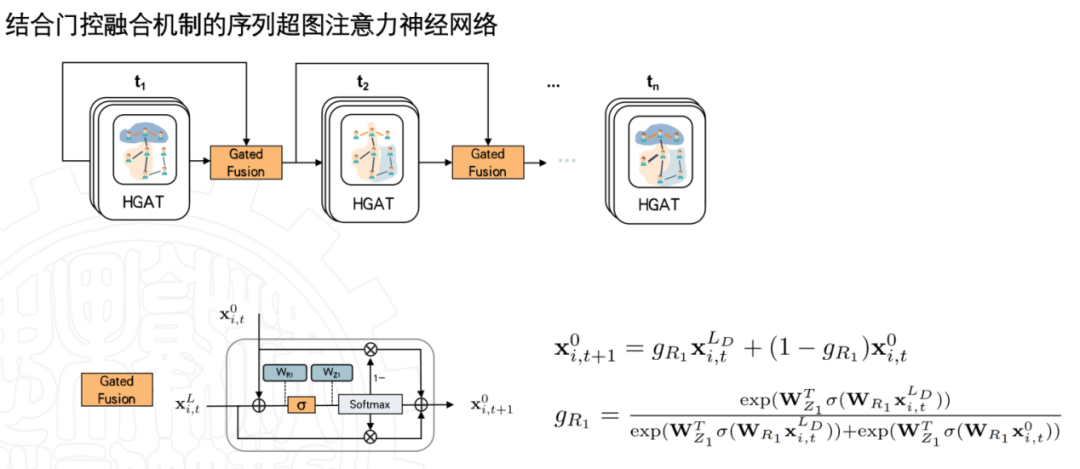

The global diffusion hypergraph is divided into several subgraphs by timestamp, and the subgraphs are connected through an adaptive gated fusion mechanism.

The input and output of the previous time interval are fused and used as the input of the next interval.
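A gated fusion of two embeddings can be sketched as below. The exact gate used in MS-HGAT is not given in this write-up, so the form here is an assumption: each candidate is scored with a shared weight vector (`score_weights`, a hypothetical stand-in for learned parameters), the two scores are normalized with a softmax, and the result is a convex combination.

```python
import math


def gated_fusion(prev, curr, score_weights):
    """Fuse two embeddings of equal length with a two-way softmax gate.

    prev, curr:    embeddings, e.g. the input and output of one interval.
    score_weights: scoring vector (assumed; learned in a real model).
    """
    s_prev = math.tanh(sum(w * x for w, x in zip(score_weights, prev)))
    s_curr = math.tanh(sum(w * x for w, x in zip(score_weights, curr)))
    # Equivalent to exp(s_prev) / (exp(s_prev) + exp(s_curr)).
    gate = 1.0 / (1.0 + math.exp(s_curr - s_prev))
    return [gate * p + (1.0 - gate) * c for p, c in zip(prev, curr)]


fused = gated_fusion([0.0, 2.0], [2.0, 0.0], score_weights=[0.0, 0.0])
```

With an all-zero scoring vector the gate is exactly 0.5 and the fusion reduces to a plain average. A gate like this keeps the output the same size as its inputs, whereas the concatenation used in the ablation study doubles it.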

Finally, an embedding lookup is performed: given a specific cascade, its static and dynamic representations are retrieved from the memory module. The dynamic lookup has to fuse the results from the user perspective and from the cascade perspective.

We learn static and dynamic cascade representations from the interaction networks, but graph neural networks cannot learn the sequential relationship between the participating users.

We therefore introduce the self-attention module from the Transformer decoder to further learn the interactions within a cascade.
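To show how self-attention brings order information back into a cascade, here is a minimal single-head sketch. It assumes a causal mask (each position attends only to itself and earlier users, as is usual in a Transformer decoder) and omits the learned query, key, and value projections and positional encodings; the function name is hypothetical.

```python
import math


def causal_self_attention(seq):
    """seq: list of user embeddings in the order the users joined the cascade.

    Returns one output vector per position, each a weighted average of the
    embeddings at that position and all earlier positions.
    """
    dim = len(seq[0])
    scale = math.sqrt(dim)
    outputs = []
    for i, query in enumerate(seq):
        # Scaled dot-product scores against positions 0..i only (causal mask).
        scores = [sum(q * k for q, k in zip(query, seq[j])) / scale
                  for j in range(i + 1)]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * seq[j][d] for j, w in enumerate(weights))
                        for d in range(dim)])
    return outputs


cascade = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = causal_self_attention(cascade)
```

Because of the mask, the representation at each step depends only on users who joined earlier, which is what next-user prediction requires.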

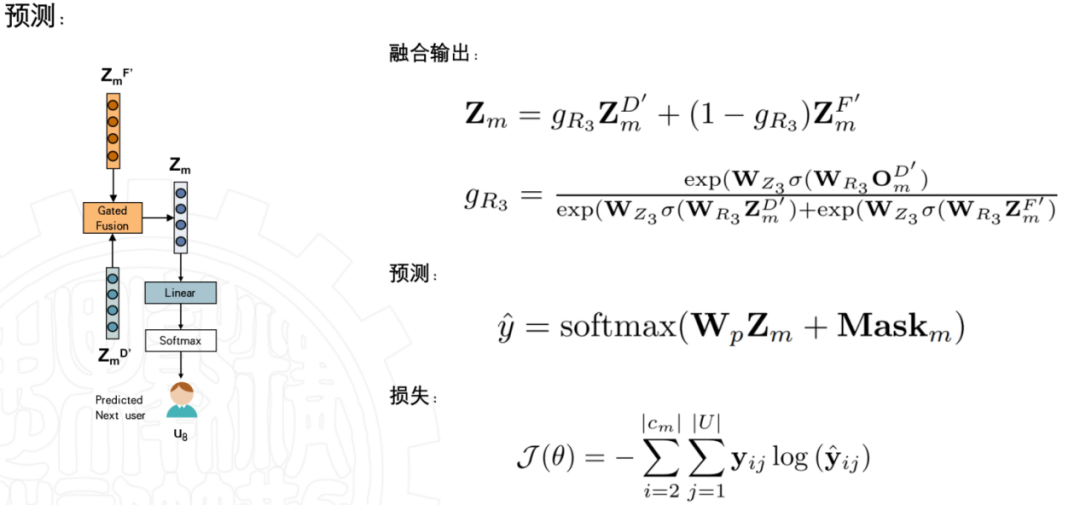

Finally, the two representations are combined through adaptive fusion. The output is our final cascade representation, from which we predict which users will join the cascade next.

Experiments

● RQ1: Does MS-HGAT outperform state-of-the-art information diffusion prediction methods?

● RQ2: How do the quantity and quality of the training set affect the model's prediction performance?

● RQ3: How do user relationships and our learning strategies affect the prediction performance of MS-HGAT?

We varied the value of K and examined the model's performance at each setting.

We can also see that using global user relationships and interactions gives better predictions than using the cascade alone.

These results also suggest that our model captures features in greater depth.

We adjusted the maximum length of the cascades used for training. The experiments show that our model achieves good results on cascades of any length.

In the ablation study, we removed individual modules and analyzed the impact.

The first variant removes the social network graph, and the second removes the diffusion network graph. The third and fourth remove the user memory module and the cascade memory module, respectively. The fifth replaces every gated fusion mechanism with simple concatenation.

Future work

● Use hypergraphs to describe cascade trees, not just cascade sequences.

● Combine information content with diffusion features.

● Consider the diverse user behaviors on social platforms (for example, "likes" and "comments").


Paper title:

MS-HGAT: Memory-enhanced Sequential Hypergraph Attention Network for Information Diffusion Prediction

Paper link:

https://www.aaai.org/AAAI22Papers/AAAI-4362.SunL.pdf


Compiled by: Lin be

Author: Sun Ling


About AI TIME

AI TIME was founded in 2019. It aims to promote the spirit of scientific debate by inviting people from all walks of life to explore the fundamental questions of artificial intelligence theory, algorithms, and applications, to encourage the exchange of ideas, and to connect AI scholars, industry experts, and enthusiasts around the world. Through debate, it hopes to explore the tensions between artificial intelligence and humanity's future, and the future of artificial intelligence itself.

To date, AI TIME has invited more than 600 speakers from China and abroad and held more than 300 events, with more than 2.1 million viewers.
