Matlab simulation of inverted pendulum control system based on qlearning reinforcement learning

2022-07-27 00:27:00 【I love c programming】

Contents

1. Algorithm description

2. Partial procedure

3. Preview of some simulation drawings

4. Source code acquisition method

1. Algorithm description

Reinforcement learning usually involves two entities, the agent and the environment. They interact as follows: in state $s_t$ of the environment, the agent takes action $a_t$, receives reward $r_t$, and enters state $s_{t+1}$. The core of Q-learning is the Q-table. Its rows and columns correspond to states and actions, and each entry $Q(s,a)$ measures how good it is to take action $a$ in state $s$.
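As a minimal sketch of how one entry of such a Q-table is updated, the standard tabular Q-learning rule can be written in a few lines. The table size, learning rate, discount factor and the sample transition below are all made-up values for illustration; none of them come from the simulation in this post.

```matlab
% Minimal tabular Q-learning update (illustrative values, not from the post).
nStates  = 5;      % hypothetical number of discrete states
nActions = 2;      % hypothetical number of actions (e.g. push left / push right)
Q = zeros(nStates, nActions);   % Q-table: rows = states, columns = actions

lr    = 0.1;       % learning rate (assumed)
gamma = 0.9;       % discount factor (assumed)

% One observed transition (s, a, r, sNext), made up for the example
s = 2; a = 1; r = 1; sNext = 3;

% Move Q(s,a) toward the target r + gamma * max over a' of Q(sNext, a')
tdTarget = r + gamma * max(Q(sNext, :));
Q(s, a)  = Q(s, a) + lr * (tdTarget - Q(s, a));

% Greedy action in state s under the updated table
[~, greedyAction] = max(Q(s, :));
```

With an all-zero table, this single update only changes the entry for the visited state-action pair, which is why many transitions are needed before the table becomes useful.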

At each time step, the agent observes the current state of the environment and selects an action. The action moves the environment to a new state, and the environment returns a reward that reflects the outcome of the action. In the inverted pendulum task, the reward is +1 for every time step, but the task ends once the cart moves more than 4.8 units from the center or the pole tilts by more than 15 degrees. The goal is therefore to keep the task running as long as possible in order to collect more reward. In the original inverted pendulum task, the agent's input consists of 4 real numbers (position, velocity, etc.). A neural network, however, can solve the task directly by observing the scene, so a screen patch centered on the cart can be used as the input instead. Strictly speaking, the state in that design is the difference between the current screen patch and the previous one, which lets the agent infer the velocity of the pole from the images.
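The reward and termination rule described above can be sketched as follows. The two limits are taken from the text; the helper name isDone and the short trajectory are hypothetical. This sketch assumes that only steps that stay inside the limits earn the +1 reward.

```matlab
% Episode bookkeeping for the cart-pole task described above.
% Limits come from the text; the trajectory is made up for illustration.
xLimit        = 4.8;   % max distance of the cart from the center
thetaLimitDeg = 15;    % max tilt of the pole, in degrees

% True when the task must end (hypothetical helper)
isDone = @(x, thetaDeg) abs(x) > xLimit || abs(thetaDeg) > thetaLimitDeg;

% Hypothetical cart positions and pole angles at successive time steps
xs     = [0.0 0.5 1.2 2.0];
thetas = [1   5   12  16];

episodeReturn = 0;
for k = 1:numel(xs)
    if isDone(xs(k), thetas(k))
        break;                            % a limit is violated: the task ends
    end
    episodeReturn = episodeReturn + 1;    % +1 reward for each surviving step
end
```

Because every surviving step is worth the same reward, the return is simply the number of steps the pole stayed up, so maximizing the return and balancing for as long as possible are the same objective.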

2. Partial procedure
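As a reading aid for the listing in this section: the code adapts two small networks, a critic (weights wc1 and wc2, output J) and an action network (weights wa1 and wa2, output newAction), each by gradient descent on a squared error. The formulas below are transcribed from the statements `ecrit = alpha*J-(Jprev-reinf)` and `eact = J - Uc`. Here $J(t-1)$ stands for Jprev, $r(t)$ for reinf, and $l_c$, $l_a$ for the learning rates lc and la; the symbols $w_c$ and $w_a$ (all critic weights and all action-network weights) are shorthand chosen here, not the author's notation.

```latex
\begin{aligned}
e_c(t) &= \alpha J(t) - \bigl[J(t-1) - r(t)\bigr], & E_c(t) &= \tfrac{1}{2}\, e_c(t)^2, \\
e_a(t) &= J(t) - U_c, & E_a(t) &= \tfrac{1}{2}\, e_a(t)^2, \\
w_c &\leftarrow w_c - l_c \frac{\partial E_c}{\partial w_c}, & w_a &\leftarrow w_a - l_a \frac{\partial E_a}{\partial w_a}.
\end{aligned}
```

In the code, each derivative is expanded by the chain rule into named factors such as gradEcJ, gradJp and gradpq for the critic, and gradEaJ, gradJu and graduv for the action network.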

for trial = 1:MaxTr   % outer loop (one pass per trial) begins
    count = 0;
    failure = 0;
    failReason = 0;
    lfts = 1;
    newSt = inistate;
    inputs = newSt./NF;
    lc = Initlc;
    la = Initla;
    xhist = newSt;

    % Compute newAction (action network forward pass)
    ha = inputs*wa1;
    g = (1 - exp(-ha))./(1 + exp(-ha));
    va = g*wa2;
    newAction = (1 - exp(-va))./(1 + exp(-va));

    % Compute J (critic network forward pass)
    inp = [inputs newAction];
    qc = inp*wc1;
    p = (1 - exp(-qc))./(1 + exp(-qc));
    J = p*wc2;
    Jprev = J;

    while (lfts < Tit)   % inner loop (one pass per time step) begins
        if (rem(lfts,500)==0)
            disp(['It is ' int2str(lfts) ' time steps now......']);
        end

        % Generate the control signal
        if (newAction >= 0)
            sgnf = 1;
        else
            sgnf = -1;
        end
        u = Mag*sgnf;   % bang-bang control

        % Integrate the cart-pole model over one time step
        [T,Xf] = ode45('cartpole_model',[0 tstep],newSt,[],u);
        a = size(Xf);
        newSt = Xf(a(1),:);    % last row: state at the end of the step
        inputs = newSt./NF;    % input normalization

        % Compute newAction (action network forward pass)
        ha = inputs*wa1;
        g = (1 - exp(-ha))./(1 + exp(-ha));
        va = g*wa2;
        newAction = (1 - exp(-va))./(1 + exp(-va));

        % Compute the new J (critic network forward pass)
        inp = [inputs newAction];
        qc = inp*wc1;
        p = (1 - exp(-qc))./(1 + exp(-qc));
        J = p*wc2;
        xhist = [xhist; newSt];

        %%===========================================================%%
        %% Obtain the reinforcement signal r(t), namely reinf        %%
        %%===========================================================%%
        if (abs(newSt(1)) > FailTheta)
            reinf = 1;
            failure = 1;
            failReason = 1;   % newSt(1) exceeded FailTheta
        elseif (abs(newSt(3)) > Boundary)
            reinf = 1;
            failure = 1;
            failReason = 2;   % newSt(3) exceeded Boundary
        else
            reinf = 0;
        end

        %%================================%%
        %% learning rate update scheme    %%
        %%================================%%
        if (rem(lfts,5)==0)
            lc = lc - 0.05;
            la = la - 0.05;
        end
        if (lc<0.01)
            lc = 0.005;
        end
        if (la<0.01)
            la = 0.005;
        end

        %%================================================%%
        %% internal weights updating cycles for critnet   %%
        %%================================================%%
        cyc = 0;
        ecrit = alpha*J-(Jprev-reinf);
        Ec = 0.5 * ecrit^2;
        while (Ec>Tc && cyc<=Ncrit)
            gradEcJ = alpha*ecrit;
            %----for the first layer (input to hidden layer)-----------
            gradqwc1 = [inputs'; newAction];
            for i = 1:N_Hidden
                gradJp = wc2(i);
                gradpq = 0.5*(1-p(i)^2);
                wc1(:,i) = wc1(:,i) - lc*gradEcJ*gradJp*gradpq*gradqwc1;
            end
            %----for the second layer (hidden layer to output)-----------
            gradJwc2 = p';
            wc2 = wc2 - lc*gradEcJ*gradJwc2;
            %----compute new J----
            inp = [inputs newAction];
            qc = inp*wc1;
            p = (1 - exp(-qc))./(1 + exp(-qc));
            J = p*wc2;
            cyc = cyc + 1;
            ecrit = alpha*J-(Jprev-reinf);
            Ec = 0.5 * ecrit^2;
        end   % end of "while (Ec>Tc && cyc<=Ncrit)"

        % Normalize the critic network weights
        if (max(max(abs(wc1)))>1.5)
            wc1 = wc1/max(max(abs(wc1)));
        end
        if max(max(abs(wc2)))>1.5
            wc2 = wc2/max(max(abs(wc2)));
        end

        %%=============================================%%
        %% internal weights updating cycles for actnet %%
        %%=============================================%%
        cyc = 0;
        eact = J - Uc;
        Ea = 0.5*eact^2;
        while (Ea>Ta && cyc<=Nact)
            graduv = 0.5*(1-newAction^2);
            gradEaJ = eact;
            gradJu = 0;
            for i = 1:N_Hidden
                gradJu = gradJu + wc2(i)*0.5*(1-p(i)^2)*wc1(WC_Inputs,i);
            end
            %----for the first layer (input to hidden layer)-----------
            for i = 1:N_Hidden
                gradvg = wa2(i);
                gradgh = 0.5*(1-g(i)^2);
                gradhwa1 = inputs';
                wa1(:,i) = wa1(:,i)-la*gradEaJ*gradJu*graduv*gradvg*gradgh*gradhwa1;
            end
            %----for the second layer (hidden layer to output)-----------
            gradvwa2 = g';
            wa2 = wa2-la*gradEaJ*gradJu*graduv*gradvwa2;
            %----compute new J and newAction-------
            ha = inputs*wa1;
            g = (1 - exp(-ha))./(1 + exp(-ha));
            va = g*wa2;
            newAction = (1 - exp(-va))./(1 + exp(-va));
            inp = [inputs newAction];
            qc = inp*wc1;
            p = (1 - exp(-qc))./(1 + exp(-qc));
            J = p*wc2;
            cyc = cyc+1;
            eact = J - Uc;
            Ea = 0.5*eact^2;
        end   % end of "while (Ea>Ta && cyc<=Nact)"

        if ~failure
            Jprev = J;
        else
            break;   % failure: leave the inner "while (lfts < Tit)" loop and start another trial
        end
        lfts = lfts+1;
    end   % end of "while (lfts < Tit)": the inner loop ends

    msgstr1 = ['Trial # ' int2str(trial) ' has ' int2str(lfts) ' time steps.'];
    msgstr21 = ['Trial # ' int2str(trial) ' has successfully balanced for at least '];
    msgstr22 = [msgstr21 int2str(lfts) ' time steps '];
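The activation that recurs throughout the listing, (1 - exp(-x))./(1 + exp(-x)), is the bipolar sigmoid. It equals tanh(x/2), and its derivative is 0.5*(1 - y^2), which is where the factors 0.5*(1-p(i)^2) and 0.5*(1-g(i)^2) in the weight updates come from. The short check below confirms both facts numerically; the helper name bipolarSigmoid and the sample points are made up for illustration and are not part of the original program.

```matlab
% Numerical check of the activation used in the listing (illustrative only).
bipolarSigmoid = @(x) (1 - exp(-x)) ./ (1 + exp(-x));   % hypothetical helper

x = linspace(-3, 3, 7);       % arbitrary sample points
y = bipolarSigmoid(x);

% 1) The activation coincides with tanh(x/2)
maxDiffTanh = max(abs(y - tanh(x/2)));

% 2) Its derivative is 0.5*(1 - y.^2); compare with a central difference
h = 1e-6;
numericGrad  = (bipolarSigmoid(x + h) - bipolarSigmoid(x - h)) / (2*h);
analyticGrad = 0.5 * (1 - y.^2);
maxGradErr   = max(abs(numericGrad - analyticGrad));
```

Since the same expression is typed out in every forward pass of the listing, wrapping it in one helper like this would also be a simple way to shorten the program without changing its behavior.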

3. Preview of some simulation drawings

4. Source code acquisition method

Method 1:

Click the download link:

Method 2:

In the blog's resources section, search for the resource with the same name as this post.

Method 3:

If the download link fails, contact the blogger on WeChat.

A_027

边栏推荐

- 100. Same tree

- LeetCode——哈希表篇

- Arthas quick start

- Nacos installation and pit stepping

- View where Anaconda created the environment

- Design of electronic scale based on 51 single chip microcomputer

- C and pointer Chapter 18 runtime environment 18.7 problems

- Design of vision protector based on 51 single chip microcomputer

- AutoCAD的卸载后重新安装,删除注册表的详细过程

- Deploy yolov5 error reporting in pycharm

猜你喜欢

LeetCode——链表篇

7_主成分分析法(Principal Component Analysis)

CSDN文章语法规则

爬虫解析网页的 对象.元素名方法

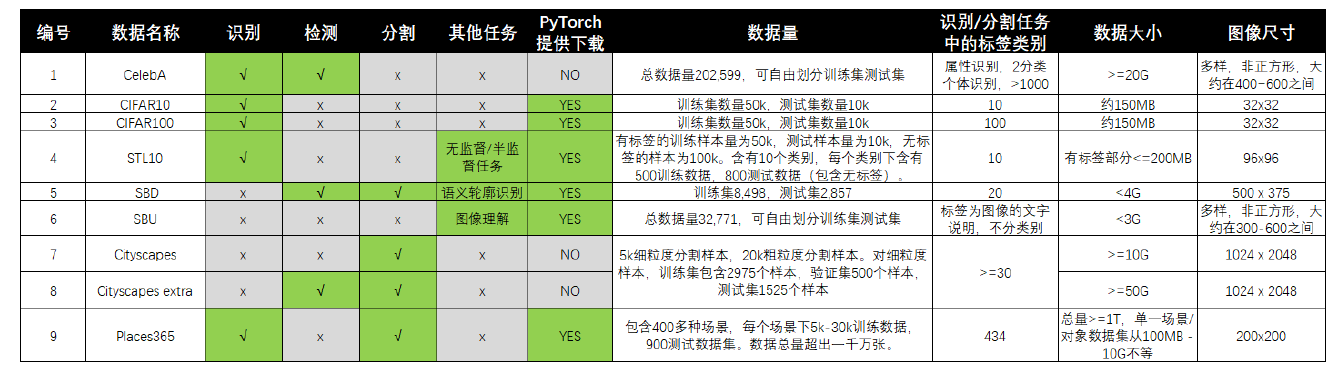

哨兵2号(Sentinel-2)的下载及处理

1、 Kubernetes basic concept + environment installation (build cross server public network environment)

放图仓库-Tsai

V-viewer use

![[PCB open source sharing] stc8a8k64d4 development board](/img/df/14f47295dace857c0a32545c3eca39.png)

[PCB open source sharing] stc8a8k64d4 development board

The difference between SQL join and related subinquiry

随机推荐

6_梯度下降法(Gradient Descent)

Codeforces C1. Simple Polygon Embedding

Codeforces E. maximum subsequence value (greed + pigeon nest principle)

今日份20220719折腾deeplabcut

Blue Bridge Cup brush question notes (word analysis)

机器人学台大林教授课程笔记

Halloween treatments (drawer principle)

信号与系统冲激响应与阶跃响应

Chapter 7 course summary

View where Anaconda created the environment

uni-app学习(二)

"Could not load host key" error when xshell connects to the server

C and pointers Chapter 18 runtime environment 18.8 programming exercises

Oracle data guard service, process and protection mode

9_逻辑回归(Logistic Regression)

转置卷积相关

在pycharm中部署yolov5报错问题

C and pointer Chapter 18 runtime environment 18.2 interface between C and assembly language

放图仓库-2(函数图像)

Codeforces d.constructing the array (priority queue)