
01. Deploying and configuring Solr 7.3.1 with Jetty on Windows 10

2022-07-05 14:11:00 [Full-stack programmer webmaster]

What is Solr?

Solr is a top-level open-source project of the Apache Software Foundation, developed in Java. It is a full-text search server built on Lucene. Solr provides a richer query language than Lucene, is configurable and extensible, and has optimized indexing and search performance.

Solr can run standalone, in Servlet containers such as Jetty and Tomcat. Indexing with Solr is simple: you send the Solr server an XML document describing the fields and their contents with an HTTP POST request, and Solr adds, deletes, or updates the index according to that XML. Searching only requires an HTTP GET request; you then parse the results Solr returns in XML, JSON, or another format and lay out your page. Solr does not provide UI-building features, but it does provide an admin interface through which you can query Solr's configuration and runtime status.
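To make the POST/GET workflow above concrete, here is a minimal Python sketch that uses only the standard library. It assumes a core named test_Core (created later in this tutorial) on the default port 8983, and two hypothetical fields, id and title. The XML follows Solr's `<add><doc><field>` update format, and /update and /select are Solr's standard update and search handlers. Only post_update needs a running server; the other functions just build the request bodies and URLs.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET

SOLR_BASE = "http://localhost:8983/solr"  # default port used in this tutorial
CORE = "test_Core"                        # assumed core name (created in step 4)

def build_add_xml(docs):
    """Build an XML update message: <add><doc><field name=...>...</field></doc></add>."""
    add = ET.Element("add")
    for doc in docs:
        doc_el = ET.SubElement(add, "doc")
        for name, value in doc.items():
            field = ET.SubElement(doc_el, "field", name=name)
            field.text = str(value)
    return ET.tostring(add, encoding="unicode")

def build_delete_xml(doc_id):
    """Build a delete-by-id update message: <delete><id>...</id></delete>."""
    delete = ET.Element("delete")
    ET.SubElement(delete, "id").text = str(doc_id)
    return ET.tostring(delete, encoding="unicode")

def build_select_url(query, rows=10):
    """Build the HTTP GET search URL, asking Solr for a JSON response."""
    params = urllib.parse.urlencode({"q": query, "rows": rows, "wt": "json"})
    return f"{SOLR_BASE}/{CORE}/select?{params}"

def post_update(xml_body):
    """POST an XML update message to the core and commit it (needs a running Solr)."""
    req = urllib.request.Request(
        f"{SOLR_BASE}/{CORE}/update?commit=true",
        data=xml_body.encode("utf-8"),
        headers={"Content-Type": "text/xml; charset=utf-8"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8")
```

For example, `post_update(build_add_xml([{"id": 1, "title": "hello"}]))` indexes one document, and fetching `build_select_url("title:hello")` in a browser returns it as JSON.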

Solr is an enterprise search server developed on top of Lucene; in effect, it wraps Lucene.

Solr is a standalone enterprise search application server that exposes a web-service-like API. Over HTTP, users can submit documents in a defined format to the search server to build the index, or send search requests and receive the results.

Like a web service, Solr is used by calling its interface to add, update, delete, and query documents in the index.

Differences between Solr and Lucene

Lucene is an open-source full-text search engine toolkit, not a complete full-text search engine. It provides a complete query engine and indexing engine; its goal is to give software developers an easy-to-use toolkit for adding full-text search to a target system, or for building a complete full-text search engine on top of Lucene.

Solr's goal is to be an enterprise-grade search engine system. It is a search engine service that runs on its own: with Solr you can build an enterprise search engine quickly, and also implement efficient site search.

Solr works like a web service: it exposes an interface, and you call that interface with specific requests to add, delete, update, and query data.

1. Download Solr

Download address: http://www.apache.org/dyn/closer.lua/lucene/solr/7.3.1

2. Install Solr

After downloading, put solr-7.3.1.zip in a directory on the drive of your choice. It is best if the directory path contains no spaces, Chinese characters, or other special characters.
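The path rule above is easy to check before extracting. This Python sketch encodes one possible reading of "no spaces, Chinese characters, or other special characters" as an allow-list of ASCII letters, digits and the characters `: \ / . _ -`; the exact allow-list is an assumption, not a rule published by Solr.

```python
import string

# Assumed allow-list: ASCII letters, digits, and the punctuation a Windows path needs.
ALLOWED = set(string.ascii_letters + string.digits + ":\\/._-")

def bad_chars(path):
    """Return the set of characters in `path` that fall outside the allow-list."""
    return {ch for ch in path if ch not in ALLOWED}

def is_safe_install_path(path):
    """True if `path` is non-empty and uses only allowed characters."""
    return bool(path) and not bad_chars(path)
```

For example, `is_safe_install_path(r"D:\solr\solr-7.3.1")` passes, while `r"D:\Program Files\solr"` is rejected because of the space.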

3. Start Solr

After extracting solr-7.3.1.zip, open its bin directory, hold Shift and right-click an empty area of the folder, and open a PowerShell window:

Then type cmd.exe to switch to the Windows command prompt.

Solr 7.3 comes with its own Jetty, so it runs standalone and does not need Tomcat.

Type solr.cmd start and press Enter to start Solr. The default port is 8983.

Open localhost:8983/solr in a browser; if the Solr admin page appears, Solr has started.
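Instead of opening the browser, you can probe the server from a script. A minimal sketch, assuming the default URL above: it requests /solr/admin/info/system, Solr's system information endpoint, and treats any connection error or non-200 answer as "not running".

```python
import urllib.request

def solr_is_up(base_url="http://localhost:8983/solr", timeout=3.0):
    """Return True if Solr's system-info endpoint answers with HTTP 200."""
    url = base_url.rstrip("/") + "/admin/info/system?wt=json"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError, refused connections and timeouts are all OSError subclasses.
        return False
```

Run `solr_is_up()` after solr.cmd start; it should return True once Jetty has finished starting.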

4. Create a core

A core can be understood by analogy with MySQL: it is like one MySQL database, a store that holds a specific set of data tables.

Note that you cannot create the core directly with the Add Core button on the admin page.

In the command window you just opened, type solr.cmd create -c test_Core

Now open server\solr; you will see that a test_Core directory has been created.

Open this directory:

After the core is created, refresh the admin page, and the new core appears in the core selector drop-down. If it does not appear, run solr restart -p 8983 in the command window to restart Solr.
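You can also confirm that the core exists without the UI by asking the CoreAdmin API for its status (/solr/admin/cores?action=STATUS). This sketch assumes the JSON response carries a "status" object keyed by core name; the sample response in the check below is abbreviated and made up for illustration.

```python
import json
import urllib.request

def core_status_url(base_url="http://localhost:8983/solr"):
    """CoreAdmin STATUS request for all cores, with a JSON response."""
    return base_url.rstrip("/") + "/admin/cores?action=STATUS&wt=json"

def core_names(status_json):
    """Return sorted core names, assuming 'status' is an object keyed by core name."""
    data = json.loads(status_json)
    return sorted(data.get("status", {}))

def list_cores(base_url="http://localhost:8983/solr"):
    """Query a running Solr and return the names of its cores."""
    with urllib.request.urlopen(core_status_url(base_url), timeout=5) as resp:
        return core_names(resp.read().decode("utf-8"))
```

With Solr running, `"test_Core" in list_cores()` should be True after solr.cmd create.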

Select test_Core in the drop-down to see its overview. From here you can reach the tokenizer analysis, data import, data query, and other functions.

5. With Solr started and the core created, this step configures the Chinese word segmenter:

Add the Chinese word segmentation plug-in. Solr 7.3.1 ships with one: copy solr-7.3.1\contrib\analysis-extras\lucene-libs\lucene-analyzers-smartcn-7.3.1.jar into the solr-7.3.1\server\solr-webapp\webapp\WEB-INF\lib directory.

Paste it into the target path:

Configure Chinese word segmentation: edit the file solr-7.3.1\server\solr\test_Core\conf\managed-schema (test_Core is the name of the core you just created) and add a Chinese word segmentation field type.

File location:

Open the file, search for Italian, and paste our Chinese configuration next to the Italian section:

```xml
<!-- Italian -->
<!-- Configure Chinese word segmenter -->
<fieldType name="text_cn" class="solr.TextField" positionIncrementGap="100">
  <analyzer type="index">
    <tokenizer class="org.apache.lucene.analysis.cn.smart.HMMChineseTokenizerFactory"/>
  </analyzer>
  <analyzer type="query">
    <tokenizer class="org.apache.lucene.analysis.cn.smart.HMMChineseTokenizerFactory"/>
  </analyzer>
</fieldType>
```

Configuration complete:
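If you would rather script this manual edit, the Python sketch below inserts a fieldType definition into managed-schema on the line before the `<!-- Italian -->` comment, and does nothing if a definition with the same name already appears. The marker, the crude duplicate check and the file path in the usage line are assumptions; keep a backup of managed-schema and restart Solr afterwards as described in this tutorial.

```python
import re
from pathlib import Path

TEXT_CN = """<fieldType name="text_cn" class="solr.TextField" positionIncrementGap="100">
  <analyzer type="index">
    <tokenizer class="org.apache.lucene.analysis.cn.smart.HMMChineseTokenizerFactory"/>
  </analyzer>
  <analyzer type="query">
    <tokenizer class="org.apache.lucene.analysis.cn.smart.HMMChineseTokenizerFactory"/>
  </analyzer>
</fieldType>
"""

def insert_field_type(schema_text, field_type_xml, marker="<!-- Italian -->"):
    """Insert field_type_xml at the start of the marker's line; skip if already present."""
    m = re.search(r'<fieldType\s+name="([^"]+)"', field_type_xml)
    if m and f'name="{m.group(1)}"' in schema_text:
        return schema_text  # crude check: that name is already in the schema
    idx = schema_text.find(marker)
    if idx == -1:
        raise ValueError(f"marker {marker!r} not found in schema")
    line_start = schema_text.rfind("\n", 0, idx) + 1  # 0 if the marker is on line 1
    return schema_text[:line_start] + field_type_xml + schema_text[line_start:]

def patch_schema_file(path, field_type_xml):
    """Read managed-schema, insert the field type, and write the file back."""
    p = Path(path)
    text = p.read_text(encoding="utf-8")
    p.write_text(insert_field_type(text, field_type_xml), encoding="utf-8")
```

Usage (path as in this tutorial): `patch_schema_file(r"solr-7.3.1\server\solr\test_Core\conf\managed-schema", TEXT_CN)`.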

Restart the Solr service with solr restart -p 8983.

Refresh the admin page.

Test the Chinese word segmenter:
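Besides the Analysis screen in the admin UI, the same test can be run over HTTP through Solr's field analysis handler (/analysis/field). This sketch builds the request URL for the text_cn type on test_Core; the sample sentence is arbitrary, and fetch_analysis returns the raw JSON because no particular response layout is assumed here.

```python
import urllib.parse
import urllib.request

def analysis_url(text, field_type="text_cn", core="test_Core",
                 base_url="http://localhost:8983/solr"):
    """Build a /analysis/field request that analyzes `text` with `field_type`."""
    params = urllib.parse.urlencode({
        "analysis.fieldtype": field_type,  # field type defined in managed-schema
        "analysis.fieldvalue": text,       # text to run through the index-time analyzer
        "wt": "json",
    })
    return f"{base_url.rstrip('/')}/{core}/analysis/field?{params}"

def fetch_analysis(text, **kwargs):
    """Send the request to a running Solr and return the raw JSON text."""
    with urllib.request.urlopen(analysis_url(text, **kwargs), timeout=5) as resp:
        return resp.read().decode("utf-8")
```

urlencode percent-encodes the Chinese text as UTF-8, so `fetch_analysis("我是中国人")` can be called directly.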

6. Configure the IK-Analyzer-Solr7 Chinese word segmenter

This build is adapted to Solr 7 and adds dynamic loading of dictionaries,

so new dictionary entries can be loaded without restarting the Solr service.
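The project README describes how dynamic loading is triggered. As I understand it, ik.conf holds a files= list of dynamic dictionaries (default dynamicdic.txt) and a lastupdate= counter that must be increased after each dictionary change. This Python sketch assumes exactly that key=value format, so check the README of the version you download before relying on it; classes_dir is the WEB-INF/classes directory where the files are placed below.

```python
from pathlib import Path

def parse_conf(text):
    """Parse key=value lines; blank lines and '#' comments are skipped."""
    conf = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            conf[key.strip()] = value.strip()
    return conf

def bump_lastupdate(text):
    """Return the ik.conf text with lastupdate increased by 1; other lines are kept."""
    lines = []
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep and key.strip() == "lastupdate":
            line = f"lastupdate={int(value.strip()) + 1}"
        lines.append(line)
    return "\n".join(lines) + "\n"

def add_dynamic_words(classes_dir, words):
    """Append words to the first dynamic dictionary, then bump lastupdate (assumed format)."""
    d = Path(classes_dir)
    conf_path = d / "ik.conf"
    conf_text = conf_path.read_text(encoding="utf-8")
    dic_name = parse_conf(conf_text).get("files", "dynamicdic.txt").split(",")[0].strip()
    dic_path = d / dic_name
    existing = dic_path.read_text(encoding="utf-8") if dic_path.exists() else ""
    if existing and not existing.endswith("\n"):
        existing += "\n"
    # One word per line, the same layout as the other IK dictionaries.
    dic_path.write_text(existing + "".join(w + "\n" for w in words), encoding="utf-8")
    conf_path.write_text(bump_lastupdate(conf_text), encoding="utf-8")
```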

Download the Solr 7 build of the IK analyzer from: http://search.maven.org/#search%7Cga%7C1%7Ccom.github.magese

Source code of the analyzer on GitHub: https://github.com/magese/ik-analyzer-solr7

The GitHub page explains how to use the analyzer.

Put the downloaded jar into the solr-7.3.1/server/solr-webapp/webapp/WEB-INF/lib directory.

Then open the managed-schema file in the solr-7.3.1/server/solr/test_Core/conf directory and add the following configuration:

```xml
<!-- IK word segmenter -->
<fieldType name="text_ik" class="solr.TextField">
  <analyzer type="index">
    <tokenizer class="org.wltea.analyzer.lucene.IKTokenizerFactory" useSmart="false" conf="ik.conf"/>
    <filter class="solr.LowerCaseFilterFactory"/>
  </analyzer>
  <analyzer type="query">
    <tokenizer class="org.wltea.analyzer.lucene.IKTokenizerFactory" useSmart="true" conf="ik.conf"/>
    <filter class="solr.LowerCaseFilterFactory"/>
  </analyzer>
</fieldType>
```

Copy the five configuration files from the resources directory of the GitHub source into the webapp/WEB-INF/classes/ directory of the Jetty or Tomcat instance that runs Solr (if WEB-INF has no classes directory, create it manually):

①IKAnalyzer.cfg.xml

②ext.dic

③stopword.dic

④ik.conf

⑤dynamicdic.txt

ext.dic is the extension dictionary;

stopword.dic is the stop-word dictionary;

IKAnalyzer.cfg.xml is the configuration file.

In the dictionary files, each word goes on its own line.
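The copy step described above can be scripted. A minimal sketch: the five file names come from the list above, while both directory arguments are placeholders you must point at your own resources directory and WEB-INF directory. It checks for missing files first, so it never creates classes/ for an incomplete copy.

```python
import shutil
from pathlib import Path

# The five files listed above, taken from the resources directory of the IK source.
IK_FILES = ("IKAnalyzer.cfg.xml", "ext.dic", "stopword.dic", "ik.conf", "dynamicdic.txt")

def install_ik_resources(resources_dir, webinf_dir, files=IK_FILES):
    """Copy the IK resource files into WEB-INF/classes and return the copied names."""
    src = Path(resources_dir)
    missing = [name for name in files if not (src / name).is_file()]
    if missing:
        # Fail before touching WEB-INF so a half-finished install is never left behind.
        raise FileNotFoundError(f"missing in {src}: {missing}")
    dst = Path(webinf_dir) / "classes"
    dst.mkdir(parents=True, exist_ok=True)  # create classes/ if WEB-INF lacks it
    for name in files:
        shutil.copy2(src / name, dst / name)
    return sorted(files)
```

Hypothetical usage: `install_ik_resources(r"path\to\ik-analyzer-solr7\resources", r"solr-7.3.1\server\solr-webapp\webapp\WEB-INF")`.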

Restart the Solr service again after the configuration is done.

Segmentation test:

我 ("I") and 是 ("is") no longer appear in the output, because they are configured as stop words in stopword.dic.
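To illustrate why those words disappear: stop-word filtering drops any token that appears in the stop-word dictionary. This Python sketch imitates that effect on an already segmented token list; it is not IK's implementation, and the sample tokens are made up.

```python
def load_dic(text):
    """Parse dictionary text: one word per line, blank lines ignored, BOM stripped."""
    text = text.lstrip("\ufeff")
    return {line.strip() for line in text.splitlines() if line.strip()}

def remove_stopwords(tokens, stopwords):
    """Keep only tokens that are not stop words, preserving their order."""
    return [tok for tok in tokens if tok not in stopwords]

if __name__ == "__main__":
    stop = load_dic("我\n是\n")
    print(remove_stopwords(["我", "是", "程序员"], stop))  # ['程序员']
```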

At this point, the Solr configuration is complete.

Publisher: Full-stack programmer webmaster. When reprinting, please credit the source: https://javaforall.cn/111283.html Original site: https://javaforall.cn

边栏推荐

- 登录界面代码

- Why do I support bat to dismantle "AI research institute"

- Elk enterprise log analysis system

- R language ggplot2 visual bar graph: visualize the bar graph through the two-color gradient color theme, and add label text for each bar (geom_text function)

- WebRTC的学习(二)

- 04_solr7.3之solrJ7.3的使用

- 关于Apache Mesos的一些想法

- R语言ggplot2可视化密度图:按照分组可视化密度图、自定义配置geom_density函数中的alpha参数设置图像透明度(防止多条密度曲线互相遮挡)

- Oneconnect listed in Hong Kong: with a market value of HK $6.3 billion, ye Wangchun said that he was honest and trustworthy, and long-term success

- R语言ggplot2可视化条形图:通过双色渐变配色颜色主题可视化条形图、为每个条形添加标签文本(geom_text函数)

猜你喜欢

瑞能实业IPO被终止:年营收4.47亿 曾拟募资3.76亿

基于 TiDB 场景式技术架构过程 - 理论篇

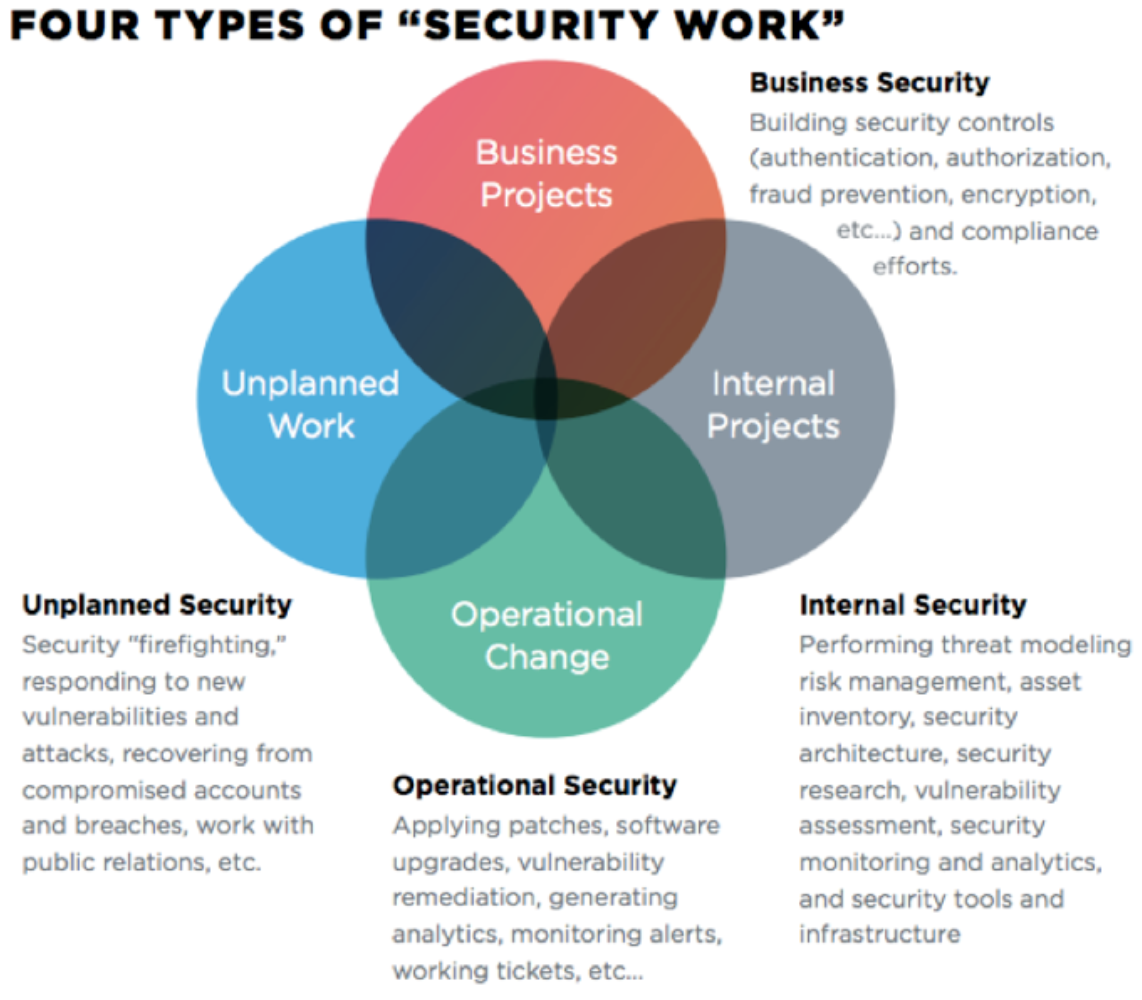

How to introduce devsecops into enterprises?

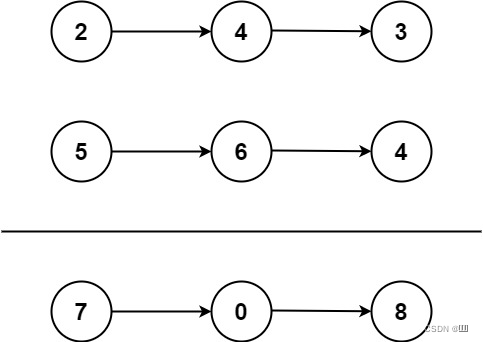

LeetCode_2(两数相加)

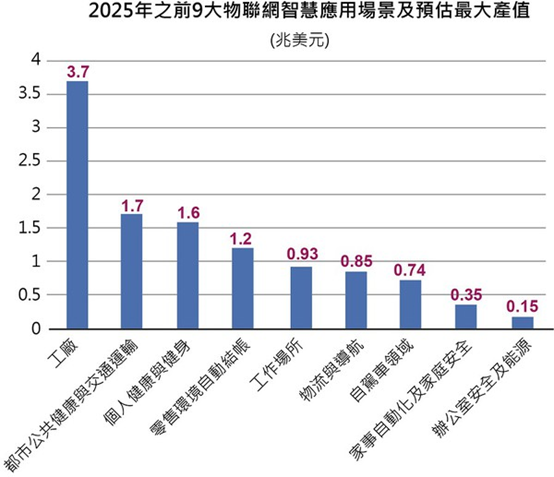

Current situation, trend and view of neural network Internet of things in the future

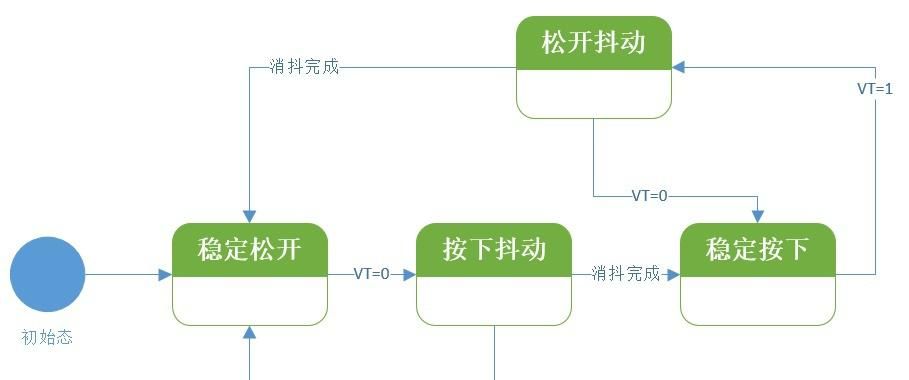

如何深入理解“有限状态机”的设计思想?

清大科越冲刺科创板:年营收2亿 拟募资7.5亿

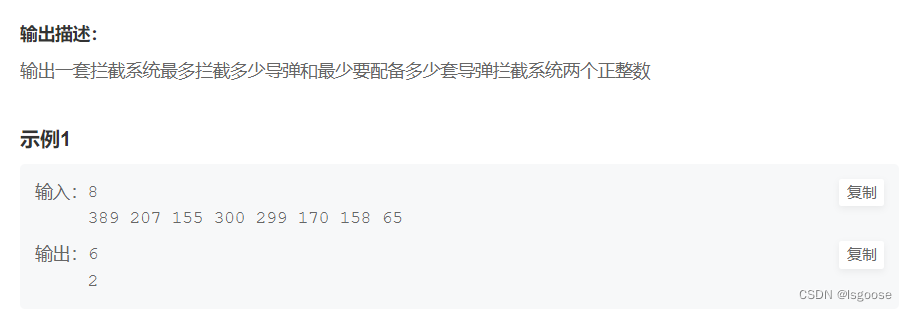

牛客网:拦截导弹

如何将 DevSecOps 引入企业?

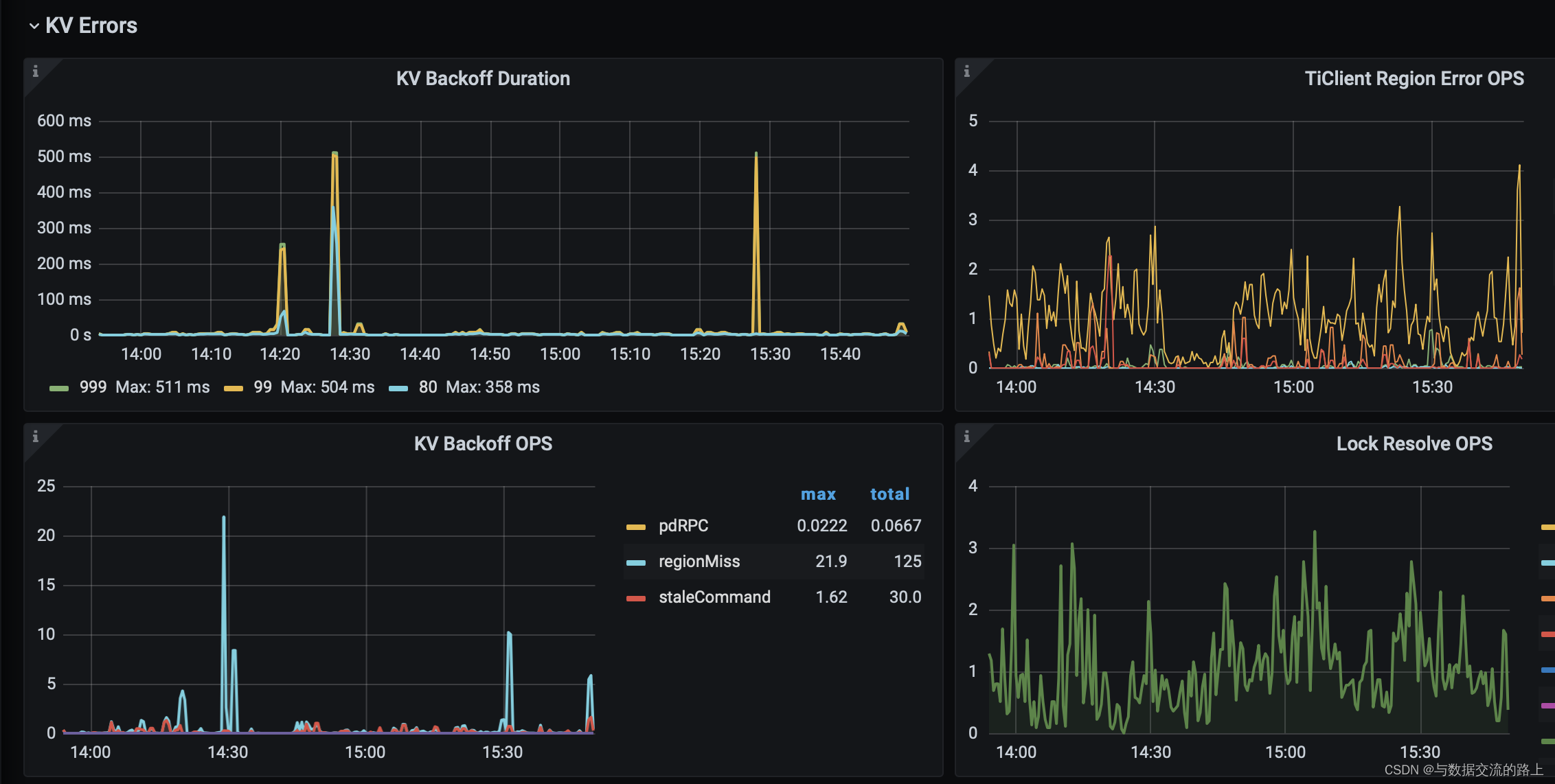

tidb-dm报警DM_sync_process_exists_with_error排查

随机推荐

鏈錶(簡單)

Financial one account Hong Kong listed: market value of 6.3 billion HK $Ye wangchun said to be Keeping true and true, long - term work

关于Apache Mesos的一些想法

Geom of R language using ggplot2 package_ Histogram function visual histogram (histogram plot)

Introduction, installation, introduction and detailed introduction to postman!

Xampp configuring multiple items

TiFlash 源码解读(四) | TiFlash DDL 模块设计及实现分析

R语言ggplot2可视化:gganimate包基于transition_time函数创建动态散点图动画(gif)、使用shadow_mark函数为动画添加静态散点图作为动画背景

R language dplyr package select function, group_ By function, mutate function and cumsum function calculate the cumulative value of the specified numerical variable in the dataframe grouping data and

享你所想。智创未来

C语言中限定符的作用

Matlab learning 2022.7.4

Assembly language

Some ideas about Apache mesos

Postman简介、安装、入门使用方法详细攻略!

Requset + BS4 crawling shell listings

魅族新任董事长沈子瑜:创始人黄章先生将作为魅族科技产品战略顾问

金融壹账通香港上市:市值63亿港元 叶望春称守正笃实,久久为功

Tidb DM alarm DM_ sync_ process_ exists_ with_ Error troubleshooting

3W原则[通俗易懂]