Troubleshooting the TiDB DM alert DM_sync_process_exists_with_error

2022-07-05 14:03:00 [TiDB community articles portal]

Author: db_user. Original source: https://tidb.net/blog/c84753ca

I. Background

A DM synchronization task raised the alert DM_sync_process_exists_with_error and then recovered on its own within a minute. I wanted to find out what caused it.

II. Errors observed in the logs

1. DM log

[2022/06/28 14:31:13.364 +00:00] [ERROR] [db.go:201] ["execute statements failed after retry"] [task=task-name] [unit="binlog replication"] [queries="[sql]"] [arguments="[[]]"] [error="[code=10006:class=database:scope=not-set:level=high], Message: execute statement failed: commit, RawCause: invalid connection"]

2. Upstream MySQL log

2022-06-28T14:31:19.413211Z 28801 [Note] Aborted connection 28801 to db: 'unconnected' user: '***' host: 'ip' (Got an error reading communication packets)

2022-06-28T14:31:22.154980Z 28802 [Note] Aborted connection 28802 to db: 'unconnected' user: '***' host: 'ip' (Got an error reading communication packets)

2022-06-28T14:31:32.158508Z 28804 [Note] Start binlog_dump to master_thread_id(28804) slave_server(429505412), pos(mysql-bin-changelog.103037, 36247149)

2022-06-28T14:31:32.158739Z 28803 [Note] Start binlog_dump to master_thread_id(28803) slave_server(429505202), pos(mysql-bin-changelog.103037, 40373779)

3. Downstream TiDB log

[2022/06/28 14:31:12.419 +00:00] [WARN] [client_batch.go:638] ["wait response is cancelled"] [to=dm_worker_ip:20160] [cause="context canceled"]

[2022/06/28 14:31:12.419 +00:00] [WARN] [client_batch.go:638] ["wait response is cancelled"] [to=dm_worker_ip:20160] [cause="context canceled"]

[2022/06/28 14:31:12.419 +00:00] [WARN] [client_batch.go:638] ["wait response is cancelled"] [to=dm_worker_ip:20160] [cause="context canceled"]

[2022/06/28 14:31:12.419 +00:00] [WARN] [client_batch.go:638] ["wait response is cancelled"] [to=dm_worker_ip:20160] [cause="context canceled"]

[2022/06/28 14:31:12.419 +00:00] [WARN] [client_batch.go:638] ["wait response is cancelled"] [to=dm_worker_ip:20160] [cause="context canceled"]

4. Downstream TiKV log

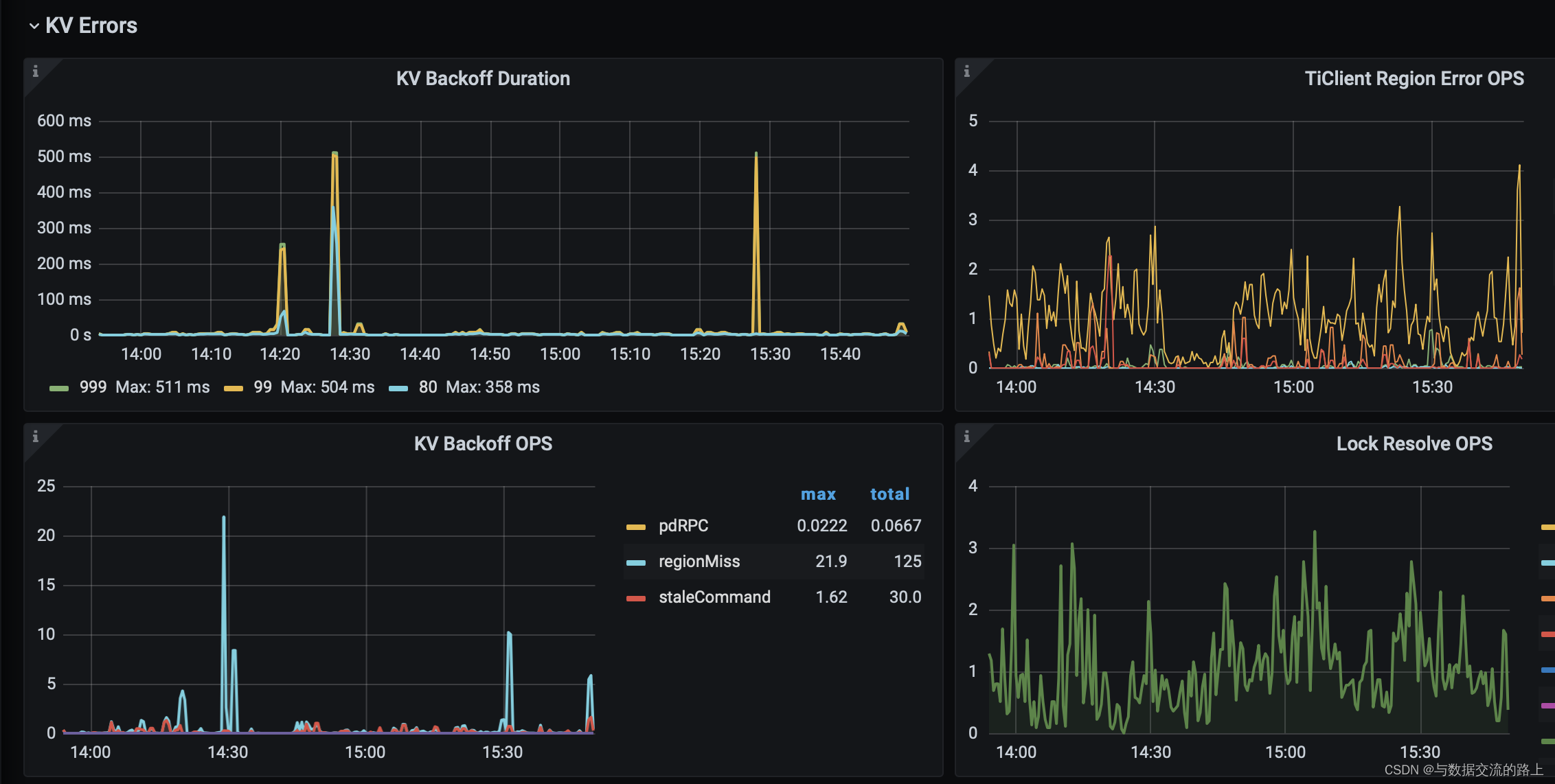

[2022/06/28 14:31:12.585 +00:00] [WARN] [endpoint.rs:537] [error-response] [err="Region error (will back off and retry) message: \"peer is not leader for region 2641161, leader may Some(id: 2641164 store_id: 5)\" not_leader { region_id: 2641161 leader { id: 2641164 store_id: 5 } }"]

[2022/06/28 14:31:12.585 +00:00] [WARN] [endpoint.rs:537] [error-response] [err="Region error (will back off and retry) message: \"peer is not leader for region 2641165, leader may Some(id: 2641167 store_id: 4)\" not_leader { region_id: 2641165 leader { id: 2641167 store_id: 4 } }"]

[2022/06/28 14:31:12.585 +00:00] [WARN] [endpoint.rs:537] [error-response] [err="Region error (will back off and retry) message: \"peer is not leader for region 2709997, leader may Some(id: 2709999 store_id: 4)\" not_leader { region_id: 2709997 leader { id: 2709999 store_id: 4 } }"]

[2022/06/28 14:31:12.585 +00:00] [WARN] [endpoint.rs:537] [error-response] [err="Region error (will back off and retry) message: \"peer is not leader for region 2839445, leader may Some(id: 2839447 store_id: 4)\" not_leader { region_id: 2839445 leader { id: 2839447 store_id: 4 } }"]

[2022/06/28 14:31:20.400 +00:00] [WARN] [endpoint.rs:537] [error-response] [err="Region error (will back off and retry) message: \"peer is not leader for region 2957169, leader may Some(id: 2957170 store_id: 1)\" not_leader { region_id: 2957169 leader { id: 2957170 store_id: 1 } }"]

[2022/06/28 14:31:20.400 +00:00] [WARN] [endpoint.rs:537] [error-response] [err="Region error (will back off and retry) message: \"peer is not leader for region 2957169, leader may Some(id: 2957170 store_id: 1)\" not_leader { region_id: 2957169 leader { id: 2957170 store_id: 1 } }"]

[2022/06/28 14:31:20.400 +00:00] [WARN] [endpoint.rs:537] [error-response] [err="Region error (will back off and retry) message: \"peer is not leader for region 2957169, leader may Some(id: 2957170 store_id: 1)\" not_leader { region_id: 2957169 leader { id: 2957170 store_id: 1 } }"]

[2022/06/28 14:31:05.617 +00:00] [WARN] [endpoint.rs:537] [error-response] [err="Key is locked (will clean up) primary_lock: 748000000F000 lock_version: 434222311815512066 key: 748000009725552F000 lock_ttl: 3003 txn_size: 1"]

[2022/06/28 14:31:05.634 +00:00] [WARN] [endpoint.rs:537] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000092F000 lock_version: 434222311815512092 key: 748000000000 lock_ttl: 3018 txn_size: 5"]

[2022/06/28 14:31:15.389 +00:00] [ERROR] [kv.rs:931] ["KvService response batch commands fail"]

[2022/06/28 14:31:15.432 +00:00] [ERROR] [kv.rs:931] ["KvService response batch commands fail"]

5. PD log

[2022/06/28 14:30:55.329 +00:00] [INFO] [operator_controller.go:424] ["add operator"] [region-id=2641161] [operator="\"transfer-hot-read-leader {transfer leader: store 1 to 5} (kind:hot-region,leader, region:2641161(25913,5), createAt:2022-06-28 14:30:55.329497692 +0000 UTC m=+8421773.911777457, startAt:0001-01-01 00:00:00 +0000 UTC, currentStep:0, steps:[transfer leader from store 1 to store 5])\""] ["additional info"=]

[2022/06/28 14:30:55.329 +00:00] [INFO] [operator_controller.go:620] ["send schedule command"] [region-id=2641161] [step="transfer leader from store 1 to store 5"] [source=create]

[2022/06/28 14:30:55.342 +00:00] [INFO] [cluster.go:567] ["leader changed"] [region-id=2641161] [from=1] [to=5]

[2022/06/28 14:30:55.342 +00:00] [INFO] [operator_controller.go:537] ["operator finish"] [region-id=2641161] [takes=12.961676ms] [operator="\"transfer-hot-read-leader {transfer leader: store 1 to 5} (kind:hot-region,leader, region:2641161(25913,5), createAt:2022-06-28 14:30:55.329497692 +0000 UTC m=+8421773.911777457, startAt:2022-06-28 14:30:55.329597613 +0000 UTC m=+8421773.911877386, currentStep:1, steps:[transfer leader from store 1 to store 5]) finished\""] ["additional info"=]

6. Monitoring: cluster_tidb --> KV Errors

III. Conclusion

The alert fired because dm-worker hit an `invalid connection` error. That error appeared because TiDB reported `wait response is cancelled`, which in turn was caused by locks and backoff on TiKV. As for why the locks and backoff occurred: the PD log shows that a transfer-hot-read-leader operator was scheduled, and this leader transfer is the key cause of the backoff (the TiKV `not_leader` errors). The cause of the locks has to be traced back to the business SQL.

Official documentation: the TiDB document on lock conflicts
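The chain of causes above depends on lining up timestamps from several components. As a minimal sketch (not part of the original investigation), the Python snippet below merges a few of the bracketed log lines quoted in this article into one timeline. The names `Event`, `parse_line`, `build_timeline` and `SAMPLE_LOGS` are hypothetical, and the regular expression only assumes the `[timestamp] [LEVEL] [file:line] [message]` layout visible in the DM, TiDB, TiKV and PD excerpts; the MySQL log uses a different layout and is not handled here.

```python
import re
from datetime import datetime
from typing import NamedTuple, Optional

# Assumed layout, taken from the excerpts in this article:
# [2022/06/28 14:31:12.419 +00:00] [WARN] [client_batch.go:638] ["message"] ...
LOG_LINE = re.compile(
    r'^\[(?P<ts>[^\]]+)\] \[(?P<level>\w+)\] \[(?P<src>[^\]]+)\] '
    r'\[(?P<msg>"[^"]*"|[^\]]*)\]'
)


class Event(NamedTuple):
    time: datetime
    component: str
    level: str
    message: str


def parse_line(component: str, line: str) -> Optional[Event]:
    """Parse one bracketed log line; return None if it does not match."""
    m = LOG_LINE.match(line)
    if m is None:
        return None
    ts = datetime.strptime(m["ts"], "%Y/%m/%d %H:%M:%S.%f %z")
    return Event(ts, component, m["level"], m["msg"].strip('"'))


def build_timeline(logs: dict) -> list:
    """Merge per-component log lines into a single list sorted by time."""
    events = []
    for component, lines in logs.items():
        for line in lines:
            event = parse_line(component, line)
            if event is not None:
                events.append(event)
    return sorted(events, key=lambda e: e.time)


# Sample lines copied from the log excerpts in this article.
SAMPLE_LOGS = {
    "dm-worker": [
        '[2022/06/28 14:31:13.364 +00:00] [ERROR] [db.go:201] ["execute statements failed after retry"] [task=task-name] [unit="binlog replication"] [queries="[sql]"] [arguments="[[]]"] [error="[code=10006:class=database:scope=not-set:level=high], Message: execute statement failed: commit, RawCause: invalid connection"]',
    ],
    "tidb": [
        '[2022/06/28 14:31:12.419 +00:00] [WARN] [client_batch.go:638] ["wait response is cancelled"] [to=dm_worker_ip:20160] [cause="context canceled"]',
    ],
    "tikv": [
        '[2022/06/28 14:31:05.617 +00:00] [WARN] [endpoint.rs:537] [error-response] [err="Key is locked (will clean up) primary_lock: 748000000F000 lock_version: 434222311815512066 key: 748000009725552F000 lock_ttl: 3003 txn_size: 1"]',
        '[2022/06/28 14:31:15.389 +00:00] [ERROR] [kv.rs:931] ["KvService response batch commands fail"]',
    ],
    "pd": [
        '[2022/06/28 14:30:55.342 +00:00] [INFO] [cluster.go:567] ["leader changed"] [region-id=2641161] [from=1] [to=5]',
    ],
}

timeline = build_timeline(SAMPLE_LOGS)
for e in timeline:
    print(e.time.strftime("%H:%M:%S.%f")[:-3], e.component, e.level, e.message)
```

With these sample lines, the PD leader change sorts first, followed by the TiKV lock warning, the TiDB cancelled response, and only then the dm-worker error, which matches the order of events described in the conclusion.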

边栏推荐

猜你喜欢

Detailed explanation of IP address and preparation of DOS basic commands and batch processing

深拷贝真难

NFT value and white paper acquisition

![Primary code audit [no dolls (modification)] assessment](/img/b8/82c32e95d1b72f75823ca91c97138e.jpg)

Primary code audit [no dolls (modification)] assessment

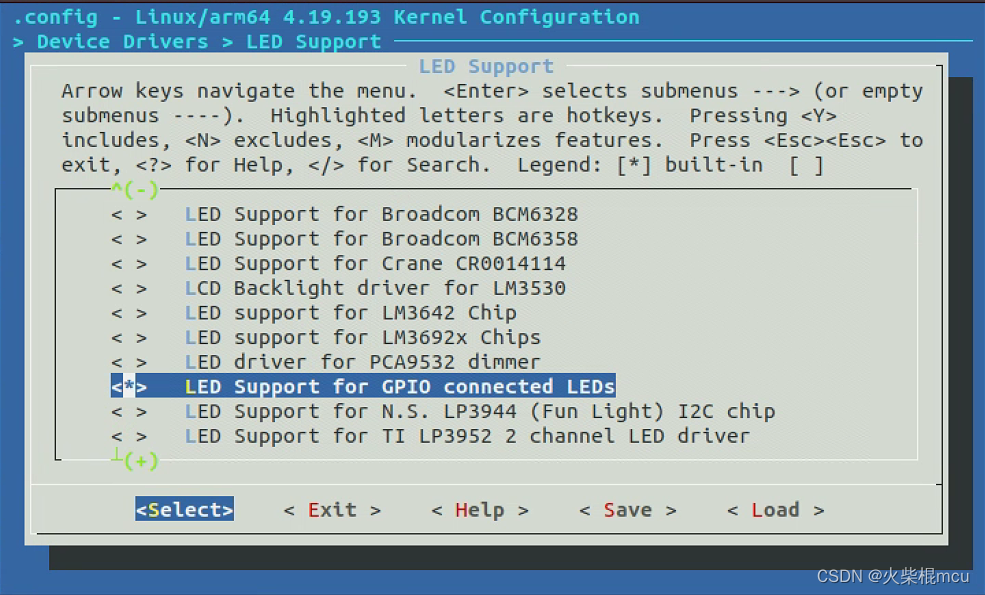

RK3566添加LED

In addition to the root directory, other routes of laravel + xampp are 404 solutions

Zibll theme external chain redirection go page beautification tutorial

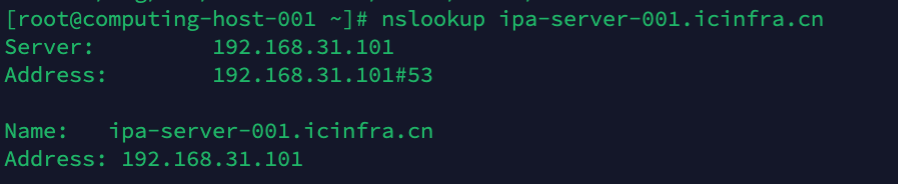

::ffff:192.168.31.101 是一个什么地址?

基于 TiDB 场景式技术架构过程 - 理论篇

![[cloud resources] what software is good for cloud resource security management? Why?](/img/c2/85d6b4a956afc99c2dc195a1ac3938.png)

[cloud resources] what software is good for cloud resource security management? Why?

随机推荐

【云资源】云资源安全管理用什么软件好?为什么?

基于 TiDB 场景式技术架构过程 - 理论篇

PostgreSQL Usage Summary (PIT)

鏈錶(簡單)

01 、Solr7.3.1 在Win10平台下使用jetty的部署及配置

Rk3566 add LED

Introduction to Chapter 8 proof problem of njupt "Xin'an numeral base"

PHP character capture notes 2020-09-14

国富氢能冲刺科创板:拟募资20亿 应收账款3.6亿超营收

Why do I support bat to dismantle "AI research institute"

为什么我认识的机械工程师都抱怨工资低?

Pancake Bulldog robot V2 (code optimized)

04_solr7.3之solrJ7.3的使用

matlab学习2022.7.4

In addition to the root directory, other routes of laravel + xampp are 404 solutions

UE source code reading [1]--- starting with problems delayed rendering in UE

WebRTC的学习(二)

Solution to the prompt of could not close zip file during phpword use

Implementation process of WSDL and soap calls under PHP5

Simple PHP paging implementation