[AI Systems Frontier Updates, Issue 40] Hinton: My Deep Learning Career and Research Mindset; Google Denies Rumors That It Is Abandoning TensorFlow; The Fengshen Framework Is Officially Open-Sourced

2022-07-04 13:12:00 · Zhiyuan Community

1. Geoffrey Hinton: My 50-Year Deep Learning Career and Research Mindset

https://mp.weixin.qq.com/s/kRdlK3VEqeKSr9up0ay5dg

2. Google Denies Rumors That It Is Abandoning TensorFlow

https://mp.weixin.qq.com/s/JAGHRVUb1Mla_wIWoyJPeA

3. The Fengshen Framework Is Officially Open-Sourced: Easily Pre-Train and Fine-Tune the Fengshenbang Large Models

https://mp.weixin.qq.com/s/NtaEVMdTxzTJfVr-uQ419Q

4. Why Can't Existing Deep Learning Frameworks Handle Training GPT-3?

https://mp.weixin.qq.com/s/qZ6qYfAX442vQBiJXwt6uA

5. Fighting Software System Complexity (1): Do Not Multiply Entities Beyond Necessity

https://mp.weixin.qq.com/s/TmbTQYakDcDEh7nbnfumPQ

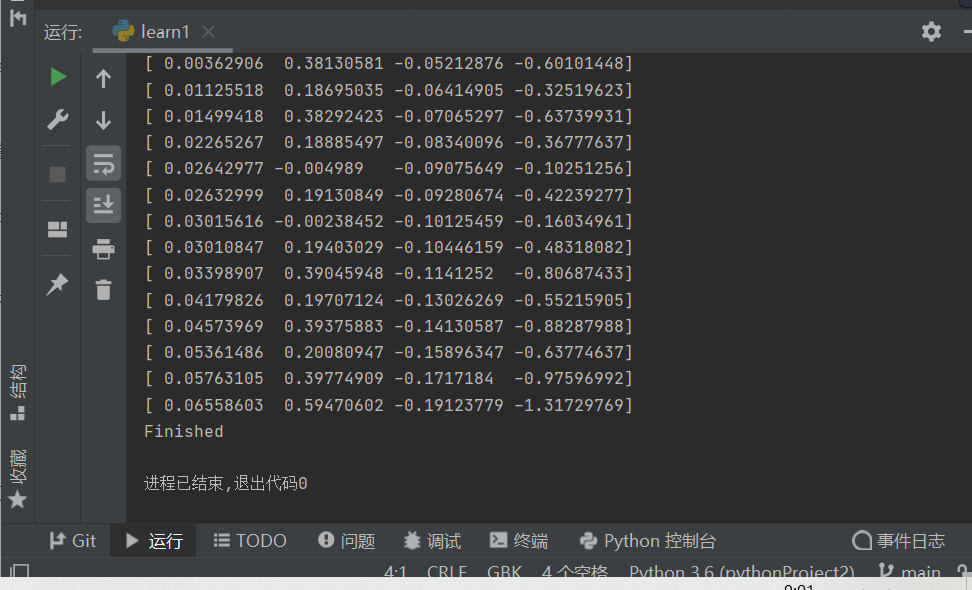

6. How to Build Machine Learning Systems: High-Performance GPU Matrix Multiplication

https://zhuanlan.zhihu.com/p/531498210

7. GPU Sharing for Deep Learning

https://zhuanlan.zhihu.com/p/285994980

8. GPU Optimization Explained Simply: Optimizing Reduce

https://zhuanlan.zhihu.com/p/426978026

9. Starting from MLPerf: How to Lead the Next Wave of AI Accelerators

https://mp.weixin.qq.com/s/n116POs9H9v5wqJA7_km6A

10. Open-Source Compilers for Beginners

https://zhuanlan.zhihu.com/p/515999515

11. OneFlow Source Code Walkthrough: Automatic Inference of Operator Signatures

https://mp.weixin.qq.com/s/_0w3qhIk2e8Dm9_csCfEcQ

What Others Are Reading

- OneFlow v0.7.0 Released

- Illustrated: Learning Rate Scheduling Strategies in OneFlow

- Deriving by Hand the Optimal Parallel Strategy for Distributed Matrix Multiplication

- OneFlow Source Code Walkthrough: Automatic Inference of Operator Signatures

- Training a Model with Hundreds of Billions of Parameters: Four Indispensable Parallel Strategies

- Reading Pathways (2): The Next Step Forward Is OneFlow

- Hinton: My 50-Year Deep Learning Career and Research Mindset

Try OneFlow v0.7.0: https://github.com/Oneflow-Inc/oneflow/

边栏推荐

- 【云原生 | Kubernetes篇】深入了解Ingress(十二)

- Argminer: a pytorch package for processing, enhancing, training, and reasoning argument mining datasets

- Dry goods sorting! How about the development trend of ERP in the manufacturing industry? It's enough to read this article

- PostgreSQL 9.1 飞升之路

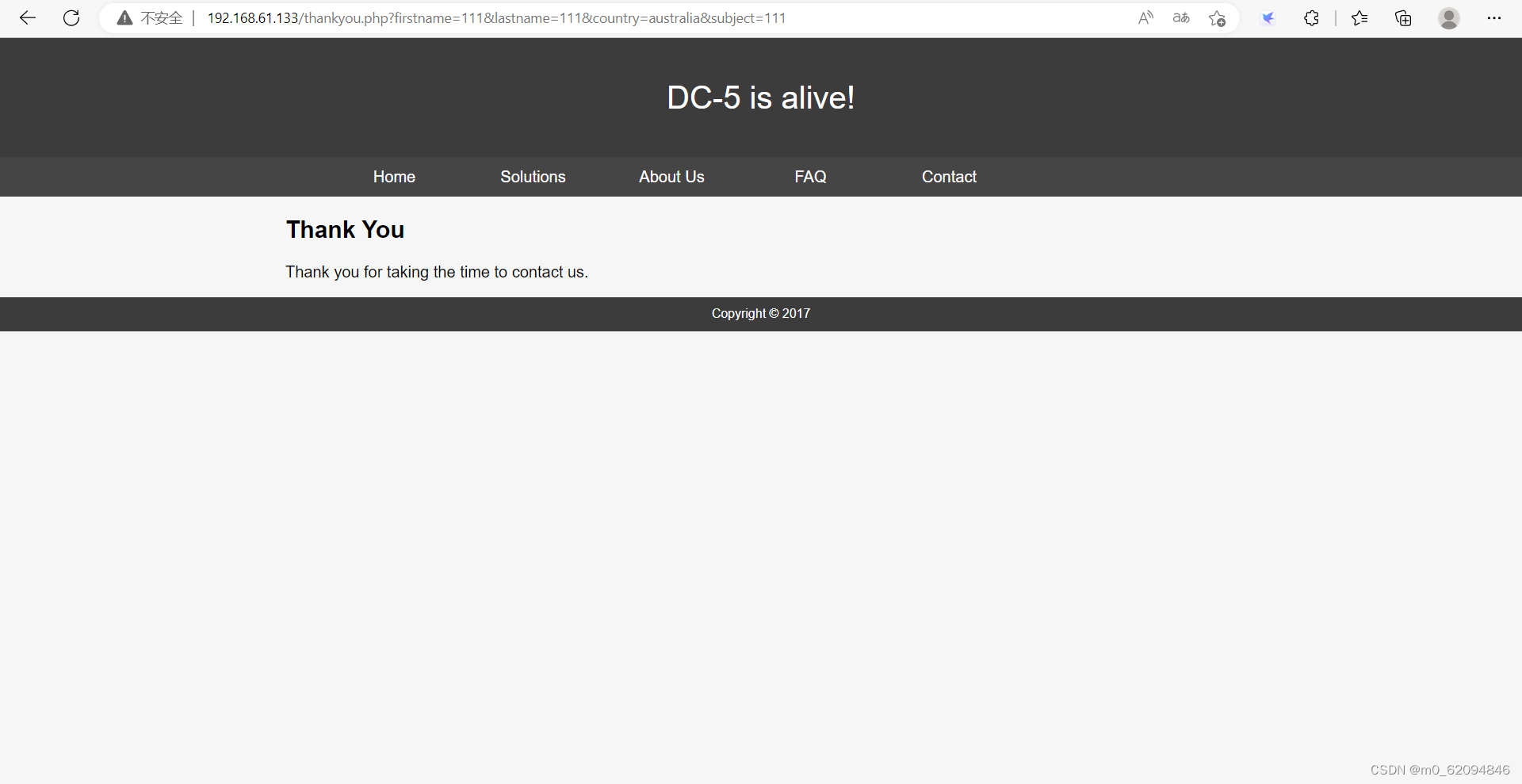

- DC-5靶机

- 强化学习-学习笔记1 | 基础概念

- Rsyslog configuration and use tutorial

- 阿里云有奖体验:用PolarDB-X搭建一个高可用系统

- Annual comprehensive analysis of China's mobile reading market in 2022

- Jetson TX2 configures common libraries such as tensorflow and pytoch

猜你喜欢

轻松玩转三子棋

Golang sets the small details of goproxy proxy proxy, which is applicable to go module download timeout and Alibaba cloud image go module download timeout

CVPR 2022 | TransFusion:用Transformer进行3D目标检测的激光雷达-相机融合

Read the BGP agreement in 6 minutes.

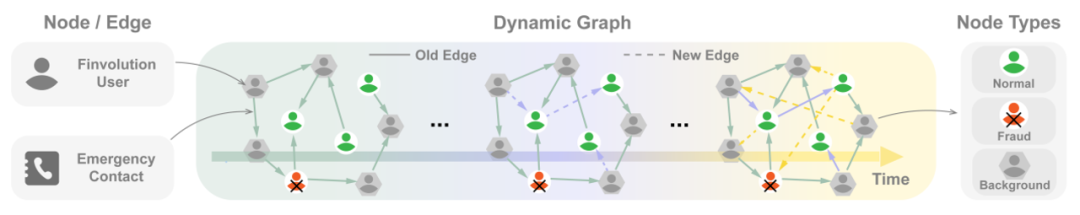

DGraph: 大规模动态图数据集

![[Android kotlin] lambda return statement and anonymous function](/img/d8/a367c26b51d9dbaf53bf4fe2a13917.png)

[Android kotlin] lambda return statement and anonymous function

强化学习-学习笔记1 | 基础概念

DC-5靶机

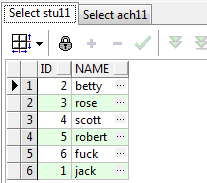

求解:在oracle中如何用一条语句用delete删除两个表中jack的信息

实时云交互如何助力教育行业发展

随机推荐

[Android kotlin] lambda return statement and anonymous function

C語言:求100-999是7的倍數的回文數

读《认知觉醒》

[leetcode] 96 and 95 (how to calculate all legal BST)

【云原生 | Kubernetes篇】深入了解Ingress(十二)

Reptile exercises (I)

WPF double slider control and forced capture of mouse event focus

「小技巧」给Seurat对象瘦瘦身

Agile development / agile testing experience

VIM, another program may be editing the same file If this is the solution of the case

【AI系统前沿动态第40期】Hinton:我的深度学习生涯与研究心法;Google辟谣放弃TensorFlow;封神框架正式开源

n++也不靠谱

【FAQ】华为帐号服务报错 907135701的常见原因总结和解决方法

诸神黄昏时代的对比学习

Etcd 存储,Watch 以及过期机制

Concepts and theories related to distributed transactions

老掉牙的 synchronized 锁优化,一次给你讲清楚!

Apache服务器访问日志access.log设置

Golang sets the small details of goproxy proxy proxy, which is applicable to go module download timeout and Alibaba cloud image go module download timeout

Building intelligent gray-scale data system from 0 to 1: Taking vivo game center as an example