In today's business environment, requirements for high availability keep rising, and deploying core services across availability zones has become standard. Liu Jiawen, senior engineer at Tencent Cloud Database, draws on the team's practical kernel experience to share how Redis can be deployed across multiple availability zones (AZs). The talk covers the native Redis master-slave and cluster architectures, Tencent Cloud's cluster-mode master-slave Redis, the multi-AZ architecture, and the key technical points of multi-AZ. It is divided into the following four parts:

Part one: an introduction to the native Redis architecture, including the master-slave version and the cluster version.

Part two: the Tencent Cloud Redis architecture. To solve the problems of the master-slave architecture, Tencent Cloud runs the master-slave version in cluster mode. To better fit a cloud Redis architecture, it also introduces a Proxy.

Part three: an analysis of why native Redis cannot provide a highly available multi-AZ architecture, and how Tencent Cloud implements multiple availability zones.

Part four: several key technical points of implementing multiple availability zones, including node deployment, nearby access, and the master election mechanism.

Redis native architecture

The native Redis architecture includes a master-slave version and a cluster version. The master-slave version consists of one master and multiple slaves. High availability for master-slave usually relies on sentinel mode. After a node fails, sentinel mode can automatically take the failed node offline, but it cannot add a replacement node. Replacing nodes requires a control component, so a highly available master-slave deployment is generally built from monitoring components and control components working together. Either way, the availability of the master-slave version depends on external arbitration components, which brings long recovery times and the problem of keeping those components themselves highly available. In addition, the master-slave version suffers from the double-write problem and cannot promote a new master without data loss.

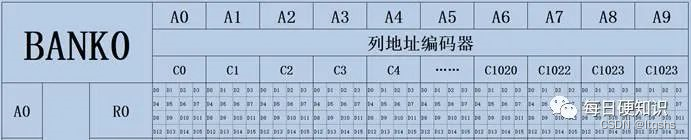

In the Redis cluster version, nodes are independent of one another and communicate through the Gossip protocol. Each node stores information about all nodes in the cluster. Data in the cluster version is sharded by slot. There are 16384 slots in total, and the slots owned by a node are not fixed: a slot can be migrated by moving its keys. With slot migration, the cluster version can rebalance data and adjust shards dynamically.
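To make the slot mapping concrete, the following sketch shows how a key could be mapped to one of the 16384 slots. It relies on the rule used by open-source Redis Cluster (CRC16 of the key, modulo 16384, hashing only the `{...}` hash tag when one is present), which the talk itself does not spell out. The function name `key_slot` is illustrative.

```python
import binascii

NUM_SLOTS = 16384  # total number of slots in the cluster version


def key_slot(key: bytes) -> int:
    """Map a key to a slot: CRC16 of the key modulo 16384."""
    # If the key contains a non-empty {...} section, only that part is hashed,
    # so related keys can be forced into the same slot.
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            key = key[start + 1:end]
    # binascii.crc_hqx computes a CRC-CCITT (polynomial 0x1021) checksum,
    # starting from the initial value passed as the second argument.
    return binascii.crc_hqx(key, 0) % NUM_SLOTS


if __name__ == "__main__":
    for k in (b"user:1000", b"{user:1000}:profile", b"{user:1000}:orders"):
        print(k.decode(), "->", key_slot(k))
```

Because the two tagged keys hash only `user:1000`, they land in the same slot as the plain key, which is what makes multi-key operations on them possible in a sharded deployment.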

The Redis cluster version integrates the Gossip protocol, which provides automatic node discovery and automatic disaster recovery. Automatic discovery means that a node that wants to join the cluster only needs to join through any node already in the cluster. After it joins, information about the new node spreads through Gossip to all nodes in the cluster. Likewise, when a node fails, the other nodes send the failure information to the rest of the cluster, and once the failure-judgment logic is satisfied the node is automatically taken offline. This is the automatic disaster recovery function of the Redis cluster version.

To explain how a single availability zone is deployed, we need to look more closely at automatic disaster recovery in the Redis cluster version. It has two steps. The first is failure judgment: when more than half of the master nodes consider a node faulty, the cluster considers that node failed. The second is the election: a slave of the failed node initiates a vote, and when more than half of the master nodes authorize it to become the master, it switches its role to master and broadcasts its new role information.
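Both steps rest on the same "more than half of the master nodes" rule. The sketch below expresses that rule in a few lines. The function names and the use of plain string node names are illustrative assumptions, not Redis internals.

```python
def has_majority(votes: int, master_count: int) -> bool:
    """True when strictly more than half of the masters agree."""
    return votes > master_count // 2


def is_failed(reports: set[str], masters: set[str]) -> bool:
    """Step 1: a node is marked failed once a majority of masters report it."""
    return has_majority(len(reports & masters), len(masters))


def can_promote(authorizations: set[str], masters: set[str]) -> bool:
    """Step 2: a slave becomes master once a majority of masters authorize it."""
    return has_majority(len(authorizations & masters), len(masters))


if __name__ == "__main__":
    masters = {"m1", "m2", "m3"}
    # m1 is down; m2 and m3 both report it, which is 2 of 3 masters.
    print(is_failed({"m2", "m3"}, masters))
    # Only m2 has authorized the slave so far, which is not yet a majority.
    print(can_promote({"m2"}, masters))
```

Note that the failed master still counts toward the total, so losing too many masters at once makes both steps impossible. This is the root of the deployment requirements discussed next.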

From these two steps, it is not hard to see that the Redis cluster version has two deployment requirements. First, a master and its slave cannot be on the same machine; if that machine fails, the whole shard fails and the cluster becomes unavailable. Second, the number of nodes on one machine cannot exceed half of the number of shards. Note that this limits the number of nodes, not just the number of master nodes.

The right part of the figure above shows an incorrect deployment. As long as the roles of the cluster nodes do not change, it satisfies high availability. But after a master-slave switchover, one machine can end up hosting a majority of the master nodes, and once that machine fails the cluster cannot recover automatically. Therefore, a three-shard cluster needs six machines in total to be highly available.
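The two deployment requirements can be checked mechanically. The following sketch assumes a hypothetical layout description (shard name mapped to the machines hosting its master and slave) and reports violations of either rule. It is an illustration of the rules above, not a Tencent Cloud tool.

```python
from collections import Counter


def placement_problems(shards: dict[str, dict[str, str]]) -> list[str]:
    """shards maps shard name -> {"master": machine, "slave": machine}."""
    problems = []
    per_machine = Counter()
    for name, nodes in shards.items():
        if nodes["master"] == nodes["slave"]:
            problems.append(f"{name}: master and slave share {nodes['master']}")
        per_machine.update(nodes.values())
    for machine, count in per_machine.items():
        # Worst case: after switchovers every node on this machine is a master.
        if count * 2 > len(shards):
            problems.append(f"{machine}: hosts {count} nodes for {len(shards)} shards")
    return problems


if __name__ == "__main__":
    three_machines = {
        "shard1": {"master": "A", "slave": "B"},
        "shard2": {"master": "B", "slave": "C"},
        "shard3": {"master": "C", "slave": "A"},
    }
    six_machines = {
        "shard1": {"master": "A", "slave": "D"},
        "shard2": {"master": "B", "slave": "E"},
        "shard3": {"master": "C", "slave": "F"},
    }
    print(placement_problems(three_machines))
    print(placement_problems(six_machines))
```

With three shards, any machine hosting two nodes already exceeds half of the shard count, which is why the three-machine layout is flagged and the six-machine layout is not.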

Tencent Cloud Redis architecture

To solve the dual-master problem and to support lossless master promotion, Tencent Cloud runs the master-slave version in cluster mode. Doing so first requires solving three problems:

The first is that cluster mode requires at least three voting (arbitration) nodes. Because the master-slave version has only one Master, we introduced two Arbiter nodes to reach three arbitration nodes. An Arbiter only has voting rights and does not store data. With this change, the master-slave version meets the high-availability requirements of the cluster version.

The second is the multi-DB problem. Because Tencent Cloud introduced a Proxy, which reduces the complexity of managing multiple DBs, the single-DB limit can be lifted.

The last is enabling cross-slot access. In the master-slave version all slots are on one node, so there is no cross-node problem and the cross-slot limit can be removed.

With these main problems solved, cluster mode is fully compatible with the master-slave version. It also gains the cluster version's automatic disaster recovery and lossless master promotion, and it can be upgraded seamlessly to the cluster version when the business requires it.

Because there are many Client versions, Tencent Cloud introduced a Proxy to stay compatible with different Clients. Besides hiding the differences between Clients, the Proxy also hides the differences between backend Redis versions, so a business can use a master-slave Client against a cluster-version backend. The Proxy also adds the traffic isolation that Redis lacks, supports richer monitoring metrics, and can merge requests from multiple connections into pipelined requests forwarded to the backend, which improves Redis performance.

Redis multi-AZ architecture

Deploying a highly available multi-AZ architecture requires at least two conditions:

A master and its slave cannot be deployed in the same availability zone;

The number of nodes in one availability zone cannot exceed half of the number of shards.

If we deploy a three-shard instance, it would need six availability zones to be highly available. Even if enough availability zones exist, there is still performance jitter. Access within the same zone performs the same as a single-zone deployment, but cross-zone access adds at least 2ms of latency. So native Redis is not suitable for multi-AZ deployment. To achieve a highly available deployment, we need to analyze the problem more deeply. High availability is not satisfied in this scenario mainly because master nodes drift and voting rights are bound to master nodes. When voting rights move between availability zones, a majority of the voting nodes can end up in a single availability zone, and the cluster then cannot recover after that zone fails.

From this analysis, the high-availability problem is caused by the drift of voting rights. If voting rights are fixed on certain nodes, they can no longer drift. Voting rights cannot be fixed on slave or master nodes, though. For multiple availability zones, the best approach is to introduce a ZoneArbiter node, which only judges node failure and elects masters and stores no data. This separates voting rights from storage nodes. Once they are separated, high availability holds whether the data-node Masters all sit in one availability zone or are spread across different ones. Business access within the primary zone performs the same as in a single-zone deployment.
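The effect of drifting versus fixed voting rights can be sketched by asking whether the voters outside a failed zone still form a majority. The zone names and function names below are illustrative assumptions.

```python
def survives_zone_failure(voter_zones: list[str], failed_zone: str) -> bool:
    """True if the voters outside failed_zone are still a strict majority."""
    remaining = sum(1 for zone in voter_zones if zone != failed_zone)
    return remaining > len(voter_zones) // 2


def tolerates_any_single_zone_failure(voter_zones: list[str]) -> bool:
    """True if losing any one zone still leaves a voting majority."""
    return all(survives_zone_failure(voter_zones, z) for z in set(voter_zones))


if __name__ == "__main__":
    # Native cluster: voters are the masters, and switchovers have moved
    # two of the three masters into az1.
    drifted_masters = ["az1", "az1", "az2"]
    # ZoneArbiter design: three voters pinned to three different zones.
    zone_arbiters = ["az1", "az2", "az3"]
    print(tolerates_any_single_zone_failure(drifted_masters))
    print(tolerates_any_single_zone_failure(zone_arbiters))
```

With the voters pinned, the location of the data-node Masters no longer affects the result, so they can all be placed in the primary zone for performance.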

Key multi-AZ technologies

With high availability ensured, we next introduce three key points of multi-AZ: how to deploy for high availability, how to achieve optimal performance, and how to ensure the cluster recovers automatically after an availability zone fails.

Node deployment also has to satisfy two conditions. First, a master and its slave cannot be in the same availability zone. This is easy to satisfy with just two zones. Second, at least three ZoneArbiter nodes must be located in different availability zones. This requires three zones; if three zones are not available, a ZoneArbiter can be deployed in the nearest region instead. Because data nodes and arbitration nodes are separated, a ZoneArbiter located elsewhere only adds milliseconds of delay to failure judgment and master promotion and does not affect performance or high availability.

With deployment covered, let us look at the data access path, which is divided into a read path and a write path. A write goes from the client to the LB, then to the Proxy, and is finally written to the corresponding Master. For a read with the nearby-read feature enabled, the request goes from the client to the LB, then to the Proxy, which finally selects a nearby node to read from. Nearest-route selection covers both the LB's choice and the Proxy's choice. The LB selects a Proxy according to the Client's address. For a read, the Proxy selects nodes in its own availability zone according to its own zone information. For a write, the Proxy has to access the Master node in the primary zone. The key to nearby access is that the LB and the Proxy store the availability zone information of their backends. With this information, nearest-route selection can be implemented.
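The Proxy side of nearest-route selection can be sketched as follows. The `Backend` type, the `pick_backend` function, the random choice among same-zone nodes, and the fallback to the Master when the Proxy's zone has no node are all assumptions made for illustration; the talk only states that reads go to a node in the Proxy's zone and writes go to the Master.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    name: str
    zone: str
    is_master: bool


def pick_backend(command_is_write: bool, proxy_zone: str, shard: list[Backend],
                 read_nearby: bool = True) -> Backend:
    """Choose the backend node for one request on one shard."""
    master = next(node for node in shard if node.is_master)
    if command_is_write or not read_nearby:
        return master  # writes always go to the Master, wherever it is
    same_zone = [node for node in shard if node.zone == proxy_zone]
    # Assumed fallback: use the Master if this zone has no node for the shard.
    return random.choice(same_zone) if same_zone else master


if __name__ == "__main__":
    shard = [Backend("master-1", "az1", True), Backend("slave-1", "az2", False)]
    print(pick_backend(False, "az2", shard).name)  # read served inside az2
    print(pick_backend(True, "az2", shard).name)   # write crosses to az1
```

The selection only works because the Proxy knows the zone of each backend, which is the availability zone information the paragraph above says must be stored.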

The biggest differences between single-AZ and multi-AZ failures are the following. First, after a node in a multi-AZ instance fails, the master may switch to another availability zone, causing performance fluctuation. Second, when a whole availability zone of a multi-AZ instance fails, far more nodes need to hold elections than after a failure in a single zone. In a multi-node failure test with 128 shards and 63 nodes failing at the same time, more than 99% of runs failed to restore the cluster. The key to recovery is the Redis master election mechanism, so we need to understand it more deeply.

First, the authorization mechanism on the master side. When a master node receives a failover request, it checks that it is itself a Master, then checks that the cluster epoch of the requesting node is the latest and that it has not yet voted in this epoch. To prevent multiple slaves of the same master from initiating votes repeatedly, repeated authorization for the same shard is not allowed within 30s. Finally, it checks whether the slot ownership claimed by the node initiating the failover is correct. If all checks pass, it votes for that node.

In summary, the conditions for a master node to vote are:

- The cluster information of the node that initiated the vote is up to date;

- Only one node can be authorized per epoch;

- The same shard can only be authorized once within 30s;

- The slot ownership is correct.
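The four conditions can be sketched as a single authorization check. This is a simplified model rather than the Redis source: `MasterState`, `should_authorize`, and the boolean `slots_owned_correctly` (standing in for the real slot-ownership comparison) are hypothetical names.

```python
from dataclasses import dataclass, field

VOTE_INTERVAL_S = 30  # the same shard is authorized at most once per 30s


@dataclass
class MasterState:
    is_master: bool
    current_epoch: int
    last_vote_epoch: int = 0
    # shard id -> time (seconds) of the last authorization given to that shard
    last_vote_time: dict[str, float] = field(default_factory=dict)


def should_authorize(me: MasterState, request_epoch: int, shard_id: str,
                     slots_owned_correctly: bool, now: float) -> bool:
    """Return True and record the vote if every condition holds."""
    if not me.is_master:
        return False
    if request_epoch < me.current_epoch:    # requester's cluster view is stale
        return False
    if me.last_vote_epoch >= request_epoch:  # already voted in this epoch
        return False
    last = me.last_vote_time.get(shard_id)
    if last is not None and now - last < VOTE_INTERVAL_S:
        return False                         # shard authorized too recently
    if not slots_owned_correctly:
        return False
    me.current_epoch = request_epoch
    me.last_vote_epoch = request_epoch
    me.last_vote_time[shard_id] = now
    return True
```

A master that has just voted for one shard in an epoch refuses every other request in that epoch, which is why many slaves requesting votes at the same moment tend to block each other.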

Having covered master authorization, now look at how a slave node initiates a vote. The slave first checks that it has not initiated a vote within the last minute, then checks that its data is valid (it must not have been disconnected from the master for more than 160s). If the slave is valid, it computes the time at which to initiate the vote. When that time arrives, it increments the cluster epoch by 1 and initiates the failover. If the authorizations from master nodes exceed half of the number of shards, it promotes itself to master and broadcasts its node information. There are two key points for slave voting: a vote can only be retried once per minute, and retries stop after 160s, so a slave can vote at most 3 times. When more than half of the masters authorize it, the promotion succeeds; otherwise it times out.

After a master node fails, the new master should preferably be chosen from the same availability zone. The time at which different slaves in a shard start the election is determined by their offset ranking plus a random delay of up to 500ms. In write-light, read-heavy workloads, offset rankings are mostly identical. In a single-zone deployment, picking a slave at random has no effect, but across multiple availability zones it causes performance jitter. So same-zone ranking needs to be added to the ranking. This requires each node to know the availability zone of every node. Gossip happens to have a reserved field, so we store availability zone information in it. Each node's zone information is then broadcast to all nodes, so every node knows the zone of every other node. When voting, slaves are ranked by offset and availability zone information, which gives the same availability zone priority.
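One way to combine the two ranking criteria is sketched below: a larger replication offset ranks first, and among equal offsets a slave in the failed master's zone ranks ahead of one in another zone. The `Slave` type and the exact tie-breaking order are assumptions for illustration, since the talk does not give the precise formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slave:
    node_id: str
    zone: str         # availability zone learned through the Gossip field
    repl_offset: int  # replication offset; larger means newer data


def election_rank(me: Slave, peers: list[Slave], failed_master_zone: str) -> int:
    """Rank 0 starts the election first; higher ranks wait longer."""
    def key(s: Slave) -> tuple[int, bool]:
        # Larger offset first; on a tie, slaves in the failed master's zone first.
        return (-s.repl_offset, s.zone != failed_master_zone)
    # My rank is the number of peers that sort strictly ahead of me.
    return sum(1 for p in peers if key(p) < key(me))


if __name__ == "__main__":
    a = Slave("a", "az2", 100)
    b = Slave("b", "az1", 100)
    c = Slave("c", "az1", 90)
    for me, others in ((a, [b, c]), (b, [a, c]), (c, [a, b])):
        print(me.node_id, election_rank(me, others, "az1"))
```

Here `b` and `a` hold the same data, but `b` sits in the failed master's zone and therefore goes first, keeping the new master in the primary zone when no data would be lost by doing so.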

With the election for a single node analyzed, let us look at the voting timing when multiple nodes fail. When multiple nodes initiate votes, each picks a random point within 500 milliseconds and then votes. Suppose the cluster has 100 master nodes and all votes must finish within 500 milliseconds: each node gets about 5 milliseconds, which is certainly not enough for a voting round. The earlier multi-node failure test results also show that elections almost never succeed in this situation.

Voting fails because the nodes in the cluster initiate their votes at random times. Since random timing causes conflicts, the most direct solution is to vote in order. There are two broad ways to do that. One is to rely on external control. But an external dependency must itself be highly available, and in general the storage path should not rely on external components for high availability; otherwise overall availability is bounded by both the external components and the storage nodes. The other is to implement ordered voting inside the cluster, which can again be done in two ways. One is for the arbitration nodes to authorize in order, but this easily breaks the principle that only one slave is voted for per epoch, and once that is broken the voting result may not be as expected. The other is for the slave nodes to initiate votes in order.

For slaves to initiate votes in order, every node must compute the same ranking. A node ID is unique for the node's lifetime and every node knows the IDs of the other nodes, so ranking by node ID is a good choice. Before voting, each slave checks whether its node ID ranks first. If so, it votes; if not, it waits 500ms. The 500ms wait guards against the first-ranked node failing its vote. With this optimization, voting succeeds. Because the system is distributed, there is still a small probability of failure. In that case external monitoring is needed to force a promotion so that the cluster recovers as soon as possible.
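The node-ID ordering can be sketched with a small simulation. It assumes every candidate knows the set of slaves still waiting to be promoted (`pending_ids`, a hypothetical input) and that the first-ranked candidate always wins its round; the real system also has to cope with a first-ranked node that fails, which is what the 500ms wait is for.

```python
WAIT_MS = 500  # how long a candidate waits before checking the queue again


def next_action(my_id: str, pending_ids: set[str]) -> str:
    """Vote only if my node ID ranks first among the pending candidates."""
    return "vote" if my_id == min(pending_ids) else "wait"


def simulate(pending_ids: set[str]) -> list[str]:
    """Return the order in which candidates vote, one per round."""
    pending = set(pending_ids)
    order = []
    while pending:
        voters = [n for n in sorted(pending) if next_action(n, pending) == "vote"]
        # Exactly one candidate votes per round, so requests never collide.
        assert len(voters) == 1
        order.append(voters[0])
        pending.remove(voters[0])
    return order


if __name__ == "__main__":
    print(simulate({"node-c", "node-a", "node-b"}))
```

Since every node sorts the same IDs the same way, no coordination message is needed to agree on the order, which keeps the scheme inside the cluster and free of external dependencies.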

Expert Q&A

1. Can double writes also happen when connecting to Redis through sentinel?

Answer: Double writes concern existing connections. If an existing connection is not closed, it keeps writing to the old master while new connections write to the new master; this is a double write. In cluster mode, double writes last at most 15s (the failure-judgment time), because after 15s the old master finds itself cut off from the majority and switches to the cluster Fail state. At that point reads and writes return errors, which avoids the double-write problem.

2. With voting fixed on certain nodes, do those nodes become single points of failure?

Answer: Single points cannot be completely avoided. For example, with one master and one slave, if the master dies and the slave is promoted, the new master is a single point, and we have to add a node to make up for it. Here, if an arbitration node fails, we just add another one. As long as a majority of arbitration nodes work normally, the cluster is fine. Because arbitration and data access are separate, the failure and replacement of arbitration nodes have no impact on data access.

3. What is the failover strategy for the failure of a whole availability zone versus the failure of nodes within an availability zone?

Answer: Whether a whole availability zone fails or a single machine failure takes down multiple nodes, the election should be completed by sequential voting to reduce conflicts. If there is a slave node in the same availability zone, that node should be promoted first.

4. For a write request, does Redis wait synchronously for replication to complete?

Answer: Redis is not strongly synchronous. A client that needs synchronous replication has to use the WAIT command.

5. What is the maximum number of Redis shards supported? Are there performance problems when many nodes use gossip?

Answer: Preferably no more than 500. Beyond 500 nodes, ping traffic causes performance jitter, and at that point the only option is to increase cluster_node_timeout to reduce it.

6. With master nodes in multiple zones, how is write synchronization implemented?

Answer: You are probably asking about globally multi-active instances. Multi-active instances generally require data to be persisted to disk first and then synchronized to other nodes. During synchronization, it must be ensured that the slave node receives the data first, before it is sent to other nodes. Problems such as data synchronization loops and data conflicts also have to be solved.

7. Is the current master election logic very different from Raft's leader election?

Answer: What they share is that both require authorization from a majority and both use a randomized election time. The difference is that in Redis the voting rights belong to the master nodes (in the multi-shard case), whereas in Raft all nodes can be considered voters (in the single-shard case). Raft returns only after the data has been written to more than half of the nodes. Redis uses weak synchronization (WAIT can be used to return after the master and slave are synchronized) and returns immediately after writing to the master. After a master fails, the slave with newer data is preferred (data offsets are propagated to other nodes through Gossip), but there is no guarantee that it wins.

About the author

Liu Jiawen, senior engineer at Tencent Cloud Database, has worked on Linux kernel and Redis related R&D and is currently mainly responsible for the development and architecture design of Tencent Cloud Database Redis, with rich experience in Redis high availability and kernel development.
