TensorRTx-YOLOv5 Project Walkthrough (Part 2)

2022-08-04 05:24:00 【单胖】

Detailed Workflow

    if (!wts_name.empty()) {
        IHostMemory* modelStream{ nullptr };
        APIToModel(BATCH_SIZE, &modelStream, is_p6, gd, gw, wts_name);
        assert(modelStream != nullptr);
        std::ofstream p(engine_name, std::ios::binary);
        if (!p) {
            std::cerr << "could not open plan output file" << std::endl;
            return -1;
        }
        p.write(reinterpret_cast<const char*>(modelStream->data()), modelStream->size());
        modelStream->destroy();
        return 0;
    }

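When a weights file name is supplied (wts_name is non-empty), the program declares a variable modelStream of type IHostMemory*, has APIToModel fill it with the serialized engine, and writes that engine to local disk.

The engine file is saved locally because rebuilding the engine on every run would make startup very slow. The engine-building procedure itself lives in the function APIToModel, so let us look at it in detail.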
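The caching idea can be shown without TensorRT at all. The following is a minimal sketch, assuming a hypothetical helper load_or_build and a caller-supplied build callback that stands in for the slow engine build; neither name comes from the tensorrtx project.

```cpp
#include <fstream>
#include <functional>
#include <iterator>
#include <string>
#include <vector>

// Hypothetical helper (not part of tensorrtx): return the serialized engine
// bytes stored at `path`, or call `build` once and cache its result there.
std::vector<char> load_or_build(const std::string& path,
                                const std::function<std::vector<char>()>& build) {
    std::ifstream in(path, std::ios::binary);
    if (in) {
        // Cache hit: read the whole file back into memory.
        return std::vector<char>(std::istreambuf_iterator<char>(in),
                                 std::istreambuf_iterator<char>());
    }
    // Cache miss: run the slow build, then persist the bytes for next time.
    std::vector<char> blob = build();
    std::ofstream out(path, std::ios::binary);
    if (out) {
        out.write(blob.data(), static_cast<std::streamsize>(blob.size()));
    }
    return blob;
}
```

In a real program the returned bytes would presumably be handed to the TensorRT runtime for deserialization, and a stale or empty cache file would need to be detected, which this sketch does not attempt.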

    void APIToModel(unsigned int maxBatchSize, IHostMemory** modelStream, bool& is_p6, float& gd, float& gw, std::string& wts_name) {
        // Create builder
        IBuilder* builder = createInferBuilder(gLogger);
        IBuilderConfig* config = builder->createBuilderConfig();

        // Create model to populate the network, then set the outputs and create an engine
        ICudaEngine *engine = nullptr;
        if (is_p6) {
            engine = build_engine_p6(maxBatchSize, builder, config, DataType::kFLOAT, gd, gw, wts_name);
        } else {
            engine = build_engine(maxBatchSize, builder, config, DataType::kFLOAT, gd, gw, wts_name);
        }
        assert(engine != nullptr);

        // Serialize the engine
        (*modelStream) = engine->serialize();

        // Close everything down
        engine->destroy();
        builder->destroy();
        config->destroy();
    }

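APIToModel follows the usual recipe for building an engine. It first declares builder, of type IBuilder*; many later components are created through it. It then declares config, of type IBuilderConfig*, obtained from the builder's createBuilderConfig method. As the name suggests, this object holds the configuration for the build process.

Next it declares engine, of type ICudaEngine*, initialized to a null pointer.

It then calls build_engine() (or build_engine_p6() when is_p6 is set) to create the engine; build_engine is analyzed below. Once the engine is available, it is serialized and the result is assigned to *modelStream. An engine can only be saved to disk after it has been serialized.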
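APIToModel releases engine, builder, and config with explicit destroy() calls, which are easy to skip on an early return. One possible alternative, sketched here under assumptions, is to let std::unique_ptr call destroy() automatically. DestroyDeleter, DestroyPtr, and FakeEngine are hypothetical names; FakeEngine is only a stand-in with a destroy() method, not a TensorRT class.

```cpp
#include <memory>

// Deleter for any type that exposes a destroy() method, as the TensorRT
// objects used in this article do.
struct DestroyDeleter {
    template <typename T>
    void operator()(T* obj) const {
        if (obj) obj->destroy();
    }
};

// Owning pointer that calls destroy() when it goes out of scope.
template <typename T>
using DestroyPtr = std::unique_ptr<T, DestroyDeleter>;

// Stand-in type for illustration only; it is NOT a TensorRT class.
struct FakeEngine {
    int* destroyed_counter;  // incremented once when destroy() runs
    void destroy() {
        ++(*destroyed_counter);
        delete this;
    }
};
```

With such a wrapper, a declaration like DestroyPtr<FakeEngine> engine(new FakeEngine{&counter}) would release the object at the end of its scope without a manual destroy() call.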

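Back in the main function, a std::ofstream object p is created, p.write() writes modelStream->size() bytes starting at modelStream->data(), and modelStream is then released with destroy().

Now let us return to the build_engine() function.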

    ICudaEngine* build_engine(unsigned int maxBatchSize, IBuilder* builder, IBuilderConfig* config, DataType dt, float& gd, float& gw, std::string& wts_name) {
        INetworkDefinition* network = builder->createNetworkV2(0U);

        // Create input tensor of shape {3, INPUT_H, INPUT_W} with name INPUT_BLOB_NAME
        ITensor* data = network->addInput(INPUT_BLOB_NAME, dt, Dims3{ 3, INPUT_H, INPUT_W });
        assert(data);

        std::map<std::string, Weights> weightMap = loadWeights(wts_name);

        /* ------ yolov5 backbone------ */
        auto focus0 = focus(network, weightMap, *data, 3, get_width(64, gw), 3, "model.0");
        auto conv1 = convBlock(network, weightMap, *focus0->getOutput(0), get_width(128, gw), 3, 2, 1, "model.1");
        auto bottleneck_CSP2 = C3(network, weightMap, *conv1->getOutput(0), get_width(128, gw), get_width(128, gw), get_depth(3, gd), true, 1, 0.5, "model.2");
        auto conv3 = convBlock(network, weightMap, *bottleneck_CSP2->getOutput(0), get_width(256, gw), 3, 2, 1, "model.3");
        auto bottleneck_csp4 = C3(network, weightMap, *conv3->getOutput(0), get_width(256, gw), get_width(256, gw), get_depth(9, gd), true, 1, 0.5, "model.4");
        auto conv5 = convBlock(network, weightMap, *bottleneck_csp4->getOutput(0), get_width(512, gw), 3, 2, 1, "model.5");
        auto bottleneck_csp6 = C3(network, weightMap, *conv5->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(9, gd), true, 1, 0.5, "model.6");
        auto conv7 = convBlock(network, weightMap, *bottleneck_csp6->getOutput(0), get_width(1024, gw), 3, 2, 1, "model.7");
        auto spp8 = SPP(network, weightMap, *conv7->getOutput(0), get_width(1024, gw), get_width(1024, gw), 5, 9, 13, "model.8");

        /* ------ yolov5 head ------ */
        auto bottleneck_csp9 = C3(network, weightMap, *spp8->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.9");
        auto conv10 = convBlock(network, weightMap, *bottleneck_csp9->getOutput(0), get_width(512, gw), 1, 1, 1, "model.10");

        auto upsample11 = network->addResize(*conv10->getOutput(0));
        assert(upsample11);
        upsample11->setResizeMode(ResizeMode::kNEAREST);
        upsample11->setOutputDimensions(bottleneck_csp6->getOutput(0)->getDimensions());

        ITensor* inputTensors12[] = { upsample11->getOutput(0), bottleneck_csp6->getOutput(0) };
        auto cat12 = network->addConcatenation(inputTensors12, 2);
        auto bottleneck_csp13 = C3(network, weightMap, *cat12->getOutput(0), get_width(1024, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.13");
        auto conv14 = convBlock(network, weightMap, *bottleneck_csp13->getOutput(0), get_width(256, gw), 1, 1, 1, "model.14");

        auto upsample15 = network->addResize(*conv14->getOutput(0));
        assert(upsample15);
        upsample15->setResizeMode(ResizeMode::kNEAREST);
        upsample15->setOutputDimensions(bottleneck_csp4->getOutput(0)->getDimensions());

        ITensor* inputTensors16[] = { upsample15->getOutput(0), bottleneck_csp4->getOutput(0) };
        auto cat16 = network->addConcatenation(inputTensors16, 2);
        auto bottleneck_csp17 = C3(network, weightMap, *cat16->getOutput(0), get_width(512, gw), get_width(256, gw), get_depth(3, gd), false, 1, 0.5, "model.17");

        /* ------ detect ------ */
        IConvolutionLayer* det0 = network->addConvolutionNd(*bottleneck_csp17->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.0.weight"], weightMap["model.24.m.0.bias"]);
        auto conv18 = convBlock(network, weightMap, *bottleneck_csp17->getOutput(0), get_width(256, gw), 3, 2, 1, "model.18");
        ITensor* inputTensors19[] = { conv18->getOutput(0), conv14->getOutput(0) };
        auto cat19 = network->addConcatenation(inputTensors19, 2);
        auto bottleneck_csp20 = C3(network, weightMap, *cat19->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.20");
        IConvolutionLayer* det1 = network->addConvolutionNd(*bottleneck_csp20->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.1.weight"], weightMap["model.24.m.1.bias"]);
        auto conv21 = convBlock(network, weightMap, *bottleneck_csp20->getOutput(0), get_width(512, gw), 3, 2, 1, "model.21");
        ITensor* inputTensors22[] = { conv21->getOutput(0), conv10->getOutput(0) };
        auto cat22 = network->addConcatenation(inputTensors22, 2);
        auto bottleneck_csp23 = C3(network, weightMap, *cat22->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.23");
        IConvolutionLayer* det2 = network->addConvolutionNd(*bottleneck_csp23->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.2.weight"], weightMap["model.24.m.2.bias"]);

        auto yolo = addYoLoLayer(network, weightMap, "model.24", std::vector<IConvolutionLayer*>{ det0, det1, det2 });
        yolo->getOutput(0)->setName(OUTPUT_BLOB_NAME);
        network->markOutput(*yolo->getOutput(0));

        // Build engine
        builder->setMaxBatchSize(maxBatchSize);
        config->setMaxWorkspaceSize(16 * (1 << 20)); // 16MB
    #if defined(USE_FP16)
        config->setFlag(BuilderFlag::kFP16);
    #elif defined(USE_INT8)
        std::cout << "Your platform support int8: " << (builder->platformHasFastInt8() ? "true" : "false") << std::endl;
        assert(builder->platformHasFastInt8());
        config->setFlag(BuilderFlag::kINT8);
        Int8EntropyCalibrator2* calibrator = new Int8EntropyCalibrator2(1, INPUT_W, INPUT_H, "./coco_calib/", "int8calib.table", INPUT_BLOB_NAME);
        config->setInt8Calibrator(calibrator);
    #endif

        std::cout << "Building engine, please wait for a while..." << std::endl;
        ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);
        std::cout << "Build engine successfully!" << std::endl;

        // Don't need the network any more
        network->destroy();

        // Release host memory
        for (auto& mem : weightMap)
        {
            free((void*)(mem.second.values));
        }

        return engine;
    }

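This function is clearly a hand-written definition of the YOLOv5 network structure. It first declares network, of type INetworkDefinition*, which is created with builder->createNetworkV2(). It then creates the network's input tensor: data, of type ITensor*, is created with network->addInput, which takes the tensor's name, data type, and dimensions.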
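The dimensions passed to addInput describe a single image in channel, height, width order, so the host buffer for a batch holds batch × 3 × INPUT_H × INPUT_W elements. A minimal sketch of that arithmetic follows; input_elements and input_bytes_fp32 are hypothetical helper names, and the 640 × 640 resolution mentioned afterwards is an assumption, since this section does not state INPUT_H or INPUT_W.

```cpp
#include <cstddef>

// Element count of a CHW input tensor for a batch of images.
constexpr std::size_t input_elements(std::size_t batch, std::size_t channels,
                                     std::size_t height, std::size_t width) {
    return batch * channels * height * width;
}

// Bytes required when every element is a 32-bit float (DataType::kFLOAT).
constexpr std::size_t input_bytes_fp32(std::size_t batch, std::size_t channels,
                                       std::size_t height, std::size_t width) {
    return input_elements(batch, channels, height, width) * sizeof(float);
}
```

Under the assumed 640 × 640 input, input_elements(1, 3, 640, 640) evaluates to 1,228,800 elements per image.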

Next come the definitions of the individual YOLOv5 layers. The custom layers are analyzed later.
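Almost every layer call passes its channel count through get_width(..., gw) and its repeat count through get_depth(..., gd). Their bodies are not shown in this article, so the following is only a sketch of what such helpers plausibly compute, based on YOLOv5's width and depth multiples. The names scaled_width and scaled_depth are hypothetical, and the project's real functions may round differently.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical stand-in for get_width: scale a channel count by the width
// multiple gw and round up to a multiple of `divisor`.
int scaled_width(int channels, float gw, int divisor = 8) {
    return static_cast<int>(std::ceil(channels * gw / divisor)) * divisor;
}

// Hypothetical stand-in for get_depth: scale a repeat count by the depth
// multiple gd, never dropping below one block.
int scaled_depth(int repeats, float gd) {
    if (repeats == 1) return 1;
    return std::max(static_cast<int>(std::lround(repeats * gd)), 1);
}
```

With the multiples YOLOv5s uses (gd = 0.33, gw = 0.50), this sketch turns the nominal 1024 channels into 512 and the nominal 9 repeats into 3, which is how a single build_engine can describe several model sizes.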

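Once the network is assembled, builder->setMaxBatchSize() sets the maximum batch size, and config->setMaxWorkspaceSize() sets the maximum amount of temporary workspace any layer may use (16 MB here). Finally, config->setFlag selects the inference precision. kFP16 is the usual choice, since many platforms do not support INT8. If neither USE_FP16 nor USE_INT8 is defined, no flag is set and the engine is built in FP32.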
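Two details of this configuration step can be illustrated in isolation: the workspace size is just a byte count, and the precision is chosen at compile time by the preprocessor. The sketch below assumes two hypothetical helpers, workspace_bytes and selected_precision; only the macro names USE_FP16 and USE_INT8 come from the code above.

```cpp
#include <cstddef>
#include <string>

// Convert a workspace budget in mebibytes to bytes; 16 gives 16 * (1 << 20).
constexpr std::size_t workspace_bytes(std::size_t mebibytes) {
    return mebibytes * (std::size_t{1} << 20);
}

// Report which precision the preprocessor would select. USE_FP16 and USE_INT8
// are the macros from the article; FP32 is the fallback when neither is set.
inline std::string selected_precision() {
#if defined(USE_FP16)
    return "FP16";
#elif defined(USE_INT8)
    return "INT8";
#else
    return "FP32";
#endif
}
```

Because the choice is made by the preprocessor, switching precision means recompiling with a different macro defined, for example through a compiler option such as -DUSE_FP16.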

With everything configured, calling builder->buildEngineWithConfig(*network, *config) creates the engine. The network is then destroyed and the host memory holding the weights is freed.
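The cleanup loop in build_engine calls free() on every values pointer in weightMap, which suggests that loadWeights allocates each blob with malloc; that is an inference, since loadWeights is not shown here. The sketch below mimics this ownership pattern with a stand-in struct. HostWeights, make_blob, and release_weights are hypothetical names, not TensorRT or tensorrtx APIs.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <map>
#include <string>

// Stand-in for the TensorRT Weights struct (illustration only): a raw pointer
// plus an element count, with no ownership semantics of its own.
struct HostWeights {
    const void* values;
    std::int64_t count;
};

// Allocate one zero-filled float blob with calloc, standing in for a tensor
// read from a weights file. Returns an empty entry on allocation failure.
HostWeights make_blob(std::size_t count) {
    void* mem = std::calloc(count, sizeof(float));
    return HostWeights{mem, mem ? static_cast<std::int64_t>(count) : 0};
}

// Free every blob in the map and clear the dangling pointers.
// Returns how many non-null blobs were released.
std::size_t release_weights(std::map<std::string, HostWeights>& weights) {
    std::size_t freed = 0;
    for (auto& entry : weights) {
        if (entry.second.values != nullptr) {
            std::free(const_cast<void*>(entry.second.values));
            ++freed;
        }
        entry.second.values = nullptr;
        entry.second.count = 0;
    }
    return freed;
}
```

Resetting each pointer to nullptr after freeing it makes a second cleanup pass harmless, a safeguard the loop in build_engine does not need because weightMap goes out of scope immediately afterwards.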

边栏推荐

- [One step in place] Jenkins installation, deployment, startup (complete tutorial)

- Delphi-C端有趣的菜单操作界面设计

- C1认证之web基础知识及习题——我的学习笔记

- The 2022 PMP exam has been delayed, should we be happy or worried?

- el-Select 选择器 底部固定

- Turn: Management is the love of possibility, and managers must have the courage to break into the unknown

- MySQL数据库(基础)

- 处理List<Map<String, String>>类型

- canal实现mysql数据同步

- 7.16 Day22---MYSQL(Dao模式封装JDBC)

猜你喜欢

编程大杂烩(三)

MySQL database (basic)

8.03 Day34---BaseMapper query statement usage

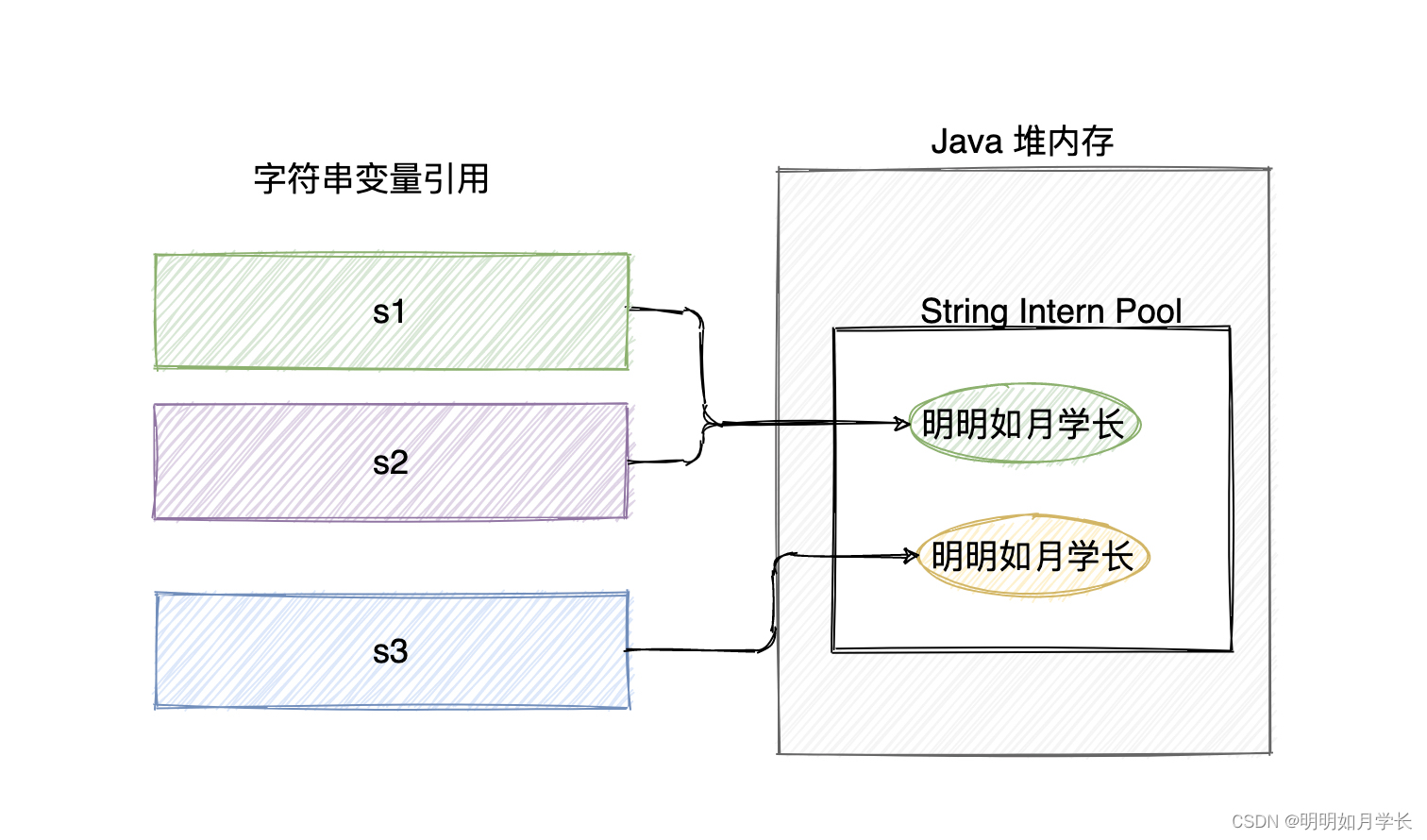

震惊,99.9% 的同学没有真正理解字符串的不可变性

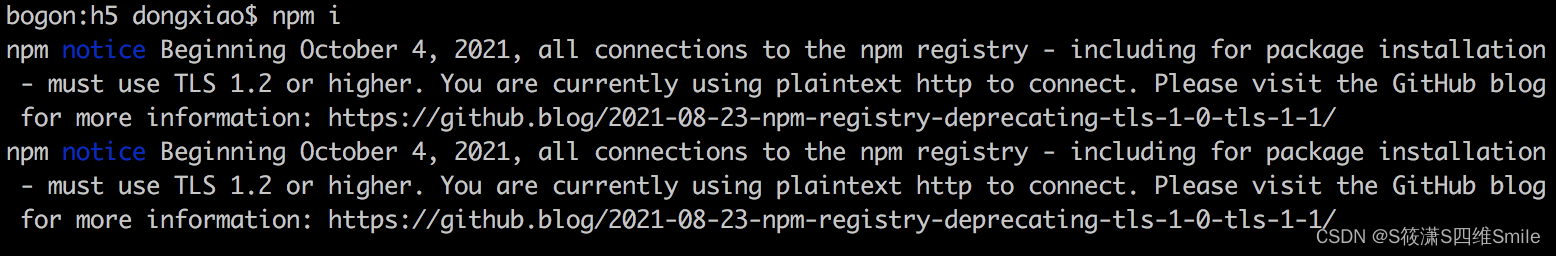

npm报错Beginning October 4, 2021, all connections to the npm registry - including for package installa

Turn: Management is the love of possibility, and managers must have the courage to break into the unknown

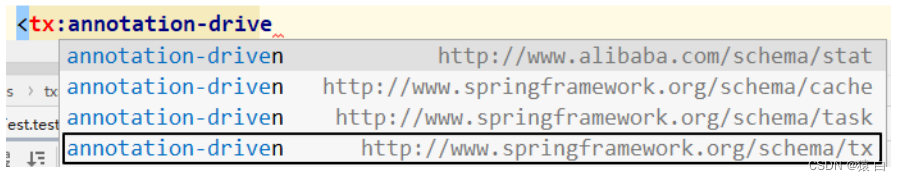

4.3 基于注解的声明式事务和基于XML的声明式事务

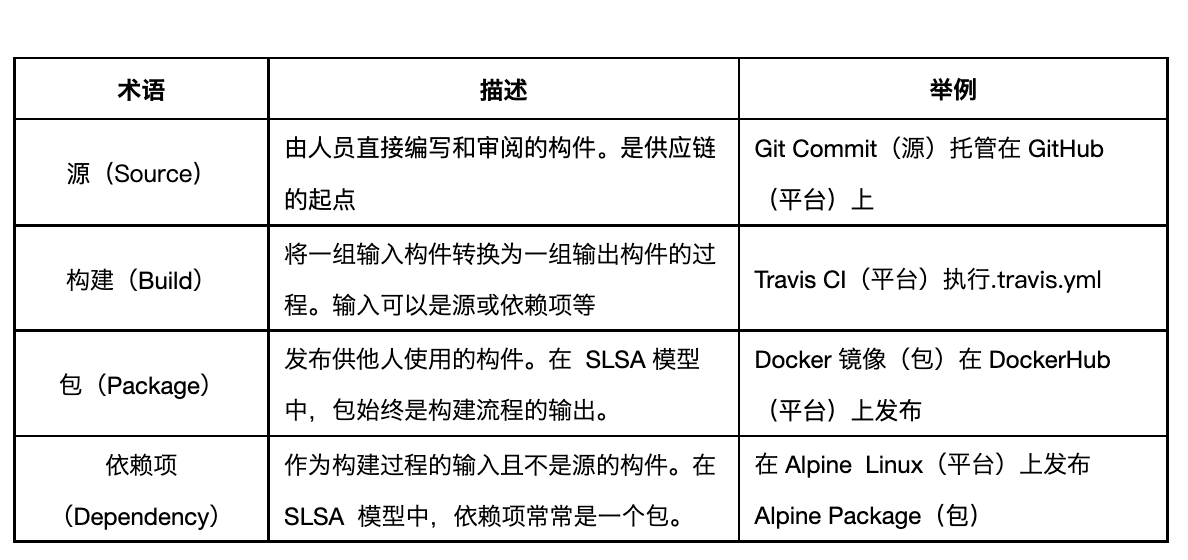

SLSA 框架与软件供应链安全防护

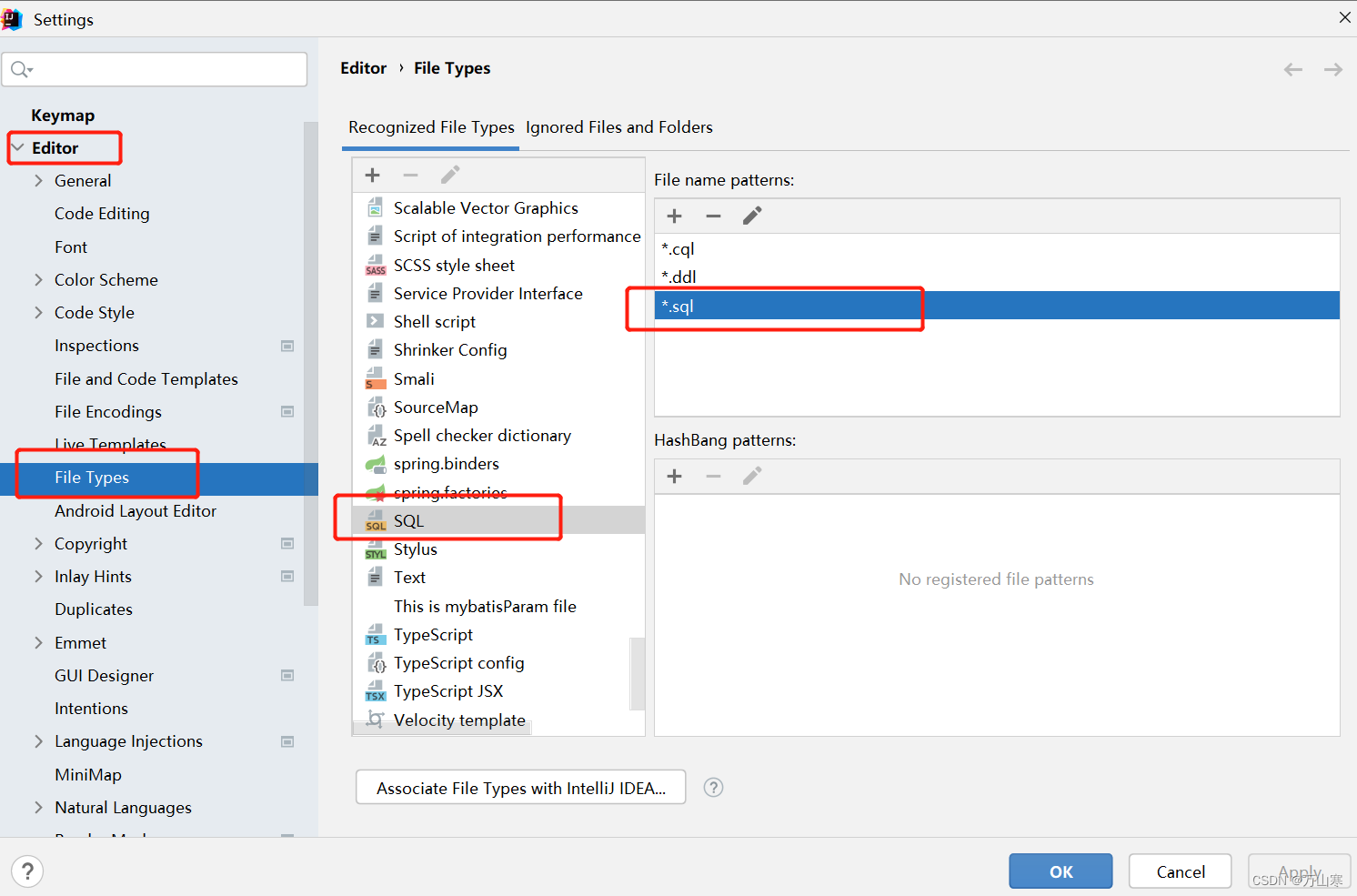

The idea setting recognizes the .sql file type and other file types

应届生软件测试薪资大概多少?

随机推荐

About yolo7 and gpu

9、动态SQL

8大软件供应链攻击事件概述

LCP 17. 速算机器人

一个对象引用的思考

How to view sql execution plan offline collection

4.3 Annotation-based declarative transactions and XML-based declarative transactions

[Cocos] cc.sys.browserType可能的属性

符号表

谷粒商城-基础篇(项目简介&项目搭建)

7.16 Day22---MYSQL(Dao模式封装JDBC)

8、自定义映射resultMap

Towards Real-Time Multi-Object Tracking (JDE)

去重的几种方式

day13--postman interface test

C Expert Programming Chapter 5 Thinking about Linking 5.1 Libraries, Linking and Loading

力扣:70. 爬楼梯

C Expert Programming Chapter 4 The Shocking Fact: Arrays and pointers are not the same 4.2 Why does my code not work

8款最佳实践,保护你的 IaC 安全!

canal实现mysql数据同步