Windows 10 TensorFlow (2): regression analysis in principle and practice with a deep learning framework (solving regression parameters by gradient descent)

2020-11-06 01:22:00 【Elementary school students in IT field】

TF data generation: see "12 ways to generate data in TF".

TF basic principles and concepts: see "TensorFlow (1): installing TensorFlow 1.4 on 64-bit Windows 10 and an explanation of basic concepts (tf.global_variables_initializer)".

Model:

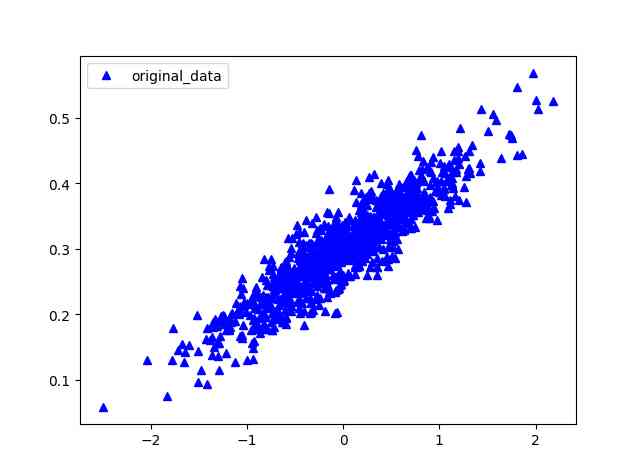

A simple linear regression y = W * x + b. Use numpy to build the complete regression data set and add noise to it.

import numpy as np

# Build a univariate linear regression y = 0.1*x1 + 0.3 and add a normally distributed deviation np.random.normal(0.0, 0.03), to test TF's algorithm

num_points=1000

vectors_set=[]

for i in range(num_points):

    x1=np.random.normal(loc=0.0,scale=0.66)

    y1=x1*0.1+0.3+np.random.normal(0.0,0.03)

    vectors_set.append([x1,y1])

x_data=[v[0] for v in vectors_set]

y_data=[v[1] for v in vectors_set]
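Because the data come from y = 0.1x + 0.3 plus small noise, a closed-form least-squares fit should already land near those values, which gives a reference point for what gradient descent is expected to find. The following is a minimal sketch, not part of the original post: it draws the same distributions in vectorized form and fits a line with `np.polyfit`. The seed and the names `rng`, `x`, `y_obs`, `slope`, and `intercept` are assumptions made for illustration.

```python
import numpy as np

# Hypothetical seed, used only to make this sketch reproducible.
rng = np.random.default_rng(0)

num_points = 1000

# Same distributions as the loop above, drawn in one vectorized call each.
x = rng.normal(loc=0.0, scale=0.66, size=num_points)
y_obs = 0.1 * x + 0.3 + rng.normal(loc=0.0, scale=0.03, size=num_points)

# Closed-form least-squares fit of a degree-1 polynomial.
# np.polyfit returns coefficients from highest degree down: [slope, intercept].
slope, intercept = np.polyfit(x, y_obs, deg=1)

print("slope={:.4f}, intercept={:.4f}".format(slope, intercept))
```

The printed slope and intercept should be close to 0.1 and 0.3; the exact digits depend on the random draw.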

Plot the data distribution:

import matplotlib.pyplot as plt

#https://www.cnblogs.com/zqiguoshang/p/5744563.html

##line_styles=['ro-','b^-','gs-','ro--','b^--','gs--'] #set line style

plt.plot(x_data,y_data,'b^',label='original_data')

plt.legend()

plt.show()

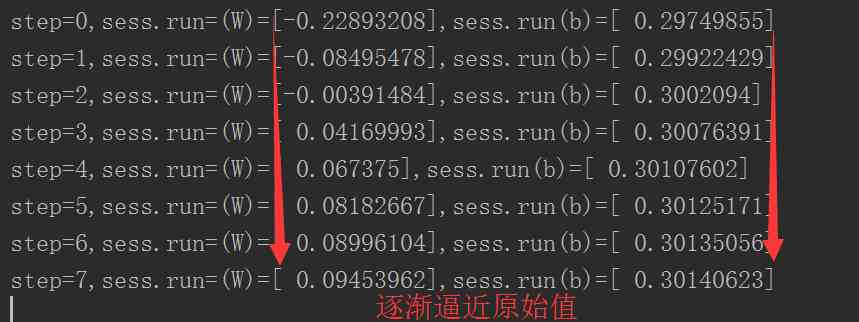

TensorFlow code is used to find the best parameters W and b so that, given the input data x_data, the model reproduces the output data y_data as closely as possible; the result is a straight line y_data = W*x_data + b. The reader knows that W should come out close to 0.1 and b close to 0.3, but TensorFlow does not know this and has to compute the values itself. Gradient descent is therefore used to solve for them iteratively.

import tensorflow as tf

import math

# 1. Build the graph

# Randomly initialize the parameters W and b of the univariate regression equation

W=tf.Variable(tf.random_uniform([1], minval=-1.0, maxval=1.0))

b=tf.Variable(tf.zeros([1]))

y=W*x_data+b

# Define the error to be minimized

# 1. Define the loss as the mean squared error (the mean of the squared differences, not a square root)

loss=tf.reduce_mean(tf.square(y-y_data))

# 2. Gradient descent optimizer with learning_rate=0.5

optimizer=tf.train.GradientDescentOptimizer(learning_rate=0.5)

# 3. Minimize the loss

train=optimizer.minimize(loss)

# 2. Initialize the variables

init=tf.global_variables_initializer()

# 3. Launch the graph

sess=tf.Session()

sess.run(init)

for step in range(8):

    sess.run(train)

    print("step={},sess.run(W)={},sess.run(b)={}".format(step,sess.run(W),sess.run(b)))

Below are the results of the 8 iterations. The gradient acts like a compass, pointing us in the direction of the minimum. To compute the gradient, TensorFlow takes the derivative of the error function. In our case, the algorithm computes the partial derivatives with respect to W and b to determine the direction to move in each iteration.
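For the loss used in the code, the mean of the squared differences between W*x_data + b and y_data, these partial derivatives can be written out by hand. The notation below is introduced here for illustration and does not appear in the original post: N is the number of points (num_points), x_i and y_i are the entries of x_data and y_data, and η is the learning rate (0.5 in the code).

```latex
\begin{align}
L(W, b) &= \frac{1}{N} \sum_{i=1}^{N} \left( W x_i + b - y_i \right)^2 \\
\frac{\partial L}{\partial W} &= \frac{2}{N} \sum_{i=1}^{N} \left( W x_i + b - y_i \right) x_i \\
\frac{\partial L}{\partial b} &= \frac{2}{N} \sum_{i=1}^{N} \left( W x_i + b - y_i \right) \\
W &\leftarrow W - \eta \, \frac{\partial L}{\partial W}, \qquad
b \leftarrow b - \eta \, \frac{\partial L}{\partial b}
\end{align}
```

Each training step applies the last line once, moving W and b against the gradient.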

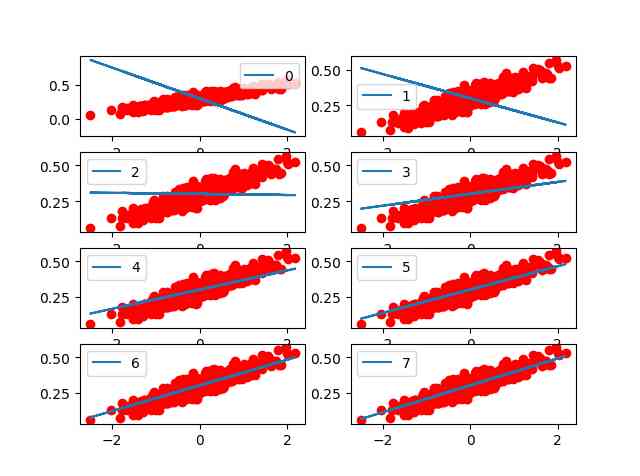
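To make the iteration concrete, the same kind of update can be sketched in plain NumPy with hand-derived gradients of the mean squared error. This is an illustrative sketch under stated assumptions, not TensorFlow's implementation (TensorFlow differentiates the graph automatically). The function `gradient_descent`, the `history` list, the seed, and the variable names are hypothetical; the learning rate of 0.5, the 8 steps, and the starting values mirror the code above.

```python
import numpy as np

# Hypothetical seed, used only to make this sketch reproducible.
rng = np.random.default_rng(0)
x = rng.normal(0.0, 0.66, size=1000)
y_obs = 0.1 * x + 0.3 + rng.normal(0.0, 0.03, size=1000)


def gradient_descent(x, y_obs, w0, b0, learning_rate=0.5, steps=8):
    """Minimize mean((w*x + b - y_obs)**2) by plain gradient descent."""
    w, b = w0, b0
    history = []
    for step in range(steps):
        residual = w * x + b - y_obs
        grad_w = 2.0 * np.mean(residual * x)  # partial derivative w.r.t. w
        grad_b = 2.0 * np.mean(residual)      # partial derivative w.r.t. b
        # Move against the gradient, scaled by the learning rate.
        w = w - learning_rate * grad_w
        b = b - learning_rate * grad_b
        loss = np.mean((w * x + b - y_obs) ** 2)
        history.append((step, w, b, loss))
    return w, b, history


# Random start for w in [-1, 1) and zero for b, like W and b in the TF code.
w0 = rng.uniform(-1.0, 1.0)
w, b, history = gradient_descent(x, y_obs, w0=w0, b0=0.0)

for step, w_i, b_i, loss_i in history:
    print("step={}, W={:.4f}, b={:.4f}, loss={:.6f}".format(step, w_i, b_i, loss_i))
```

The printed loss should shrink from step to step while W and b approach 0.1 and 0.3; the exact numbers depend on the random draw and the starting value.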

The following code visualizes each iteration:

# Plot each iteration

# print(sub_1+'41')

# Note: the arguments to subplot are separated by commas. The first is the number of rows of subplots; the second is the number of columns; the third is the index of the current subplot, counted from left to right and from top to bottom.

# The next four statements use step, so they belong inside the training loop, after sess.run(train)

plt.subplot(4,2,step+1)

plt.plot(x_data,y_data,'ro')

plt.plot(x_data,sess.run(W)*x_data+sess.run(b),label=step)

plt.legend()

# Call once, after the loop has finished

plt.show()

版权声明

本文为[Elementary school students in IT field]所创,转载请带上原文链接,感谢

边栏推荐

- 你的财务报告该换个高级的套路了——财务分析驾驶舱

- CCR炒币机器人:“比特币”数字货币的大佬,你不得不了解的知识

- Leetcode's ransom letter

- Using consult to realize service discovery: instance ID customization

- 如何将数据变成资产?吸引数据科学家

- After brushing leetcode's linked list topic, I found a secret!

- 做外包真的很难,身为外包的我也无奈叹息。

- Elasticsearch 第六篇:聚合統計查詢

- 阿里云Q2营收破纪录背后,云的打开方式正在重塑

- 一篇文章带你了解CSS对齐方式

猜你喜欢

业内首发车道级导航背后——详解高精定位技术演进与场景应用

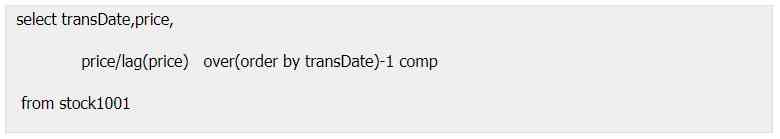

Calculation script for time series data

速看!互联网、电商离线大数据分析最佳实践!(附网盘链接)

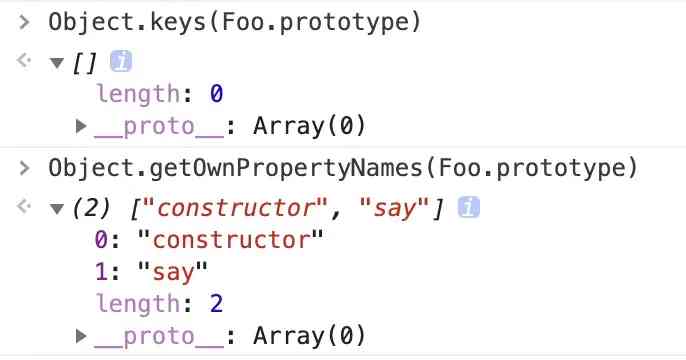

Using Es5 to realize the class of ES6

Architecture article collection

小程序入门到精通(二):了解小程序开发4个重要文件

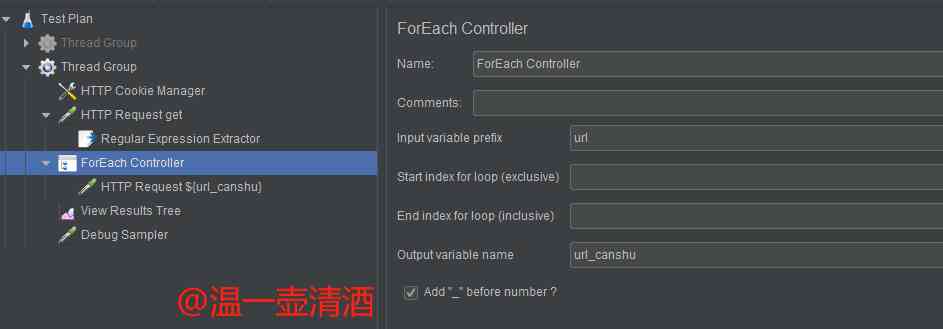

Jmeter——ForEach Controller&Loop Controller

快快使用ModelArts,零基础小白也能玩转AI!

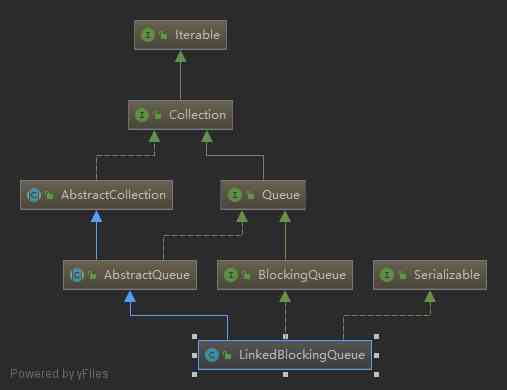

Linked blocking Queue Analysis of blocking queue

助力金融科技创新发展,ATFX走在行业最前列

随机推荐

Did you blog today?

Troubleshooting and summary of JVM Metaspace memory overflow

PHP应用对接Justswap专用开发包【JustSwap.PHP】

Want to do read-write separation, give you some small experience

In depth understanding of the construction of Intelligent Recommendation System

Arrangement of basic knowledge points

Analysis of ThreadLocal principle

Process analysis of Python authentication mechanism based on JWT

Save the file directly to Google drive and download it back ten times faster

TRON智能钱包PHP开发包【零TRX归集】

嘗試從零開始構建我的商城 (二) :使用JWT保護我們的資訊保安,完善Swagger配置

Calculation script for time series data

Every day we say we need to do performance optimization. What are we optimizing?

快快使用ModelArts,零基础小白也能玩转AI!

EOS创始人BM: UE,UBI,URI有什么区别?

Skywalking series blog 2-skywalking using

阿里云Q2营收破纪录背后,云的打开方式正在重塑

全球疫情加速互联网企业转型,区块链会是解药吗?

Do not understand UML class diagram? Take a look at this edition of rural love class diagram, a learn!

How to get started with new HTML5 (2)