Basic concepts

Definition

- A distributed real-time document store in which every field can be indexed and searched

- A distributed real-time analytics and search engine

- Able to scale out to hundreds of service nodes and to support petabytes of structured or unstructured data

Purpose

- Full-text search

- Structured search

- Analytics

Compared with a traditional database

- Traditional database

  - Provides exact matching

- ES

  - Provides exact matching

  - Full-text search

  - Handles synonyms

  - Scores documents by relevance

  - Generates analytics and aggregations

  - Real time

Terminology

- Index (noun)

  Similar to a database.

- Index (verb)

  Similar to an insert; for example, "index a document into an index".

- Inverted index

  By default every field has an inverted index. A field can be set to not be indexed. An inverted index can only be overwritten, not modified.

- Type

  Similar to a table. Different types in the same index can have different fields, but most of their fields should be similar. A type name may mix upper- and lowercase, cannot contain a period, cannot start with a dash, and is limited to 256 characters.

- ID

  The document ID. It can be specified or generated automatically; an automatically generated ID is, in most cases, unique even when there are multiple nodes. If you create a document and its ID conflicts with an existing one, the server returns 409.

- Document

  Similar to a record (row). A document can only be replaced, not modified in place. A field must have a consistent type across documents; otherwise exact matching does not work.

- Exact-value field

  Queries on numbers, dates, Booleans and not_analyzed string fields are matched exactly.

- Full-text field

  All other fields are searched by relevance.

PUT /megacorp/employee/1

{

"first_name" : "John",

"last_name" : "Smith",

"age" : 25,

"about" : "I love to go rock climbing",

"interests": [ "sports", "music" ]

}

In this example:

- megacorp is the index

- employee is the type

- 1 is the document ID

- the JSON body is the document

Interaction

RESTful API

curl -X<VERB> '<PROTOCOL>://<HOST>:<PORT>/<PATH>?<QUERY_STRING>' -d '<BODY>'

| Component | Description |
|---|---|
| VERB | The appropriate HTTP method or verb: GET, POST, PUT, HEAD or DELETE. |
| PROTOCOL | http or https (if there is an https proxy in front of Elasticsearch). |
| HOST | The hostname of any node in the Elasticsearch cluster, or localhost for a node on the local machine. |
| PORT | The port on which the Elasticsearch HTTP service runs; the default is 9200. |
| PATH | The API endpoint (for example, _count returns the number of documents in the cluster). The path may contain multiple components, such as _cluster/stats and _nodes/stats/jvm. |
| QUERY_STRING | Any optional query-string parameters (for example, ?pretty formats the JSON response to make it easier to read). |
| BODY | A JSON-encoded request body (if the request needs one). |

Example

request:

curl -XGET 'http://localhost:9200/_count?pretty' -d '

{

"query": {

"match_all": {}

}

}

'

response:

{

"count" : 0,

"_shards" : {

"total" : 5,

"successful" : 5,

"failed" : 0

}

}
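The request components listed above can also be assembled programmatically. The following is a minimal sketch using only the Python standard library; the helper name `build_request` and the Content-Type header are assumptions of this example, not part of the original notes. It builds the `_count` request but does not send it (sending would need `urllib.request.urlopen(req)` and a running node).

```python
import json
from urllib.parse import urlencode
from urllib.request import Request


def build_request(verb, protocol, host, port, path, query=None, body=None):
    """Assemble an HTTP request from VERB, PROTOCOL, HOST, PORT, PATH, QUERY_STRING and BODY."""
    url = f"{protocol}://{host}:{port}/{path.lstrip('/')}"
    if query:
        url += "?" + urlencode(query)
    data = json.dumps(body).encode("utf-8") if body is not None else None
    # Assumption: a JSON content type is declared whenever a body is present.
    headers = {"Content-Type": "application/json"} if data else {}
    return Request(url, data=data, headers=headers, method=verb)


# The _count example, built but not sent.
req = build_request(
    "GET", "http", "localhost", 9200, "_count",
    query={"pretty": "true"},
    body={"query": {"match_all": {}}},
)
print(req.get_method(), req.full_url)
```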

Searching and modifying documents

- Get a document

GET /megacorp/employee/1

- Simple query

GET /megacorp/employee/_search                     # returns the first ten records

GET /megacorp/employee/_search?q=last_name:Smith   # returns the first ten records whose last_name is Smith

- Query DSL

GET /megacorp/employee/_search
{
    "query" : {
        "match" : { "last_name" : "Smith" }
    }
}

GET /megacorp/employee/_search
{
    "query" : {
        "bool": {
            "must": {
                "match" : { "last_name" : "smith" }
            },
            "filter": {
                "range" : { "age" : { "gt" : 30 } }
            }
        }
    }
}

- Full-text search

GET /megacorp/employee/_search
{
    "query" : {
        "match" : { "about" : "rock climbing" }
    }
}

- Phrase query

GET /megacorp/employee/_search
{
    "query" : {
        "match_phrase" : { "about" : "rock climbing" }
    }
}

- Analytics (aggregations, similar to GROUP BY)

request:

GET /megacorp/employee/_search
{
    "aggs" : {
        "all_interests" : {
            "terms" : { "field" : "interests" },
            "aggs" : {
                "avg_age" : {
                    "avg" : { "field" : "age" }
                }
            }
        }
    }
}

response:

...
"all_interests": {
    "buckets": [
        { "key": "music", "doc_count": 2, "avg_age": { "value": 28.5 } },
        { "key": "forestry", "doc_count": 1, "avg_age": { "value": 35 } },
        { "key": "sports", "doc_count": 1, "avg_age": { "value": 25 } }
    ]
}

- Modify a document

PUT /website/blog/123
{
    "title": "My first blog entry",
    "text": "I am starting to get the hang of this...",
    "date": "2014/01/02"
}

The old document is not deleted immediately.

The new document is indexed.

The document version is incremented by one.

- Delete a document

DELETE /megacorp/employee/123

The document version is still incremented by one.

Distributed features

Distributed actions that ES performs automatically

- Partitioning documents into different containers, or shards, which can be stored on one or more nodes

- Balancing these shards across the nodes in the cluster to spread the indexing and search load

- Replicating each shard for redundancy, to prevent data loss in case of hardware failure

- Routing requests from any node in the cluster to the nodes that hold the relevant data

- Seamlessly integrating new nodes as the cluster grows, and redistributing shards to recover from the loss of a node

Horizontal scaling vs. vertical scaling

ES is friendly to horizontal scaling: buying more machines lets you make better use of its distributed features.

Cluster

A cluster has one or more nodes. When a node joins or leaves the cluster, the cluster redistributes all data evenly across the nodes.

Master node responsibilities

- Adding or deleting an index

- Adding or removing a node

The master node is not involved in document changes or searches, so a single master node does not become a performance bottleneck for the cluster.

Shard metadata for every index is stored on every ES node. Any node that receives a request therefore knows which node holds the data, and forwards the request to that node.

The maximum number of documents in a shard is Integer.MAX_VALUE - 128, that is, 2^31 - 129 = 2,147,483,519.

The number of primary shards of an index is fixed when the index is created and cannot be changed afterwards, because the shard that stores a document is determined by shard = hash(routing) % number_of_primary_shards. routing defaults to the document ID and can also be customized.
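To see why the primary shard count has to stay fixed, the routing formula can be tried out directly. This is a minimal sketch: `zlib.crc32` is only a stand-in hash chosen for the illustration (it is not the hash function Elasticsearch uses), and `shard_for` is a hypothetical helper name.

```python
import zlib


def shard_for(routing: str, number_of_primary_shards: int) -> int:
    # crc32 is a stand-in hash for this sketch; it is NOT the hash Elasticsearch uses.
    return zlib.crc32(routing.encode("utf-8")) % number_of_primary_shards


doc_ids = [str(i) for i in range(1000)]
before = {doc_id: shard_for(doc_id, 3) for doc_id in doc_ids}
after = {doc_id: shard_for(doc_id, 4) for doc_id in doc_ids}

# Documents whose computed shard changes could no longer be found where they were stored.
moved = sum(1 for doc_id in doc_ids if before[doc_id] != after[doc_id])
print(f"{moved} of {len(doc_ids)} documents would map to a different shard")
```

With a different modulus, most routing values map to a different shard, so documents written under the old shard count would be looked up in the wrong place.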

The number of replicas can be changed at any time.

Example: creating an index with three primary shards and one replica

PUT /blogs

{

"settings" : {

"number_of_shards" : 3,

"number_of_replicas" : 1

}

}

Failover

Adding a machine to the cluster

Removing a node

Distributed write conflicts

When multiple clients write to ES, write conflicts can occur and cause data loss.

In some scenarios data loss is acceptable,

but in others it is not.

Pessimistic concurrency control

The approach used by traditional databases: records are locked so that concurrent operations execute serially.

Optimistic concurrency control

ES uses optimistic concurrency control.

With optimistic control, the server assumes that conflicts are rare. If a conflict does occur, the server rejects the change, and the client may need to fetch the document again and retry.
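The read, write, reject and retry cycle can be sketched with a small in-memory model. Everything here (`VersionedStore`, `VersionConflict`, the `expected_version` argument) is hypothetical and only mimics the behavior described above; it is not the Elasticsearch API.

```python
class VersionConflict(Exception):
    """Stands in for the HTTP 409 response returned on a version conflict."""


class VersionedStore:
    def __init__(self):
        self._docs = {}  # doc_id -> (version, source)

    def get(self, doc_id):
        return self._docs[doc_id]

    def index(self, doc_id, source, expected_version=None):
        current_version = self._docs.get(doc_id, (0, None))[0]
        # Reject the write if the caller's view of the document is stale.
        if expected_version is not None and expected_version != current_version:
            raise VersionConflict(f"expected {expected_version}, found {current_version}")
        self._docs[doc_id] = (current_version + 1, source)
        return current_version + 1


store = VersionedStore()
store.index("1", {"views": 0})

# Two clients read the same version of the document.
v_a, doc_a = store.get("1")
v_b, doc_b = store.get("1")

# Client A writes first and succeeds.
store.index("1", {"views": doc_a["views"] + 1}, expected_version=v_a)

# Client B's write is rejected; it re-reads the document and retries.
try:
    store.index("1", {"views": doc_b["views"] + 1}, expected_version=v_b)
except VersionConflict:
    v_b, doc_b = store.get("1")
    store.index("1", {"views": doc_b["views"] + 1}, expected_version=v_b)

print(store.get("1"))
```

Without the version check, client B would have overwritten client A's increment and one update would have been lost.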

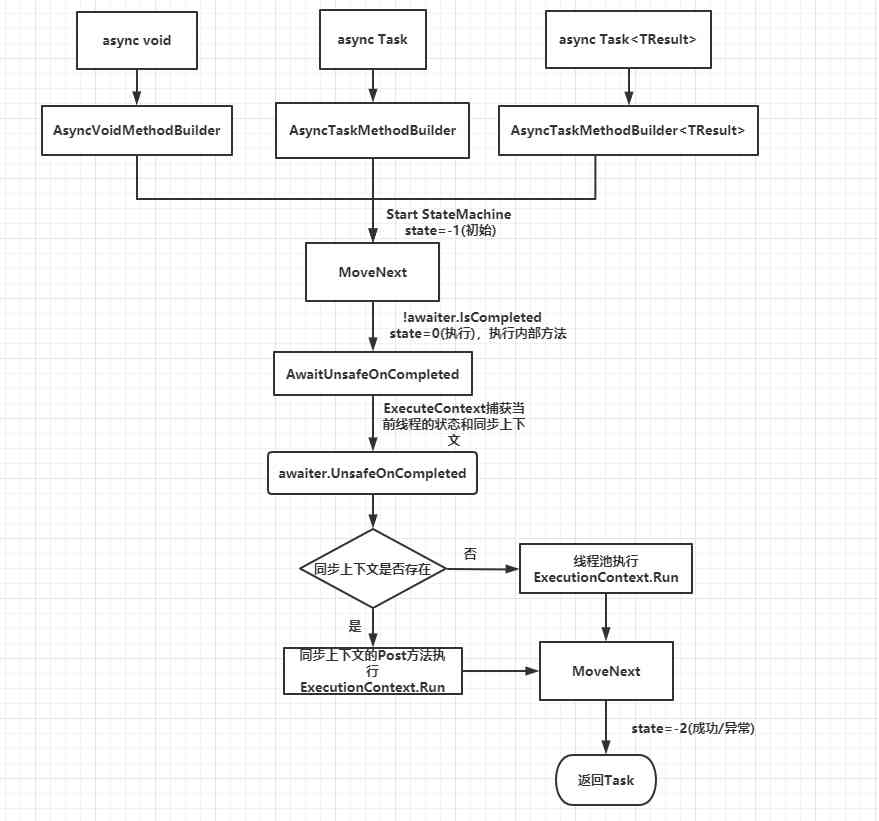

The process is as follows

Distributed document storage

Determining which shard a document belongs to

shard = hash(routing) % number_of_primary_shards

The APIs accept a routing parameter that defines a custom route, which ensures that related documents are routed to the same shard.

Creating, indexing and deleting documents by ID

Consistency

- one: writes are allowed as long as the primary shard is active

- all: writes are allowed only when all shard copies (the primary and every replica) are active

- quorum: writes are allowed when a majority of shard copies are active

If not enough shard copies are active, ES waits, by default for 1 minute, and returns a failure if the wait times out. The timeout parameter changes this value.

By default a new index has 1 replica, which means that a quorum should require two active shard copies. These defaults, however, would prevent us from doing anything on a single node. To avoid this problem, the quorum is enforced only when number_of_replicas is greater than 1.
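The consistency rules can be written down as a small function. This is a sketch under assumptions: the majority is computed as `int((primary + number_of_replicas) / 2) + 1`, the first level (primary shard only) is called `one`, and `required_active_copies` is a hypothetical name.

```python
def required_active_copies(consistency: str, number_of_replicas: int) -> int:
    """Number of active shard copies needed before a write is accepted."""
    total_copies = 1 + number_of_replicas  # one primary plus its replicas
    if consistency == "one":
        return 1
    if consistency == "all":
        return total_copies
    if consistency == "quorum":
        # The quorum is only enforced when number_of_replicas is greater than 1.
        if number_of_replicas <= 1:
            return 1
        return total_copies // 2 + 1
    raise ValueError(f"unknown consistency level: {consistency}")


for replicas in (1, 2, 3):
    print(replicas, required_active_copies("quorum", replicas))
```

With the default of one replica the function returns 1, which is why a single-node cluster can still accept writes.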

Retrieving a document by ID

Similar to the figure above.

Updating a document by ID

Similar to the figure above, except that the document is reindexed after it is updated.

When a document is partially updated, the primary shard forwards the complete new version of the document to the replicas, not just the change, to ensure data integrity.

Retrieving multiple documents by condition

Search

Special fields in the response

GET /_search

{

"hits" : {

"total" : 14,

"hits" : [

{

"_index": "us",

"_type": "tweet",

"_id": "7",

"_score": 1,

"_source": {

"date": "2014-09-17",

"name": "John Smith",

"tweet": "The Query DSL is really powerful and flexible",

"user_id": 2

}

},

... 9 RESULTS REMOVED ...

],

"max_score" : 1

},

"took" : 4,

"_shards" : {

"failed" : 0,

"successful" : 10,

"total" : 10

},

"timed_out" : false

}

- took

  The number of milliseconds the request took to execute.

- _shards

  The status of the shards queried, for example how many failed and how many succeeded.

- timed_out

  Whether the query timed out. A timeout can be set on the query; if it is exceeded, only the data gathered so far is returned and the rest is discarded.

- _index

  The index the document comes from. Within an index, every field of a document has its own inverted index.

  A search can be restricted to specific indexes. By default none is specified, so every index is queried and the results are merged; if indexes are specified, only those are queried.

Pagination

GET /_search?size=5&from=5

This only works for shallow pagination. Paging too deep causes serious performance problems.

For example, with size=5 and from=10000, ES fetches the top 10005 records from every shard. With 100 shards that is 100 * 10005 records, which ES then sorts, only to return 5 of them.
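The cost of deep paging can be reproduced with a toy model. This is a sketch under assumptions: shards are plain lists of random scores, and `search_page` is a hypothetical helper that mimics the gather-and-sort step, not actual Elasticsearch code.

```python
import heapq
import random


def search_page(shards, from_, size):
    """Return one page of globally sorted scores plus how many records were collected."""
    window = from_ + size
    collected = []
    for shard in shards:
        # Each shard must return its own top (from + size) hits.
        collected.extend(heapq.nlargest(window, shard))
    # The coordinating node sorts everything and keeps only the requested page.
    page = sorted(collected, reverse=True)[from_:from_ + size]
    return page, len(collected)


random.seed(0)
shards = [[random.random() for _ in range(200)] for _ in range(4)]
page, collected = search_page(shards, from_=100, size=5)
print(len(page), collected)
```

The number of records collected grows with `from`, not with `size`: every shard contributes `from + size` candidates even though only `size` results are returned.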

Deep pagination

Use a scroll cursor.

A scroll creates a snapshot of the data in ES at a point in time, and subsequent scroll requests page deeply through that snapshot.

Inverted index

Building an inverted index from these two documents:

- The quick brown fox jumped over the lazy dog

- Quick brown foxes leap over lazy dogs in summer

gives:

Term Doc_1 Doc_2

-------------------------

Quick | | X

The | X |

brown | X | X

dog | X |

dogs | | X

fox | X |

foxes | | X

in | | X

jumped | X |

lazy | X | X

leap | | X

over | X | X

quick | X |

summer | | X

the | X |

------------------------

Challenges of the inverted index

- Quick and quick: users may consider them the same word, or they may consider them different

- dog and dogs are very close; in a relevance search both should match

- jumped and leap are synonyms; in a relevance search both should match
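The term table and the first challenge can be reproduced in a few lines. This is a minimal sketch that splits on whitespace and optionally lowercases tokens; `build_inverted_index` is a hypothetical helper, and stemming (dog/dogs) and synonyms (jumped/leap) are deliberately left out.

```python
from collections import defaultdict

docs = {
    1: "The quick brown fox jumped over the lazy dog",
    2: "Quick brown foxes leap over lazy dogs in summer",
}


def build_inverted_index(docs, normalize=None):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.split():
            term = normalize(token) if normalize else token
            index[term].add(doc_id)
    return index


raw = build_inverted_index(docs)
lowered = build_inverted_index(docs, normalize=str.lower)

print(sorted(raw["quick"]), sorted(raw["Quick"]))   # case-sensitive terms stay separate
print(sorted(lowered["quick"]))                     # lowercasing merges them
```

Lowercasing makes Quick and quick the same term, but dog and dogs still end up as separate terms, which is the second challenge in the list.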

Understanding relevance

A relevance score is a fuzzy concept. There is no exact value and no single right answer; it is a quantitative estimate of how well a document matches, computed from a set of rules.

The scoring criteria are:

- Term frequency

  The more often the search term appears in the field, the higher the score.

- Inverse document frequency

  The more often the search term appears across the index, the lower the score.

- Field-length norm

  The longer the field, the lower the score.
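The three criteria can be made concrete with formulas. The notes give none, so this sketch assumes the classic Lucene TF/IDF similarity; newer Elasticsearch versions default to a different similarity (BM25), so treat the numbers as illustrative only. The function names are hypothetical.

```python
import math


def term_frequency(freq: int) -> float:
    # More occurrences of the term in the field give a higher value.
    return math.sqrt(freq)


def inverse_document_frequency(num_docs: int, doc_freq: int) -> float:
    # Terms that appear in many documents of the index give a lower value.
    return 1.0 + math.log(num_docs / (doc_freq + 1))


def field_length_norm(num_terms: int) -> float:
    # Longer fields give a lower value.
    return 1.0 / math.sqrt(num_terms)


print(term_frequency(4), field_length_norm(4))
print(inverse_document_frequency(100, 1), inverse_document_frequency(100, 50))
```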

Broken relevance

A full-text search for a keyword can sometimes rank documents with low relevance above documents with high relevance.

Take the search term milk. The index has two primary shards; milk appears 5 times in P1 and 6 times in P2, and the two shards have different term distributions.

P1 holds more terms than P2, so milk makes up a small share of P1 and gets a high relevance score there, while in P2 its share is large and its score is low.

Cause

Term statistics are computed locally per shard, and the data is unevenly distributed across shards.

Solutions

- Index more documents, which evens out the differences between shards

- Use ?search_type=dfs_query_then_fetch to score with global statistics. This carries a serious performance cost and is not recommended.
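The effect of local statistics can be shown numerically. This sketch reuses the 5 and 6 occurrences of milk from the example as per-shard document frequencies; the shard sizes (1000 and 100 documents) and the classic TF/IDF form of idf are assumptions made for the illustration.

```python
import math


def idf(num_docs: int, doc_freq: int) -> float:
    # Classic TF/IDF form, used here only as an illustration.
    return 1.0 + math.log(num_docs / (doc_freq + 1))


# Hypothetical shard statistics: (documents in shard, documents containing "milk").
shards = {"P1": (1000, 5), "P2": (100, 6)}

# Each shard scores with its own local statistics.
local = {name: idf(n, df) for name, (n, df) in shards.items()}

# Global statistics, as gathered by a dfs-style pre-query phase.
total_docs = sum(n for n, _ in shards.values())
total_df = sum(df for _, df in shards.values())
global_idf = idf(total_docs, total_df)

print(local, global_idf)
```

The same term gets a noticeably higher weight in the larger shard than in the smaller one, while the global value lies in between.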

Filter bitsets

Each time a filter is applied for a search term, ES builds a bitset for it that contains the numbers of the matching documents. For frequently used terms, ES caches these bitsets so that the query does not have to run against the inverted index again.

For multiple queries, see the following figure.

When the inverted index is rebuilt, the cached bitsets are invalidated automatically.

Caching strategy

- A bitset is cached only if it has been used in the most recent 256 queries

- Bitsets for segments with fewer than 10,000 documents are not cached
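Combining cached bitsets is cheap because it is plain bitwise arithmetic. The sketch below uses Python integers as bitsets; the filter names, the document numbers and the helpers `to_bitset` and `from_bitset` are hypothetical.

```python
def to_bitset(matching_doc_ids):
    """Encode a set of document numbers as an integer bitset."""
    bits = 0
    for doc_id in matching_doc_ids:
        bits |= 1 << doc_id
    return bits


def from_bitset(bits):
    """Decode an integer bitset back into a sorted list of document numbers."""
    return [i for i in range(bits.bit_length()) if (bits >> i) & 1]


# Hypothetical cached filters over documents numbered 0..7.
cache = {
    "last_name:smith": to_bitset([1, 2, 5, 7]),
    "age>30": to_bitset([2, 3, 5]),
}

both = cache["last_name:smith"] & cache["age>30"]     # AND of two cached filters
either = cache["last_name:smith"] | cache["age>30"]   # OR of two cached filters
print(from_bitset(both), from_bitset(either))
```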

Index management

Creating an index

An index can be created explicitly or implicitly.

In a large cluster, creating an index involves synchronizing metadata, which can greatly increase the cluster load. In that case, disable implicit index creation:

action.auto_create_index: false

Deleting an index

Deleting an index deletes a large amount of data. If a user accidentally deletes all indexes with a single command, the consequences can be severe.

This can be prevented with the following setting, which requires indexes to be named explicitly in destructive operations:

action.destructive_requires_name: true

Analyzer

Each index can have its own analyzers. Analyzers are mainly used for full-text indexing: depending on the language, they apply different tokenizers and different token transformations to build the inverted index and compute relevance.

Inside a shard

Immutability of the inverted index

Benefits

- Once an inverted index has been read into the file-system cache it stays there until the LRU algorithm evicts it for lack of use. This greatly improves ES read performance.

Drawbacks

- When a new document is added, the index cannot be updated incrementally; it can only be rebuilt and replaced.

How new data becomes searchable in near real time

Use more than one index.

For new documents, do not rebuild the existing index immediately; add a supplementary index instead. At query time, every index is queried in turn and the results are merged.

Strictly speaking, ES is not real time but near real time, because a new document cannot be searched between the moment it is inserted and the moment its inverted index has been created.
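The idea of adding supplementary indexes rather than rebuilding one can be modelled in a few lines. This is a toy sketch: `SegmentedIndex` is hypothetical, each supplementary index is an immutable tuple of documents, and the explicit `refresh()` call stands in for the point at which new data becomes searchable.

```python
class SegmentedIndex:
    def __init__(self):
        self.segments = []   # immutable, searchable supplementary indexes
        self.buffer = []     # newly added documents, not yet searchable

    def add(self, doc: str):
        self.buffer.append(doc)

    def refresh(self):
        # Turn buffered documents into a new immutable segment; older segments are untouched.
        if self.buffer:
            self.segments.append(tuple(self.buffer))
            self.buffer = []

    def search(self, term: str):
        # Query every segment in turn and merge the results.
        hits = []
        for segment in self.segments:
            hits.extend(doc for doc in segment if term in doc.split())
        return hits


index = SegmentedIndex()
index.add("quick brown fox")
index.refresh()
index.add("lazy brown dog")
before_refresh = index.search("brown")   # the second document is not visible yet
index.refresh()
after_refresh = index.search("brown")
print(before_refresh, after_refresh)
```

The gap between `add` and `refresh` is the window in which a document exists but cannot be found, which is what makes the system near real time rather than real time.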

Aggregation

Similar to GROUP BY in a database. Only the syntax differs; the functionality is comparable.

Application-layer performance tuning

Increase the refresh interval

The default refresh interval is 1s. Every refresh performs a disk write and creates a new segment. A longer interval means fewer disk writes, larger segments and fewer segment merges.

Prevent the OS from swapping ES out

When memory is tight, the OS swaps processes out to disk. For ES, whose performance depends heavily on memory, being swapped out to external storage is fatal. Configuring the process so that its memory cannot be swapped avoids this behavior.

Reserve plenty of file-system cache for ES

Because most ES data is immutable, most of its disk operations can be accelerated by the file-system cache. Once inverted indexes and data are in the cache, frequently accessed data stays there as long as no other process interferes, so most ES operations run in memory. As a rule of thumb, leaving half of the memory to the file-system cache is appropriate.

Use auto-generated IDs

If an ID is specified, ES checks whether that ID already exists, which is expensive on a large cluster. If the ID is generated automatically, ES skips the check and inserts the document directly.

Better hardware

- More memory

- SSDs

- Local disks

Avoid joins

ES is not suited to join queries, which cause serious performance problems.

If the business requires a join, write the related data into a single index, or do the join in the application.

Force-merge read-only indexes

Merging into a single segment gives better performance.

Add replicas

With more machines available, increasing the number of replicas improves read throughput.

Do not return large result sets

ES is not suited to this use case.

Avoid sparsity

Do not store unrelated data in the same index.

Warm up data

For hot data, a client can query ES in advance so that the data is loaded into the filesystem cache first.

Separate hot and cold data

Deploying hot and cold data on different machines prevents cold data from evicting hot data from the cache.

Kernel-layer performance tuning

Rate limiting

Under heavy request load, the ES coordinating node accumulates a large number of pending requests in memory. As the number of requests grows, so does the coordinating node's memory consumption, eventually leading to OOM.

Rate limiting effectively mitigates this.
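The notes do not say which rate-limiting algorithm is meant. As one common possibility, the sketch below shows a token bucket; the class name, the parameters and the explicit `now` argument (used instead of a real clock so the example is deterministic) are all assumptions of this illustration.

```python
class TokenBucket:
    def __init__(self, rate: float, capacity: float, now: float = 0.0):
        self.rate = rate            # tokens added per second
        self.capacity = capacity    # maximum burst size
        self.tokens = capacity
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill according to elapsed time, then try to spend one token.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(rate=2, capacity=3)
burst = [bucket.allow(now=0.0) for _ in range(5)]   # five requests arriving at once
later = bucket.allow(now=1.0)                       # one request a second later
print(burst, later)
```

Requests that are refused can be rejected immediately instead of being queued in memory, which is what keeps the coordinating node's memory bounded.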

Queries

If a client sends a complex query that returns an abnormally large amount of data, this can also lead to OOM.

The fix is to modify the kernel so that a request is cut off when its memory usage exceeds what the system can bear.

OOM caused by oversized FSTs

An FST (finite state transducer) is the in-memory index of the inverted index. It is a prefix-based state machine that quickly locates a term in the on-disk inverted index, reducing disk accesses and speeding up retrieval.

But because FSTs are memory resident, they are bound to cause OOM once the inverted index reaches a certain size. FSTs are stored in JVM heap memory, and the heap is limited to 32 GB,

while 10 TB of data needs 10 GB to 15 GB of memory for its FSTs.

- Move FST storage to off-heap memory

- Manage FSTs with an LRU algorithm, evicting rarely used FSTs from memory

- Modify the logic ES uses to access FSTs so that code on the heap can access off-heap FSTs directly

- Add an on-heap cache for FSTs to speed up hits
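The LRU management and the small on-heap cache from the list above can be sketched as follows. This is an illustration only: `LRUCache` and the `load` callback (standing in for reading an FST from off-heap storage) are hypothetical and do not reflect how the modified ES kernel is actually implemented.

```python
from collections import OrderedDict


class LRUCache:
    def __init__(self, capacity: int, load):
        self.capacity = capacity
        self.load = load                 # called on a miss, e.g. read from off-heap storage
        self.entries = OrderedDict()
        self.hits = self.misses = 0

    def get(self, key):
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)        # mark as most recently used
            return self.entries[key]
        self.misses += 1
        value = self.load(key)
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)     # evict the least recently used entry
        return value


# Hypothetical loader standing in for reading an FST from outside the heap.
cache = LRUCache(capacity=2, load=lambda segment: f"fst-for-{segment}")
for segment in ["s1", "s2", "s1", "s3", "s2"]:
    cache.get(segment)
print(list(cache.entries), cache.hits, cache.misses)
```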
