当前位置:网站首页>[elt.zip] openharmony paper Club - fast random access string compression

[elt.zip] openharmony paper Club - fast random access string compression

2022-06-11 03:35:00 【ELT. ZIP】

- This article from the

ELT.ZIPThe team ,ELT<=>Elite( elite ),.ZIP In compressed format ,ELT.ZIP That is to compress the elite . - member :

- Sophomore at Shanghai University of engineering and Technology

- Sophomores of Hefei Normal University

- Sophomores at Tsinghua University

- Freshman of Chengdu University of Information Engineering

- Freshmen of Heilongjiang University

- Freshman of South China University of Technology

- We are from

6 A place toClassmate , We areOpenHarmony Growth plan gnawing paper Clubin , AndHuawei 、 Soft power 、 Runhe software 、 Rio d information 、 ShenkaihongWait for the company , Study and ResearchOperating system technology…

List of articles

【 Looking back 】

① 2 month 23 Japan 《 I'm here for a series of tours 》 And Why is Lao Zi —— ++ Interpretation of compression coding from the perspective of overview ++

② 3 month 11 Japan 《 I'm here for a series of tours 》 And I'll show you the scenery —— ++ Multidimensional exploration universal lossless compression ++

③ 3 month 25 Japan 《 I'm here for a series of tours 》 And I witnessed the vicissitudes of life —— ++ Turn over those immortal poems ++

④ 4 month 4 Japan 《 I'm here for a series of tours 》 And I visited a river —— ++ Count the compressed bits of life ++

⑤ 4 month 18 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— One article penetrates the cutting edge of multimedia ++

⑥ 4 month 18 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— You shouldn't miss these little scenery ++

⑦ 4 month 18 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— Analysis of sparse representation of medical images ++

⑧ 4 month 29 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— Computer vision data compression application ++

⑨ 4 month 29 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— Ignite the spark of main cache compression technology ++

⑩ 4 month 29 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— Immediate Conquest 3D Trellis compression coding ++

⑪ 5 month 10 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— Cloud computing data compression scheme ++

⑫ 5 month 10 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— Big data framework performance optimization system ++

⑬ 5 month 10 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— Internet of things swing door trend algorithm ++

⑭ 5 month 22 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— Electronic device software update compression ++

⑮ 5 month 22 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— Artificial intelligence short string compression ++

⑯ 5 month 22 Japan ++【ELT.ZIP】OpenHarmony Paper Club —— Multi tier storage hierarchical data compression ++

【 This issue focuses on 】

FSST The core of thoughtFSST Evolution ofFSST And LZ4 contrastReproduce with your own hands FSST

【 technology DNA】

【 Intelligent scene 】

| ********** | ******************** | ******************** | ******************** | ******************** | ******************** | ******************** | ******************** | ******************** | ******************** | ******************** | ******************** | ******************** | ******************** | ******************** | ***************** |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| scene | Autopilot / AR | Voice signals | Streaming video | GPU Rendering | science 、 Cloud computing | Memory reduction | Scientific application | Medical images | database server | Artificial intelligence image | Text transfer | GAN Media compression | Image compression | File synchronization | Database system |

| technology | Point cloud compression | Sparse fast Fourier transform | Lossy video compression | Grid compression | Dynamic selection compression algorithm framework | lossless compression | Hierarchical data compression | Medical image compression | Lossless universal compression | Artificial intelligence image compression | Short string compression | GAN Compressed on-line multi particle distillation | Image compression | File transfer compression | Fast random access string compression |

| Open source project | Draco / Based on deep learning algorithm /PCL/OctNet | SFFT | AV1 / H.266 code / H.266 decode /VP9 | MeshOpt / Draco | Ares | LZ4 | HCompress | DICOM | Brotli | RAISR | AIMCS | OMGD | OpenJPEG | rsync | FSST |

FSST

Fast static symbol table (FSST) Compress , This is a lightweight string encoding scheme .

introduction :

::: hljs-left

• Strings are common in real-world datasets . They usually take up a lot of data , The processing speed is very slow .

• In many real databases , A large part of the data is represented by strings .

• This is because the string is It is often used as a universal type of data .

• Artificially generated text ( Description or comment fields )

:::

• Machine generated identifier (url、 E-mail address 、IP Address )

1. Existing solutions for string processing

• Strings are usually highly compressible , Many systems rely on dictionaries to compress strings . But dictionary compression requires complete repetition of strings to reduce size , So when strings are similar but not equal , Dictionary compression has no advantage . Most strings stored in the database, each string is usually less than 30 byte .LZ4 It is not suitable for compressing small 、 A single string , Because they need to reach KB A good compression factor can only be obtained with an input size of orders of magnitude . however , Database systems usually require random access to a single string , and LZ4 The algorithm is implemented by accessing data blocks , This can not meet the needs of the database system .

2. The main features

• Random access ( The ability to decompress a single string without decompressing a larger block )

• Fast decoding (≈1-3 cycle / byte , or 1-3 GB/s Every nucleus )

• A good compression factor for text string data sets (≈2×)

• High coding performance (≈4 Cycles per byte , or ≈1 GB Per second per byte )

3. Key ideas

• Yes, it is 1 Bytecode substitution occurs most frequently 8 Substring of bytes , These elements form an immutable symbol table .

4. The accumulation of predecessors

• Database system lightweight compression Our research focuses on Integer data , However, the prevalence of strings in real workloads and performance challenges require more research . The most common way to compress a string is to use Dictionary deduplication . The dictionary maps each unique string to an integer code , Use the integer compression scheme to compress the code . In most database systems , The string itself is not compressed .

Binnig Et al. Proposed a band

Incremental prefix compressionOfOrdered stringDictionaries .

The dictionary is represented as a hybrid try /B-tree data structure , Store unique strings in sorted order . Although for some datasets ( for example url) It works , But many other common string datasets ( for example uuid) Not for a long timeShare prefix, This makes the scheme invalid . The global dictionary also includesUpdates are expensiveEtc , This hinders their widespread adoption .

Another way to compress a string dictionary is by Arz and Fischer Put forward

They developedLZ78 A variation of the, allowUnzip a single string. however , Decompression of this methodIt's very expensive, For an average length of 19 String , Need to exceed 1 Microseconds . This is equivalent to about per character 100 individual CPU Cycles or tens of megabytes per second , This is too slow for many data management use cases .

PostgreSQL

Do not use a string dictionary , Instead, it implements a system called“ Super large attribute storage technology ”(TOAST)Methods . Greater than 2 KB Compression of , Smaller values remain uncompressed .

Byte pairs

This scheme is a minorityAllows you to decompress a single short stringOne of the compression schemes of . It first performs a complete transfer of data , Determine which byte values do not appear in the input , And calculate the frequency of each pair of bytes . Then replace the most common byte pairs with unused byte values . Repeat the process , Until there are no more unused bytes . Byte pair dependency on unused bytes means , Given an existing compressed table , Invisible data cannot be compressed . The recursive nature of byte pairs makesDecompress iterations , slowly.

Recursive pairing

A kind ofRandom access compression format, It recursively constructsHierarchical symbolic grammar. The initial syntax consists of all single byte symbols , By replacing the most frequent continuous symbol pair in the source text with a new symbol 、 Recalculate the frequency of all symbol pairs relative to the extended syntax , And recursively extend .

Main stepsMake a static symbol table

Identify frequently occurringCommon substring( Call it a symbol ), And replace them with short 、 Fixed size code , For the sake of efficiency , The length of the symbol is 1 To 8 Between bytes , And in bytes ( Not a bit ) Mark on the boundary . Code is always 1 Byte length , This means that at most 256 Symbols , One of the codes is reserved asEscape code.decompression

Decompression is quite simple . Every piece of code goes throughArray searchConvert to its symbol , And append the symbol to the output buffer . To decompress effectively , Each symbol is represented as a 8 byte (64 position ) 's words , And store all the symbols in an array . Besides , There is a second array , Used to store the length of each word . Use this expression , Can unconditionally put 64 Bitwords are stored in the output buffer , The output buffer is then pushed forward to the actual length of the symbol to decompress the code , Rely on the... Available on modern processorsFast unaligned storage, This implementation requiresFew instructions, andThere are no branches. itsCache efficiency is also very high, Because the symbol table (2048 byte ) And length array (256 byte ) Can easily Put it in the first level CPU In cache .

Several explanations

::: hljs-left

1. Advantages of using escape characters

PS:( Escape codes are not strictly necessary ; You can also use only those bytes that do not appear in the input string as code )

Direct cause : Keep the code 255 As an escape token , Indicates that the following bytes in the input need to be copied as is , Instead of looking up in the symbol table .

Three advantages

(1) Support the use of existing symbol tables Compress any ( Invisible ) Text .

(2) Allow in Data samples Execute symbol table on structure , To accelerate compression .

(3) it Release The original was kept as Symbol of low frequency byte , thus Improved compression factor .

2. Continuous injection of single byte symbols

By two if Shorter The symbol of Merge to create One longer The symbol of , If the longer symbol is followed by Gain sort In the At the end of It will be used by other strings with longer gain replace , That means The original The two Shorter The string of Then disappear .

essentially , If you don't consider single bytes , Symbols only get longer , If that's better , It is never possible to go back to shorter symbols . Continuous injection of single byte symbols allows Grow again Because of this Too greedy A valuable long symbol lost in the choice of .

- FSST Good properties

- Keep string properties : It will not be converted into other types of text because of encoding .

- Compress query processing . Directly compare the symbols in the table .

- string matching . You can also perform more complex and frequently occurring string operations on compressed strings ( for example ,LIKE Pattern matching ), Automata designed to recognize them by converting them into byte streams , Remap them into the code flow .

- Symbol table is very small . The maximum size of the symbol table is 8*255+255 byte , But it usually takes only a few hundred bytes , Because the average symbol length is usually 2 about . therefore , It is perfectly possible to compress each page of each string column using a separate symbol table , However, a coarser granularity can also be used ( By row group or entire table ). Finer grained symbol table construction leads to better compression factors , Therefore, the symbol table will be more suitable for compressed data .

- Parallelism . Because there is no ( Explain ) Compression state ,FSST( Explain ) Compression parallelization is simple —— Only the symbol table construction algorithm may need serialization . The other side Noodles , Let each thread that loads data blocks in batches construct a separate symbol table ( It should be placed in each block ) It's also acceptable , This compression will also become very parallel .

- 0-terminated character string .FSST Optionally generated to 0 a null-terminated string , Because in order to 0 The bytes in the ending string only appear at the end of each string , In fact, there are 254 Generations The code needs to be compressed . This slightly reduces the level of compression ( The lowest value 255 Symbols must be deleted from the symbol table , It will appear using escape bytes To deal with ), But this optional mode allows FSST Adapt to many existing infrastructures .

:::

5. Existing problems

- repeat

First, calculate the length as 1 To 8 Frequency of occurrence of substrings of in data , And then according toGain sequenceChoose the former 255 Symbols . The problem with this approach is :The selected symbols may overlap, So the calculated gain will beoverestimate. for example , stay URL Data set ,8 Byte symbol http://w Almost every string contains , Most likely to be selected . But the symbols ttp://ww and tp://www It also looks promising , Adding all three candidates to the symbol table is a waste of a limited amount of code , And will have a negative impact on the compression factor . - Greed

Greedily during encodingSelect the longestSymbols of do not necessarily maximize compression efficiency . Refer to what we mentioned aboveContinuous single byte injection. - in summary , Symbol overlap and greedy coding , Cause the dependency problem between symbols , This makes it difficult to estimate the gain , To create a good symbol table .

Optimization plan

::: hljs-left

(1) Iterative corpus , Use the current symbol table Dynamic coding . This stage Calculation The overall quality of the symbol table ( Compressibility factor ), But also calculate the value of each symbol in the compressed representation Frequency of occurrence , And each Consecutive sign pairs .

(2) Use these counts , By choice The apparent gain is the highest The symbol of Build a new symbol table .

example

corpus “tumcwitumvldb” Upper 4 Sub iteration . To make the example easy to illustrate , Limit the maximum symbol length to 3( instead of 8), The maximum symbol table size is limited to 5( instead of 255). After each iteration , Display the compressed string at the top , But for readability , Do not display code , Instead, the corresponding symbol is displayed .“$” Represents an escape byte .

:::

- This is the original uncompressed string

- In the first iteration , The length of the compressed string

Temporary double, Because the symbol tableAt first it was empty, Every symbol must be escaped . At the bottom of the picture , We show the symbol table , front 5 Bit symbols are based onStatic gain.

iteration 1 after , Static gain ranked top 5 Bitwise is “um”、“tu”、“wi”,“cw” and “mc”. The first two uppermost symbols (“um”,“tu”) It appears twice , So the gain is 4, The last three symbols (“wi”,“cw” and “mc”) Only one Time , So the gain is 2. Be careful , Symbol “mv”,“vl”,“ld”,“db”, “m”,“t”,“u” The gain is also 2, Can also be selected . let me put it another way , When the top symbol is selected , The algorithm will Choose... Arbitrarily .

- In the 2、3 and 4 In the next iteration , Symbol table

Steady improvement in quality.

iteration 4 after , The initial length is 13 The corpus of is compressed into 5.

The figure also shows , The algorithm is also

There will be errors, But these mistakes will happen inFixed in next iteration.for example , In the 2 In the next iteration , Symbol “tu” It looks quite attractive , The static gain is 4, But because of “tum” Also in the symbol table in ,“tu” Eventually it becomes worthless

- And in the first place 3 Corrected in iteration

Technology optimization :

AVX512 Compress

First step ,FSST API Compress a batch of strings , The string is copied to a string created by 512 A temporary..., consisting of segments buffer in , If necessary Division Long string , And add Terminator .

The second step , First, the job queue array is sorted by the reverse string length Radix sorting —— fast , Just one pass —— So first process the longest string , This helps Load balancing . Jobs may be completed in a discontinuous sequence , Therefore, the coding work is started in a discontinuous sequence due to sorting , Does not make the algorithm ( further ) complicate .

The third step ,AVX512 The advantage of is not memory access , But in the compressed kernel Parallel computing . Not just a one-time start SIMD kernel To deal with it 8 In one channel 8 A string ( or 24 In one channel 24 A string ,3× an ), Because some strings are much shorter than others , And some strings are better than Other strings are much more compressed . This will mean that at the end of the coding work , Many channels will be empty . therefore , buffer 512 Homework , And refill the lanes in each iteration as needed . Exit the job ( In the job control register through Avenue ) send use compress_store finger Make , and fill charge expand_load Instructions .

FSST And LZ4 contrast

· Speed

The table above shows LZ4 and FSST The relative performance of the three indicators on each data set respectively and averaged .

For almost all data sets ,FSST The compression factor and compression speed are better than LZ4. On average, , In addition to producing a better compression factor FSST The compression speed is also improved 60%. For decompression speed ,FSST Faster on some datasets , and LZ4 Faster on other datasets —— The average speed is almost the same .

· Random access

In the database scenario , Large files are not usually stored , Instead, use string attributes or dictionaries that contain a large number of relatively short strings . use LZ4 single Compressing these strings alone yields a very poor compression factor , Ordinary LZ4 (LZ4 That's ok ) Can't reasonably handle short strings — The compression factor is less than 1, This means that the data size actually increases slightly .LZ4 Optionally, the use of additional dictionaries is also supported , The dictionary needs to be provided with compressed data for . Use zstd Pre generate a dictionary suitable for the corpus (LZ4 Dictionaries ), The compression factor is improved to some extent , But it seriously affects the compression speed .

The following figure shows the test results

· Sub text data

Outside the database environment , Compressed communities often evaluate Silesia corpus Compression method on , The corpus is composed of 11 Files make up , among 4 individual yes text file (dick- ens, reymont, mr, webster),1 Yes XML, 6 Yes Binary .FSST The compressed size of text files is increased on average 10%, But the compressed size of binary files is reduced on average 25%. Although binary files are considered to be related to FSST irrelevant , But it's in the big XML and JSON file Upper Compression ratio Than LZ4 Bad 2-2.5 times , It's related . however , The database system should not store these combined values as simple strings , Instead, it should be stored as a special type that allows query processing . for example ,Snowflake distinguish JSON Structure in column , And inside will

Every common occurrence JSON Attributes are stored in separate internal Columns .

FSST Evolution of

FSST The compression algorithm achieves the current design after many iterations . submit a memorial to the emperor Shows 7 Of various varieties Compressibility factor (CF)、 Symbol table construction cost (CS) He Zi String encoding speed (SE)

The first design is based on the suffix array , The compression factor reaches 1.97, But the construction of the symbol table requires 74.3 cy- cles/ byte , Coding requires 160 cycles/ byte .

current AVX512 edition ( Variations in the figure 7) Fast in table building 90 times , fast 40 times Used to encode more than the first version - At the same time, it provides a higher compression factor .

The final version is also better than the original version FSST edition ( variant 4) Much faster , This is due to the loss of perfect hashes and AVX512—— Despite having to sacrifice relative to the variant 4 about 6% Spatial gain of .

Although it takes many iterations , However, the construction time of symbol table only accounts for a small part of the coding time . To optimize the construction, we need to reduce the number of iterations from 10 Times reduced to 5 Time , Build an example ( Each iteration grows ), And reduce the memory occupation of the counter .

Repeat the process

System requirements

- Linux、Windows、MacOS All possible , Here is Deepin 20.5 / Linux Environmental Science

Source code preparation

- First , Came to FSST Open source repository of https://github.com/cwida/fsst, On configured Git In the case of environment, you can copy according to the position indicated in the figure https or ssh Address to clone the source code to the local ; If not configured Git, May refer to github or gitee According to the relevant instructions of . however ,Git It is not necessary here , It can also be manually operated “Download ZIP” Get the compressed package and decompress it .

- As mentioned above , in the light of Git Environmental Science , Type the following command in the terminal :

git clone https://github.com/cwida/fsst.git # If configured SSH Public key , The following figure can be used

cd fsst/

Environment building

- CMake install

- FSST Use C++ Language implementation , So rely on CMake Tools to compile and build ,Debian It can be used conveniently under the system apt Implement tool installation initialization , Type and execute the following command :

sudo apt update

sudo apt install cmake

You can see cmake Has been installed before , And the version is 3.18.4 :

- Zstd And LZ4 The installation of the library

- FSST Need to call zstd And lz4 Correlation API To generate the corresponding dictionary during compression , Therefore, you also need to prepare the corresponding dynamic library :

sudo apt install zstd* lz4* libzstd* liblz4* # Same as cmake Installation

After completion , Can be in /usr/lib/x86_64-linux-gnu/ See that the relevant files have been generated :

Besides , It also needs to be established to /usr/lib/ The soft links , Avoid the problem that the default directory cannot be found during subsequent links :

# Be careful , Below, such as '1.4.8' '1.8.3' The version number of a class needs to be replaced according to the actual situation

cd /usr***/lib/x86_64-linux-gnu/

echo '../libzstd.so ../libzstd.so.1 ../libzstd.so.1.4.8' | sudo xargs -n 1 ln -s libzstd.so.1.4.8

echo '../liblz4.so ../liblz4.so.1 ../liblz4.so.1.8.3' | sudo xargs -n 1 ln -s liblz4.so.1.8.3

Compiling and constructing

- The environment deployment is basically completed , Let's start compiling .

cd -

cmake ./

make -j8 && make binary

100% After completion , Description compilation succeeded , Check the directory , You can see fsst Binary program for has been generated :

- For the convenience of subsequent operation , It is recommended to add it to the environment variable :

vim ~/.bashrc

export PATH=/home/yanxu/ELT.ZIP/fsst:$PATH # Add... At the end of the line

:wq # Save and exit

It can be used normally , Input fsst You can view the instructions :

Evaluation test

automated testing

- Sufficient data sets are available in the warehouse to evaluate the algorithm , There are also automated scripts to help you run the whole course with one click , Let's have a try :

cd paper/

chmod +x *.sh

results The results tested by the author have been stored in the directory , But we can do it again on our own machine , Execute the following command :

make experiments

It will take a long time , Just wait patiently , Here is an example of the author :

The leftmost column is a wide variety of character sets , The other three frames represent the compression ratio 、 Compression speed 、 Decompression speed , among , On the left is LZ4、 The right side is FSST. It's not hard to see. , Compression ratio ,FSST Only in urls Upper comparison LZ4 A little weaker ; Compression speed ,LZ4 Only in hex Got a slight advantage in ; In terms of decompression speed , It's the two that win or lose , Can be regarded as negligible .

Manual testing

- Then besides , You can also manually compare more algorithms .

reference :

边栏推荐

- 词汇表的构建——代码补全快餐教程(3)-分词

- Why is vfly, a high-end brand of Yadi that does not live up to its name, not high-end?

- Windows10 installing keras

- three. JS cool technology background H5 animation

- Thinkphp3.2.3 deserialization using chain analysis

- UML系列文章(28)体系结构建模---协作

- PostgreSQL source code learning (XX) -- fault recovery ① - transaction log format

- Canvas rotation drawing H5 animation JS effect

- 科技PRO实力测评:高端按摩椅市场综合PK,究竟谁才配得上机皇?

- jlink最新版本下载

猜你喜欢

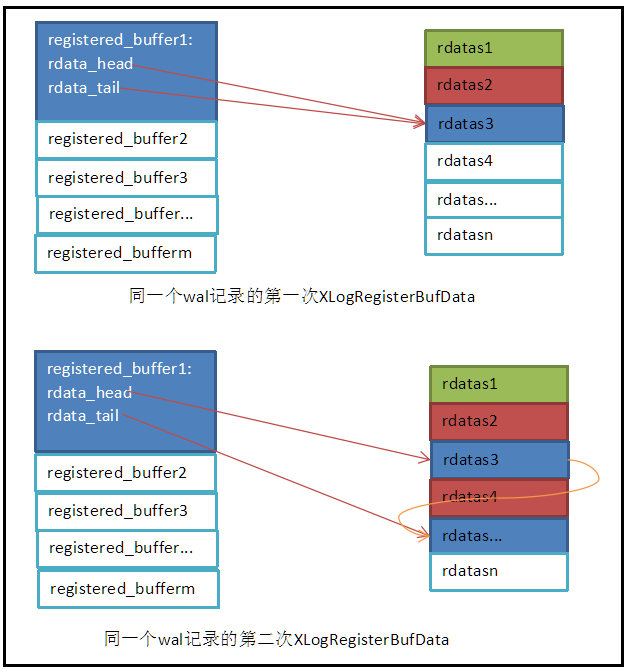

PostgreSQL source code learning (22) - fault recovery ③ - transaction log registration

用Fragment实现图片简易浏览

has been blocked by CORS policy: No ‘Access-Control-Allow-Origin‘ header is present on the requested

OpenGL错误指南

iQOO 8实测上手体验:王者归来,从不高调

Canvas+svg line particle animation web page background

基于SSM框架的连锁超市购物零售后台管理系统

基于SSM框架的学生在线教育教学课程管理系统

net::ERR_ FILE_ NOT_ Found error

LVGL中文字体制作

随机推荐

Oppo K9 tests "bundling sales" and consumers "earn" or "lose"?

【ELT.ZIP】OpenHarmony啃论文俱乐部——数据高通量无损压缩方案

Why is vfly, a high-end brand of Yadi that does not live up to its name, not high-end?

Path count 2 (DP + number of combinations)

Product milestones in May 2022

Checkbox beautify button selected style

The dependent version number in the dependencymanagement in the POM project parent in the idea is red

Mavros控制无人机在gazebo环境下进行双目SLAM

{dataSource-1} closing ... {dataSource-1} closed

ARM开发板方案与厂商分析

被“内卷”酸翻的OPPO Reno6

postgresql 函数的参数为自定义类型时传参格式

R analysis visual practical data (flight \u education \u restaurant \u tenant \u change \u life \u safety)

Simple image browsing with fragment

How should Xiaobai start the Amazon self support evaluation?

Multi thread alternate output ab

Oppo reno6 turned sour by "inner roll"

RequestContextHolder

R bioinformatics statistical analysis

SQL | some indicators of the game industry