
Several methods of online database migration

2022-07-04 19:35:00 【Koikoi123】

Internet systems often need data migration. Many scenarios call for it: moving from a self-hosted data center to a cloud platform, moving from one cloud platform to another, changing the table structure after a system refactor, splitting databases and tables (sharding), or switching to a different database product.

In the Internet industry, many systems carry heavy traffic, and even at two or three in the morning there is still a certain amount of it. If the service is suspended for a few minutes to migrate data, the business side will find this hard to accept. This article discusses how to design a zero-downtime data migration solution that users do not notice.

What should we pay attention to during data migration?

- First, the migrated data must be accurate and complete: every record is correct and no record is lost.
- Second, the user experience must not be affected. High-traffic consumer-facing (C-end) services in particular need a smooth migration without downtime.
- Third, performance and stability must be ensured after the migration.

Traditional technical solutions

Traditionally, a DBA handles the migration. The DBA first takes the database out of service. Next, the DBA writes a migration script that exports the data to an intermediate file. Finally, the DBA writes an import script that loads that file into the target database.
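The export/import flow above can be sketched as follows. This is a minimal illustration under stated assumptions:

- SQLite stands in for both the source and the target database.
- CSV is the intermediate file format.
- The function names `export_table`, `import_table` and `offline_migrate` are hypothetical.

In practice a DBA would usually use the database's own dump and load tools.

```python
import csv
import os
import sqlite3
import tempfile


def export_table(src: sqlite3.Connection, table: str, path: str) -> int:
    """Dump every row of `table` into a CSV file; returns the row count."""
    # `table` is interpolated into SQL, so it must be a trusted constant.
    cur = src.execute(f"SELECT * FROM {table}")
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow([d[0] for d in cur.description])  # header row
        count = 0
        for row in cur:
            writer.writerow(row)
            count += 1
    return count


def import_table(dst: sqlite3.Connection, table: str, path: str) -> int:
    """Load the CSV produced by export_table into `table`; returns the row count."""
    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.reader(f)
        columns = next(reader)
        rows = list(reader)
    placeholders = ", ".join("?" for _ in columns)
    dst.executemany(
        f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})", rows
    )
    dst.commit()
    return len(rows)


def offline_migrate(src: sqlite3.Connection, dst: sqlite3.Connection, table: str):
    """Stop-the-world migration: export to an intermediate file, then import it.

    Assumes the application has already been stopped, so `src` does not change.
    """
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, f"{table}.csv")
        exported = export_table(src, table, path)
        imported = import_table(dst, table, path)
    return exported, imported
```

CSV stores every value as text and writes NULL as an empty string. SQLite's column affinity turns numeric text back into numbers, but NULLs come back as empty strings. Gaps like this are one reason heterogeneous migrations need an explicit conversion step.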

Disadvantages of traditional schemes

- The database is out of service for hours or even days, and the application cannot be used in the meantime. In some industries this is basically unacceptable.

- It cannot handle heterogeneous migrations. For example, Oracle or SQL Server and MySQL have different data structures, and the mapping between them can be fairly complex. That requires data conversion, which a plain export and import cannot provide.

Adding a slave database

Build a slave database of the master. Once the slave has finished synchronizing, promote it to master (it becomes the new database) and switch traffic to it.

This method suits scenarios where:

- the data structure does not change;
- traffic is very low during idle hours;
- downtime migration is allowed.

It typically applies to platform migrations, such as moving from a self-hosted data center to a cloud platform, or from one cloud platform to another. Most small and medium-sized Internet systems see little traffic during idle hours. A few minutes of downtime then has little impact on users, and the business side can accept it. In that case, we can use downtime migration. The steps are as follows:

1. Create a slave database (the new database), and start replicating data from the master to the slave.

2. After synchronization completes, pick an idle time window. To keep the master and slave consistent, first stop the service, then promote the slave to master. If the application reaches the database through a domain name, point the domain name at the new database (the promoted slave). If it uses an IP address, change the IP to the new database's IP.

3. Finally, restart the service. The migration is complete.
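The cutover in steps 2 and 3 can be sketched as below. This is a minimal sketch under two assumptions:

- Every callable (`stop_service`, `promote_replica`, `repoint_clients` and so on) is a hypothetical hook into your own tooling.
- Replication positions are modeled as plain integers. In MySQL they would wrap real replication status such as binlog coordinates or GTID sets, which is not shown here.

The wait after stopping the service reflects the consistency goal of step 2: promote the slave only after it has applied everything the master wrote.

```python
import time
from typing import Callable


def wait_for_replica_catch_up(
    primary_position: Callable[[], int],
    replica_position: Callable[[], int],
    timeout_s: float = 30.0,
    poll_s: float = 0.5,
) -> bool:
    """Poll until the slave has applied everything the master has written."""
    deadline = time.monotonic() + timeout_s
    while True:
        if replica_position() >= primary_position():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)


def cut_over(
    stop_service: Callable[[], None],
    wait_caught_up: Callable[[], bool],
    promote_replica: Callable[[], None],
    repoint_clients: Callable[[], None],
    start_service: Callable[[], None],
) -> None:
    """Downtime cutover from the old master to the promoted slave (hypothetical hooks)."""
    stop_service()  # from here on, no new writes reach the old master
    if not wait_caught_up():
        start_service()  # abort: the old master is still authoritative and unchanged
        raise RuntimeError("slave did not catch up; cutover aborted")
    promote_replica()  # the slave becomes the new master
    repoint_clients()  # update the DNS record, or the configured IP address
    start_service()
```

If the slave never catches up, the sketch restarts the service against the untouched old master rather than promoting a slave that is missing writes.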

The advantages of this scheme are low cost and a short migration period. The disadvantage is that the service must be stopped while the database is switched over.

Double write

Write to the old database and the new database at the same time. Then migrate the existing data to the new database in batches. Finally, switch traffic to the new database and stop reading from and writing to the old one.

This method suits scenarios where the data structure changes and downtime migration is not allowed.

It usually applies during a system refactor that changes the data structure, such as a table schema change or splitting into multiple databases and tables. Some large Internet systems have very high concurrency and still see considerable traffic even in idle hours. For them, a few minutes of downtime would noticeably affect users and might even lose some of them, which the business side cannot accept. So we need a zero-downtime migration that users do not notice.

Let's take a user-system refactor as an example. In that case, the user table had reached 30 million records, and system performance and maintainability had degraded. We split the user center out of the monolithic project, rebuilt it, and redesigned the table schema. The business side required the change to go live without downtime. Our plan at the time was as follows:

- Code preparation. Everywhere the service layer inserts, deletes or updates the user table, modify the code to operate on both the new and the old database (writing both at the same time). Prepare a migration script for moving the old data. Prepare a checker script that verifies the new database's data matches the old database's.

- Turn on double writing, so the old and new databases are written at the same time. Note the following:
  - Every insert, delete and update must be double-written.
  - For an update, if the new database does not have the record yet, first read the record from the old database, then write the updated record to the new database.
  - To preserve write performance, a message queue can write to the new database asynchronously once the write to the old database has finished.

- Use a script to migrate old data created before a chosen timestamp to the new database. Notes:
  1. The timestamp must be a point after double writing was enabled (for example, 10 minutes after it was turned on), so that no old data is missed.
  2. Ignore record conflicts during migration, because the update handling in step 2 may already have copied some records into the new database.
  3. Log the migration, especially errors. If some records fail to be written, they can be recovered from the logs, keeping the old and new databases consistent.

- After step 3, run a verification script. It checks that the data in the new database is accurate and that nothing is missing.

- Once the data check passes, turn on double reading. At first, send a small share of read traffic to the new database, so both databases serve reads. Because of synchronization delay, a few records may differ between the new and old databases. A read that misses in the new database should therefore fall back to the old one. Gradually shift read traffic to the new database, which amounts to a gray (canary) release. If a problem appears, switch traffic back to the old database promptly.

- After all read traffic has moved to the new database, stop writing to the old database and write only to the new one. A hot configuration switch in the code can handle this.

- The migration is complete. The double-write and double-read code, now unused, can then be removed.
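The core of the double-write plan can be sketched with two in-memory SQLite databases. It covers four parts: mirroring writes, copying a missing row from the old database on update, the timestamp-bounded migration that ignores conflicts, and the checker.

This is a minimal sketch, not the original team's code, with these assumptions:

- The `users` schema, `DualWriteUserRepo`, `backfill` and `check_consistency` are hypothetical.
- Old and new tables share one schema for brevity.
- The new-database write is synchronous rather than going through a message queue.

```python
import logging
import sqlite3

log = logging.getLogger("migration")


def make_db() -> sqlite3.Connection:
    """In-memory stand-in for one database, with an assumed simplified user table."""
    db = sqlite3.connect(":memory:", isolation_level=None)  # autocommit
    db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, created_at INTEGER)")
    return db


class DualWriteUserRepo:
    """Service-layer writes: old database first, then mirrored to the new one."""

    def __init__(self, old: sqlite3.Connection, new: sqlite3.Connection) -> None:
        self.old, self.new = old, new

    def insert(self, uid: int, name: str, created_at: int) -> None:
        row = (uid, name, created_at)
        self.old.execute("INSERT INTO users VALUES (?, ?, ?)", row)
        self.new.execute("INSERT INTO users VALUES (?, ?, ?)", row)

    def update_name(self, uid: int, name: str) -> None:
        sql = "UPDATE users SET name = ? WHERE id = ?"
        self.old.execute(sql, (name, uid))
        if self.new.execute(sql, (name, uid)).rowcount == 0:
            # Record not migrated yet: copy the freshly updated row from the old DB.
            row = self.old.execute(
                "SELECT id, name, created_at FROM users WHERE id = ?", (uid,)
            ).fetchone()
            if row is not None:
                self.new.execute("INSERT OR IGNORE INTO users VALUES (?, ?, ?)", row)

    def delete(self, uid: int) -> None:
        self.old.execute("DELETE FROM users WHERE id = ?", (uid,))
        self.new.execute("DELETE FROM users WHERE id = ?", (uid,))


def backfill(old: sqlite3.Connection, new: sqlite3.Connection,
             cutoff: int, batch_size: int = 500) -> int:
    """Copy rows created before `cutoff` in id order; conflicts are ignored."""
    copied, last_id = 0, 0
    while True:
        rows = old.execute(
            "SELECT id, name, created_at FROM users "
            "WHERE created_at < ? AND id > ? ORDER BY id LIMIT ?",
            (cutoff, last_id, batch_size),
        ).fetchall()
        if not rows:
            return copied
        for row in rows:
            try:
                # INSERT OR IGNORE skips ids that double writing already created.
                cur = new.execute("INSERT OR IGNORE INTO users VALUES (?, ?, ?)", row)
                copied += cur.rowcount
            except sqlite3.Error:
                # Keep a record of failures so they can be repaired afterwards.
                log.exception("backfill failed for id=%s", row[0])
        last_id = rows[-1][0]


def check_consistency(old: sqlite3.Connection, new: sqlite3.Connection,
                      batch_size: int = 1000) -> list:
    """Compare both tables in id ranges; return ids that are missing or differ."""
    max_id = max(
        db.execute("SELECT COALESCE(MAX(id), 0) FROM users").fetchone()[0]
        for db in (old, new)
    )
    query = "SELECT id, name, created_at FROM users WHERE id >= ? AND id < ?"
    mismatched = []
    for lo in range(0, max_id + 1, batch_size):
        a = {r[0]: r for r in old.execute(query, (lo, lo + batch_size))}
        b = {r[0]: r for r in new.execute(query, (lo, lo + batch_size))}
        mismatched.extend(sorted(k for k in a.keys() | b.keys() if a.get(k) != b.get(k)))
    return mismatched
```

The two writes in `DualWriteUserRepo` are not atomic, and races remain. For example, a delete that lands between the backfill's read and its insert can leave a stray row in the new database. Catching that kind of drift is what the checker step is for. When the table schema changes, the checker should compare rows after mapping them to the new schema.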

Using data synchronization tools

The scheme above is cumbersome, because every place that writes to the database needs code changes. Is there a better option? We can also use tools such as Canal or Databus for data synchronization. Let's take Alibaba's open-source Canal as an example.

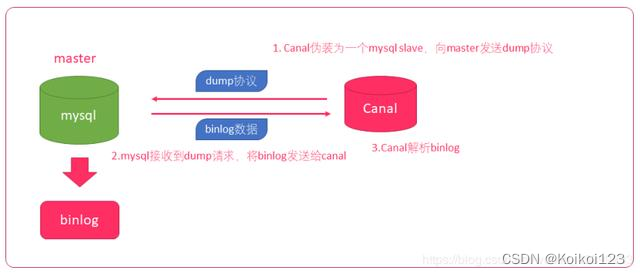

The figure above shows how Canal works:

- Canal implements the MySQL slave interaction protocol, presenting itself as a MySQL slave.

- It sends a dump request to the MySQL master.

- The MySQL master receives the dump request and starts pushing its binary log to the slave (that is, to Canal).

- Canal parses the binary log byte stream into objects. The application then processes them according to its use case.
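On the consuming side, the application receives parsed row changes and applies them to the new database. The sketch below rests on two assumptions:

- It uses a simplified, hypothetical `RowChange` event type rather than Canal's actual client classes.
- It uses a hypothetical mapping from an old `user` table (with a `user_name` column) to a redesigned `users` table, with SQLite as the target.

```python
import sqlite3
from dataclasses import dataclass
from typing import Literal, Optional


@dataclass
class RowChange:
    """Hypothetical, simplified form of one parsed binlog row event.

    This is NOT Canal's client API; real Canal entries would be mapped to it.
    """
    event_type: Literal["INSERT", "UPDATE", "DELETE"]
    table: str
    before: Optional[dict]  # row image before the change (None for INSERT)
    after: Optional[dict]   # row image after the change (None for DELETE)


def to_new_schema(old_row: dict) -> tuple:
    # Hypothetical mapping from the old `user` table to the redesigned `users` table.
    return (old_row["id"], old_row["user_name"].strip(), old_row["created_at"])


def apply_change(new_db: sqlite3.Connection, change: RowChange) -> None:
    """Apply one change to the new DB using key-based upserts and deletes."""
    if change.table != "user":
        return  # only the user table is being migrated here
    if change.event_type in ("INSERT", "UPDATE"):
        new_db.execute(
            "INSERT OR REPLACE INTO users (id, name, created_at) VALUES (?, ?, ?)",
            to_new_schema(change.after),
        )
    elif change.event_type == "DELETE":
        new_db.execute("DELETE FROM users WHERE id = ?", (change.before["id"],))
```

Full row images are written as upserts, and deletes go by primary key. This makes it safe to re-consume a range of the binlog after a restart, provided events for the same row are applied in order.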

With Canal, the user-system migration above no longer needs double writing. The service layer needs no double-write code either, because Canal handles incremental data synchronization directly. The steps become:

- Code preparation. Prepare the Canal client code, which parses the binary log objects and writes the parsed user data into the new database. Prepare a migration script for moving the old data. Prepare a checker script that verifies the new database's data matches the old database's.

- Run the Canal code to start synchronizing incremental data (new data produced online) from the old database to the new one.

- Use a script to migrate old data created before a chosen timestamp to the new database. Notes:
  1. The timestamp must be a point after the Canal program started running (for example, 10 minutes after starting it), so that no old data is missed.
  2. Log the migration, especially errors. If some records fail to be written, they can be recovered from the logs, keeping the old and new databases consistent.

- After step 3, run a verification script. It checks that the data in the new database is accurate and that nothing is missing.

- Once the data check passes, turn on double reading. At first, send a small share of read traffic to the new database, so both databases serve reads. Because of synchronization delay, a few records may differ between the new and old databases. A read that misses in the new database should therefore fall back to the old one. Gradually shift read traffic to the new database, which amounts to a gray (canary) release. If a problem appears, switch traffic back to the old database promptly.

- After all read traffic has moved to the new database, switch write traffic to the new database. A hot configuration switch in the code can handle this. The Canal program keeps running during the switch, so it still captures changes made to the old database and syncs them to the new one. No old-database changes are left unsynchronized by the switch.

- Shut down the Canal program.

- The migration is complete.
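The double-read and write-switch steps can be sketched as a small router whose settings come from hot configuration. This is a sketch under assumptions:

- `MigrationRouter`, its two settings, and crc32-based bucketing by user id are hypothetical choices.
- The readers and writers are plain callables standing in for real data-access code.

```python
import zlib
from typing import Callable, Optional

Reader = Callable[[int], Optional[str]]
Writer = Callable[[int, str], None]


class MigrationRouter:
    """Hot-switchable read/write routing for the cutover phase (a sketch)."""

    def __init__(self, read_old: Reader, read_new: Reader,
                 write_old: Writer, write_new: Writer) -> None:
        self.read_old, self.read_new = read_old, read_new
        self.write_old, self.write_new = write_old, write_new
        # Both settings are meant to be reloaded from a hot configuration source.
        self.new_read_percent = 0   # 0..100; set back to 0 to roll reads back
        self.write_to_new = False   # flip only after all reads are on the new DB

    def _in_new_bucket(self, user_id: int) -> bool:
        # crc32 gives a stable bucket, so a given user consistently hits the same DB.
        return zlib.crc32(str(user_id).encode()) % 100 < self.new_read_percent

    def get_user(self, user_id: int) -> Optional[str]:
        if self._in_new_bucket(user_id):
            row = self.read_new(user_id)
            if row is not None:
                return row
            # Miss in the new DB (e.g. sync delay): fall back to the old DB.
        return self.read_old(user_id)

    def save_user(self, user_id: int, value: str) -> None:
        if self.write_to_new:
            self.write_new(user_id, value)
        else:
            self.write_old(user_id, value)
```

Bucketing by a stable hash of the user id keeps each user on the same database during the gray release, instead of picking a database at random per request. Results therefore do not flip between databases from one request to the next. Setting `new_read_percent` back to 0 is the rollback path.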

Canal can also handle zero-downtime migration when the data structure does not change. In that case, the DBA can migrate the old data in step 3 directly with tools, and the other steps are basically the same as above.

Alibaba Cloud Data Transmission Service (DTS)

Data Transmission Service (DTS) is an Alibaba Cloud data streaming service. It supports data exchange among many kinds of data sources, including RDBMS (relational databases), NoSQL and OLAP. DTS provides data migration, real-time data subscription and real-time data synchronization. It supports scenarios such as:

- zero-downtime data migration;
- remote disaster recovery;
- geo-distributed active-active (unitized) deployment;
- cross-border data synchronization;
- real-time data warehousing;
- offloading queries and reports;
- cache updates;
- asynchronous message notification.

It helps you build a secure, scalable and highly available data architecture.

Advantages: DTS supports data transfer among RDBMS, NoSQL, OLAP and other data sources, through data migration, real-time data subscription and real-time data synchronization. Compared with third-party data streaming tools, DTS offers a wider variety of transmission links with high performance, security and reliability. It also provides many convenience features that make creating and managing transmission links much easier.

Personal understanding: it behaves like a message queue. It delivers packaged SQL objects to you, and you can build a service that parses them.

Alibaba Cloud documentation quick entry: https://help.aliyun.com/product/26590.html

It removes the high cost of deploying and maintaining such a pipeline yourself. For Alibaba Cloud RDS (managed relational databases), DRDS and similar products, DTS keeps subscriptions highly available during binlog purging, primary/standby switchover and VPC network switching. It is also performance-optimized for RDS. For stability, performance and cost reasons, it is recommended.

Appendix: exporting data with DataGrip

边栏推荐

- Is Guoyuan futures a regular platform? Is it safe to open an account in Guoyuan futures?

- 1002. A+B for Polynomials (25)(PAT甲级)

- 1006 Sign In and Sign Out(25 分)(PAT甲级)

- Oracle with as ora-00903: invalid table name multi report error

- Unity adds a function case similar to editor extension to its script, the use of ContextMenu

- 1007 Maximum Subsequence Sum(25 分)(PAT甲级)

- 明明的随机数

- 牛客小白月赛7 谁是神箭手

- 项目中遇到的线上数据迁移方案1---总体思路整理和技术梳理

- Hough transform Hough transform principle

猜你喜欢

LM10丨余弦波动顺势网格策略

从实时应用角度谈通信总线仲裁机制和网络流控

Wireshark网络抓包

Lenovo explains in detail the green smart city digital twin platform for the first time to solve the difficulties of urban dual carbon upgrading

FPGA timing constraint sharing 01_ Brief description of the four steps

Hough Transform 霍夫变换原理

Stream stream

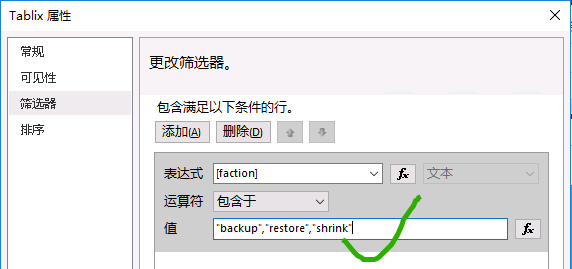

SSRS筛选器的IN运算(即包含于)用法

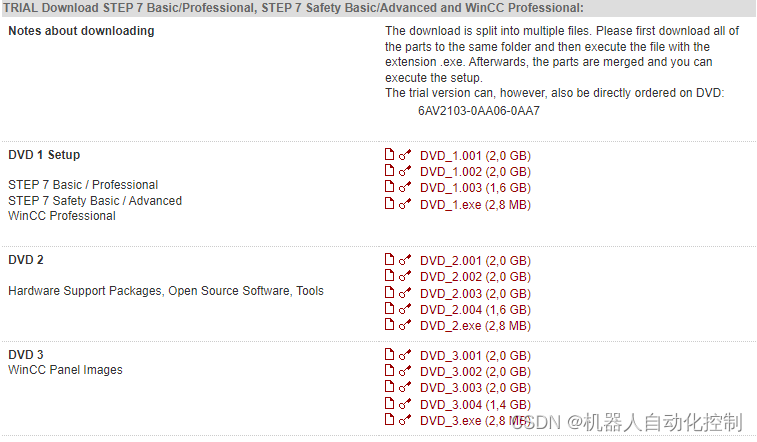

西门子HMI下载时提示缺少面板映像解决方案

2022CoCa: Contrastive Captioners are Image-Text Fountion Models

随机推荐

Shell 编程核心技术《二》

数组中的第K个最大元素

如何使用Async-Awati异步任务处理代替BackgroundWorker?

LeetCode第300场周赛(20220703)

Jetpack Compose 教程

Have you guys ever used CDC direct Mysql to Clickhouse

测试工程师如何“攻城”(上)

Lenovo explains in detail the green smart city digital twin platform for the first time to solve the difficulties of urban dual carbon upgrading

YOLOv5s-ShuffleNetV2

Oracle with as ORA-00903: invalid table name 多表报错

测试工程师如何“攻城”(下)

How to use async Awati asynchronous task processing instead of backgroundworker?

Stream stream

Go microservice (II) - detailed introduction to protobuf

Unity编辑器扩展C#遍历文件夹以及子目录下的所有图片

1009 Product of Polynomials(25 分)(PAT甲级)

Leetcode fizzbuzz C # answer

QT realizes interface sliding switching effect

C#实现定义一套中间SQL可以跨库执行的SQL语句(案例详解)

2022CoCa: Contrastive Captioners are Image-Text Fountion Models