Hands-on deep learning: activation functions and code implementation of the multilayer perceptron

2022-06-12 08:13:00 【Orange acridine 21】

Perceptron

Given an input x (a vector), a weight w (a vector), and a bias b (a scalar), the perceptron outputs o = σ(⟨w, x⟩ + b), where σ(z) is 1 if z > 0 and 0 otherwise.

The perceptron cannot fit the XOR function; it can only produce a linear decision boundary.

The perceptron is a binary classification model and one of the earliest AI models.

The perceptron's training algorithm is equivalent to gradient descent with a batch size of 1.
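The training rule can be sketched in plain Python. This is a minimal illustration rather than the original author's code: it assumes labels in {-1, +1} (a common convention for training, even though the output above is written as 0 or 1), a learning rate of 1, and the hypothetical helper names `train_perceptron` and `predict`. Each update is one gradient step on the loss max(0, -y(⟨w, x⟩ + b)) for a single sample.

```python
def train_perceptron(samples, labels, max_epochs=100):
    """Classic perceptron rule; labels are assumed to be -1 or +1."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score <= 0:  # misclassified, or exactly on the boundary
                # One gradient step on max(0, -y * score) for this single sample
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:  # every sample is classified correctly
            break
    return w, b


def predict(w, b, x):
    """Return +1 if the score is positive, otherwise -1."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]

# AND is linearly separable, so the rule converges.
and_labels = [-1, -1, -1, 1]
w, b = train_perceptron(inputs, and_labels)
and_predictions = [predict(w, b, x) for x in inputs]

# XOR is not linearly separable, so at least one point stays misclassified.
xor_labels = [-1, 1, 1, -1]
w_xor, b_xor = train_perceptron(inputs, xor_labels)
xor_predictions = [predict(w_xor, b_xor, x) for x in inputs]
```

On the AND data the predictions match the labels, while on XOR no choice of w and b can classify all four points, whatever value `max_epochs` takes.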

Multilayer perceptron

1. Learning XOR
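One way to see how an extra layer handles XOR is to wire up two hidden step units by hand. The weights below are hand-picked for illustration and are not taken from the original post: the first hidden unit acts like OR, the second like AND, and the output fires when OR is on but AND is off.

```python
def step(z):
    """Hard threshold: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0


def xor_net(x1, x2):
    """Two-layer network with hand-picked (illustrative) weights."""
    h_or = step(x1 + x2 - 0.5)    # 1 if at least one input is 1
    h_and = step(x1 + x2 - 1.5)   # 1 only if both inputs are 1
    return step(h_or - h_and - 0.5)


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor_outputs = [xor_net(x1, x2) for x1, x2 in inputs]
```

Each hidden unit draws one line in the input plane, and the output unit combines the two half-planes, which a single perceptron cannot do.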

2. Single hidden layer, single-class output

Why do we need a nonlinear activation function?

Without an activation function, stacking n fully connected layers still produces nothing more than a linear model, because a composition of linear maps is itself linear.
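The collapse can be checked numerically. The sketch below uses small made-up weight matrices (all values are assumptions chosen for easy arithmetic) and the hypothetical helpers `affine` and `compose` to show that two stacked layers without an activation equal one affine map, and that inserting ReLU breaks the equivalence.

```python
def affine(W, b, x):
    """Return W x + b for a weight matrix W (list of rows) and bias vector b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def compose(W2, b2, W1, b1):
    """Collapse x -> W2 (W1 x + b1) + b2 into a single affine map (W, b)."""
    cols = list(zip(*W1))  # columns of W1
    W = [[sum(a * c for a, c in zip(row, col)) for col in cols] for row in W2]
    b = [sum(a * c for a, c in zip(row, b1)) + bi for row, bi in zip(W2, b2)]
    return W, b


def relu(v):
    return [max(z, 0.0) for z in v]


# Illustrative weights: 2 inputs -> 3 hidden units -> 1 output
W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]
b1 = [1.0, 0.0, -2.0]
W2 = [[1.0, -1.0, 2.0]]
b2 = [0.5]

x = [-1.0, 0.0]
hidden = affine(W1, b1, x)
stacked = affine(W2, b2, hidden)            # two layers, no activation
W, b = compose(W2, b2, W1, b1)
collapsed = affine(W, b, x)                 # one equivalent layer
with_relu = affine(W2, b2, relu(hidden))    # nonlinearity in between
```

Here `stacked` and `collapsed` agree for every input, while `with_relu` differs, so the hidden layer only adds expressive power once a nonlinearity sits between the layers.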

3. Activation functions

3.1 sigmoid function

The step function used by the perceptron outputs 1 if x is greater than 0 and 0 if x is less than 0. It jumps abruptly between the two values, so it is hard rather than smooth.

The sigmoid function, sigmoid(x) = 1 / (1 + exp(-x)), maps its input into the open interval (0, 1). It is a smooth curve, a soft counterpart of the step function.
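The contrast between the hard step and the soft sigmoid can be sketched in plain Python, together with the closed-form derivative sigmoid'(x) = sigmoid(x)(1 - sigmoid(x)). The function names are illustrative, and the finite-difference check is only a sanity test, not part of the original post.

```python
import math


def step(x):
    """Hard threshold: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def sigmoid(x):
    """Smooth squashing of x into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Closed-form derivative: s * (1 - s) with s = sigmoid(x)."""
    s = sigmoid(x)
    return s * (1.0 - s)


# Near zero the step function jumps, while sigmoid changes gradually.
near_zero = [(x, step(x), sigmoid(x)) for x in (-0.1, 0.1)]

# Sanity check of the derivative with a central finite difference.
x0, h = 1.5, 1e-5
numeric_grad = (sigmoid(x0 + h) - sigmoid(x0 - h)) / (2 * h)
analytic_grad = sigmoid_grad(x0)
```

The derivative peaks at 0.25 for x = 0 and shrinks toward 0 as |x| grows, which is the bell shape a plot of the sigmoid gradient shows.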

# sigmoid function
import sys

import torch
import matplotlib.pyplot as plt

sys.path.append("..")
import d2lzh_pytorch as d2l  # helper module from the book's repository, one directory up


def xyplot(x_vals, y_vals, name):
    """Plot y_vals against x_vals and label the y-axis as name(x)."""
    d2l.set_figsize(figsize=(5, 2.5))
    d2l.plt.plot(x_vals.detach().numpy(), y_vals.detach().numpy())
    d2l.plt.xlabel('x')
    d2l.plt.ylabel(name + '(x)')


x = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)
y = x.sigmoid()
xyplot(x, y, 'sigmoid')
plt.show()

# Derivative of sigmoid
# x.grad is None until the first backward pass, so there is nothing to clear here.
y.sum().backward()  # compute the derivative at every point of x
xyplot(x, x.grad, 'grad of sigmoid')
plt.show()

3.2 tanh function

import sys

import torch
import matplotlib.pyplot as plt

sys.path.append("..")
import d2lzh_pytorch as d2l  # helper module from the book's repository, one directory up


def xyplot(x_vals, y_vals, name):
    """Plot y_vals against x_vals and label the y-axis as name(x)."""
    d2l.set_figsize(figsize=(5, 2.5))
    d2l.plt.plot(x_vals.detach().numpy(), y_vals.detach().numpy())
    d2l.plt.xlabel('x')
    d2l.plt.ylabel(name + '(x)')


# tanh function
x = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)
y = x.tanh()
xyplot(x, y, 'tanh')
plt.show()

# Derivative of tanh
# x.grad is None until the first backward pass, so there is nothing to clear here.
y.sum().backward()  # compute the derivative at every point of x
xyplot(x, x.grad, 'grad of tanh')
plt.show()
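As a small supplement to the plots, tanh maps its input into (-1, 1) and can be written as a rescaled sigmoid, tanh(x) = 2 sigmoid(2x) - 1, with derivative 1 - tanh(x)^2. The plain-Python sketch below checks both identities numerically; the helper names are illustrative and not from the original post.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def tanh_via_sigmoid(x):
    """tanh expressed as a shifted and rescaled sigmoid."""
    return 2.0 * sigmoid(2.0 * x) - 1.0


def tanh_grad(x):
    """Closed-form derivative of tanh."""
    return 1.0 - math.tanh(x) ** 2


points = [-2.0, -0.5, 0.0, 0.5, 2.0]
# Largest disagreement between math.tanh and the sigmoid-based formula
max_gap = max(abs(math.tanh(x) - tanh_via_sigmoid(x)) for x in points)
grad_at_zero = tanh_grad(0.0)
```

The gap is at the level of floating-point rounding, and the derivative reaches its maximum of 1 at x = 0.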

3.3 ReLU function

import sys

import torch
import matplotlib.pyplot as plt

sys.path.append("..")
import d2lzh_pytorch as d2l  # helper module from the book's repository, one directory up


def xyplot(x_vals, y_vals, name):
    """Plot y_vals against x_vals and label the y-axis as name(x)."""
    d2l.set_figsize(figsize=(5, 2.5))
    d2l.plt.plot(x_vals.detach().numpy(), y_vals.detach().numpy())
    d2l.plt.xlabel('x')
    d2l.plt.ylabel(name + '(x)')


# ReLU function
# Use the relu method provided by PyTorch tensors to plot ReLU.
x = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)
y = x.relu()
xyplot(x, y, 'relu')
plt.show()

# When the input is negative, the derivative of ReLU is 0; when the input is
# positive, the derivative is 1. ReLU is not differentiable at 0, but we can
# take the derivative there to be 0. Now plot the derivative of ReLU.
# x.grad is None until the first backward pass, so there is nothing to clear here.
y.sum().backward()
xyplot(x, x.grad, 'grad of relu')
plt.show()
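The piecewise rule described in the comments above can also be written out directly. This is a plain-Python sketch with illustrative function names; choosing 0 as the derivative at x = 0 follows the convention stated in the text.

```python
def relu(x):
    """ReLU(x) = max(x, 0)."""
    return max(x, 0.0)


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise (0 chosen at x = 0)."""
    return 1.0 if x > 0 else 0.0


samples = [-2.0, 0.0, 3.0]
values = [relu(x) for x in samples]
grads = [relu_grad(x) for x in samples]
```

Because the derivative is exactly 1 for every positive input, the gradient passes through active units unchanged, whereas the sigmoid and tanh derivatives shrink toward 0 for large |x|.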

4. Multilayer perceptron

5. Summary

- A multilayer perceptron uses hidden layers and activation functions to obtain a nonlinear model.

- Commonly used activation functions are sigmoid, tanh, and ReLU.

- Softmax is used to handle multiclass classification.

- The hyperparameters are the number of hidden layers and the size of each hidden layer.
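The points above can be tied together in a minimal forward pass. This is a sketch under stated assumptions, not the original author's implementation: it uses plain Python lists instead of tensors, random Gaussian initial weights, ReLU in the hidden layers, and the hypothetical helpers `init_mlp` and `forward`. The list `layer_sizes` carries the hyperparameters: its length sets the number of hidden layers and its entries set their sizes.

```python
import math
import random


def relu(v):
    return [max(z, 0.0) for z in v]


def softmax(v):
    """Turn scores into probabilities; subtracting the max avoids overflow."""
    m = max(v)
    exps = [math.exp(z - m) for z in v]
    total = sum(exps)
    return [e / total for e in exps]


def init_mlp(layer_sizes, seed=0):
    """Create (W, b) pairs for consecutive layers; sizes are hyperparameters."""
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        W = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        b = [0.0] * n_out
        params.append((W, b))
    return params


def forward(params, x):
    """Affine layers with ReLU in between, softmax on the final scores."""
    h = x
    for i, (W, b) in enumerate(params):
        h = [sum(w * hi for w, hi in zip(row, h)) + bi for row, bi in zip(W, b)]
        if i < len(params) - 1:  # no ReLU after the output layer
            h = relu(h)
    return softmax(h)


# 4 input features, two hidden layers of 8 units each, 3 output classes
params = init_mlp([4, 8, 8, 3])
probs = forward(params, [0.5, -1.0, 2.0, 0.0])
```

The output `probs` has one entry per class and sums to 1; changing `[4, 8, 8, 3]` to, say, `[4, 16, 3]` gives a single wider hidden layer without touching the rest of the code.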

边栏推荐

猜你喜欢

如何理解APS系统的生产排程?

工厂的生产效益,MES系统如何提供?

You get download the installation and use of artifact

Vision Transformer | Arxiv 2205 - TRT-ViT 面向 TensorRT 的 Vision Transformer

(p36-p39) right value and right value reference, role and use of right value reference, derivation of undetermined reference type, and transfer of right value reference

Model Trick | CVPR 2022 Oral - Stochastic Backpropagation A Memory Efficient Strategy

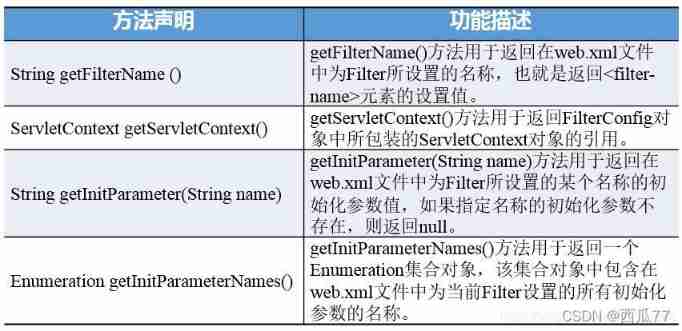

Servlet advanced

MinGW offline installation package (free, fool)

从AC5到AC6转型之路(1)——补救和准备

Final review of Discrete Mathematics (predicate logic, set, relation, function, graph, Euler graph and Hamiltonian graph)

随机推荐

(p25-p26) three details of non range based for loop and range based for loop

企业上MES系统的驱动力来自哪里?选型又该注意哪些问题?

MATLAB image processing -- image transformation correction second-order fitting

MYSQL中的调用存储过程,变量的定义,

Derivation of Poisson distribution

MYSQL中的触发器

APS究竟是什么系统呢?看完文章你就知道了

C语言printf输出整型格式符简单总结

What is an extension method- What are Extension Methods?

Installation series of ROS system (I): installation steps

智能制造的时代,企业如何进行数字化转型

Talk about the four basic concepts of database system

DUF:Deep Video Super-Resolution Network Using Dynamic Upsampling Filters ... Reading notes

Leetcode notes: Weekly contest 277

Map the world according to the data of each country (take the global epidemic as an example)

Easyexcel exports excel tables to the browser, and exports excel through postman test [introductory case]

2.2 linked list - Design linked list (leetcode 707)

OpenMP task 原理与实例

Model Trick | CVPR 2022 Oral - Stochastic Backpropagation A Memory Efficient Strategy

对企业来讲,MES设备管理究竟有何妙处?