当前位置:网站首页>[point cloud processing paper crazy reading classic version 11] - mining point cloud local structures by kernel correlation and graph pooling

[point cloud processing paper crazy reading classic version 11] - mining point cloud local structures by kernel correlation and graph pooling

2022-07-03 09:09:00 【LingbinBu】

KCNet: Mining Point Cloud Local Structures by Kernel Correlation and Graph Pooling

Abstract

- problem : The disorder of point clouds (unorder) It is still very challenging for semantic learning , In existing methods ,PointNet Good results are obtained by learning directly on the point set , however PointNet Local neighborhood information of the point cloud is not fully utilized , The local neighborhood information of point cloud contains fine-grained structural information , It can contribute to better semantic learning .

- Method : Two new operations are proposed , In order to improve the PointNet Explore the efficiency of local structures .

- Technical details :

① The first operation focuses on local 3D The geometric (geometric) structure , take point-set kernel Define as a group of learnable 3D points, these points Respond to a set of adjacent points , adopt kernel correlation Measure the geometric relationship between these adjacent points

② The second operation utilizes local high-dimensional features (feature) structure , Through point cloud 3D The position is calculated nearest-neighbor-graph, And then in nearest-neighbor-graph Feature aggregation recursively on (feature aggregation) Implement the operation . - Code :

① http://www.merl.com/research/license#KCNet

② https://github.com/ftdlyc/KCNet_Pytorch PyTorch edition It may not work , It's part of the code

introduction

- PointNet Directly aggregate the features of all points into global features , indicate PointNet Local structure mining that does not make full use of points fine-grained patterns: Every point of MLP Output only for 3D In a particular nonlinear partition of space 3D Rough coding of points .

- If MLP It's not just about “wether” Point of existence for coding , It can also be used for nonlinear 3D The points existing in the space partition are determined “what type of”( for example , Corner and plane 、 Convex and concave points, etc ) Encoding , Is the more distinctive expression we expect .

- such “type” Information must come from 3D Learning in the local domain of the point on the surface of the target .

- Main contributions :

- kernel correlation layer ——> Mining local geometry

- graph-based pooling layer ——> Mining local feature structure

Method

Learning on Local Geometric Structure

In this paper , With a point cloud local neighborhood As source, With learnable kernel( A set of points ) As reference, Thus, it represents the local structure / The shape of the type. In the point cloud registration task ,source and reference The points in the are fixed , But this article allows reference The point in changes , Through the study ( Back propagation ) The way to go more “ Fit ” shape . Unlike point cloud registration , We want to learn in a non transformational way reference, Not through optimal transformation . You can learn in this way kernel Point and convolution kernel It's the same , It responds to each point in the adjacent area , And capture local geometry in this perception domain , This perception domain is through kernel Functions and kernel wedth Decisive . With this configuration , The process of learning can be seen as finding a set of local geometric structures that can encode the most efficient and useful reference spot , And combine other parameters in the network to obtain the best learning performance .

To be specific , Definition with M M M One can learn point-set kernel κ \boldsymbol{\kappa} κ And with N N N Current in point cloud of points anchor point x i \mathbf{x}_{i} xi Between kernel correlation (KC) by :

K C ( κ , x i ) = 1 ∣ N ( i ) ∣ ∑ m = 1 M ∑ n ∈ N ( i ) K σ ( κ m , x n − x i ) \mathrm{KC}\left(\boldsymbol{\kappa}, \mathbf{x}_{i}\right)=\frac{1}{|\mathcal{N}(i)|} \sum_{m=1}^{M} \sum_{n \in \mathcal{N}(i)} \mathrm{K}_{\sigma}\left(\boldsymbol{\kappa}_{m}, \mathbf{x}_{n}-\mathbf{x}_{i}\right) KC(κ,xi)=∣N(i)∣1m=1∑Mn∈N(i)∑Kσ(κm,xn−xi)

among κ m \boldsymbol{\kappa}_{m} κm yes kernel No m m m Learning points , N ( i ) \mathcal{N}(i) N(i) yes anchor point x i \mathbf{x}_{i} xi Neighborhood index of , x n \mathbf{x}_{n} xn yes x i \mathbf{x}_{i} xi One of the neighbor point. K σ ( ⋅ , ⋅ ) : ℜ D × ℜ D → \mathrm{K}_{\sigma}(\cdot, \cdot): \Re^{D} \times \Re^{D} \rightarrow Kσ(⋅,⋅):ℜD×ℜD→ ℜ \Re ℜ Is arbitrary kernel function . In order to effectively store the local neighborhood of a point , We take each point as a vertex , Only adjacent vertices are connected by edges , So as to construct a KNNG.

Without losing generality , This article chooses Gaussian kernel:

K σ ( k , δ ) = exp ( − ∥ k − δ ∥ 2 2 σ 2 ) K_{\sigma}(\mathbf{k}, \boldsymbol{\delta})=\exp \left(-\frac{\|\mathbf{k}-\boldsymbol{\delta}\|^{2}}{2 \sigma^{2}}\right) Kσ(k,δ)=exp(−2σ2∥k−δ∥2)

among ∥ ⋅ ∥ \|\cdot\| ∥⋅∥ Represents the Euclidean distance between two points , σ \sigma σ yes kernel Width , Control the influence of the distance between points .Gaussian kernel A good property of is that the distance between two points tends to be an exponential function , There is also one from each kernel Point to anchor point Of neighbor points Between soft-assignment( Derivable ).KC Yes kernel points and neighboring points Code the distance between , And increase the similarity of the two groups of point clouds in shape . It's worth noting that kernel width, Too big or too small will lead to unsatisfactory results , You can choose by experiment .

Given :

- Network loss function L \mathcal{L} L

- The loss function is relative to each anchor point x i \mathbf{x}_{i} xi Of K C \mathrm{KC} KC Derivative of back propagation from the top d i = ∂ L ∂ K C ( κ , x i ) d_{i}=\frac{\partial \mathcal{L}}{\partial \mathrm{KC}\left(\boldsymbol{\kappa}, \mathbf{x}_{i}\right)} di=∂KC(κ,xi)∂L

This article provides each kernel point κ m \kappa_{m} κm Back propagation formula of :

∂ L ∂ κ m = ∑ i = 1 N α i d i [ ∑ n ∈ N ( i ) v m , i , n exp ( − ∥ v m , i , n ∥ 2 2 σ 2 ) ] \frac{\partial \mathcal{L}}{\partial \boldsymbol{\kappa}_{m}}=\sum_{i=1}^{N} \alpha_{i} d_{i}\left[\sum_{n \in \mathcal{N}(i)} \mathbf{v}_{m, i, n} \exp \left(-\frac{\left\|\mathbf{v}_{m, i, n}\right\|^{2}}{2 \sigma^{2}}\right)\right] ∂κm∂L=i=1∑Nαidi⎣⎡n∈N(i)∑vm,i,nexp(−2σ2∥vm,i,n∥2)⎦⎤

Middle point x i x_{i} xi The normalization constant of is α i = − 1 ∣ N ( i ) ∣ σ 2 \alpha_{i}=\frac{-1}{|\mathcal{N}(i)| \sigma^{2}} αi=∣N(i)∣σ2−1, The local deviation vector is v m , i , n = κ m + x i − x n \mathbf{v}_{m, i, n}=\boldsymbol{\kappa}_{m}+\mathbf{x}_{i}-\mathbf{x}_{n} vm,i,n=κm+xi−xn.

Learning on Local Feature Structure

K C \mathrm{KC} KC It only acts on the front end of the network to extract local geometry . We also talked about before , By construction KNNG To get the information of adjacent points , This graph It can also be used to mine local feature structures in deeper layers . Affected convolution network can locally aggregate features , And through multiple pooling The layer gradually increases the inspiration of the receptive field , We walked along 3D neighborhood graph Edge recursively propagates and aggregates features , In order to extract local features in the upper layer .

One of our key ideas is neighbor points They tend to have similar geometric structures , So by neighborhood graph Propagation features can help learn more robust parts patterns. It is worth noting that , We specifically avoid changes in these layers above neighborhood graph Structure .

Make X ∈ ℜ N × K \mathbf{X} \in \Re^{N \times K} X∈ℜN×K Express graph pooling The input of , that KNNG Have adjacency matrix W ∈ \mathbf{W} \in W∈ ℜ N × N \Re^{N \times N} ℜN×N, If the vertex i i i And vertex j j j There is an edge between , that W ( i , j ) = 1 \mathbf{W}(i, j)=1 W(i,j)=1, otherwise W ( i , j ) = 0 \mathbf{W}(i, j)=0 W(i,j)=0. Intuitively , Forming a local surface neighboring points Usually share similar features pattern. therefore , adopt graph pooling The operation aggregates features in the neighborhood of each point :

Y = P X \mathbf{Y}=\mathbf{P X} Y=PX

pooling The operation can be average pooling, It can also be max pooling.

Graph average pooling By using P ∈ ℜ N × N \mathbf{P} \in \Re^{N \times N} P∈ℜN×N As a normalized adjacency matrix, average all features in the neighborhood of a point :

P = D − 1 W , \mathbf{P}=\mathbf{D}^{-1} \mathbf{W}, P=D−1W,

among D ∈ ℜ N × N \mathbf{D} \in \Re^{N \times N} D∈ℜN×N Is the degree matrix , The first ( i , j ) (i, j) (i,j) individual entry d i , j d_{i, j} di,j Defined as :

d i , j = { deg ( i ) , if i = j 0 , otherwise d_{i, j}= \begin{cases}\operatorname{deg}(i), & \text { if } \quad i=j \\ 0, & \text { otherwise }\end{cases} di,j={ deg(i),0, if i=j otherwise

among deg ( i ) \operatorname{deg}(i) deg(i) Is the vertex. i i i Degree , Represents all connected to vertices i i i The number of vertices of .

Graph max pooling(GM) Take the largest feature in the neighborhood of each vertex , stay K K K Operate independently on three dimensions . So output Y \mathbf{Y} Y Of the ( i , k ) (i, k) (i,k) individual entry by :

Y ( i , k ) = max n ∈ N ( i ) X ( n , k ) \mathbf{Y}(i, k)=\max _{n \in \mathcal{N}(i)} \mathbf{X}(n, k) Y(i,k)=n∈N(i)maxX(n,k)

among N ( i ) \mathcal{N}(i) N(i) Represent point set X i \mathbf{X}_i Xi Index of neighbors , from W \mathbf{W} W It is calculated that .

experiment

Shape Classification

Network Configuration

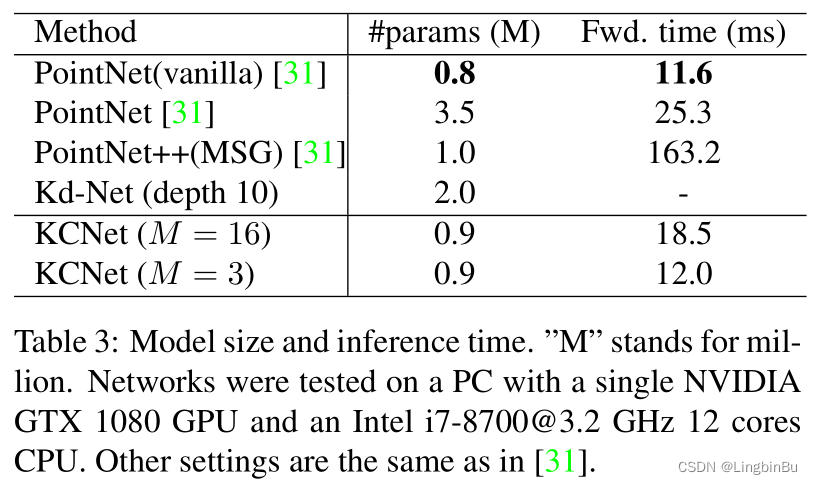

As shown in the figure above ,KCNet share 9 layer . It is worth noting that ,KNN-Group Don't take part in training , It can even be calculated in advance , Its function is to construct adjacent points and feature aggregation , Play a structural role .

- first floor ,kernel correlation, Take point coordinates as input , The output is local structural features , And then splice with the point coordinates .

- Then the feature passes through the first two MLP Carry out feature learning point by point .

- next graph pooling layer Aggregate the output point-by-point features into more robust local structural features , And the second two MLP The output is spliced .

The rest of the structure follows PointNet Very similar , except 1) Don't use Batchnorm,ReLU Used behind each full connection ;2)Dropout For the last full connection layer , Value is set to 0.5;

stay kernel computation and graph max pooling Use in 16-NN graph,kernel The number of L L L by 32, Every kernel There are 16 A little bit , The initial value is set to [ − 0.2 , 0.2 ] [-0.2, 0.2] [−0.2,0.2],kernel width σ = 0.005 \sigma=0.005 σ=0.005.

Results

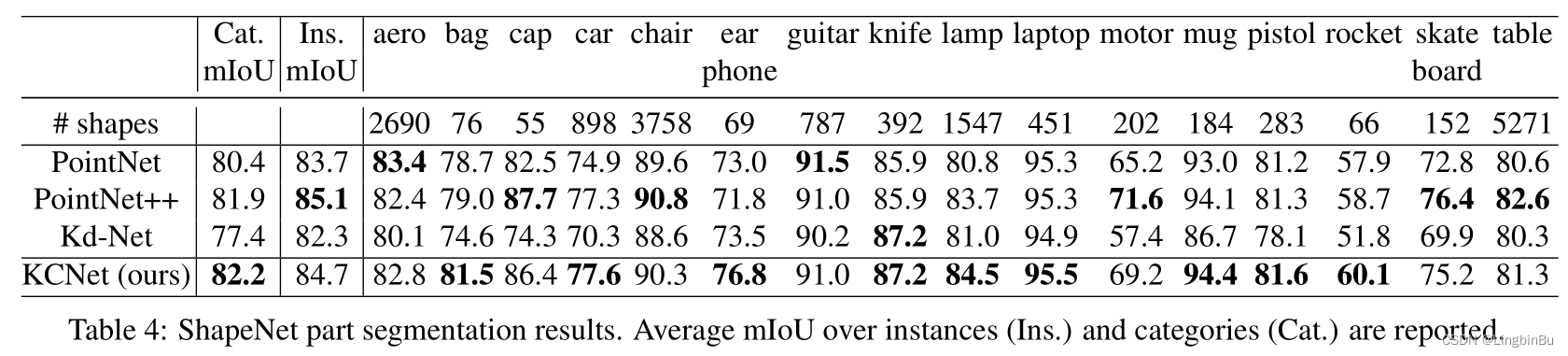

Part Segmentation

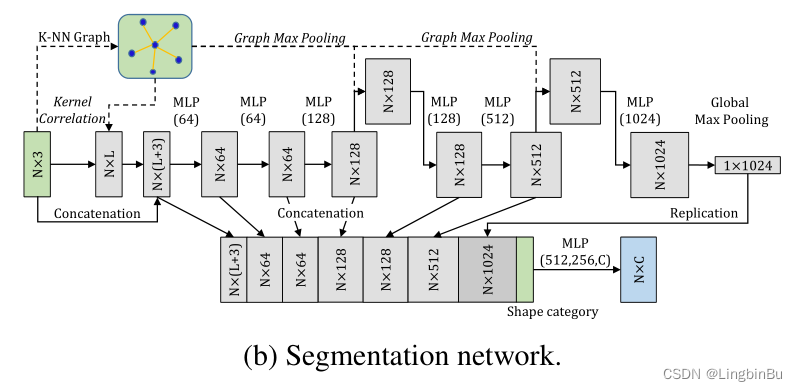

Network Configuration

Semantic Web has 10 layer , The local features captured by the features of different layers will be spliced with the global features and shape information . It doesn't use Batchnorm,Dropout Layer is used for full connection layer , The value is 0.3.18-NN graph be used for kernel computation and graph max pooling.kernel The quantity of is 16, Every kernel There is 18 A little bit , The initial value is [ − 0.2 , 0.2 ] [-0.2, 0.2] [−0.2,0.2] Between ,kernel width σ = 0.005 \sigma=0.005 σ=0.005.

Results

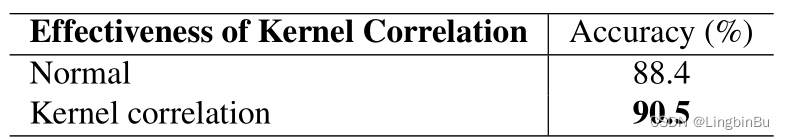

Ablation Study

Effectiveness of Kernel Correlation

Symmetric Functions

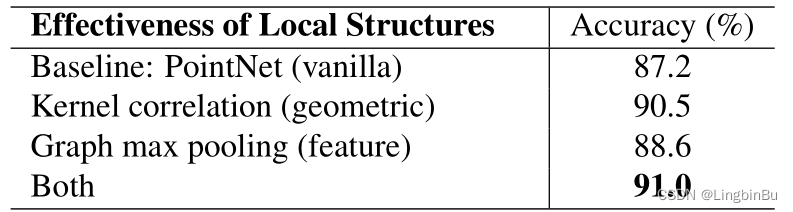

Effectiveness of Local Structures

Choosing Hyper-parameters

Robustness Test

边栏推荐

- LeetCode 515. 在每个树行中找最大值

- Apache startup failed phpstudy Apache startup failed

- 20220630学习打卡

- With low code prospect, jnpf is flexible and easy to use, and uses intelligence to define a new office mode

- PHP uses foreach to get a value in a two-dimensional associative array (with instances)

- Introduction to the basic application and skills of QT

- Using variables in sed command

- What is an excellent fast development framework like?

- Sending and receiving of request parameters

- Excel is not as good as jnpf form for 3 minutes in an hour. Leaders must praise it when making reports like this!

猜你喜欢

LeetCode 438. Find all letter ectopic words in the string

拯救剧荒,程序员最爱看的高分美剧TOP10

Discussion on enterprise informatization construction

【点云处理之论文狂读前沿版8】—— Pointview-GCN: 3D Shape Classification With Multi-View Point Clouds

How to check whether the disk is in guid format (GPT) or MBR format? Judge whether UEFI mode starts or legacy mode starts?

Digital management medium + low code, jnpf opens a new engine for enterprise digital transformation

AcWing 785. Quick sort (template)

AcWing 787. 归并排序(模板)

一个优秀速开发框架是什么样的?

dried food! What problems will the intelligent management of retail industry encounter? It is enough to understand this article

随机推荐

Noip 2002 popularity group selection number

低代码前景可期,JNPF灵活易用,用智能定义新型办公模式

Severity code description the project file line prohibits the display of status error c2440 "initialization": unable to convert from "const char [31]" to "char *"

LeetCode 513. Find the value in the lower left corner of the tree

Methods of checking ports according to processes and checking processes according to ports

<, < <,>, > > Introduction in shell

On a un nom en commun, maître XX.

PHP uses foreach to get a value in a two-dimensional associative array (with instances)

Phpstudy 80 port occupied W10 system

LeetCode 241. 为运算表达式设计优先级

Find the combination number acwing 886 Find the combination number II

TP5 multi condition sorting

状态压缩DP AcWing 91. 最短Hamilton路径

Using DLV to analyze the high CPU consumption of golang process

LeetCode 871. Minimum refueling times

The method for win10 system to enter the control panel is as follows:

Format - C language project sub file

LeetCode 324. 摆动排序 II

Wonderful review | i/o extended 2022 activity dry goods sharing

网络安全必会的基础知识