
Model-Free Prediction

2022-07-07 00:26:00 【Evergreen AAS】

The previous article introduced dynamic programming, the general model-based approach. This article covers the prediction problem in the model-free setting, namely “Estimate the value function of an unknown MDP”.

- Model-based: the MDP is known, that is, the transition matrix and the reward function are known

- Model-free: the MDP is unknown

Monte Carlo learning

Monte Carlo methods (MC), also called Monte Carlo simulation, use random numbers (or, more commonly, pseudo-random numbers) to solve computational problems. In essence, they generate observations by acting as randomly as possible and then characterize the target system from those observations after the fact.

In the model-free setting, reinforcement learning uses MC to estimate the value function. Its characteristics are:

- MC learns from complete episodes (no bootstrapping)

- MC estimates the value as the mean return, that is, value = mean(return)

- MC applies only to episodic MDPs (MDPs whose episodes terminate)

First-Visit Monte Carlo policy evaluation

First-Visit Monte-Carlo Policy Evaluation:

To evaluate the value function v_{\pi}(s) of state s under a given policy π: within each episode, the first time state s is visited (at time step t), increment the counter N(s) ← N(s) + 1 and add the return to the running total S(s) ← S(s) + G_t. When all episodes have finished, the value of state s is estimated as V(s) = \frac{S(s)}{N(s)}. By the law of large numbers, V(s) → v_{\pi}(s) \text{ as } N(s) → \infty

Every-Visit Monte Carlo policy evaluation

Every-Visit Monte-Carlo Policy Evaluation:

To evaluate the value function v_{\pi}(s) of state s under a given policy π: within each episode, every time state s is visited (at time step t), increment the counter N(s) ← N(s) + 1 and add the return to the running total S(s) ← S(s) + G_t. When all episodes have finished, the value of state s is estimated as V(s) = \frac{S(s)}{N(s)}. By the law of large numbers, V(s) → v_{\pi}(s) \text{ as } N(s) → \infty
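The two evaluation schemes differ only in whether repeat visits within an episode are counted. The following is a minimal sketch, assuming each episode is given as a list of (state, reward) pairs in which the reward is R_{t+1}; the function name `mc_policy_evaluation` and the toy episodes are illustrative and not from the original article.

```python
from collections import defaultdict

def mc_policy_evaluation(episodes, gamma=1.0, first_visit=True):
    """Estimate V(s) = S(s) / N(s) from complete episodes.

    episodes: list of episodes, each a list of (state, reward) pairs,
    where reward is R_{t+1}, received after leaving state S_t
    (this data layout is an assumption for the sketch).
    """
    N = defaultdict(int)    # visit counter N(s)
    S = defaultdict(float)  # accumulated return S(s)
    for episode in episodes:
        # Compute the return G_t for every time step by scanning backwards.
        returns = [0.0] * len(episode)
        G = 0.0
        for t in range(len(episode) - 1, -1, -1):
            G = episode[t][1] + gamma * G
            returns[t] = G
        seen = set()
        for t, (state, _) in enumerate(episode):
            if first_visit and state in seen:
                continue  # first-visit: skip repeat visits in this episode
            seen.add(state)
            N[state] += 1
            S[state] += returns[t]
    return {s: S[s] / N[s] for s in N}

# Hypothetical toy data: two short episodes over states "A" and "B".
episodes = [
    [("A", 1.0), ("B", 0.0), ("A", 2.0)],
    [("B", 1.0), ("A", 0.0)],
]
v_first = mc_policy_evaluation(episodes, first_visit=True)
v_every = mc_policy_evaluation(episodes, first_visit=False)
```

In this toy data, state A is visited twice in the first episode, so the two schemes average over different sets of returns for A while agreeing on B.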

Incremental Monte-Carlo

Incremental averaging: the means μ_1, μ_2, … of a sequence x_1, x_2, … can be computed incrementally:

\mu_k = \frac{1}{k}\sum_{j=1}^{k} x_j = \mu_{k-1} + \frac{1}{k}(x_k - \mu_{k-1})

From the formula above we obtain the incremental MC update: after each episode ends, update V(s) incrementally. For each state S_t with corresponding return G_t:

N(S_t) ← N(S_t) + 1 \\ V(S_t) ← V(S_t) + \frac{1}{N(S_t)}(G_t - V(S_t))

In non-stationary problems, the update can instead use a constant step size α, so that older episodes are gradually forgotten:

V(S_t) ← V(S_t) + \alpha (G_t - V(S_t))
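To make the two update rules concrete, here is a small sketch applied to a plain list of returns for a single state. The helper names `incremental_mean` and `constant_alpha_mean` and the sample returns are assumptions chosen for illustration.

```python
def incremental_mean(values):
    """Running mean using mu_k = mu_{k-1} + (x_k - mu_{k-1}) / k."""
    mu = 0.0
    for k, x in enumerate(values, start=1):
        mu += (x - mu) / k
    return mu

def constant_alpha_mean(values, alpha, initial=0.0):
    """Exponentially weighted estimate using V <- V + alpha * (x - V)."""
    v = initial
    for x in values:
        v += alpha * (x - v)
    return v

# Hypothetical returns G_t observed for one state over four episodes.
returns = [3.0, 0.0, 2.0, 5.0]
batch_mean = sum(returns) / len(returns)
running = incremental_mean(returns)          # matches batch_mean
recency_weighted = constant_alpha_mean(returns, alpha=0.5)
```

The 1/N step size reproduces the batch mean exactly, whereas the constant step size weights recent returns more heavily, which is what makes it suitable when the target drifts over time.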

Temporal-difference learning

Temporal-difference methods (TD) have the following characteristics:

- TD can learn from incomplete episodes by bootstrapping

- TD updates a guess towards a guess

TD(λ)

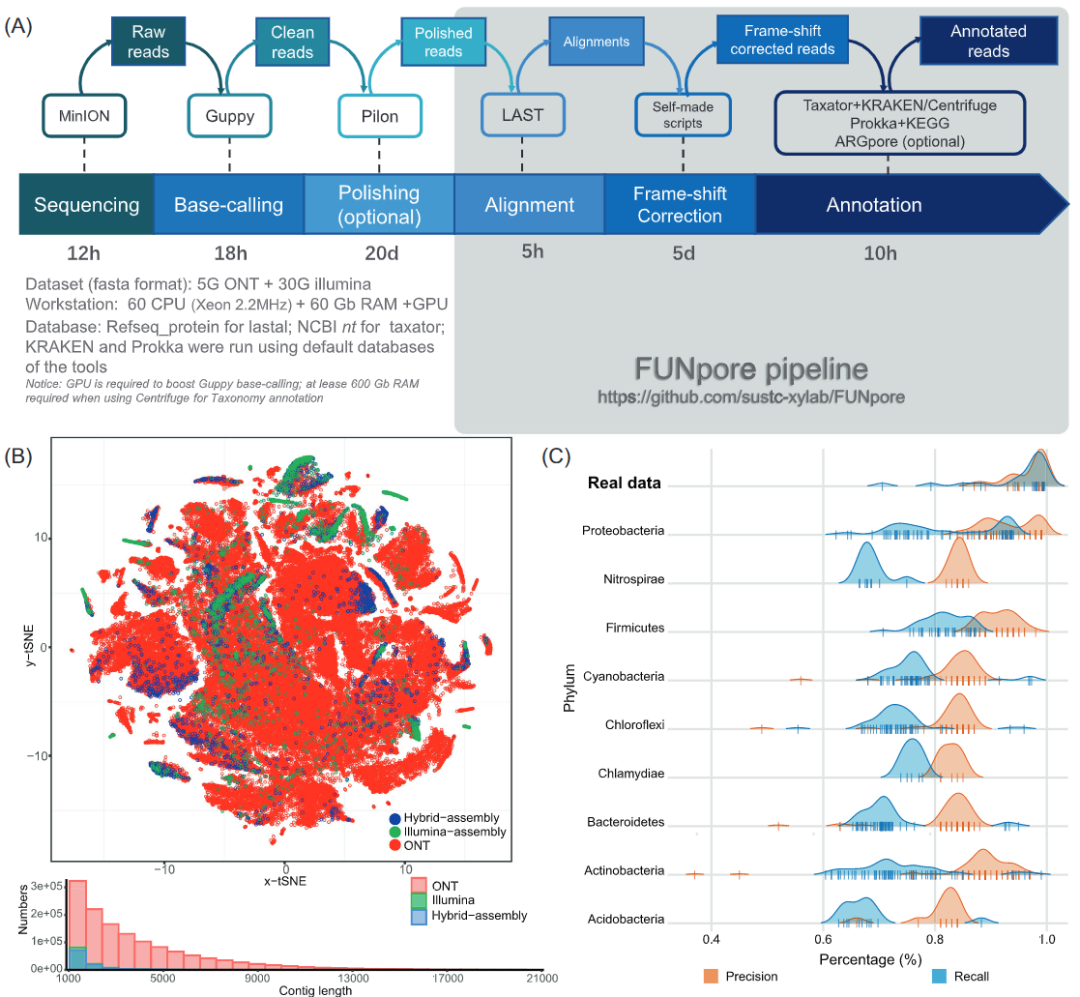

The figure below shows the TD target for different values of n:

As the figure shows, when n extends to the end of the episode, the target spans a whole episode and the method becomes MC. From this point of view, MC is a special case of TD.

n-step Return

The n-step returns can be written as follows:

n=1: G_{t}^{(1)} = R_{t+1} + \gamma V(S_{t+1})

n=2: G_{t}^{(2)} = R_{t+1} + \gamma R_{t+2} + \gamma^2 V(S_{t+2})

…

n=∞: G_{t}^{(\infty)} = R_{t+1} + \gamma R_{t+2} + … + \gamma^{T-1} R_T

In general, the n-step return is G_{t}^{(n)} = R_{t+1} + \gamma R_{t+2} + … + \gamma^{n-1} R_{t+n} + \gamma^{n}V(S_{t+n})

n-step TD Update formula :

V(S_t) ← V(S_t) + \alpha (G_t^{(n)} - V(S_t))
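The n-step return can be sketched in a few lines. The layout below is an assumption for illustration: `rewards[k]` holds R_{k+1}, `values[k]` holds V(S_k), the episode ends after `len(rewards)` steps, and the terminal state has value 0, so no bootstrap term is added once n reaches the end of the episode.

```python
def n_step_return(rewards, values, t, n, gamma):
    """Compute G_t^{(n)} for one recorded episode.

    rewards[k] is R_{k+1}; values[k] is the current estimate V(S_k).
    The terminal state is assumed to have value 0.
    """
    T = len(rewards)
    end = min(t + n, T)
    # Discounted sum of the rewards R_{t+1}, ..., R_{end}.
    G = sum(gamma ** (k - t) * rewards[k] for k in range(t, end))
    if end < T:
        # Bootstrap from V(S_{t+n}) only if S_{t+n} is not terminal.
        G += gamma ** n * values[t + n]
    return G

# Hypothetical three-step episode and value estimates.
rewards = [1.0, 2.0, 3.0]
values = [0.5, 1.0, 1.5]
g1 = n_step_return(rewards, values, t=0, n=1, gamma=0.9)   # TD(0) target
g2 = n_step_return(rewards, values, t=0, n=2, gamma=0.9)
g_mc = n_step_return(rewards, values, t=0, n=10, gamma=0.9)  # full MC return
```

Any n that reaches or passes the end of the episode gives the same Monte Carlo return, which matches the earlier observation that MC is the limiting case of n-step TD.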

Forward View of TD(λ)

Can we combine all the n-step returns? Yes. The combined return is called the λ-return, where λ is the weighting factor introduced to combine the different n-step returns.

λ-return:

G_t^{\lambda} = (1-\lambda)\sum_{n=1}^{\infty}\lambda^{n-1}G_t^{(n)}

Forward-view TD(λ):

V(S_t) ← V(S_t) + \alpha\Bigl(G_t^{\lambda} - V(S_t)\Bigr)

The weight that TD(λ) assigns to the n-step return is (1-\lambda)\lambda^{n-1}. The weights are distributed as follows:

Forward-view TD(λ) Characteristics :

- Update value function towards the λ-return

- Forward-view looks into the future to compute G_t^{\lambda}

- Like MC, can only be computed from complete episodes
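For a finite episode, the infinite sum in the λ-return can be evaluated exactly: every n-step return that reaches the end of the episode equals the Monte Carlo return, so those terms share the remaining weight \lambda^{T-t-1}. The sketch below relies on that standard truncation; the data layout (`rewards[k]` is R_{k+1}, `values[k]` is V(S_k), terminal value 0) and the function names are assumptions for illustration.

```python
def n_step_return(rewards, values, t, n, gamma):
    """G_t^{(n)}; rewards[k] is R_{k+1}, values[k] is V(S_k)."""
    T = len(rewards)
    end = min(t + n, T)
    G = sum(gamma ** (k - t) * rewards[k] for k in range(t, end))
    if end < T:
        G += gamma ** n * values[t + n]  # bootstrap from a non-terminal state
    return G

def lambda_return(rewards, values, t, gamma, lam):
    """G_t^lambda for an episode that terminates after len(rewards) steps."""
    steps_left = len(rewards) - t
    G_lam = 0.0
    # n-step returns that still bootstrap get weight (1 - lam) * lam^(n-1).
    for n in range(1, steps_left):
        weight = (1 - lam) * lam ** (n - 1)
        G_lam += weight * n_step_return(rewards, values, t, n, gamma)
    # All longer returns equal the MC return and share weight lam^(steps_left-1).
    G_lam += lam ** (steps_left - 1) * n_step_return(
        rewards, values, t, steps_left, gamma
    )
    return G_lam

# Hypothetical three-step episode and value estimates.
rewards = [1.0, 2.0, 3.0]
values = [0.5, 1.0, 1.5]
g_half = lambda_return(rewards, values, t=0, gamma=0.9, lam=0.5)
g_td0 = lambda_return(rewards, values, t=0, gamma=0.9, lam=0.0)
g_mc = lambda_return(rewards, values, t=0, gamma=0.9, lam=1.0)
```

Setting λ = 0 recovers the one-step TD target and λ = 1 recovers the Monte Carlo return, so λ interpolates between the two.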

Backward View TD(λ)

- Forward view provides theory

- Backward view provides mechanism

- Update online, every step, from incomplete sequences

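TD(λ) with eligibility traces:

\delta_t = R_{t+1} + \gamma V(S_{t+1}) - V(S_t) \\ V(s) ← V(s) + \alpha \delta_t E_t(s)

where \delta_t is the TD error and E_t(s) is the eligibility trace.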

Eligibility traces

In essence, an eligibility trace assigns more credit (weight) to states that were visited frequently and recently.

The figure below illustrates an eligibility trace:

One result about TD(λ):

The sum of offline updates is identical for forward-view and backward-view TD(λ).
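As a rough illustration of the backward-view mechanism, the sketch below runs one episode of online tabular TD(λ). It assumes the standard accumulating trace rule, E_t(s) = \gamma\lambda E_{t-1}(s) + 1(S_t = s), which is not spelled out in the text above; the function name and the (state, reward, next_state, done) transition layout are also assumptions.

```python
from collections import defaultdict

def td_lambda_episode(V, transitions, alpha, gamma, lam):
    """Online backward-view TD(lambda) over one episode (accumulating traces).

    V: defaultdict(float) mapping state -> value, updated in place.
    transitions: list of (state, reward, next_state, done) tuples.
    """
    E = defaultdict(float)  # eligibility trace E(s), reset each episode
    for state, reward, next_state, done in transitions:
        v_next = 0.0 if done else V[next_state]  # terminal value is 0
        delta = reward + gamma * v_next - V[state]  # TD error
        for s in list(E):
            E[s] *= gamma * lam  # decay every existing trace
        E[state] += 1.0          # bump the trace of the visited state
        for s, e in E.items():
            V[s] += alpha * delta * e  # credit states in proportion to trace
    return V

# Hypothetical two-step episode: A -> B -> terminal, reward 1 at the end.
episode = [("A", 0.0, "B", False), ("B", 1.0, "end", True)]
V_lam1 = td_lambda_episode(defaultdict(float), episode, alpha=0.5, gamma=1.0, lam=1.0)
V_lam0 = td_lambda_episode(defaultdict(float), episode, alpha=0.5, gamma=1.0, lam=0.0)
```

With λ = 1 the final reward is credited back to state A through its trace, whereas with λ = 0 only the most recently visited state B is updated, as in TD(0).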

This section does not go into further depth. Interested readers can consult Sutton's book and David Silver's lectures.

Monte Carlo learning vs. temporal-difference learning

Similarities and differences between MC and TD

Similarity: both learn the value function v_{\pi} of a given policy π online from experience.

Differences:

- Incremental every-visit Monte-Carlo: update V(S_t) toward the actual return G_t

V(S_t) ← V(S_t) + \alpha (\textcolor{Red}{G_t} - V(S_t))

- Simplest temporal-difference learning algorithm: TD(0)

- Update V(S_t) toward the estimated return \color{Red}{R_{t+1} + \gamma V(S_{t+1})}

- \color{Red}{R_{t+1} + \gamma V(S_{t+1})} is called the TD target

- \color{Red}{R_{t+1} + \gamma V(S_{t+1})} - V(S_{t}) is called the TD error
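The two updates differ only in the target they move toward. A minimal side-by-side sketch, assuming a tabular value function stored in a dict; the function names and the numbers are illustrative.

```python
def mc_update(V, state, G, alpha):
    """Constant-alpha MC: move V(S_t) toward the actual return G_t."""
    V[state] += alpha * (G - V[state])

def td0_update(V, state, reward, next_state, alpha, gamma, done=False):
    """TD(0): move V(S_t) toward the TD target R_{t+1} + gamma * V(S_{t+1})."""
    # The terminal state is assumed to have value 0.
    td_target = reward + (0.0 if done else gamma * V[next_state])
    td_error = td_target - V[state]
    V[state] += alpha * td_error
    return td_error

# Hypothetical values: one TD(0) step from A to B with reward 0.5.
V = {"A": 0.0, "B": 1.0}
error = td0_update(V, "A", reward=0.5, next_state="B", alpha=0.1, gamma=0.9)

# The MC update needs the full return, available only after the episode ends.
V_mc = {"A": 0.0}
mc_update(V_mc, "A", G=2.0, alpha=0.1)
```

The TD(0) update can be applied as soon as a single transition is observed, while the MC update has to wait for the complete return.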

The Drive Home example in the figure below illustrates the difference: MC can only revise its prediction of the travel time after arriving home, whereas TD adjusts its prediction at every step according to what actually happens.

Advantages and disadvantages of MC and TD

Learning style

- TD can learn before knowing the final outcome (as in the example above)

- TD can learn online after every step

- MC must wait until end of episode before return is known

- TD can learn without the final outcome (for example, in infinite or continuing MDPs)

- TD can learn from incomplete sequences

- MC can only learn from complete sequences

- TD works in continuing (non-terminating) environments

- MC only works for episodic (terminating) environments

Variance and bias

- MC has high variance, zero bias

- Good convergence properties

- Not very sensitive to initial value

- Very simple to understand and use

- TD has low variance, some bias

- Usually more efficient than MC

- TD(0) converges to v_{\pi}(s)

- More sensitive to initial value

Explanation of the bias and variance of MC and TD:

- The MC update uses the actual return G_t = R_{t+1} + \gamma R_{t+2} + … + \gamma^{T-1}R_{T}, which is an unbiased estimate of v_{\pi}(S_t).

- The true TD target R_{t+1} + \gamma v_{\pi}(S_{t+1}) is also an unbiased estimate of v_{\pi}(S_t). However, the TD target actually used in the update, R_{t+1} + \gamma V(S_{t+1}), is a biased estimate of v_{\pi}(S_t).

- The TD target has lower variance than the return:

- The return depends on many random actions, transitions, and rewards

- The TD target depends on only one random action, transition, and reward

Markov property

- TD exploits Markov property

- Usually more efficient in Markov environments

- MC does not exploit Markov property

- Usually more effective in non-Markov environments

DP, MC, and TD(0)

First, the backup trees give an intuitive picture of how the three differ.

- DP backup tree: full-width, one-step backup

- MC backup tree: complete episode

- TD(0) backup tree: single step

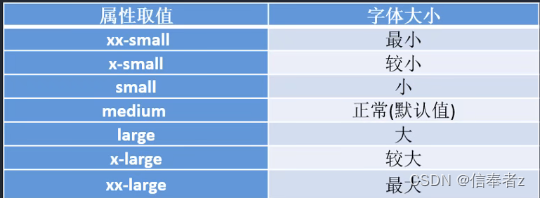

Bootstrapping vs. Sampling

Bootstrapping: the update is based on an estimated value

- DP bootstraps

- MC does not bootstrap

- TD bootstraps

Sampling: the update uses samples to approximate an expectation

- DP does not sample (model-based methods do not need samples)

- MC samples (model-free methods need samples)

- TD samples (model-free methods need samples)

The figure below shows how several basic RL methods differ:

边栏推荐

- The way of intelligent operation and maintenance application, bid farewell to the crisis of enterprise digital transformation

- 从外企离开,我才知道什么叫尊重跟合规…

- MIT 6.824 - Raft学生指南

- 基于SSM框架的文章管理系统

- Clipboard management tool paste Chinese version

- pinia 模块划分

- pytest多进程/多线程执行测试用例

- The programmer resigned and was sentenced to 10 months for deleting the code. Jingdong came home and said that it took 30000 to restore the database. Netizen: This is really a revenge

- 2022/2/10 summary

- Typescript incremental compilation

猜你喜欢

1000 words selected - interface test basis

37頁數字鄉村振興智慧農業整體規劃建設方案

Imeta | Chen Chengjie / Xia Rui of South China Agricultural University released a simple method of constructing Circos map by tbtools

Jenkins' user credentials plug-in installation

DAY THREE

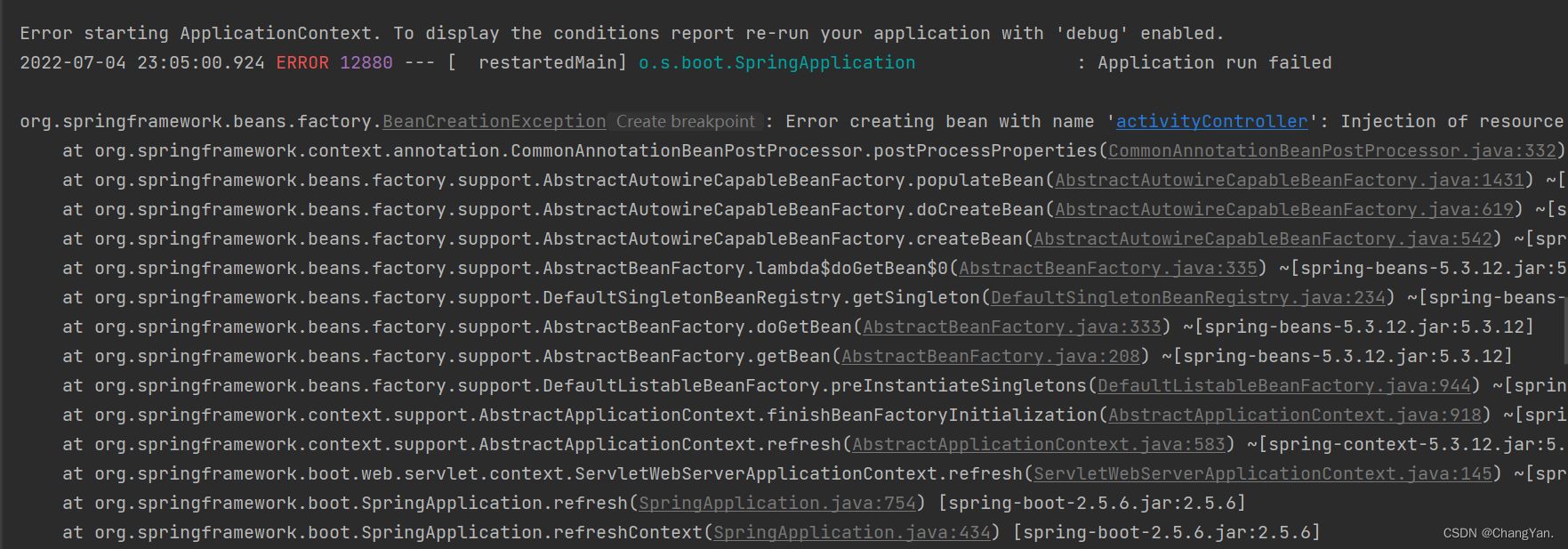

@TableId can‘t more than one in Class: “com.example.CloseContactSearcher.entity.Activity“.

17、 MySQL - high availability + read / write separation + gtid + semi synchronous master-slave replication cluster

Devops can help reduce technology debt in ten ways

![[automated testing framework] what you need to know about unittest](/img/4d/0f0e0a67ec41e41541e0a2b5ca46d9.png)

[automated testing framework] what you need to know about unittest

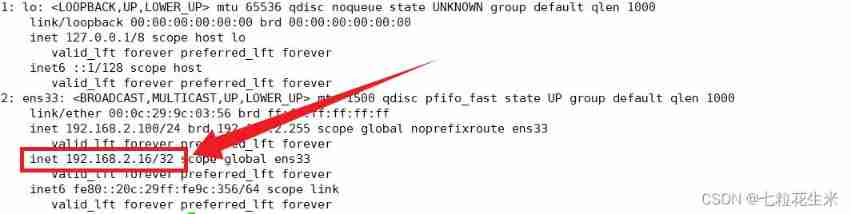

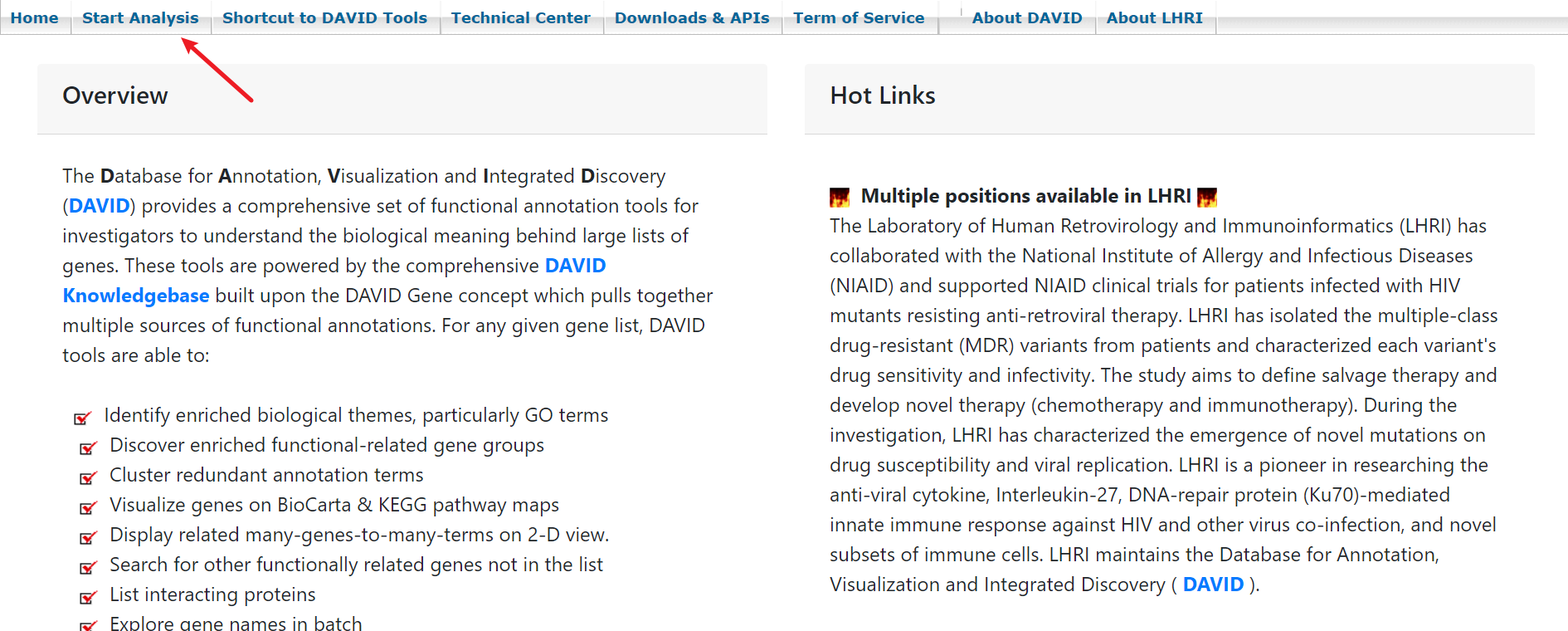

Geo data mining (III) enrichment analysis of go and KEGG using David database

随机推荐

openresty ngx_lua子请求

MySQL主从之多源复制(3主1从)搭建及同步测试

Huawei mate8 battery price_ Huawei mate8 charges very slowly after replacing the battery

[vector retrieval research series] product introduction

How engineers treat open source -- the heartfelt words of an old engineer

MIT 6.824 - raft Student Guide

使用yum来安装PostgreSQL13.3数据库

How can computers ensure data security in the quantum era? The United States announced four alternative encryption algorithms

Use Yum or up2date to install the postgresql13.3 database

Tourism Management System Based on jsp+servlet+mysql framework [source code + database + report]

iMeta | 华南农大陈程杰/夏瑞等发布TBtools构造Circos图的简单方法

Compilation of kickstart file

uniapp中redirectTo和navigateTo的区别

After leaving a foreign company, I know what respect and compliance are

[2022 the finest in the whole network] how to test the interface test generally? Process and steps of interface test

Introduction au GPIO

DAY ONE

2022/2/10 summary

Clipboard management tool paste Chinese version

PDF文档签名指南