当前位置:网站首页> 使用Scrapy框架爬取网页并保存到Mysql的实现

使用Scrapy框架爬取网页并保存到Mysql的实现

2022-07-07 13:11:00 【1024问】

大家好,这一期阿彬给大家分享Scrapy爬虫框架与本地Mysql的使用。今天阿彬爬取的网页是虎扑体育网。

(1)打开虎扑体育网,分析一下网页的数据,使用xpath定位元素。

(2)在第一部分析网页之后就开始创建一个scrapy爬虫工程,在终端执行以下命令:

“scrapy startproject huty(注:‘hpty’是爬虫项目名称)”,得到了下图所示的工程包:

(3)进入到“hpty/hpty/spiders”目录下创建一个爬虫文件叫‘“sww”,在终端执行以下命令: “scrapy genspider sww” (4)在前两步做好之后,对整个爬虫工程相关的爬虫文件进行编辑。 1、setting文件的编辑:

把君子协议原本是True改为False。

再把这行原本被注释掉的代码把它打开。

2、对item文件进行编辑,这个文件是用来定义数据类型,代码如下:

# Define here the models for your scraped items## See documentation in:# https://docs.scrapy.org/en/latest/topics/items.htmlimport scrapyclass HptyItem(scrapy.Item): # define the fields for your item here like: # name = scrapy.Field() 球员 = scrapy.Field() 球队 = scrapy.Field() 排名 = scrapy.Field() 场均得分 = scrapy.Field() 命中率 = scrapy.Field() 三分命中率 = scrapy.Field() 罚球命中率 = scrapy.Field()3、对最重要的爬虫文件进行编辑(即“hpty”文件),代码如下:

import scrapyfrom ..items import HptyItemclass SwwSpider(scrapy.Spider): name = 'sww' allowed_domains = ['https://nba.hupu.com/stats/players'] start_urls = ['https://nba.hupu.com/stats/players'] def parse(self, response): whh = response.xpath('//tbody/tr[not(@class)]') for i in whh: 排名 = i.xpath( './td[1]/text()').extract()# 排名 球员 = i.xpath( './td[2]/a/text()').extract() # 球员 球队 = i.xpath( './td[3]/a/text()').extract() # 球队 场均得分 = i.xpath( './td[4]/text()').extract() # 得分 命中率 = i.xpath( './td[6]/text()').extract() # 命中率 三分命中率 = i.xpath( './td[8]/text()').extract() # 三分命中率 罚球命中率 = i.xpath( './td[10]/text()').extract() # 罚球命中率 data = HptyItem(球员=球员, 球队=球队, 排名=排名, 场均得分=场均得分, 命中率=命中率, 三分命中率=三分命中率, 罚球命中率=罚球命中率) yield data4、对pipelines文件进行编辑,代码如下:

# Define your item pipelines here## Don't forget to add your pipeline to the ITEM_PIPELINES setting# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html# useful for handling different item types with a single interfacefrom cursor import cursorfrom itemadapter import ItemAdapterimport pymysqlclass HptyPipeline: def process_item(self, item, spider): db = pymysql.connect(host="Localhost", user="root", passwd="root", db="sww", charset="utf8") cursor = db.cursor() 球员 = item["球员"][0] 球队 = item["球队"][0] 排名 = item["排名"][0] 场均得分 = item["场均得分"][0] 命中率 = item["命中率"] 三分命中率 = item["三分命中率"][0] 罚球命中率 = item["罚球命中率"][0] # 三分命中率 = item["三分命中率"][0].strip('%') # 罚球命中率 = item["罚球命中率"][0].strip('%') cursor.execute( 'INSERT INTO nba(球员,球队,排名,场均得分,命中率,三分命中率,罚球命中率) VALUES (%s,%s,%s,%s,%s,%s,%s)', (球员, 球队, 排名, 场均得分, 命中率, 三分命中率, 罚球命中率) ) # 对事务操作进行提交 db.commit() # 关闭游标 cursor.close() db.close() return item(5)在scrapy框架设计好了之后,先到mysql创建一个名为“sww”的数据库,在该数据库下创建名为“nba”的数据表,代码如下: 1、创建数据库

create database sww;2、创建数据表

create table nba (球员 char(20),球队 char(10),排名 char(10),场均得分 char(25),命中率 char(20),三分命中率 char(20),罚球命中率 char(20));3、通过创建数据库和数据表可以看到该表的结构:

(6)在mysql创建数据表之后,再次回到终端,输入如下命令:“scrapy crawl sww”,得到的结果

到此这篇关于使用Scrapy框架爬取网页并保存到Mysql的实现的文章就介绍到这了,更多相关Scrapy爬取网页并保存内容请搜索软件开发网以前的文章或继续浏览下面的相关文章希望大家以后多多支持软件开发网!

边栏推荐

- 缓冲区溢出保护

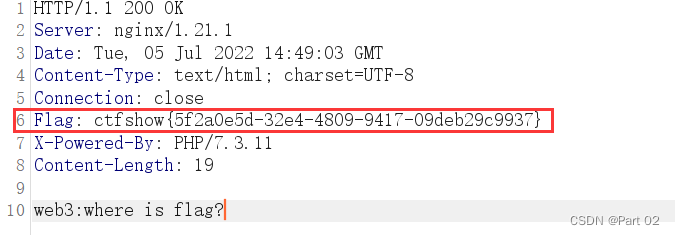

- CTFshow,信息搜集:web2

- Apache多个组件漏洞公开(CVE-2022-32533/CVE-2022-33980/CVE-2021-37839)

- buffer overflow protection

- Find your own value

- ⼀个对象从加载到JVM,再到被GC清除,都经历了什么过程?

- 全日制研究生和非全日制研究生的区别!

- Apache multiple component vulnerability disclosure (cve-2022-32533/cve-2022-33980/cve-2021-37839)

- Ctfshow, information collection: web6

- What is the process of ⼀ objects from loading into JVM to being cleared by GC?

猜你喜欢

Zhiting doesn't use home assistant to connect Xiaomi smart home to homekit

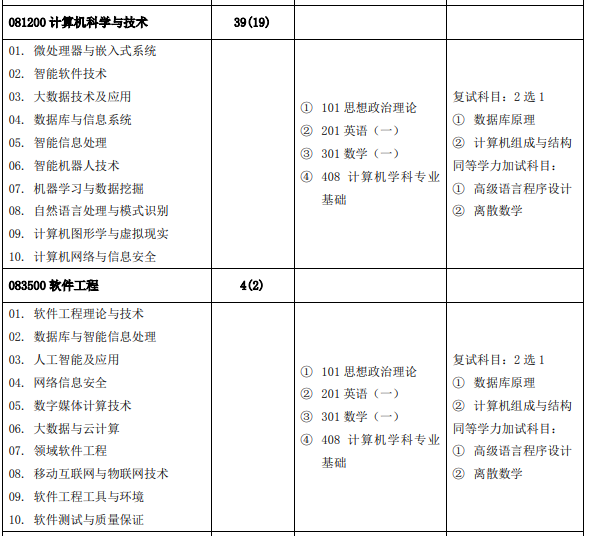

居然从408改考自命题!211华北电力大学(北京)

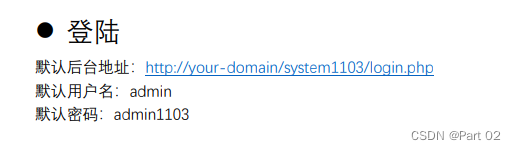

asp.netNBA信息管理系统VS开发sqlserver数据库web结构c#编程计算机网页源码项目详细设计

Ctfshow, information collection: Web3

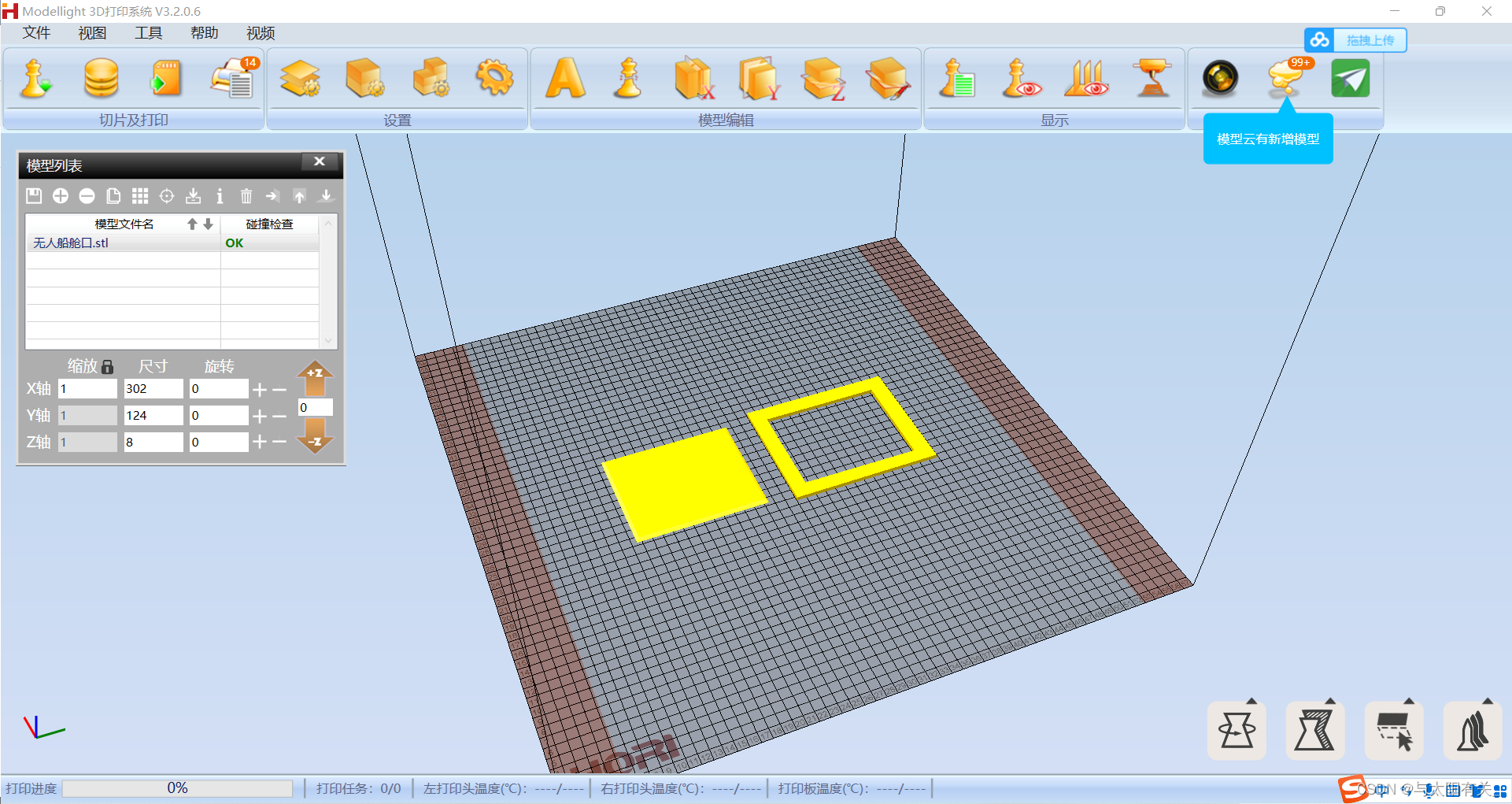

【搞船日记】【Shapr3D的STL格式转Gcode】

CPU与chiplet技术杂谈

CTFshow,信息搜集:web13

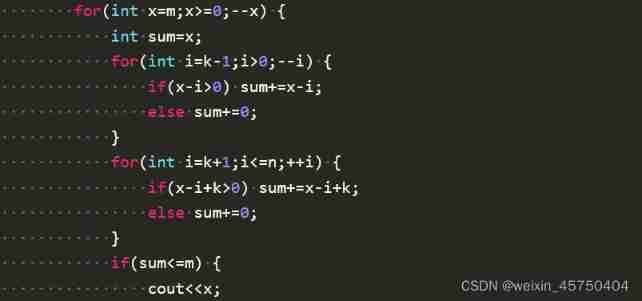

Niuke real problem programming - day15

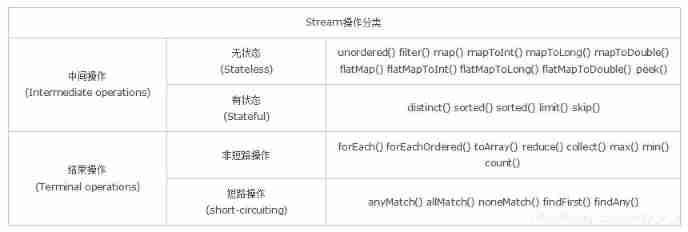

Stream learning notes

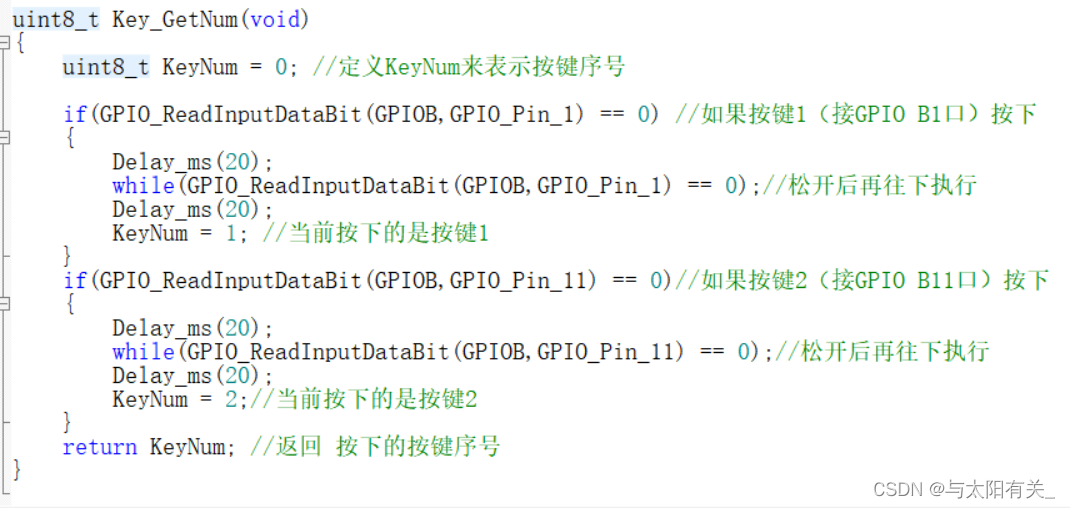

【跟着江科大学Stm32】STM32F103C8T6_PWM控制直流电机_代码

随机推荐

Comparable and comparator of sorting

Ctfshow, information collection: web7

Bits and Information & integer notes

Discussion on CPU and chiplet Technology

CTFshow,信息搜集:web10

一文读懂数仓中的pg_stat

2022年5月互联网医疗领域月度观察

[target detection] yolov5 Runtong voc2007 data set

MongoDB数据库基础知识整理

How does the database perform dynamic custom sorting?

Protection strategy of server area based on Firewall

CTFshow,信息搜集:web3

Several ways of JS jump link

How to enable radius two factor / two factor (2fa) identity authentication for Anheng fortress machine

摘抄的只言片语

Spatiotemporal deformable convolution for compressed video quality enhancement (STDF)

#HPDC智能基座人才发展峰会随笔

上半年晋升 P8 成功,还买了别墅!

Niuke real problem programming - day15

Window环境下配置Mongodb数据库