
Loss functions and positive/negative sample assignment in object detection: RetinaNet and focal loss

2022-07-07 05:53:00 【cartes1us】

RetinaNet

In object detection, RetinaNet was the first single-stage detector whose accuracy surpassed that of two-stage detectors.

Network structure:

The network architecture the authors designed is not particularly novel. As the paper itself says:

> The design of our RetinaNet detector shares many similarities with previous dense detectors, in particular the concept of ‘anchors’ introduced by RPN [3] and use of features pyramids as in SSD [9] and FPN [4]. We emphasize that our simple detector achieves top results not based on innovations in network design but due to our novel loss.

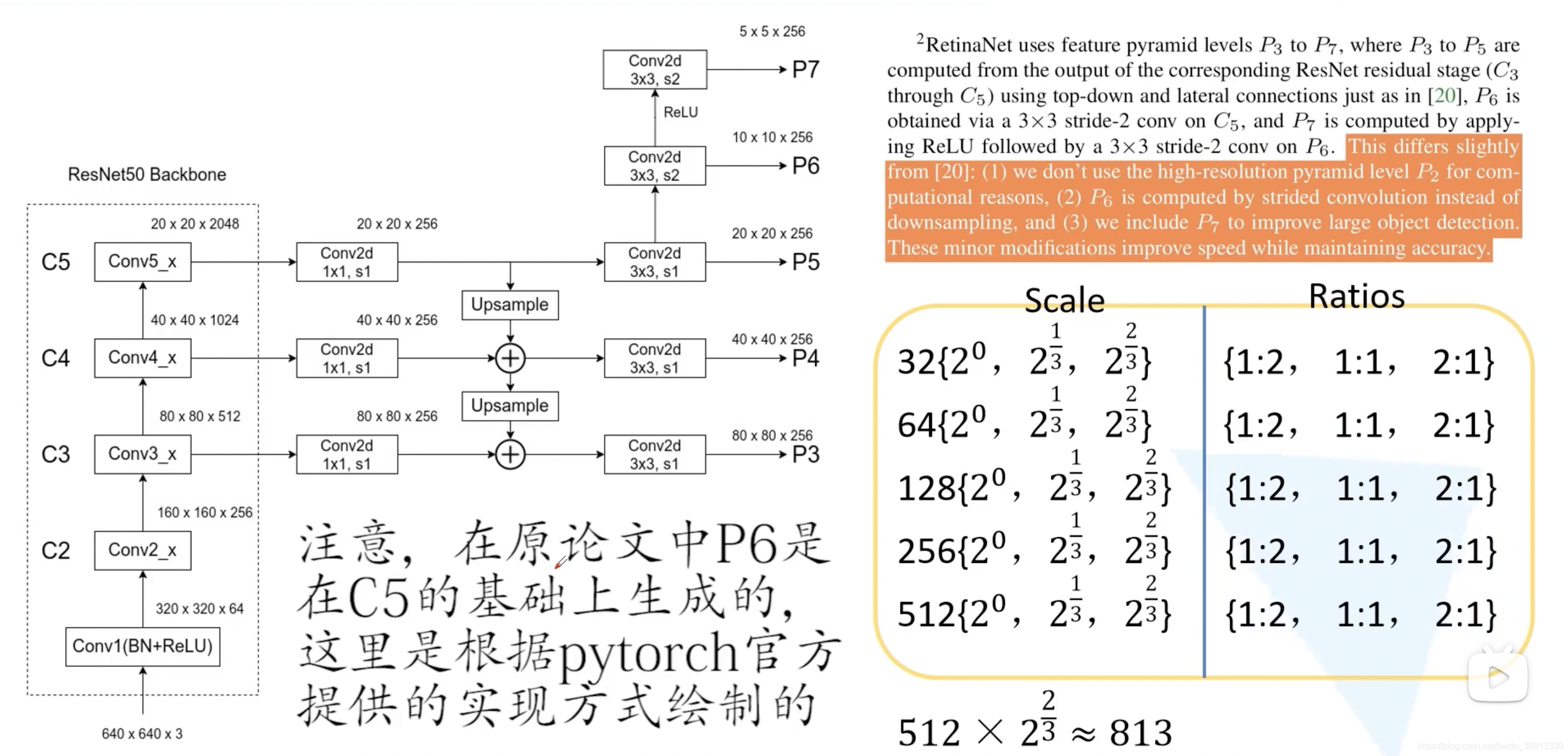

The detection head is anchor-based, with decoupled classification and bounding-box regression branches. The FPN outputs feature maps at five scales, and the five levels correspond to anchor base sizes of 32, 64, 128, 256 and 512. On each level, combining different scales and aspect ratios gives 9 anchors per location, so anchor sizes across the whole network range from 32 to 813. Boxes are computed from the offsets the network predicts relative to each anchor, in the same way as in Faster R-CNN. The figure below is by Thunderbolt.
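To make the 32 ~ 813 range concrete, here is a minimal sketch that enumerates the 9 anchor shapes per level. It assumes the usual RetinaNet settings: three octave scales {2^0, 2^(1/3), 2^(2/3)} and three aspect ratios {1:2, 1:1, 2:1}, with "anchor size" meaning the square root of the anchor area. The `decode` helper is an illustrative version of the Faster R-CNN style box decoding on (cx, cy, w, h) boxes; the function names are hypothetical, not from any particular library.

```python
import math

# Assumed RetinaNet settings: base sizes for pyramid levels P3-P7,
# three octave scales and three aspect ratios (ratio = h / w).
BASE_SIZES = [32, 64, 128, 256, 512]
SCALES = [2 ** 0, 2 ** (1 / 3), 2 ** (2 / 3)]
RATIOS = [0.5, 1.0, 2.0]


def cell_anchors(base_size):
    """Return the 9 (w, h) anchor shapes for one pyramid level."""
    shapes = []
    for scale in SCALES:
        size = base_size * scale      # side of the equivalent square anchor
        for ratio in RATIOS:          # keep area = size**2, vary h / w
            w = size / math.sqrt(ratio)
            h = size * math.sqrt(ratio)
            shapes.append((w, h))
    return shapes


def decode(anchor, deltas):
    """Faster R-CNN style decoding.

    anchor and the returned box are (cx, cy, w, h);
    deltas are the predicted offsets (dx, dy, dw, dh).
    """
    ax, ay, aw, ah = anchor
    dx, dy, dw, dh = deltas
    return (ax + dx * aw, ay + dy * ah, aw * math.exp(dw), ah * math.exp(dh))


if __name__ == "__main__":
    for base in BASE_SIZES:
        sizes = [round(base * s) for s in SCALES]
        print(f"base {base}: {len(cell_anchors(base))} anchors, sizes {sizes}")
```

The largest anchor is 512 × 2^(2/3) ≈ 812.7, which is where the upper bound of 813 comes from.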

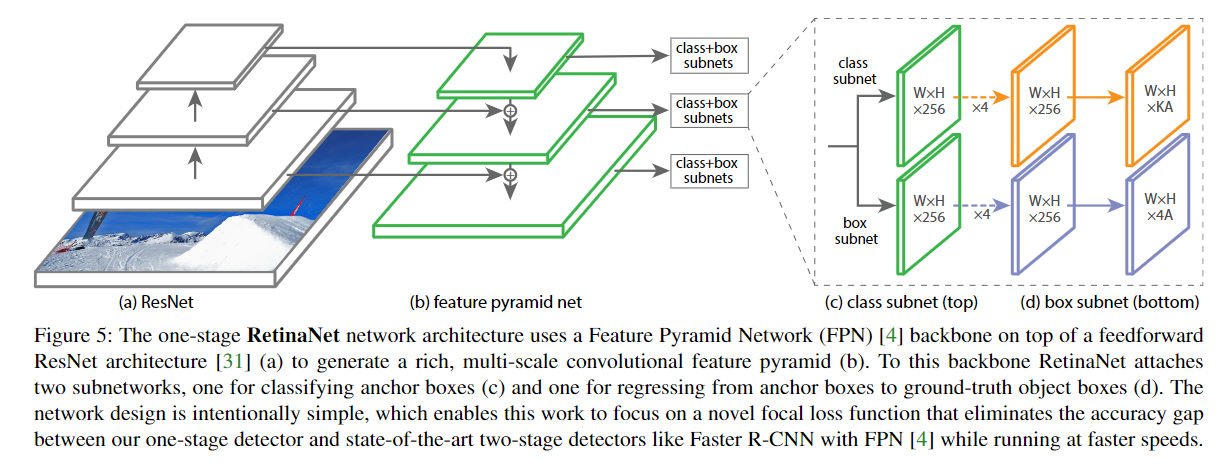

The architecture figure from the paper is shown below; it only draws feature maps at three of the FPN scales. W, H, K and A denote the feature map width, height, number of classes (excluding background) and number of anchors per location (9), respectively.
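Under the W, H, K, A notation, the class subnet outputs K·A channels and the box subnet outputs 4·A channels on every level. The sketch below computes these shapes for a given input size. The strides 8 to 128 for P3 to P7, the rounding of feature map sizes with `ceil`, and K = 80 (COCO) are assumptions chosen for illustration. `head_output_shapes` and `total_anchors` are hypothetical helper names.

```python
import math


def head_output_shapes(img_w, img_h, num_classes=80, num_anchors=9,
                       strides=(8, 16, 32, 64, 128)):
    """Return (class_shape, box_shape) per pyramid level, each as (C, H, W).

    Assumptions: P3-P7 strides 8..128, feature size = ceil(image / stride),
    num_classes = K (no background class), num_anchors = A.
    """
    shapes = []
    for stride in strides:
        h, w = math.ceil(img_h / stride), math.ceil(img_w / stride)
        cls_shape = (num_classes * num_anchors, h, w)  # class subnet: K*A channels
        box_shape = (4 * num_anchors, h, w)            # box subnet: 4*A channels
        shapes.append((cls_shape, box_shape))
    return shapes


def total_anchors(shapes, num_anchors=9):
    """Total number of anchors (spatial locations x A) over all levels."""
    return sum(cls[1] * cls[2] for cls, _ in shapes) * num_anchors


if __name__ == "__main__":
    shapes = head_output_shapes(640, 640)
    for cls_shape, box_shape in shapes:
        print("class:", cls_shape, "box:", box_shape)
    print("total anchors:", total_anchors(shapes))
```

For a 640×640 input this gives 80² + 40² + 20² + 10² + 5² = 8,525 locations, i.e. 76,725 anchors, almost all of which end up as background.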

Positive and negative sample matching

- Positive sample: an anchor whose IoU with a ground-truth box is >= 0.5.
- Negative sample: an anchor whose IoU with every ground-truth box is < 0.4.
- All other anchors (0.4 <= IoU < 0.5) are ignored during training.
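The matching rule can be sketched as follows: each anchor takes its best IoU over all ground-truth boxes and is labeled positive, negative or ignored by the two thresholds. Boxes are assumed to be (x1, y1, x2, y2), and `iou`/`assign` are hypothetical names. Real implementations often add extra rules (for example, forcing each ground-truth box to keep its best anchor); this sketch covers only the thresholds listed above.

```python
POSITIVE, NEGATIVE, IGNORE = 1, 0, -1


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def assign(anchors, gt_boxes, pos_thr=0.5, neg_thr=0.4):
    """Label anchors by their best IoU over all ground-truth boxes.

    Returns (labels, matched): labels use POSITIVE / NEGATIVE / IGNORE,
    matched holds the index of the matched gt box, or -1 if not positive.
    """
    labels, matched = [], []
    for anchor in anchors:
        ious = [iou(anchor, gt) for gt in gt_boxes]
        best = max(ious, default=0.0)
        if best >= pos_thr:
            labels.append(POSITIVE)
            matched.append(ious.index(best))
        elif best < neg_thr:
            labels.append(NEGATIVE)
            matched.append(-1)
        else:                      # 0.4 <= IoU < 0.5: excluded from the loss
            labels.append(IGNORE)
            matched.append(-1)
    return labels, matched
```

For example, with one ground-truth box (0, 0, 10, 10), the anchors (0, 0, 10, 8), (0, 0, 10, 4.5) and (20, 20, 30, 30) have IoUs 0.8, 0.45 and 0, and are labeled positive, ignored and negative respectively.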

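Foreground/background imbalance

In a single-stage detector the vast majority of anchors are background, so the classification loss is easily dominated by a large number of easy negatives.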

Variants of the CE loss

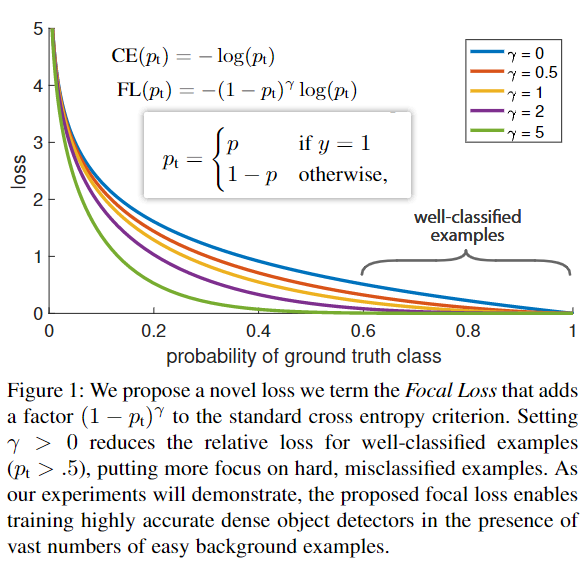
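For reference, the loss variants can be written in the paper's notation, where $y \in \{\pm 1\}$ is the ground-truth class, $p$ is the predicted probability for $y = 1$, and $\alpha_t$ is defined analogously to $p_t$ ($\alpha$ for $y = 1$, $1 - \alpha$ otherwise). The paper reports $\alpha = 0.25$ working best together with $\gamma = 2$.

```latex
p_t =
\begin{cases}
  p     & \text{if } y = 1 \\
  1 - p & \text{otherwise}
\end{cases}
\qquad
\mathrm{CE}(p_t) = -\log(p_t)

\text{$\alpha$-balanced CE:}\quad \mathrm{CE}(p_t) = -\alpha_t \log(p_t)

\text{Focal loss:}\quad \mathrm{FL}(p_t) = -\alpha_t \, (1 - p_t)^{\gamma} \log(p_t)
```

Setting $\gamma = 0$ removes the modulating factor $(1 - p_t)^{\gamma}$ and recovers the $\alpha$-balanced CE.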

The biggest innovation of this work is the focal loss, which reshapes the classic cross-entropy loss. It is applied to the classification subnet branch and greatly reduces the weight of the loss from easily classified samples, in an elegant form. The paper recommends $\gamma = 2$; with $\gamma = 0$, FL degenerates to CE.
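A minimal per-prediction sketch of the (sigmoid, binary) focal loss next to plain cross-entropy. It assumes `p` is the predicted probability of the positive class and `y` is 1 or 0; passing `alpha=None` to turn off the balancing term is a convention chosen here, not taken from the paper.

```python
import math


def cross_entropy(p, y):
    """Binary cross-entropy for one prediction, written as -log(p_t)."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss for one prediction.

    p: predicted probability of the positive class, in (0, 1)
    y: 1 for a positive target, 0 for a negative one
    alpha: balancing weight for positives; None disables alpha-balancing
    """
    p_t = p if y == 1 else 1.0 - p
    if alpha is None:
        alpha_t = 1.0
    else:
        alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t) ** gamma shrinks the loss of well-classified examples
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
```

As a worked example, an easy negative predicted at p = 0.01 (so p_t = 0.99) has CE ≈ 0.01005, while FL with γ = 2 and no α multiplies it by (1 − 0.99)² = 10⁻⁴. Summed over 100,000 such easy negatives, CE contributes about 1005 to the loss but FL only about 0.1, so the few hard examples are no longer drowned out.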

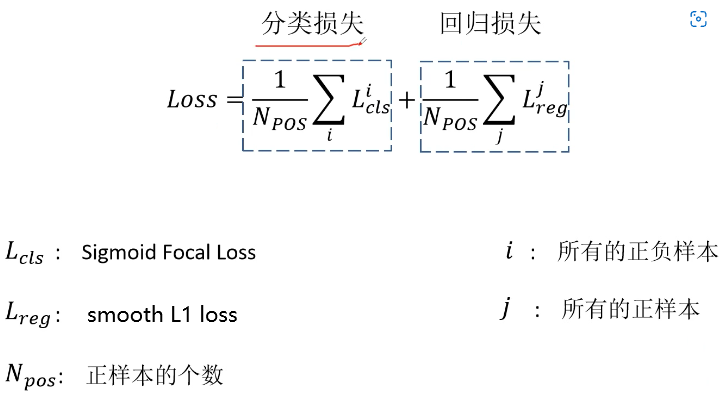

Loss:

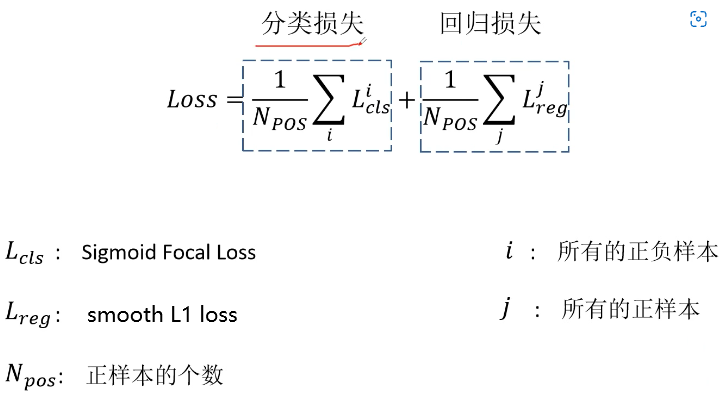

The classification loss is the focal loss summed over all samples (both positive and negative; ignored anchors are excluded), divided by the number of positive samples $N_{pos}$. The box regression loss is the smooth L1 loss proposed in Fast R-CNN, computed only on positive samples.
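Putting the pieces together, the following is a minimal sketch of the total loss in plain Python. The input layout (per-anchor class probabilities, labels 1/0/−1 from the IoU matching, matched class ids, 4-value offsets) and the names `retinanet_loss`, `focal` and `smooth_l1` are hypothetical. Normalizing the box loss by $N_{pos}$ as well, and clamping $N_{pos}$ to at least 1, are assumptions borrowed from common implementations rather than something stated above.

```python
import math


def focal(p, y, gamma=2.0, alpha=0.25):
    """Alpha-balanced binary focal loss for one probability."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def smooth_l1(x, beta=1.0):
    """Smooth L1 as in Fast R-CNN (beta = 1): quadratic near 0, linear beyond."""
    ax = abs(x)
    return 0.5 * ax * ax / beta if ax < beta else ax - 0.5 * beta


def retinanet_loss(cls_probs, labels, gt_classes, box_preds, box_targets):
    """Return (classification loss, box loss), both divided by N_pos.

    cls_probs[i][k]: sigmoid probability that anchor i is class k
    labels[i]: 1 positive, 0 negative, -1 ignored
    gt_classes[i]: matched class id (only read for positive anchors)
    box_preds[i], box_targets[i]: offsets (dx, dy, dw, dh)
    """
    num_pos = sum(1 for label in labels if label == 1)
    cls_loss, box_loss = 0.0, 0.0
    for i, label in enumerate(labels):
        if label == -1:                # ignored anchors contribute nothing
            continue
        for k, p in enumerate(cls_probs[i]):
            target = 1 if (label == 1 and k == gt_classes[i]) else 0
            cls_loss += focal(p, target)
        if label == 1:                 # box regression only on positives
            box_loss += sum(smooth_l1(a - b)
                            for a, b in zip(box_preds[i], box_targets[i]))
    norm = max(num_pos, 1)             # avoid dividing by zero
    return cls_loss / norm, box_loss / norm
```

Every class output of every non-ignored anchor contributes to the classification term, which is exactly why the many easy background anchors would dominate without the focal weighting.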

To be continued

边栏推荐

- Win configuration PM2 boot auto start node project

- Wechat applet Bluetooth connects hardware devices and communicates. Applet Bluetooth automatically reconnects due to abnormal distance. JS realizes CRC check bit

- Get the way to optimize the one-stop worktable of customer service

- [云原生]微服务架构是什么?

- Différenciation et introduction des services groupés, distribués et microservices

- What EDA companies are there in China?

- Senior programmers must know and master. This article explains in detail the principle of MySQL master-slave synchronization, and recommends collecting

- 分布式事务解决方案之TCC

- Reptile exercises (III)

- Digital IC interview summary (interview experience sharing of large manufacturers)

猜你喜欢

目标检测中的损失函数与正负样本分配:RetinaNet与Focal loss

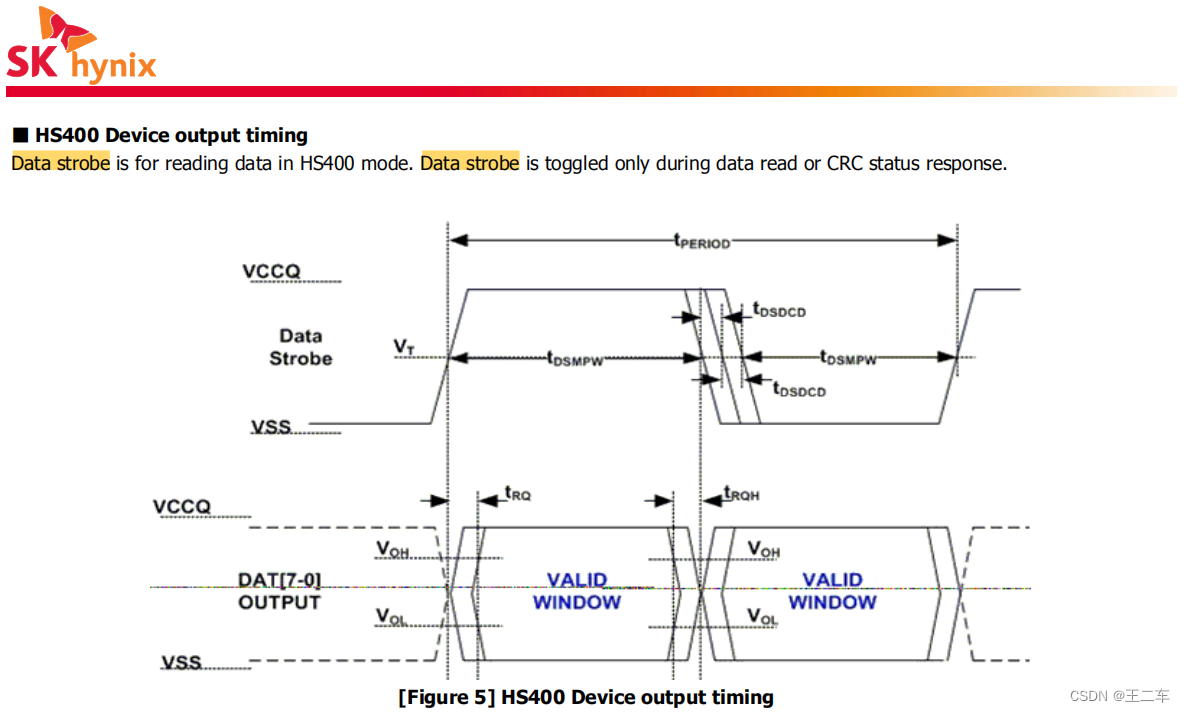

EMMC print cqhci: timeout for tag 10 prompt analysis and solution

架构设计的五个核心要素

An example of multi module collaboration based on NCF

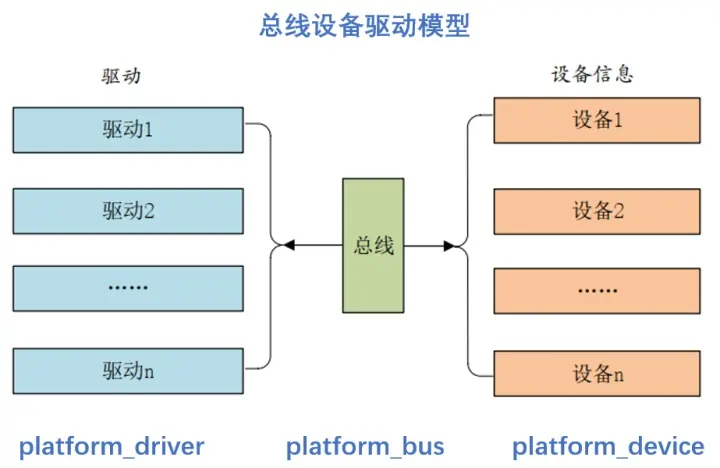

驱动开发中platform设备驱动架构详解

Hcip eighth operation

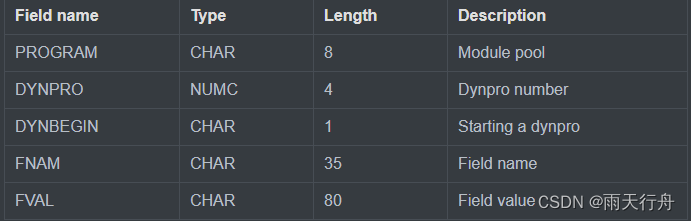

SAP ABAP BDC (batch data communication) -018

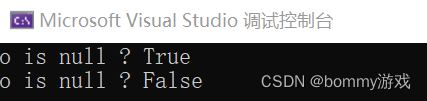

C#可空类型

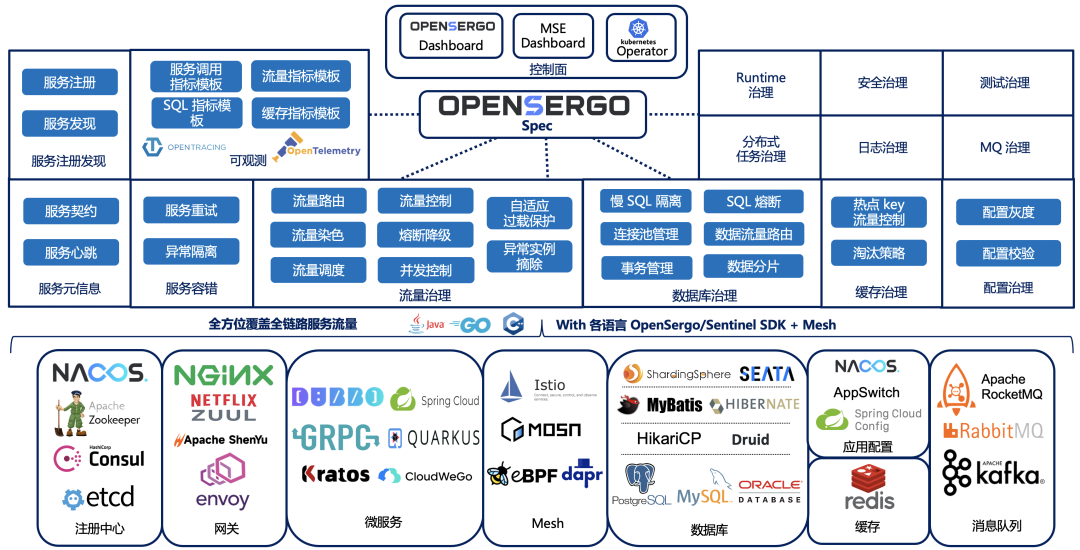

Opensergo is about to release v1alpha1, which will enrich the service governance capabilities of the full link heterogeneous architecture

![Reading the paper [sensor enlarged egocentric video captioning with dynamic modal attention]](/img/db/feb719e2715c7b9c669957995e1d83.png)

Reading the paper [sensor enlarged egocentric video captioning with dynamic modal attention]

随机推荐

Bat instruction processing details

404 not found service cannot be reached in SAP WebService test

Classic questions about data storage

原生小程序 之 input切换 text与password类型

上海字节面试问题及薪资福利

async / await

Web architecture design process

Web Authentication API兼容版本信息

PTA 天梯赛练习题集 L2-003 月饼 测试点2,测试点3分析

原生小程序 之 input切換 text與password類型

Forkjoin is the most comprehensive and detailed explanation (from principle design to use diagram)

得物客服一站式工作台卡顿优化之路

make makefile cmake qmake都是什么,有什么区别?

What EDA companies are there in China?

Red Hat安装内核头文件

nVisual网络可视化

2pc of distributed transaction solution

Digital IC interview summary (interview experience sharing of large manufacturers)

Question 102: sequence traversal of binary tree

On the difference between FPGA and ASIC