
[2020]GRAF: Generative Radiance Fields for 3D-Aware Image Synthesis

2022-07-05 06:17:00 【Dark blue blue blue】

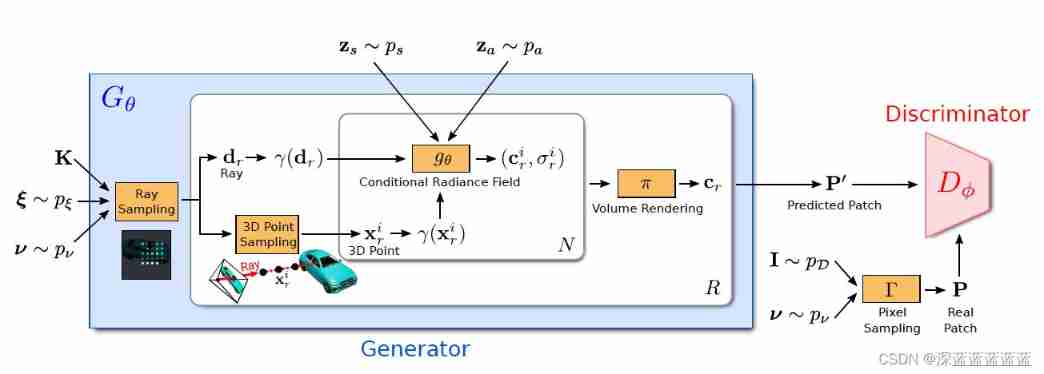

This paper improves on NeRF, mainly by introducing a GAN, which removes NeRF's requirement for camera-parameter (pose) labels.

The overall structure is very intuitive: it is a standard conditional GAN, with the NeRF part placed inside the generator.

First, the inputs to the generator are the camera parameters, including position, direction, focal point, distance, and so on. All of these parameters are sampled at random from uniform distributions.
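A minimal sketch of this pose-sampling step, assuming (hypothetically) a camera on a sphere of fixed radius that always looks at the origin, with azimuth and elevation each drawn uniformly. The radius, the angle ranges and the function names are illustrative assumptions, not the paper's code. The elevation is capped below 90 degrees so the look-at frame never degenerates.

```python
import math
import random

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def sample_camera_pose(radius=4.0, elev_range=(0.0, math.radians(80.0)), rng=random):
    """Hypothetical sampler: camera on a sphere of `radius`, looking at the origin.

    Azimuth and elevation are drawn uniformly (an assumption for illustration).
    Returns the camera position and its forward/right/up axes.
    """
    azimuth = rng.uniform(0.0, 2.0 * math.pi)
    elevation = rng.uniform(*elev_range)      # >= 0 keeps the camera on the upper hemisphere
    position = (radius * math.cos(elevation) * math.cos(azimuth),
                radius * math.cos(elevation) * math.sin(azimuth),
                radius * math.sin(elevation))
    forward = normalize(tuple(-c for c in position))   # point the camera at the origin
    world_up = (0.0, 0.0, 1.0)
    right = normalize(cross(forward, world_up))        # well defined because elevation < 90 degrees
    up = cross(right, forward)
    return position, forward, right, up
```

Each call gives a new random viewpoint; no pose label from any dataset is involved, which is the point of the GAN setup.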

These are then fed into the ray sampler, which determines where the rays land on the image (which pixels are rendered) and how many rays are cast.
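The ray sampler can be sketched as follows, assuming a K×K patch whose scale and center are drawn uniformly, and a pinhole camera that looks down the -z axis (the usual NeRF convention). K, s_min, s_max, focal and the helper names are hypothetical choices for illustration.

```python
import math
import random

def sample_patch_coords(K, H, W, s_min=0.25, s_max=1.0, rng=random):
    """Hypothetical sampler: K*K continuous pixel coordinates of a random patch.

    The scale s (1.0 = whole image) and the patch center are drawn uniformly,
    and the center is constrained so the patch stays inside the image.
    """
    s = rng.uniform(s_min, s_max)
    half_w, half_h = s * W / 2.0, s * H / 2.0
    cx = rng.uniform(half_w, W - half_w)
    cy = rng.uniform(half_h, H - half_h)
    coords = []
    for i in range(K):
        for j in range(K):
            # K evenly spaced offsets spanning [-1, 1] in patch space (requires K >= 2)
            u = -1.0 + 2.0 * j / (K - 1)
            v = -1.0 + 2.0 * i / (K - 1)
            coords.append((cx + u * half_w, cy + v * half_h))
    return coords

def pixel_to_ray_dir(x, y, H, W, focal):
    """Pinhole camera: unit ray direction in camera space (camera looks down -z)."""
    d = ((x - W / 2.0) / focal, -(y - H / 2.0) / focal, -1.0)
    n = math.sqrt(sum(c * c for c in d))
    return tuple(c / n for c in d)
```

The number of rays is always K*K; only their spread over the image changes with the scale s.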

The rays are then fed into the conditional radiance field, which has two paths:

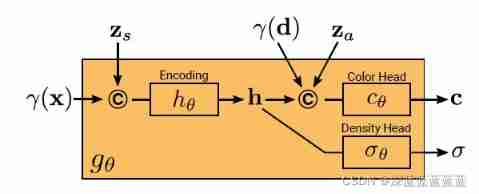

1. Points are sampled along each ray to determine the locations of the sample points. The position information is then combined with a randomly sampled shape code and fed into a neural network, which learns a shape representation. The shape representation is used to predict the density of each sample point.

2. The ray's viewing-direction information is combined with the shape representation above and a randomly sampled appearance (texture) code, and together they predict the color of each sample point.
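A toy version of the two-path conditional radiance field described above, written in plain Python. Everything here is an assumption for illustration: the layer sizes, the code dimension, the number of encoding frequencies, the activations, and the untrained random weights. It only shows the data flow: position plus shape code gives the density, and shape features plus view direction plus appearance code give the color.

```python
import math
import random

def positional_encoding(p, num_freqs):
    """NeRF-style encoding: sin/cos of 2^k * pi * c for every coordinate c."""
    out = []
    for k in range(num_freqs):
        for c in p:
            out.append(math.sin((2.0 ** k) * math.pi * c))
            out.append(math.cos((2.0 ** k) * math.pi * c))
    return out

def linear(weights, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0 / math.sqrt(cols)) for _ in range(cols)] for _ in range(rows)]

class TinyConditionalField:
    """Hypothetical toy field: (x, d, z_shape, z_app) -> (sigma, rgb), random untrained weights."""

    def __init__(self, z_dim=8, hidden=32, freqs_x=4, freqs_d=2, seed=0):
        rng = random.Random(seed)
        self.freqs_x, self.freqs_d = freqs_x, freqs_d
        in_shape = 3 * 2 * freqs_x + z_dim                  # encoded position + shape code
        in_color = hidden + 3 * 2 * freqs_d + z_dim         # shape feature + encoded direction + appearance code
        self.w_shape = random_matrix(hidden, in_shape, rng)
        self.w_sigma = random_matrix(1, hidden, rng)
        self.w_rgb = random_matrix(3, in_color, rng)

    def __call__(self, x, d, z_shape, z_app):
        # Path 1: position + shape code -> shape feature -> density
        shape_in = positional_encoding(x, self.freqs_x) + list(z_shape)
        h = [max(0.0, v) for v in linear(self.w_shape, shape_in)]
        sigma = max(0.0, linear(self.w_sigma, h)[0])        # ReLU keeps density non-negative
        # Path 2: shape feature + view direction + appearance code -> color
        color_in = h + positional_encoding(d, self.freqs_d) + list(z_app)
        rgb = [1.0 / (1.0 + math.exp(-v)) for v in linear(self.w_rgb, color_in)]  # sigmoid -> [0, 1]
        return sigma, rgb
```

Because the density path never sees the appearance code, changing the appearance code alters only the color, which is the shape/appearance split the two paths are meant to give.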

Given the density and color of the sample points, volume rendering produces the final result, i.e., the output of the generator.
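The volume-rendering step can be sketched with the standard NeRF quadrature for a single ray: each sample gets an opacity alpha = 1 - exp(-sigma * delta), and its color is weighted by the transmittance accumulated in front of it. This sketch assumes samples are sorted by depth t and treats the last interval as effectively infinite, a convention borrowed from common NeRF implementations.

```python
import math

def volume_render(sigmas, colors, t_vals, far_delta=1e10):
    """Composite per-sample densities and RGB colors along one ray.

    Returns the pixel color and the accumulated opacity of the ray.
    """
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0                      # fraction of light not yet absorbed
    for i, (sigma, color) in enumerate(zip(sigmas, colors)):
        delta = t_vals[i + 1] - t_vals[i] if i + 1 < len(t_vals) else far_delta
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return rgb, 1.0 - transmittance
```

For example, two empty samples followed by a very dense blue sample render as pure blue with full opacity.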

Next, patches are sampled from real images and fed into the discriminator together with the generated results, so that the discriminator learns the distribution of real images.
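A sketch of the real-image side, assuming real patches are read at continuous pixel coordinates with bilinear interpolation and assuming a non-saturating GAN loss; any gradient penalty is omitted, and the discriminator network itself is left out, so the loss functions take its logits as plain numbers. All names here are hypothetical.

```python
import math

def bilinear_sample(image, x, y):
    """Sample a grayscale image (list of rows) at continuous (x, y) in pixel-index units."""
    H, W = len(image), len(image[0])
    x = min(max(x, 0.0), W - 1.0)            # clamp to the valid index range
    y = min(max(y, 0.0), H - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, W - 1), min(y0 + 1, H - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * image[y0][x0] + fx * image[y0][x1]
    bottom = (1 - fx) * image[y1][x0] + fx * image[y1][x1]
    return (1 - fy) * top + fy * bottom

def softplus(v):
    """Numerically stable log(1 + e^v)."""
    return max(v, 0.0) + math.log1p(math.exp(-abs(v)))

def discriminator_loss(real_logits, fake_logits):
    """Non-saturating loss for D: push real logits up and fake logits down."""
    real = sum(softplus(-r) for r in real_logits) / len(real_logits)
    fake = sum(softplus(f) for f in fake_logits) / len(fake_logits)
    return real + fake

def generator_loss(fake_logits):
    """Non-saturating loss for G: push the logits of generated patches up."""
    return sum(softplus(-f) for f in fake_logits) / len(fake_logits)
```

A discriminator that scores real patches high and generated ones low gets a small loss, while the generator's loss falls as its patches are scored higher.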

Notes:

1. To speed up training, both generation and the sampling of real images synthesize only some of the pixels in an image (a patch); the whole image is never generated at once.
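A back-of-the-envelope sketch of why this helps: the radiance field is evaluated once per sample point, so the cost scales with rays times samples per ray. The 128×128 image size, the 32×32 patch and the 64 samples per ray below are illustrative assumptions, not figures quoted from the paper.

```python
def mlp_queries(num_rays, samples_per_ray):
    """Number of radiance-field evaluations needed to render num_rays rays."""
    return num_rays * samples_per_ray

SAMPLES_PER_RAY = 64                           # assumed, illustrative
full_image = mlp_queries(128 * 128, SAMPLES_PER_RAY)
patch = mlp_queries(32 * 32, SAMPLES_PER_RAY)
print(full_image, patch, full_image // patch)  # 1048576 65536 16
```

Under these assumptions, rendering a patch needs 16 times fewer network evaluations than rendering the full image.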

2. Honestly, this paper was hard for me to understand at first: why can the model learn smooth view transitions without any supervision on the camera pose? My personal view is that this comes from the radiance field. The radiance field implicitly builds a 3D object at infinite resolution, and since every randomly sampled viewpoint has to produce an image that fools the discriminator, the simplest way to build a plausible 3D object is to follow the real-world 3D structure. That is why the view transitions come out smooth. However, if there are too few reference images, or the object's structure is too simple, I suspect some strange problems will appear.

边栏推荐

- 1996. number of weak characters in the game

- 【Rust 笔记】15-字符串与文本(上)

- Leetcode-3: Longest substring without repeated characters

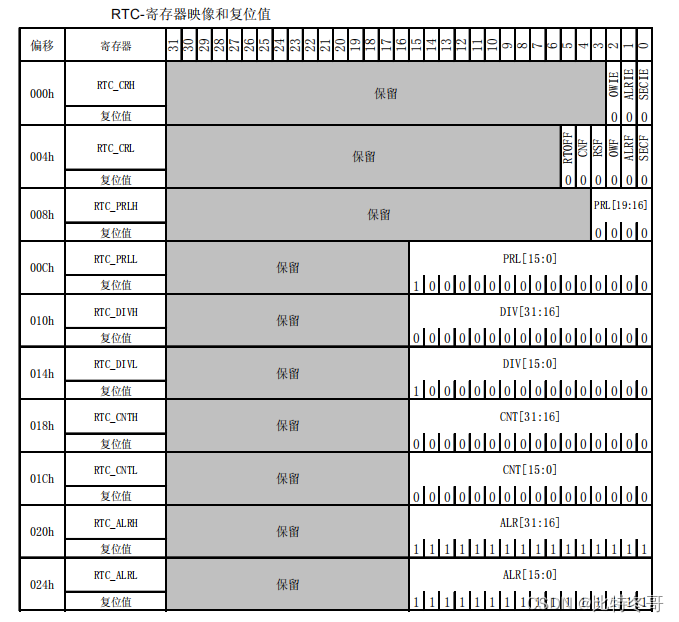

- 实时时钟 (RTC)

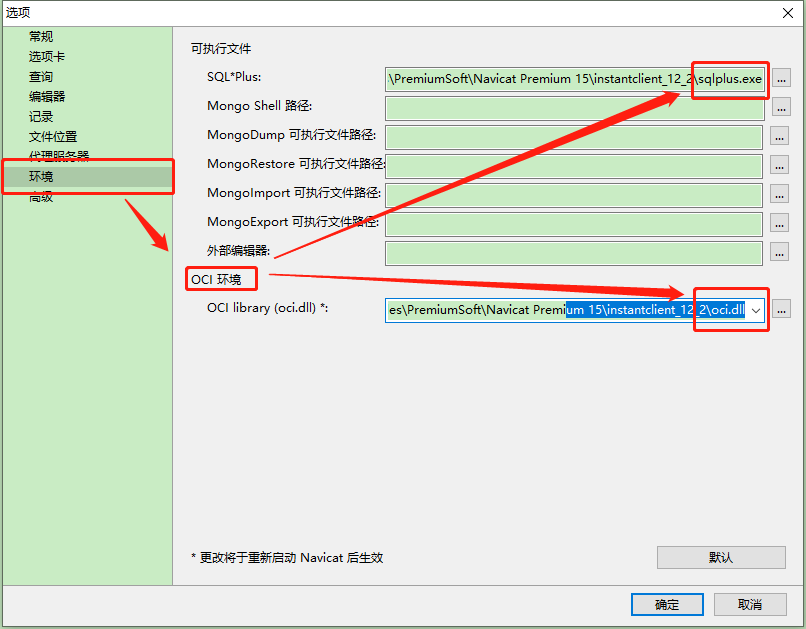

- Erreur de connexion Navicat à la base de données Oracle Ora - 28547 ou Ora - 03135

- 【Rust 笔记】13-迭代器(中)

- One question per day 1447 Simplest fraction

- 打印机脱机时一种容易被忽略的原因

- Navicat连接Oracle数据库报错ORA-28547或ORA-03135

- Appium基础 — 使用Appium的第一个Demo

猜你喜欢

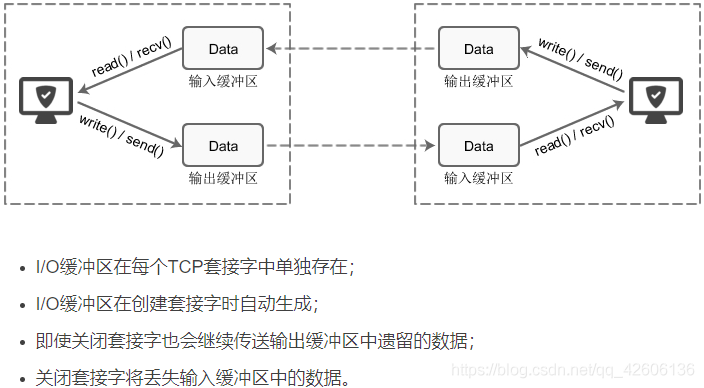

什么是套接字?Socket基本介绍

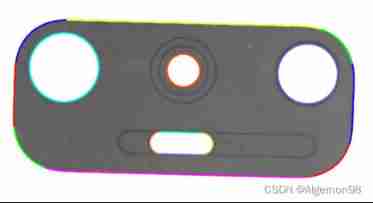

2021apmcm post game Summary - edge detection

Error ora-28547 or ora-03135 when Navicat connects to Oracle Database

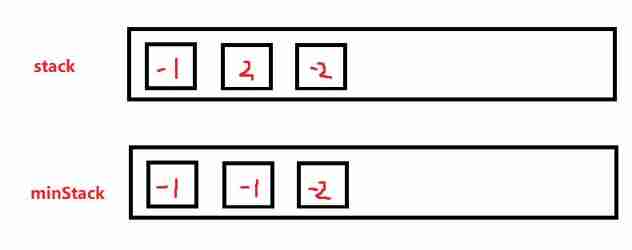

Leetcode stack related

Leetcode-6110: number of incremental paths in the grid graph

Real time clock (RTC)

快速使用Amazon MemoryDB并构建你专属的Redis内存数据库

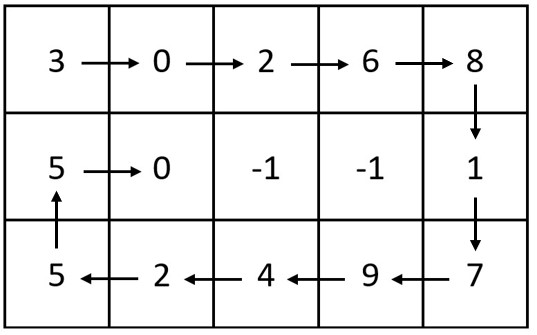

leetcode-6111:螺旋矩阵 IV

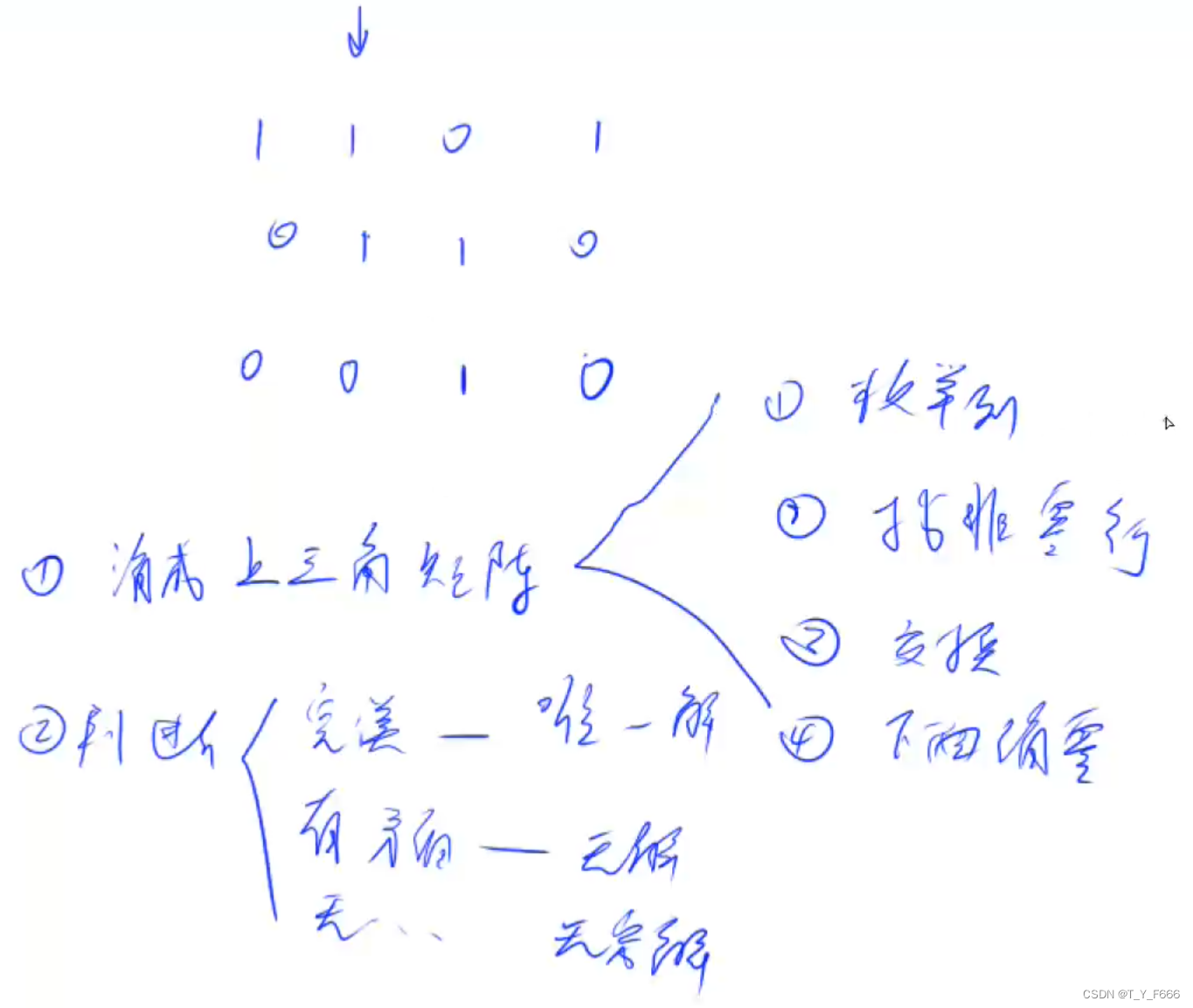

高斯消元 AcWing 884. 高斯消元解异或线性方程组

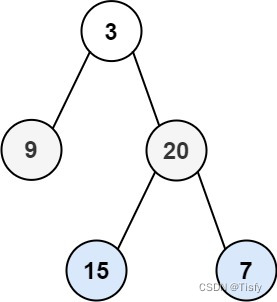

LeetCode 0107. Sequence traversal of binary tree II - another method

随机推荐

【LeetCode】Day95-有效的数独&矩阵置零

New title of module a of "PanYun Cup" secondary vocational network security skills competition

Usage scenarios of golang context

liunx启动redis

Leetcode stack related

【Rust 笔记】17-并发(下)

1.13 - RISC/CISC

927. Trisection simulation

对for(var i = 0;i < 5;i++) {setTimeout(() => console.log(i),1000)}的深入分析

【Rust 笔记】13-迭代器(中)

【LeetCode】Easy | 20. Valid parentheses

Redis publish subscribe command line implementation

One question per day 1765 The highest point in the map

MIT-6874-Deep Learning in the Life Sciences Week 7

Collection: programming related websites and books

One question per day 1447 Simplest fraction

The sum of the unique elements of the daily question

leetcode-6108:解密消息

Leetcode array operation

1996. number of weak characters in the game