当前位置:网站首页>Spark source code-task submission process-6.2-sparkContext initialization-TaskScheduler task scheduler

Spark source code-task submission process-6.2-sparkContext initialization-TaskScheduler task scheduler

2022-08-05 06:12:00 【zdaiqing】

TaskScheduler

1.入口

TaskSchedulerThe initialization of the task scheduler is in sparkContext的初始化过程中完成;

具体入口位置如下代码段所示:在sparkContext类的try catch代码块中;

调用链:try catch块 ->createTaskScheduler方法;

in this code,完成了taskScheduler、schedulerBackend、dagScheduler的初始化,最后调用taskScheduler.start方法启动taskScheduler;

class SparkContext(config: SparkConf) extends Logging {

//无关代码省略...

// Create and start the scheduler

try{

//实例化taskScheduler、schedulerBackend

val (sched, ts) = SparkContext.createTaskScheduler(this, master, deployMode)

_schedulerBackend = sched

_taskScheduler = ts

//实例化dagScheduler

_dagScheduler = new DAGScheduler(this)

//将taskSchedulerInstance completion event notificationheartbeatReceiver

_heartbeatReceiver.ask[Boolean](TaskSchedulerIsSet)

// start TaskScheduler after taskScheduler sets DAGScheduler reference in DAGScheduler's

// constructor

//启动taskScheduler

_taskScheduler.start()

//无关代码省略...

}catch{

}

}

2.createTaskScheduler

2.1.参数

sc: SparkContext

master:

配置文件中spark.master参数指定;

The cluster manager to connect to;

传递给 Spark 的master URL:

local、local[K]、local[K,F]、local[*]、local[*,F]、local-cluster[N,C,M]、spark://HOST:PORT、spark://HOST1:PORT1,HOST2:PORT2、yarn

deployMode:

配置文件中spark.submit.deployMode参数指定,Default if not specifiedclient;

SparkThe deployment mode of the driver,“客户端”或“集群”,This means locally on a node inside the cluster(“客户端”)或远程(“集群”)启动驱动程序.

masterThe value of the parameter can refer to the file【master URLS】

2.2.创建流程

1、According to different deployment modes(master参数指定),Choose a different implementation to instantiate;

local模式:TaskSchedulerImpl、LocalSchedulerBackend;

local[N] 或local[*]模式:TaskSchedulerImpl、LocalSchedulerBackend;

The degree of parallelism is specified by the parameterN确定,如果参数为*,根据javaThe virtual machine is availablecpu核数确定

local[K,F]模式:TaskSchedulerImpl、LocalSchedulerBackend;

The degree of parallelism is specified by the parameterN确定,如果参数为*,根据javaThe virtual machine is availablecpu核数确定;

spark://(.*)模式:TaskSchedulerImpl、StandaloneSchedulerBackend;

local-cluster[N, cores, memory]模式:TaskSchedulerImpl、StandaloneSchedulerBackend;

其他情况(yarn、mesos://HOST:PORT、k8s://HOST:PORT等):Created using cluster managertaskScheduler、schedulerBackend

2、调用taskScheduler的initialize方法,将backend的引用绑定到scheduler;Create scheduling pools based on scheduling policiesrootPool;

3、返回(backend, scheduler);

object SparkContext extends Logging {

private def createTaskScheduler(

sc: SparkContext,

master: String,

deployMode: String): (SchedulerBackend, TaskScheduler) = {

import SparkMasterRegex._

// When running locally, don't try to re-execute tasks on failure.

//失败重试次数

val MAX_LOCAL_TASK_FAILURES = 1

//根据不同master(部署模式)匹配不同的taskScheduler和schedulerBackend实现

master match {

//local模式:TaskSchedulerImpl、LocalSchedulerBackend

case "local" =>

val scheduler = new TaskSchedulerImpl(sc, MAX_LOCAL_TASK_FAILURES, isLocal = true)

val backend = new LocalSchedulerBackend(sc.getConf, scheduler, 1)

scheduler.initialize(backend)

(backend, scheduler)

//local[N] 或local[*]模式:TaskSchedulerImpl、LocalSchedulerBackend

//The degree of parallelism is specified by the parameterN确定,如果参数为*,根据javaThe virtual machine is availablecpu核数确定

case LOCAL_N_REGEX(threads) =>

def localCpuCount: Int = Runtime.getRuntime.availableProcessors()

// local[*] estimates the number of cores on the machine; local[N] uses exactly N threads.

val threadCount = if (threads == "*") localCpuCount else threads.toInt

if (threadCount <= 0) {

throw new SparkException(s"Asked to run locally with $threadCount threads")

}

val scheduler = new TaskSchedulerImpl(sc, MAX_LOCAL_TASK_FAILURES, isLocal = true)

val backend = new LocalSchedulerBackend(sc.getConf, scheduler, threadCount)

scheduler.initialize(backend)

(backend, scheduler)

//local[K,F]模式:TaskSchedulerImpl、LocalSchedulerBackend

//The degree of parallelism is specified by the parameterN确定,如果参数为*,根据javaThe virtual machine is availablecpu核数确定

case LOCAL_N_FAILURES_REGEX(threads, maxFailures) =>

def localCpuCount: Int = Runtime.getRuntime.availableProcessors()

// local[*, M] means the number of cores on the computer with M failures

// local[N, M] means exactly N threads with M failures

val threadCount = if (threads == "*") localCpuCount else threads.toInt

val scheduler = new TaskSchedulerImpl(sc, maxFailures.toInt, isLocal = true)

val backend = new LocalSchedulerBackend(sc.getConf, scheduler, threadCount)

scheduler.initialize(backend)

(backend, scheduler)

//spark://(.*)模式:TaskSchedulerImpl、StandaloneSchedulerBackend

case SPARK_REGEX(sparkUrl) =>

val scheduler = new TaskSchedulerImpl(sc)

//可能存在多个HOST:PORTCombinations are separated by commas

val masterUrls = sparkUrl.split(",").map("spark://" + _)

val backend = new StandaloneSchedulerBackend(scheduler, sc, masterUrls)

scheduler.initialize(backend)

(backend, scheduler)

//local-cluster[N, cores, memory]模式:TaskSchedulerImpl、StandaloneSchedulerBackend

//Shut down the local cluster through the callback function

case LOCAL_CLUSTER_REGEX(numSlaves, coresPerSlave, memoryPerSlave) =>

// Check to make sure memory requested <= memoryPerSlave. Otherwise Spark will just hang.

val memoryPerSlaveInt = memoryPerSlave.toInt

if (sc.executorMemory > memoryPerSlaveInt) {

throw new SparkException(

"Asked to launch cluster with %d MB RAM / worker but requested %d MB/worker".format(

memoryPerSlaveInt, sc.executorMemory))

}

val scheduler = new TaskSchedulerImpl(sc)

val localCluster = new LocalSparkCluster(

numSlaves.toInt, coresPerSlave.toInt, memoryPerSlaveInt, sc.conf)

val masterUrls = localCluster.start()

val backend = new StandaloneSchedulerBackend(scheduler, sc, masterUrls)

scheduler.initialize(backend)

backend.shutdownCallback = (backend: StandaloneSchedulerBackend) => {

localCluster.stop()

}

(backend, scheduler)

//其他情况(yarn、mesos://HOST:PORT、k8s://HOST:PORT等):Created using cluster managertaskScheduler、schedulerBackend

case masterUrl =>

val cm = getClusterManager(masterUrl) match {

case Some(clusterMgr) => clusterMgr

case None => throw new SparkException("Could not parse Master URL: '" + master + "'")

}

try {

val scheduler = cm.createTaskScheduler(sc, masterUrl)

val backend = cm.createSchedulerBackend(sc, masterUrl, scheduler)

cm.initialize(scheduler, backend)

(backend, scheduler)

} catch {

case se: SparkException => throw se

case NonFatal(e) =>

throw new SparkException("External scheduler cannot be instantiated", e)

}

}

}

}

2.3.scheduler.initialize方法

initialize方法将backend的引用绑定到scheduler,Create scheduling pools based on scheduling policiesrootPool;

Scheduling mode byspark.scheduler.mode参数指定,Default scheduling modeFIFO;

private[spark] class TaskSchedulerImpl(

//Default scheduling mode:FIFO

//可由spark.scheduler.mode参数指定

private val schedulingModeConf = conf.get(SCHEDULER_MODE_PROPERTY, SchedulingMode.FIFO.toString)

val schedulingMode: SchedulingMode =

try {

SchedulingMode.withName(schedulingModeConf.toUpperCase(Locale.ROOT))

} catch {

case e: java.util.NoSuchElementException =>

throw new SparkException(s"Unrecognized $SCHEDULER_MODE_PROPERTY: $schedulingModeConf")

}

//Default scheduling pool

val rootPool: Pool = new Pool("", schedulingMode, 0, 0)

def initialize(backend: SchedulerBackend) {

//将backend的引用绑定到scheduler

this.backend = backend

//Create scheduling pools based on different scheduling policies

schedulableBuilder = {

schedulingMode match {

case SchedulingMode.FIFO =>

new FIFOSchedulableBuilder(rootPool)

case SchedulingMode.FAIR =>

new FairSchedulableBuilder(rootPool, conf)

case _ =>

throw new IllegalArgumentException(s"Unsupported $SCHEDULER_MODE_PROPERTY: " +

s"$schedulingMode")

}

}

schedulableBuilder.buildPools()

}

}

3.实例化DAGScheduler

在实例化过程中,通过sparkContext对象,将taskScheduler、listenerBus、mapOutputTracker、blockManagerMaster、envThe application of the object is bound todagScheduler上;

private[spark] class DAGScheduler(

private[scheduler] val sc: SparkContext,

private[scheduler] val taskScheduler: TaskScheduler,

listenerBus: LiveListenerBus,

mapOutputTracker: MapOutputTrackerMaster,

blockManagerMaster: BlockManagerMaster,

env: SparkEnv,

clock: Clock = new SystemClock())

extends Logging {

def this(sc: SparkContext, taskScheduler: TaskScheduler) = {

this(

sc,

taskScheduler,

sc.listenerBus,

sc.env.mapOutputTracker.asInstanceOf[MapOutputTrackerMaster],

sc.env.blockManager.master,

sc.env)

}

def this(sc: SparkContext) = this(sc, sc.taskScheduler)

}

4.taskScheduler.start

override def start() {

//调用schedulerBackend的start方法

backend.start()

//For non-native mode,根据spark.speculationThe parameter determines whether a speculative execution thread needs to be started

if (!isLocal && conf.getBoolean("spark.speculation", false)) {

logInfo("Starting speculative execution thread")

speculationScheduler.scheduleWithFixedDelay(new Runnable {

override def run(): Unit = Utils.tryOrStopSparkContext(sc) {

checkSpeculatableTasks()

}

}, SPECULATION_INTERVAL_MS, SPECULATION_INTERVAL_MS, TimeUnit.MILLISECONDS)

}

}

5.参考资料

Spark 源码解读(四)SparkContextThe initialization of the task scheduler is createdTaskScheduler

Spark 源码分析(三): SparkContext 初始化之 TaskScheduler 创建与启动

【Spark内核源码】SparkContextInterpretation of some methods

边栏推荐

- Getting Started Documentation 12 webserve + Hot Updates

- Getting Started 05 Using cb() to indicate that the current task is complete

- Why can't I add a new hard disk to scan?How to solve?

- Getting Started Documentation 10 Resource Mapping

- [Paper Intensive Reading] Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation (R-CNN)

- 论那些给得出高薪的游戏公司底气到底在哪里?

- lvm逻辑卷及磁盘配额

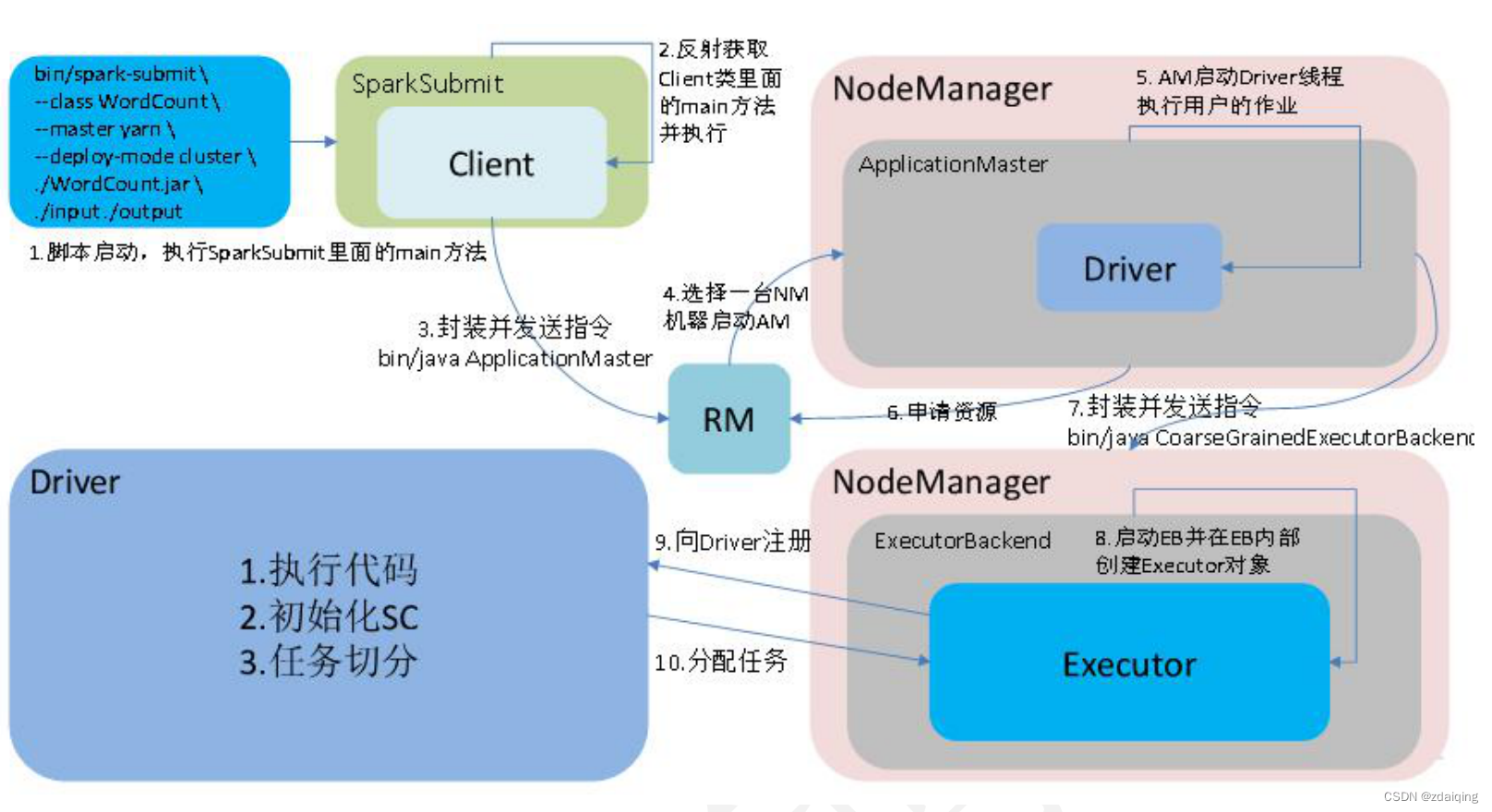

- spark源码-任务提交流程之-3-ApplicationMaster

- 入门文档07 分阶段输出

- OpenCV3.0 兼容VS2010与VS2013的问题

猜你喜欢

随机推荐

Why can't I add a new hard disk to scan?How to solve?

OpenCV3.0 兼容VS2010与VS2013的问题

[Day6] File system permission management, file special permissions, hidden attributes

【Day1】VMware软件安装

ROS video tutorial

偷题——腾讯游戏开发面试问题及解答

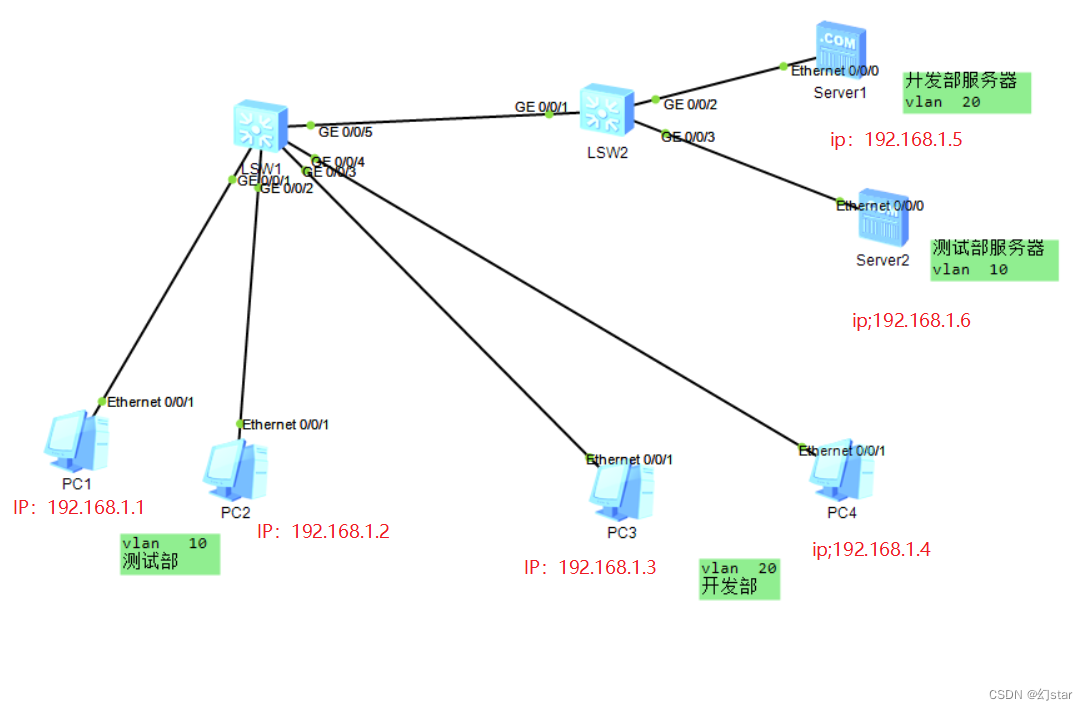

IP数据包格式(ICMP协议与ARP协议)

D39_向量

Cocos Creator小游戏案例《棍子士兵》

spark source code - task submission process - 2-YarnClusterApplication

Cocos Creator开发中的事件响应

Hugo搭建个人博客

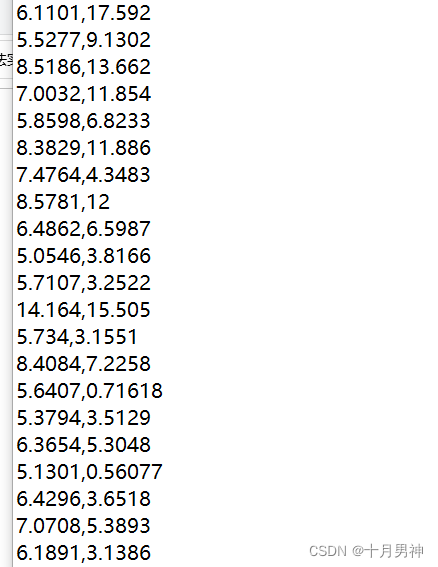

【机器学习】1单变量线性回归

CIPU,对云计算产业有什么影响

Servlet跳转到JSP页面,转发和重定向

Spark source code-task submission process-6.1-sparkContext initialization-create spark driver side execution environment SparkEnv

Apache配置反向代理

无影云桌面

来来来,一文让你读懂Cocos Creator如何读写JSON文件

spark operator-wholeTextFiles operator