当前位置:网站首页>Tips and tricks of image segmentation summarized from 39 Kabul competitions

Tips and tricks of image segmentation summarized from 39 Kabul competitions

2022-07-05 12:41:00 【Zhiyuan community】

The author took part in 39 individual Kaggle match , According to the order of the whole competition , Summarized the data processing before the game , Model training , And post-processing can help everyone tips and tricks, A lot of skills and experience , Now I want to share it with you .

Imagine , If you can get all the tips and tricks, You need to go to a Kaggle match . I've passed 39 individual Kaggle match , Include :

- Data Science Bowl 2017 – $1,000,000

- Intel & MobileODT Cervical Cancer Screening – $100,000

- 2018 Data Science Bowl – $100,000

- Airbus Ship Detection Challenge – $60,000

- Planet: Understanding the Amazon from Space – $60,000

- APTOS 2019 Blindness Detection – $50,000

- Human Protein Atlas Image Classification – $37,000

- SIIM-ACR Pneumothorax Segmentation – $30,000

- Inclusive Images Challenge – $25,000

Now dig up all this knowledge for you !

External data

- Use LUng Node Analysis Grand Challenge data , Because this dataset contains annotation details from radiology .

- Use LIDC-IDRI data , Because it has all the radiologic descriptions that found the tumor .

- Use Flickr CC, Wikipedia universal data set

- Use Human Protein Atlas Dataset

- Use IDRiD Data sets

Data exploration and intuition

- Use 0.5 The threshold value for 3D Segmentation and clustering

- Make sure there are no differences in the label distribution between the training set and the test set

Preprocessing

- Use DoG(Difference of Gaussian) methods blob testing , Use skimage The method in .

- Using a patch Input for training , In order to reduce training time .

- Use cudf Load data , Do not use Pandas, Because reading data is faster .

- Make sure all images have the same orientation .

- In histogram equalization , Use contrast limit .

- Use OpenCV General image preprocessing .

- Using automated active learning , Manually add .

- Scale all images to the same resolution , You can use the same model to scan different thicknesses .

- Normalize the scanned image to 3D Of numpy Array .

- For a single image, the dark channel prior method is used for image defogging .

- Convert all images into Hounsfield Company ( Concepts in radiology ).

- Use RGBY To find redundant images .

- Develop a sampler , Make the label more balanced .

- Fake the test image to improve the score .

- The image /Mask Downsampling to 320x480.

- Histogram equalization (CLAHE) When you use kernel size by 32×32

- take DCM Turn into PNG.

- When there are redundant images , Calculate... For each image md5 hash value .

Data to enhance

- Use albumentations Data enhancement .

- Use random 90 Degree of rotation .

- Use horizontal flip , Flip up and down .

- You can try larger geometric transformations : Elastic transformation , Affine transformation , Spline affine transformation , Occipital distortion .

- Use random HSV.

- Use loss-less Enhance to generalize , Prevent useful image information from appearing big loss.

- application channel shuffling.

- Data enhancement based on the frequency of categories .

- Use Gaussian noise .

- Yes 3D Image use lossless Rearrangement for data enhancement .

- 0 To 45 Degree random rotation .

- from 0.8 To 1.2 Random scaling .

- Brightness conversion .

- Random change hue And saturation .

- Use D4:https://en.wikipedia.org/wiki/Dihedral_group enhance .

- In histogram equalization, use contrast limitation .

- Use AutoAugment:https://arxiv.org/pdf/1805.09501.pdf Enhancement strategy .

Model

structure

- Use U-net As infrastructure , And adjust to fit 3D The input of .

- Use automated active learning and add manual tagging .

- Use inception-ResNet v2 architecture Different receptive field training characteristics were used in the structure .

- Use Siamese networks Conduct confrontation training .

- Use _ResNet50_, Xception, Inception ResNet v2 x 5, The last layer uses full connectivity .

- Use global max-pooling layer, Whatever input size , Returns a fixed length output .

- Use stacked dilated convolutions.

VoxelNet.

- stay LinkNet To replace the addition with splicing and conv1x1.

- Generalized mean pooling.

- Use 224x224x3 The input of , use Keras NASNetLarge Training models from scratch .

- Use 3D Convolution network .

- Use ResNet152 As a pre training feature extractor .

- take ResNet The last full connection layer of is replaced by 3 One use dropout The full connection layer of .

- stay decoder Transpose convolution is used in .

- Use VGG As infrastructure .

- Use C3D The Internet , Use adjusted receptive fields, At the end of the network 64 unit bottleneck layer .

- Use... With pre training weights UNet The structure of type is in 8bit RGB Improve convergence and binary segmentation performance on the input image .

- Use LinkNet, Because it's fast and saves memory .

MASKRCNN

- BN-Inception

- Fast Point R-CNN

- Seresnext

- UNet and Deeplabv3

- Faster RCNN

- SENet154

- ResNet152

- NASNet-A-Large

- EfficientNetB4

- ResNet101

- GAPNet

- PNASNet-5-Large

- Densenet121

- AC-GAN

- XceptionNet (96), XceptionNet (299), Inception v3 (139), InceptionResNet v2 (299), DenseNet121 (224)

- AlbuNet (resnet34) from ternausnets

- SpaceNet

- Resnet50 from selim_sef SpaceNet 4

- SCSEUnet (seresnext50) from selim_sef SpaceNet 4

- A custom Unet and Linknet architecture

- FPNetResNet50 (5 folds)

- FPNetResNet101 (5 folds)

- FPNetResNet101 (7 folds with different seeds)

- PANetDilatedResNet34 (4 folds)

- PANetResNet50 (4 folds)

hardware setup

- Use of the AWS GPU instance p2.xlarge with a NVIDIA K80 GPU

- Pascal Titan-X GPU

- Use of 8 TITAN X GPUs

- 6 GPUs: 2_1080Ti + 4_1080

- Server with 8×NVIDIA Tesla P40, 256 GB RAM and 28 CPU cores

- Intel Core i7 5930k, 2×1080, 64 GB of RAM, 2x512GB SSD, 3TB HDD

- GCP 1x P100, 8x CPU, 15 GB RAM, SSD or 2x P100, 16x CPU, 30 GB RAM

- NVIDIA Tesla P100 GPU with 16GB of RAM

- Intel Core i7 5930k, 2×1080, 64 GB of RAM, 2x512GB SSD, 3TB HDD

- 980Ti GPU, 2600k CPU, and 14GB RAM

Loss function

- Dice Coefficient , Because it works well on unbalanced data .

- Weighted boundary loss The aim is to reduce segmentation and prediction ground truth Distance between .

- MultiLabelSoftMarginLoss Use one-versus-all Loss optimized multiple tags .

- Balanced cross entropy (BCE) with logit loss The weights of positive and negative samples are assigned by coefficients .

- Lovasz be based on sub-modular Lost convex Lovasz Extend to optimize the average directly IoU Loss .

- FocalLoss + Lovasz take Focal loss and Lovasz losses Add up to get .

- Arc margin loss By adding margin To maximize the separability of face categories .

- Npairs loss Calculation y_true and y_pred Between npairs Loss .

- take BCE and Dice loss combined .

- LSEP – A sort of pairwise sort loss , Smooth everywhere, so it's easy to optimize .

- Center loss At the same time, learn the feature centers of each category , And punish the samples which are too far away from the feature center .

- Ring Loss The standard loss function is enhanced , Such as Softmax.

- Hard triplet loss Training network for feature embedding , Maximize the distance between features of different categories .

- 1 + BCE – Dice Contains BCE and DICE Loss plus 1.

- Binary cross-entropy – log(dice) Binary cross entropy minus dice loss Of log.

- BCE, dice and focal A combination of losses .

- BCE + DICE - Dice Loss smoothed by calculation dice The coefficient gives .

- Focal loss with Gamma 2 The upgrade of standard cross entropy loss .

- BCE + DICE + Focal – 3 Add up the losses .

- Active Contour Loss Added area and size information , And integrated into the deep learning model .

- 1024 * BCE(results, masks) + BCE(cls, cls_target)

- Focal + kappa – Kappa It's a loss for multi category classification , Here and Focal loss Add up .

- ArcFaceLoss — For face recognition Additive Angular Margin Loss.

- soft Dice trained on positives only – Using the prediction probability Soft Dice.

- 2.7 * BCE(pred_mask, gt_mask) + 0.9 * DICE(pred_mask, gt_mask) + 0.1 * BCE(pred_empty, gt_empty) A custom loss .

- nn.SmoothL1Loss().

- Use Mean Squared Error objective function, In some scenarios, it is better than binary cross entropy loss .

边栏推荐

- How to design an interface?

- Xi IO flow

- MySQL splits strings for conditional queries

- [superhard core] is the core technology of redis

- How can beginners learn flutter efficiently?

- Instance + source code = see through 128 traps

- ZABBIX monitors mongodb templates and configuration operations

- Basic operations of MySQL data table, addition, deletion and modification & DML

- GPS data format conversion [easy to understand]

- MySQL transaction

猜你喜欢

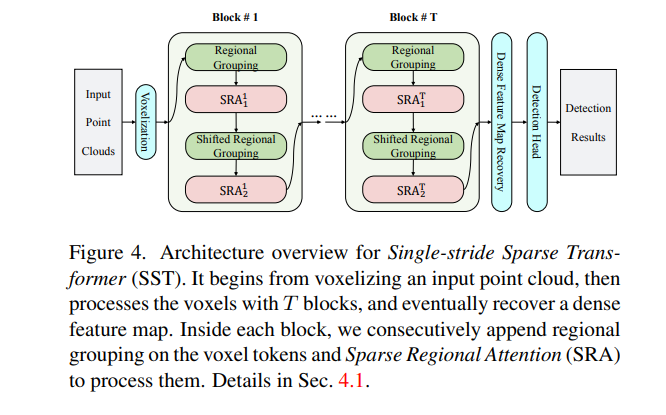

CVPR 2022 | 基于稀疏 Transformer 的单步三维目标识别器

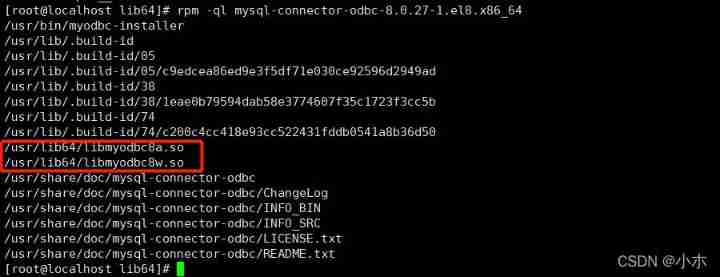

ZABBIX ODBC database monitoring

ActiveMQ installation and deployment simple configuration (personal test)

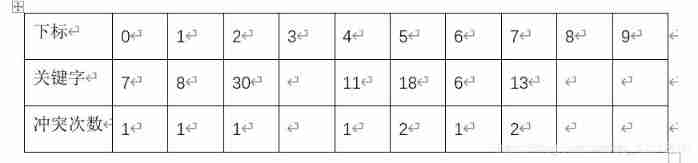

Average lookup length when hash table lookup fails

Automated test lifecycle

A guide to threaded and asynchronous UI development in the "quick start fluent Development Series tutorials"

VoneDAO破解组织发展效能难题

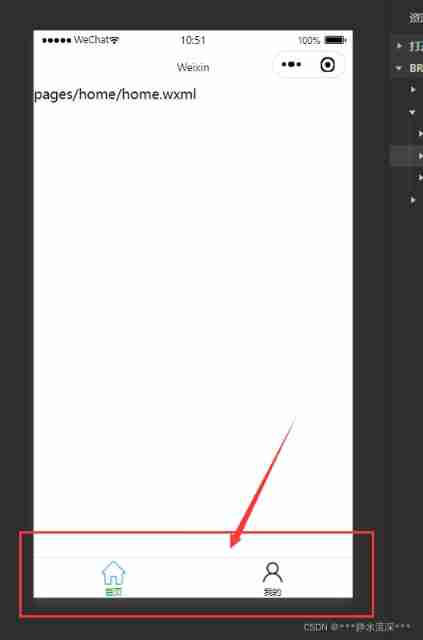

Tabbar configuration at the bottom of wechat applet

The evolution of mobile cross platform technology

How can beginners learn flutter efficiently?

随机推荐

Pytoch implements tf Functions of the gather() function

ZABBIX customized monitoring disk IO performance

ZABBIX agent2 monitors mongodb templates and configuration operations

Experimental design - using stack to realize calculator

CVPR 2022 | 基于稀疏 Transformer 的单步三维目标识别器

Conversion du format de données GPS [facile à comprendre]

The evolution of mobile cross platform technology

Correct opening method of redis distributed lock

Array cyclic shift problem

Learn JVM garbage collection 05 - root node enumeration, security points, and security zones (hotspot)

OPPO小布推出预训练大模型OBERT,晋升KgCLUE榜首

Redis highly available sentinel cluster

Iterator details in list... Interview pits

Time conversion error

MySQL function

MySQL installation, Windows version

MySQL view

ZABBIX agent2 monitors mongodb nodes, clusters and templates (official blog)

Volatile instruction rearrangement and why instruction rearrangement is prohibited

ZABBIX monitors mongodb (template and deployment operations)