
A Plain-Language, Detailed Explanation of Deconvolution and the Key Parameters of nn.ConvTranspose2d

2022-07-07 10:07:00 【iioSnail】


What deconvolution is for

An ordinary convolution usually turns a large image into a smaller one. Deconvolution (more precisely, transposed convolution) does the reverse: it turns a small image into a larger one.

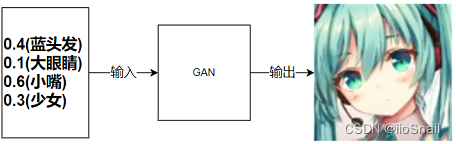

What is that good for? Quite a lot. In a generative network such as a GAN, for example, we give the network a vector and ask it to generate an image.

So we need a way to keep expanding that vector until it finally reaches the size of the image.
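As a rough illustration of this expansion, the sketch below stacks a few `nn.ConvTranspose2d` layers to grow a latent vector into a small image. The latent size (100), the channel counts, and the 16x16 target are arbitrary assumptions chosen for the example, not values from any particular GAN.

```python
import torch
from torch import nn

# A latent vector viewed as a 1x1 "image" with 100 channels (sizes are illustrative).
z = torch.randn(1, 100, 1, 1)

# Each layer enlarges the spatial size; the channel counts are arbitrary choices.
upsample = nn.Sequential(
    nn.ConvTranspose2d(100, 64, kernel_size=4, stride=1, padding=0),  # 1x1 -> 4x4
    nn.ConvTranspose2d(64, 32, kernel_size=4, stride=2, padding=1),   # 4x4 -> 8x8
    nn.ConvTranspose2d(32, 3, kernel_size=4, stride=2, padding=1),    # 8x8 -> 16x16
)

image = upsample(z)
print(image.shape)  # torch.Size([1, 3, 16, 16])
```

A real generator would normally add normalization and activation layers between these steps; they are left out here to keep the size changes visible.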

Padding concepts in convolution

Before looking at deconvolution, let's review a few padding concepts from ordinary convolution, because deconvolution uses the same concepts later.

No Padding

No Padding means padding is 0. In this case the image shrinks after convolution, as you probably already know.
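To make the shrinking concrete, here is a small sketch that compares PyTorch's output size with the usual size formula for one spatial dimension. The helper `conv_out_size` is a hypothetical name introduced only for this illustration.

```python
import torch
from torch import nn

def conv_out_size(i: int, k: int, p: int = 0, s: int = 1) -> int:
    """Output size along one spatial dimension of an ordinary convolution."""
    return (i + 2 * p - k) // s + 1

inputs = torch.rand(1, 1, 32, 32)
conv = nn.Conv2d(1, 1, kernel_size=5, padding=0)
outputs = conv(inputs)

# With no padding, a 5x5 kernel shrinks 32 to 32 - 5 + 1 = 28.
print(outputs.shape[-1], conv_out_size(32, 5))  # 28 28
```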

In the figures below, blue is the input image and green is the output image.

Half(Same) Padding

Half Padding is also known as Same Padding. Take Same first: it means the output image has the same size as the input image. With stride 1, keeping the input and output sizes equal requires $p=\lfloor k/2 \rfloor$ (for an odd kernel size $k$). That is where the name Half comes from: the padding is half of kernel_size.

PyTorch supports same padding, for example:

import torch

from torch import nn

inputs = torch.rand(1, 3, 32, 32)

outputs = nn.Conv2d(in_channels=3, out_channels=3, kernel_size=5, padding='same')(inputs)

outputs.size()

torch.Size([1, 3, 32, 32])
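The sketch below checks the half-padding rule by passing `padding=k // 2` explicitly for several odd kernel sizes. The even-kernel case at the end is an extra illustration: a symmetric integer padding then overshoots by one pixel.

```python
import torch
from torch import nn

inputs = torch.rand(1, 3, 32, 32)

# Odd kernels: padding = k // 2 keeps the 32x32 size at stride 1.
sizes = {}
for k in (1, 3, 5, 7):
    conv = nn.Conv2d(3, 3, kernel_size=k, padding=k // 2)
    sizes[k] = tuple(conv(inputs).shape[-2:])
print(sizes)  # every entry is (32, 32)

# Even kernel: k = 4 with padding = 2 gives 32 + 4 - 4 + 1 = 33.
even_size = tuple(nn.Conv2d(3, 3, kernel_size=4, padding=2)(inputs).shape[-2:])
print(even_size)  # (33, 33)
```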

Full Padding

Full Padding is reached when $p=k-1$. Why? Look at the Full Padding figure, where $k=3$ and $p=2$: when the kernel sits on the first cell, only one input unit takes part in the convolution. If we went to $p=3$, some convolution windows would contain no input units at all; their result is 0, which is the same as not computing them.

We can test this in PyTorch. First, a Full Padding convolution:

inputs = torch.rand(1, 1, 2, 2)

outputs = nn.Conv2d(in_channels=1, out_channels=1, kernel_size=3, padding=2, bias=False)(inputs)

outputs

tensor([[[[-0.0302, -0.0356, -0.0145, -0.0203],

[-0.0515, -0.2749, -0.0265, -0.1281],

[ 0.0076, -0.1857, -0.1314, -0.0838],

[ 0.0187, 0.2207, 0.1328, -0.2150]]]],

grad_fn=<SlowConv2DBackward0>)

The output here looks normal. Now increase padding by one more, to 3:

inputs = torch.rand(1, 1, 2, 2)

outputs = nn.Conv2d(in_channels=1, out_channels=1, kernel_size=3, padding=3, bias=False)(inputs)

outputs

tensor([[[[ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000],

[ 0.0000, 0.1262, 0.2506, 0.1761, 0.3091, 0.0000],

[ 0.0000, 0.3192, 0.6019, 0.5570, 0.3143, 0.0000],

[ 0.0000, 0.1465, 0.0853, -0.1829, -0.1264, 0.0000],

[ 0.0000, -0.0703, -0.2774, -0.3261, -0.1201, 0.0000],

[ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000]]]],

grad_fn=<SlowConv2DBackward0>)

The final output now has an extra ring of zeros around it. These are the windows that contain no part of the input image, so the computation there is wasted.
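Rather than reading the zeros off the printed tensor, the ring can also be checked programmatically. This is a minimal sketch that uses a small tolerance instead of exact equality, in case a backend does not produce exact zeros.

```python
import torch
from torch import nn

inputs = torch.rand(1, 1, 2, 2)
conv = nn.Conv2d(1, 1, kernel_size=3, padding=3, bias=False)
out = conv(inputs).detach()[0, 0]  # drop the batch and channel dimensions

# Collect the outermost row and column on every side of the 6x6 output.
border = torch.cat([out[0, :], out[-1, :], out[:, 0], out[:, -1]])

print(out.shape)                         # torch.Size([6, 6])
print(bool(border.abs().max() < 1e-6))   # True: the outer ring is all zeros
```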

Deconvolution

Deconvolution is really the same operation as convolution; only the way the parameters map onto it changes a little. For example:

This is a deconvolution with padding=0. At this point you will surely ask: the padding here is obviously 2, so why call it 0? Read on.

The padding parameter in deconvolution

In ordinary convolution, padding ranges over $[0, k-1]$: $p=0$ is called No Padding and $p=k-1$ is called Full Padding.

In deconvolution the padding $p'$ is exactly the opposite, that is, $p' = k-1-p$. Passing $p'=0$ is equivalent to passing $p=k-1$ in ordinary convolution, and passing $p'=k-1$ is equivalent to passing $p=0$.
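A quick size check supports this relation. It assumes stride 1, dilation 1 and no output padding, with $i$ the input size and $o$ the output size along one dimension. For an ordinary convolution with padding $p$:

$$o = i + 2p - k + 1$$

Substituting $p = k-1-p'$ gives

$$o = i + 2(k-1-p') - k + 1 = i + k - 1 - 2p'$$

which is the output size PyTorch documents for nn.ConvTranspose2d under the same assumptions, $(i-1) - 2p' + (k-1) + 1$. For $i=32$, $k=3$, $p'=2$ both expressions give $30$.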

We can use the following experiments to verify :

inputs = torch.rand(1, 1, 32, 32)

# Deconvolution with p'=2, which is Full Padding in deconvolution terms

transposed_conv = nn.ConvTranspose2d(in_channels=1, out_channels=1, kernel_size=3, padding=2, bias=False)

# Convolution with p=0, which is No Padding in convolution terms

conv = nn.Conv2d(in_channels=1, out_channels=1, kernel_size=3, padding=0, bias=False)

# Give the deconvolution the convolution kernel flipped along both spatial axes (rotated 180 degrees),

# so that the two layers compute the same thing

transposed_conv.load_state_dict({'weight': torch.flip(conv.weight.detach(), dims=[2, 3])})

# Forward pass

transposed_conv_outputs = transposed_conv(inputs)

conv_outputs = conv(inputs)

# Print the sizes of the deconvolution output and the convolution output

print("transposed_conv_outputs.size", transposed_conv_outputs.size())

print("conv_outputs.size", conv_outputs.size())

# Check whether the output values agree.

# (The two layers may differ by floating-point rounding, so == is too strict;

# comparing the absolute difference against a small tolerance plays the role of ==)

(transposed_conv_outputs - conv_outputs).abs() < 0.01

transposed_conv_outputs.size: torch.Size([1, 1, 30, 30])

conv_outputs.size: torch.Size([1, 1, 30, 30])

tensor([[[[True, True, True, True, True, True, True, True, True, True, True,

... (remaining output omitted)

As the example shows, deconvolution and convolution are really the same operation, with only a few differences:

- Deconvolution applies the kernel flipped by 180 degrees (this corresponds to transposing the convolution matrix), but we normally don't need to care about this.

- The deconvolution padding parameter $p'$ and the ordinary convolution padding $p$ are related by $p'=k-1-p$. In other words, no padding in convolution corresponds to full padding in deconvolution, and full padding in convolution corresponds to no padding in deconvolution.

- Point 2 also suggests that $p'$ in deconvolution cannot grow without bound: since $p \ge 0$, its maximum would be $k-1$. (In fact, this is not the case.)
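The first two points can be combined into a small emulation: a stride-1 deconvolution reproduced with an ordinary `F.conv2d` call. This is a sketch for the single-channel case only; with several channels the input and output channel axes of the weight would also have to be swapped, which is not shown here. The names `p_t`, `flipped` and `emulated` are made up for the example.

```python
import torch
import torch.nn.functional as F
from torch import nn

k, p_t = 3, 1  # kernel size and the padding passed to ConvTranspose2d (p')
inputs = torch.rand(1, 1, 8, 8)

transposed_conv = nn.ConvTranspose2d(1, 1, kernel_size=k, padding=p_t, bias=False)
expected = transposed_conv(inputs).detach()

# Equivalent ordinary convolution: flipped kernel, padding p = k - 1 - p'.
flipped = torch.flip(transposed_conv.weight.detach(), dims=[2, 3])
emulated = F.conv2d(inputs, flipped, padding=k - 1 - p_t)

print(expected.shape, emulated.shape)
print(torch.allclose(expected, emulated, atol=1e-6))
```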

A digression, which you can skip if you are not interested. The third point above said that the maximum value of $p'$ is $k-1$, but if you experiment with PyTorch you will find that $p'$ can exceed this value. What happens then is equivalent to cropping the original image.

In PyTorch's nn.Conv2d, padding cannot be negative; it raises an error. If you ever did need a negative padding (there is probably no real demand for it), deconvolution can achieve it, for example:

inputs = torch.ones(1, 1, 3, 3)

transposed_conv = nn.ConvTranspose2d(in_channels=1, out_channels=1, kernel_size=1, padding=1, bias=False)

print(transposed_conv.state_dict())

outputs = transposed_conv(inputs)

print(outputs)

OrderedDict([('weight', tensor([[[[0.7700]]]]))])

tensor([[[[0.7700]]]], grad_fn=<SlowConvTranspose2DBackward0>)

In the example above, the image fed to the network is:

$$\begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{bmatrix}$$

But we passed $p'=1, k=1$, which corresponds to $p=k-1-p'=-1$, i.e. Conv2d(padding=-1). So the convolution is effectively applied to the image $[1]$ (one ring of pixels has been cropped away), and the final output size is $(1,1,1,1)$.

This digression has little practical use; treat it as a way to better understand the padding parameter of deconvolution.
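The cropping interpretation is easier to see with an input whose pixels are all different. In the sketch below (the input values 0 to 8 are an arbitrary choice), the deconvolution output is compared with an explicit crop of one pixel per side, scaled by the single 1x1 weight.

```python
import torch
from torch import nn

inputs = torch.arange(9.0).reshape(1, 1, 3, 3)
transposed_conv = nn.ConvTranspose2d(1, 1, kernel_size=1, padding=1, bias=False)
outputs = transposed_conv(inputs).detach()

# Crop one pixel from every border, then scale by the single 1x1 weight.
w = transposed_conv.weight.detach().reshape(())
cropped = inputs[:, :, 1:-1, 1:-1] * w

print(outputs.shape)                     # torch.Size([1, 1, 1, 1])
print(torch.allclose(outputs, cropped))  # True: only the centre pixel (value 4) survives
```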

The stride parameter in deconvolution

The name stride is somewhat misleading for deconvolution, and I don't think it is a good one. The figure below shows what it actually means:

On the left is a deconvolution with stride=1 (called No Stride), on the right one with stride=2. The difference is that zeros are inserted between the pixels of the original image. That's right: in deconvolution, the stride parameter inserts zeros between every two adjacent pixels of the input image, and the number of zeros inserted is stride - 1.

For example, if we apply a deconvolution with stride=3 to a 32x32 image, two zeros are inserted between every two adjacent pixels, so the image grows to $32+31\times 2=94$ pixels per side. Checking with code:

inputs = torch.ones(1, 1, 32, 32)

transposed_conv = nn.ConvTranspose2d(in_channels=1, out_channels=1, kernel_size=3, padding=2, stride=3, bias=False)

outputs = transposed_conv(inputs)

print(outputs.size())

torch.Size([1, 1, 92, 92])

Let's work through it. The deconvolution here uses Full Padding (equivalent to no padding around the edge of the original image), and stride is 3, which inserts two zeros between every two adjacent pixels, so the original image becomes 94x94. The kernel size is 3, so the final output size is $94-3+1=92$.
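The zero-insertion description can be reproduced by hand. The sketch below builds the 94x94 zero-filled image explicitly and runs an ordinary convolution over it with the flipped kernel; it covers the single-channel case only, and the names `dilated`, `flipped` and `emulated` are made up for the example.

```python
import torch
import torch.nn.functional as F
from torch import nn

k, p_t, s = 3, 2, 3  # kernel size, deconvolution padding p', stride
inputs = torch.rand(1, 1, 32, 32)

transposed_conv = nn.ConvTranspose2d(1, 1, kernel_size=k, padding=p_t, stride=s, bias=False)
expected = transposed_conv(inputs).detach()

# Insert s - 1 zeros between neighbouring pixels: 32 + 31 * 2 = 94.
size = (inputs.shape[-1] - 1) * s + 1
dilated = torch.zeros(1, 1, size, size)
dilated[:, :, ::s, ::s] = inputs

# Ordinary stride-1 convolution with the flipped kernel and padding p = k - 1 - p' = 0.
flipped = torch.flip(transposed_conv.weight.detach(), dims=[2, 3])
emulated = F.conv2d(dilated, flipped, padding=k - 1 - p_t)

print(dilated.shape[-1], emulated.shape[-1])  # 94 92
print(torch.allclose(expected, emulated, atol=1e-6))
```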

Deconvolution summary

1. The purpose of deconvolution is to enlarge the original image.

2. Deconvolution differs very little from ordinary convolution. The main differences are:

2.1 The meaning of padding changes: the deconvolution padding parameter is $p' = k-1-p$, where $k$ is kernel_size and $p$ is the padding of the ordinary convolution.

2.2 The meaning of stride is different: in deconvolution, stride inserts zeros inside the input image, and the number of zeros inserted between every two adjacent pixels is stride-1.

2.3 Apart from these two parameters, the other parameters behave the same.
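Putting 2.1 and 2.2 together gives a single size formula, sketched below and checked against PyTorch for a few parameter combinations. It assumes dilation 1 and output_padding 0; `conv_transpose_out_size` is a hypothetical helper name used only here.

```python
import torch
from torch import nn

def conv_transpose_out_size(i: int, k: int, p: int = 0, s: int = 1) -> int:
    """Output size along one dimension (dilation 1, output_padding 0)."""
    return (i - 1) * s - 2 * p + k

inputs = torch.rand(1, 1, 32, 32)
results = []
for k, p, s in [(3, 0, 1), (3, 2, 1), (3, 2, 3), (4, 1, 2)]:
    layer = nn.ConvTranspose2d(1, 1, kernel_size=k, padding=p, stride=s)
    actual = layer(inputs).shape[-1]
    results.append((actual, conv_transpose_out_size(32, k, p, s)))

print(results)  # [(34, 34), (30, 30), (92, 92), (64, 64)]
```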

References

Convolution arithmetic: https://github.com/vdumoulin/conv_arithmetic

A guide to convolution arithmetic for deep learning: https://arxiv.org/pdf/1603.07285.pdf

nn.ConvTranspose2d official documentation: https://pytorch.org/docs/stable/generated/torch.nn.ConvTranspose2d.html

边栏推荐

- uboot机构简介

- Writing file types generated by C language

- C# XML的应用

- Selenium+bs4 parsing +mysql capturing BiliBili Tarot data

- There is a problem using Chinese characters in SQL. Who has encountered it? Such as value & lt; & gt;` None`

- 反卷积通俗详细解析与nn.ConvTranspose2d重要参数解释

- Become a "founder" and make reading a habit

- flinkcdc采集oracle在snapshot阶段一直失败,这个得怎么调整啊?

- 第十四次试验

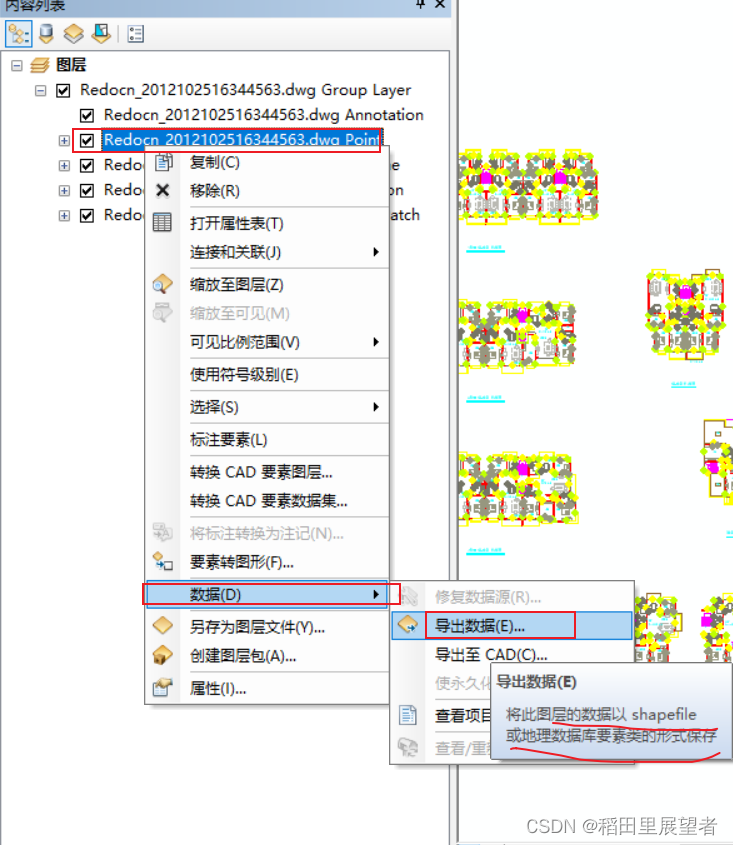

- arcgis操作:dwg数据转为shp数据

猜你喜欢

arcgis操作:dwg数据转为shp数据

【原创】程序员团队管理的核心是什么?

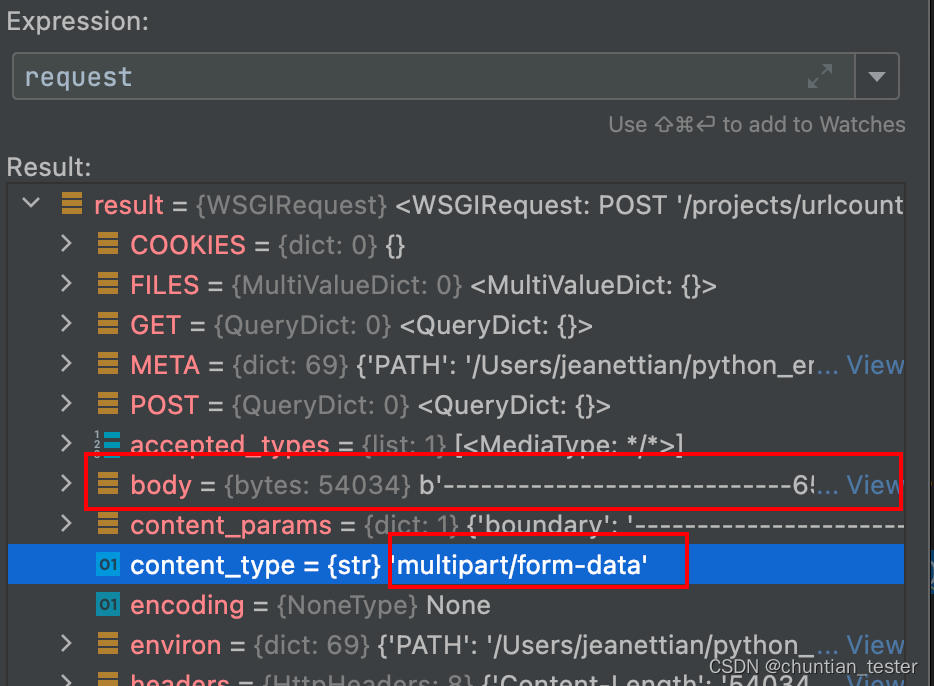

request对象对请求体,请求头参数的解析

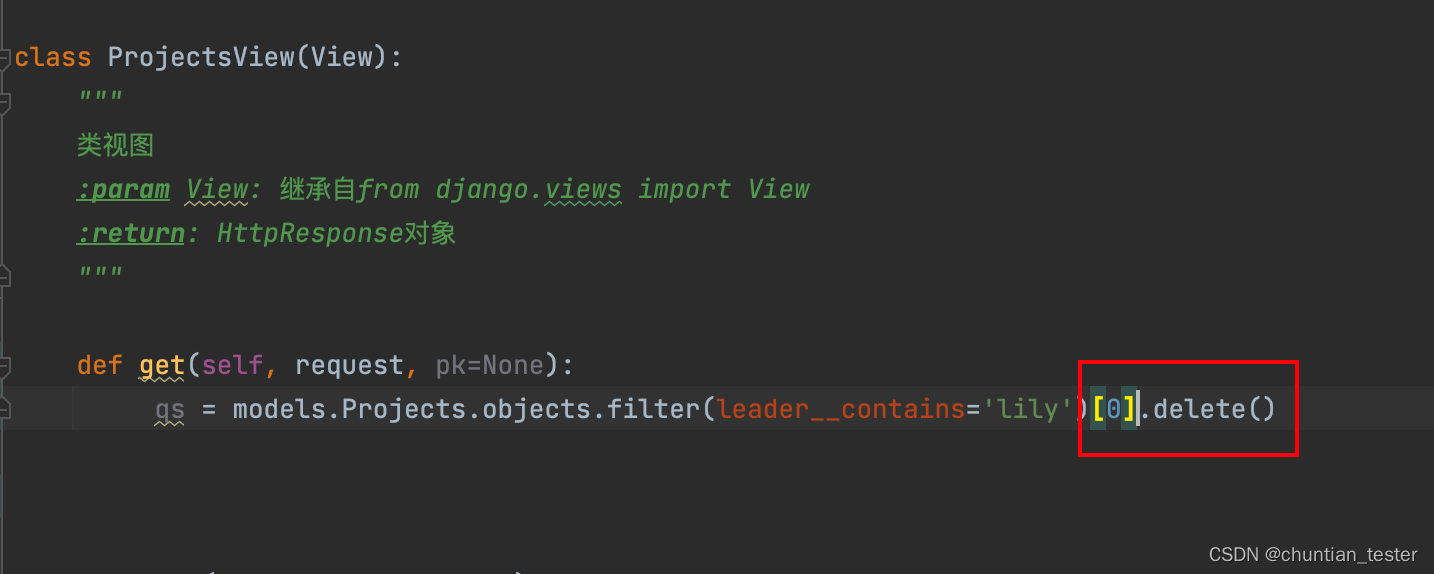

ORM--逻辑关系与&或;排序操作,更新记录操作,删除记录操作

小程序弹出半角遮罩层

企业实战|复杂业务关系下的银行业运维指标体系建设

CentOS installs JDK1.8 and mysql5 and 8 (the same command 58 in the second installation mode is common, opening access rights and changing passwords)

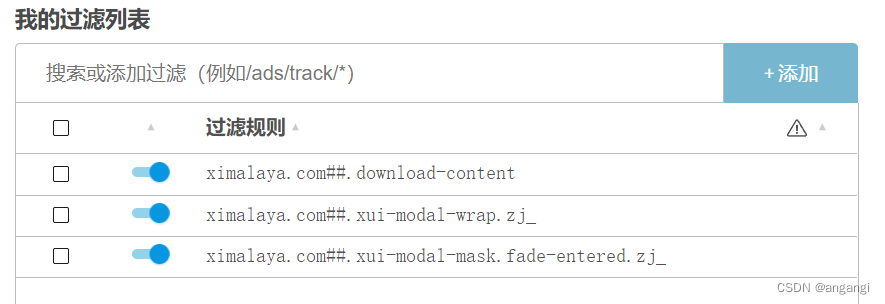

The Himalaya web version will pop up after each pause. It is recommended to download the client solution

Garbage disposal method based on the separation of smart city and storage and living digital home mode

Agile course training

随机推荐

Win10 installation vs2015

根据热门面试题分析Android事件分发机制(二)---事件冲突分析处理

Switching value signal anti shake FB of PLC signal processing series

Why are social portals rarely provided in real estate o2o applications?

位操作==c语言2

Horizontal split of database

Flex flexible layout

Gauss elimination

Use 3 in data modeling σ Eliminate outliers for data cleaning

小程序实现页面多级来回切换支持滑动和点击操作

Analyze Android event distribution mechanism according to popular interview questions (I)

CentOS installs JDK1.8 and mysql5 and 8 (the same command 58 in the second installation mode is common, opening access rights and changing passwords)

request对象对请求体,请求头参数的解析

Scratch crawler mysql, Django, etc

MongoDB创建一个隐式数据库用作练习

Luogu p2482 [sdoi2010] zhuguosha

Codeforces - 1324d pair of topics

C# 初始化程序时查看初始化到哪里了示例

flinkcdc采集oracle在snapshot阶段一直失败,这个得怎么调整啊?

The landing practice of ByteDance kitex in SEMA e-commerce scene