当前位置:网站首页>Using keras in tensorflow to build convolutional neural network

Using keras in tensorflow to build convolutional neural network

2022-07-07 10:03:00 【guluC】

Application tensorflow Medium keras Build convolutional neural network

Six steps

1、 Import tensorflow library

import tensorflow.keras as keras

2、 Prepare training data

x_train,y_train

x_test,y_test

3、 Build a network structure

- Generate a container to store the network structure

model = keras.models.Sequential() # Describe each layer of network

- Convolution layer

keras.layers.Conv2D(

filters, # Number of convolution kernels

kernel_size, # Convolution kernel size It's usually (3,3)

strides=(1, 1), # Sliding step Default (1,1)

padding='valid', # Zero filling strategy 'valid' perhaps 'same'

activation=None, # Activation function Commonly used relu,softmax,selu

input_shape # Define the input data style (64,64,3)64*64 Three dimensional view of

)

- Pooling layer

keras.layers.MaxPooling2D(

pool_size=(2, 2), # Pool layer size

strides=None, # step

padding='valid', # Zero filling strategy 'valid' perhaps 'same'

data_format=None

)

- Flatten ( Change the data into one dimension , It is often used in the transition from convolution layer to full connection layer )

keras.layers.Flatten()

- Fully connected layer

keras.layers.Dense(

units, # Dimension of output space

activation=None, # Activation function Commonly used relu,softmax,selu

use_bias=True, # Boolean value , Whether to use offset vector

)

- Dropout layer ( Prevent too fitting , Improve the generalization ability of the model )

keras.layers.Dropout(

rate, #0-1 Decimal between Percentage discarded

noise_shape=None,

seed=None # Random seeds

)

4、 Print network structure and parameter statistics

model.summary()

summary Function is used to print network structure and parameter statistics

5、 Configure the optimizer for training 、 Loss function and accuracy evaluation criteria

model.compile(

optimizer, # Optimizer

loss, # Loss function

metrics # Network evaluation index

)

- optimizer Parameters can be character string The optimizer name given by the form , It can also be in the form of a function , The learning rate can be set in the form of a function 、 Momentum and hyperparameters

- “sgd” perhaps keras.optimizers.SGD(lr = Learning rate ,decay = Learning decay rate ,momentum = Momentum parameter )

- "adagrad’" perhaps keras.optimizers.Adagrad(lr = Learning rate , decay = Learning rate decay rate )

- "adadelta" perhaps keras.optimizers.Adadelta(lr = Learning rate , decay = Learning rate decay rate )

- "adam" perhaps keras.optimizers.Adam(lr = Learning rate ,decay = Learning rate decay rate )

- lose Parameters can be given in string form Loss function Name , It can also be in the form of a function 、

- "mse" perhaps keras.losses.MeanSquaredError()

- “sparse_categorical_crossentropy” perhaps keras.losses.SparseCatagoricalCrossentropy(from_logits = False)

- Metrics Label network evaluation index

- “accuracy” : y_ and y It's all numbers , Such as y_ = [1] y = [1] #y_ For real value ,y For the predicted value

- "sparse_accuracy"y_ and y It's all based on a single hot code And probability distribution , Such as y_ = [0, 1, 0], y = [0.256, 0.695, 0.048]

- "sparse_categorical_accuracy"y_ It is given in numerical form ,y In order to The unique heat code gives , Such as y_ = [1], y = [0.256 0.695, 0.048]

6、fit

model.fit(

x, y,

batch_size=32,

epochs=10,

verbose=1,

callbacks=None,

validation_split=0.0,

validation_data=None,

shuffle=True,

class_weight=None,

sample_weight=None,

initial_epoch=0

)

- x: input data . If the model has only one input , that x The type is numpy

array, If the model has multiple inputs , that x The type should be list,list Is corresponding to each input numpy array - y: label ,numpy array

- batch_size: Integers , Specifies the gradient descent for each batch Number of samples included . One for training batch The sample will be calculated as a gradient descent , Optimize the target function one step .

- epochs: Integers , At the end of training epoch value , The training will be at the end of the day epoch Value , When there is no setting initial_epoch when , It is the total number of rounds of training , Otherwise, the total number of rounds of training is epochs - inital_epoch

- verbose: The log shows ,0 Output log information for non-standard output stream ,1 Record for the output progress bar ,2 For each epoch Output line record

- callbacks:list, The elements are keras.callbacks.Callback The object of . This list The callback function will be called at the appropriate time during the training , Refer to the callback function

- validation_split:0~1 The floating point number between , A percentage of the data used to specify the training set is used as the validation set . Validation sets will not be trained , And in each epoch End - of - test model metrics , Like the loss function 、 Precision etc. . Be careful ,validation_split The division of the shuffle Before , So if your data itself is ordered , You need to manually scramble it before you specify it validation_split, Otherwise, an uneven sample of the validation set may occur .

- validation_data: In the form of (X,y) Of tuple, Is the specified validation set . This parameter overrides validation_spilt.

- shuffle: Boolean or string , Is generally a Boolean value , Indicates whether the sequence of input samples is randomly scrambled during training . If string “batch”, It's used to deal with HDF5 The special case of data , It will be batch Internally scrambles the data .

- class_weight: Dictionaries , Mapping different categories to different weights , This parameter is used to adjust the loss function during training ( Only for training )

- sample_weight: Weights of numpy

array, Used to adjust the loss function during training ( For training purposes only ). You can pass a 1D The vector with the same length as the sample is used to carry on the sample 1 Yes 1 A weighted , Or in the case of temporal data , The form of passing one is (samples,sequence_length) To assign different weights to the samples on each time step . In this case be sure to add when compiling the model sample_weight_mode=’temporal’. - initial_epoch: Specified from this parameter epoch Start training , It's useful to continue the previous training .

fit The function returns a History The object of , Its History.history Attribute records the value of loss function and other indicators epoch Changing circumstances , If there is a verification set , Also contains the change of these indicators of the verification set

边栏推荐

- 视频化全链路智能上云?一文详解什么是阿里云视频云「智能媒体生产」

- Three years after graduation

- The combination of over clause and aggregate function in SQL Server

- 为什么安装mysql时starting service报错?(操作系统-windows)

- The industrial chain of consumer Internet is actually very short. It only undertakes the role of docking and matchmaking between upstream and downstream platforms

- iNFTnews | 时尚品牌将以什么方式进入元宇宙?

- Flex flexible layout

- Diffusion模型详解

- 终于可以一行代码也不用改了!ShardingSphere 原生驱动问世

- Bean 作⽤域和⽣命周期

猜你喜欢

Pytest learning - dayone

农牧业未来发展蓝图--垂直农业+人造肉

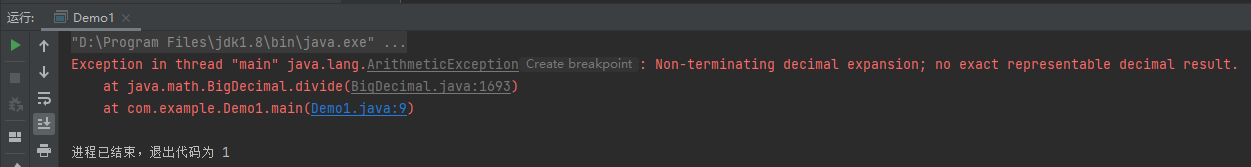

使用BigDecimal的坑

CentOS installs JDK1.8 and mysql5 and 8 (the same command 58 in the second installation mode is common, opening access rights and changing passwords)

Qualifying 3

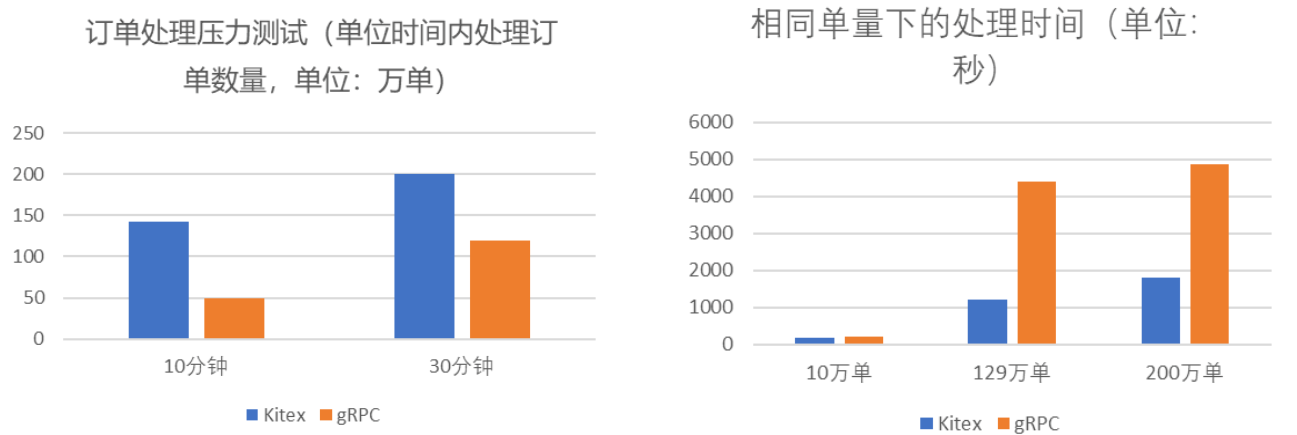

字节跳动 Kitex 在森马电商场景的落地实践

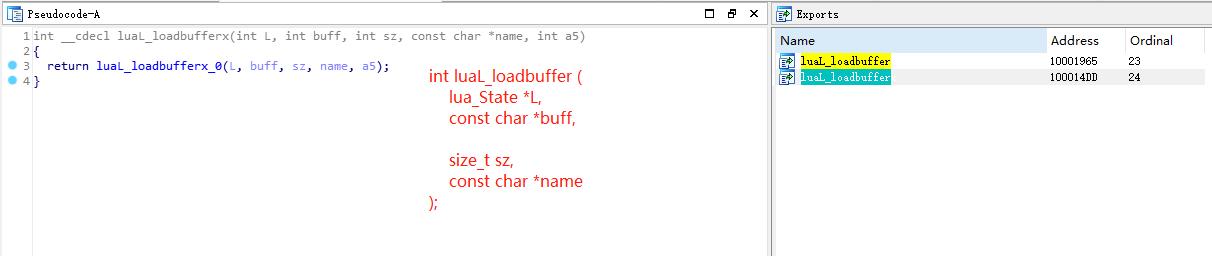

【frida实战】“一行”代码教你获取WeGame平台中所有的lua脚本

基于智慧城市与储住分离数字家居模式垃圾处理方法

Deep understanding of UDP, TCP

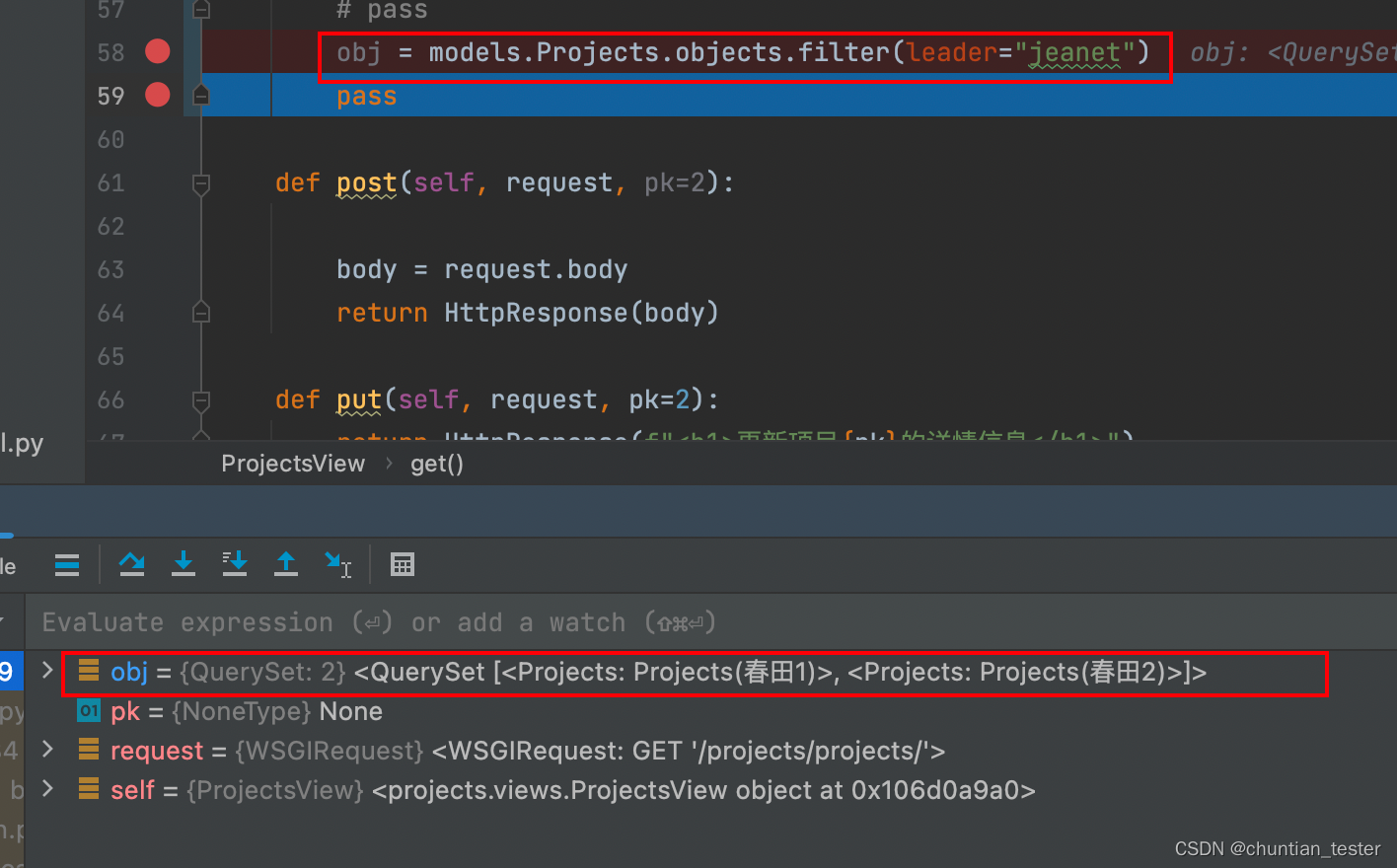

ORM模型--数据记录的创建操作,查询操作

随机推荐

Impression notes finally support the default markdown preview mode

web3.0系列之分布式存储IPFS

Deep understanding of UDP, TCP

thinkphp数据库的增删改查

ORM--数据库增删改查操作逻辑

Internship log - day04

Hcip first day notes sorting

MongoDB创建一个隐式数据库用作练习

Delete a record in the table in pl/sql by mistake, and the recovery method

基础篇:带你从头到尾玩转注解

EXT2 file system

arcgis操作:dwg数据转为shp数据

Three years after graduation

网上可以开炒股账户吗安全吗

如何成为一名高级数字 IC 设计工程师(5-3)理论篇:ULP 低功耗设计技术精讲(下)

大佬们,有没有遇到过flink cdc读MySQLbinlog丢数据的情况,每次任务重启就有概率丢数

MySQL can connect locally through localhost or 127, but cannot connect through intranet IP (for example, Navicat connection reports an error of 1045 access denied for use...)

Elaborate on MySQL mvcc multi version control

【frida实战】“一行”代码教你获取WeGame平台中所有的lua脚本

Why are social portals rarely provided in real estate o2o applications?