Bilibili 刘二大人: Multivariate Logistic Regression, Lecture 7

2022-07-06 05:33:00 【宁然也】

```python
import torch
import matplotlib.pyplot as plt
import numpy as np


class LogisticRegressionModel(torch.nn.Module):
    def __init__(self):
        super(LogisticRegressionModel, self).__init__()
        # Three linear layers shrink the features step by step: 8 -> 6 -> 4 -> 1
        self.lay1 = torch.nn.Linear(8, 6)
        self.lay2 = torch.nn.Linear(6, 4)
        self.lay3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        # Apply a sigmoid after every layer; the last one yields a probability in (0, 1)
        x = self.sigmoid(self.lay1(x))
        x = self.sigmoid(self.lay2(x))
        x = self.sigmoid(self.lay3(x))
        return x


model = LogisticRegressionModel()
# Binary cross-entropy, averaged over all samples
criterion = torch.nn.BCELoss(reduction='mean')
optimizer = torch.optim.SGD(model.parameters(), lr=0.005)

# Load the data: every column except the last is a feature, the last is the label
xy = np.loadtxt('./datasets/diabetes.csv.gz', delimiter=',', dtype=np.float32)
x_data = torch.from_numpy(xy[:, :-1])
# Indexing with [-1] keeps the labels as an (N, 1) matrix, matching the model output
y_data = torch.from_numpy(xy[:, [-1]])

epoch_list = []
loss_list = []

for epoch in range(1000):
    # No mini-batches here: the whole dataset is fed through the model each epoch
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)

    loss_list.append(loss.item())
    epoch_list.append(epoch)

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# Plot the training loss against the epoch number
plt.plot(epoch_list, loss_list)
plt.xlabel("epoch")
plt.ylabel("loss")
plt.show()
```
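
The script only plots the loss, so it may help to see what that number means. The block below is a minimal pure-Python sketch, not part of the original post, of the mean binary cross-entropy that `torch.nn.BCELoss(reduction='mean')` reports, plus a simple accuracy check that thresholds the predicted probabilities. The helper names `sigmoid`, `bce_mean`, and `accuracy`, the `eps` clamp, the 0.5 threshold, and the toy inputs are all assumptions made for illustration.

```python
import math


def sigmoid(z):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def bce_mean(y_pred, y_true, eps=1e-12):
    """Mean binary cross-entropy over paired probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(y_pred, y_true):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() stays finite (illustrative choice)
        total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
    return total / len(y_pred)


def accuracy(y_pred, y_true, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    hits = sum((p >= threshold) == (y == 1.0) for p, y in zip(y_pred, y_true))
    return hits / len(y_pred)


# Toy values standing in for the final layer's pre-sigmoid outputs and the labels
probs = [sigmoid(z) for z in (2.0, -1.0, 0.5)]
labels = [1.0, 0.0, 0.0]

print("mean BCE:", bce_mean(probs, labels))
print("accuracy:", accuracy(probs, labels))
```

With the trained model, the same accuracy check could be applied to `model(x_data)` and `y_data` after converting them to plain lists. Note that this would measure accuracy on the training data only, since the script does not hold out a test set.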

边栏推荐

- Cuda11.1 online installation

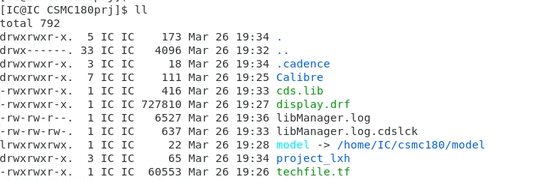

- PDK工艺库安装-CSMC

- 2022 half year summary

- Web Security (VI) the use of session and the difference between session and cookie

- Promotion hung up! The leader said it wasn't my poor skills

- JDBC calls the stored procedure with call and reports an error

- Vulhub vulnerability recurrence 73_ Webmin

- [force buckle]43 String multiplication

- Jvxetable implant j-popup with slot

- The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

猜你喜欢

PDK process library installation -csmc

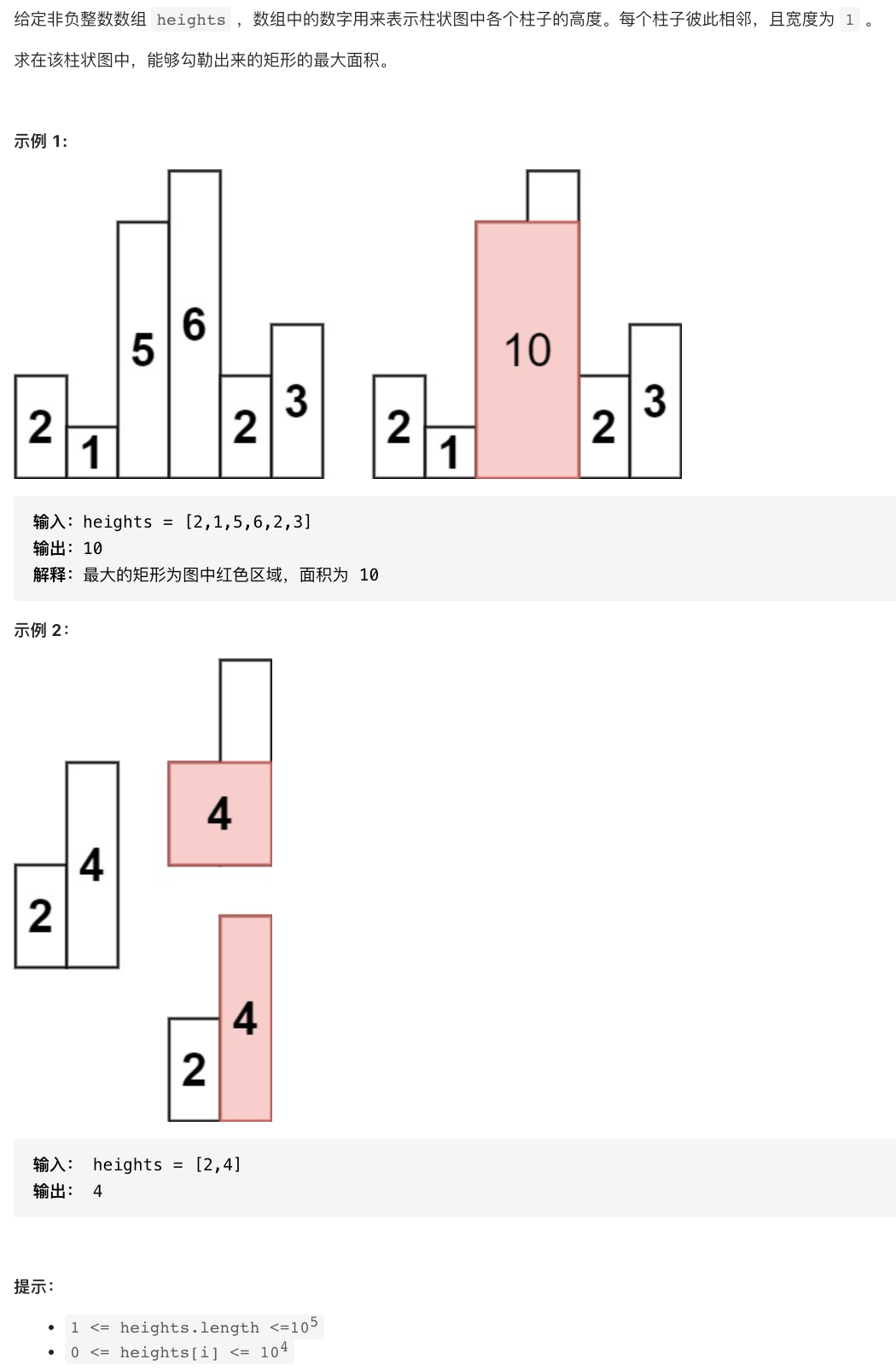

Sword finger offer II 039 Maximum rectangular area of histogram

Fluent implements a loadingbutton with loading animation

![[leetcode16] the sum of the nearest three numbers (double pointer)](/img/99/a167b0fe2962dd0b5fccd2d9280052.jpg)

[leetcode16] the sum of the nearest three numbers (double pointer)

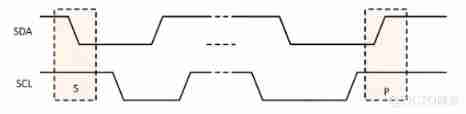

Easy to understand IIC protocol explanation

Hyperledger Fabric2. Some basic concepts of X (1)

Safe mode on Windows

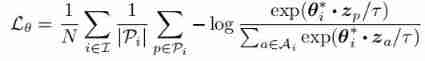

Text classification still stays at Bert? The dual contrast learning framework is too strong

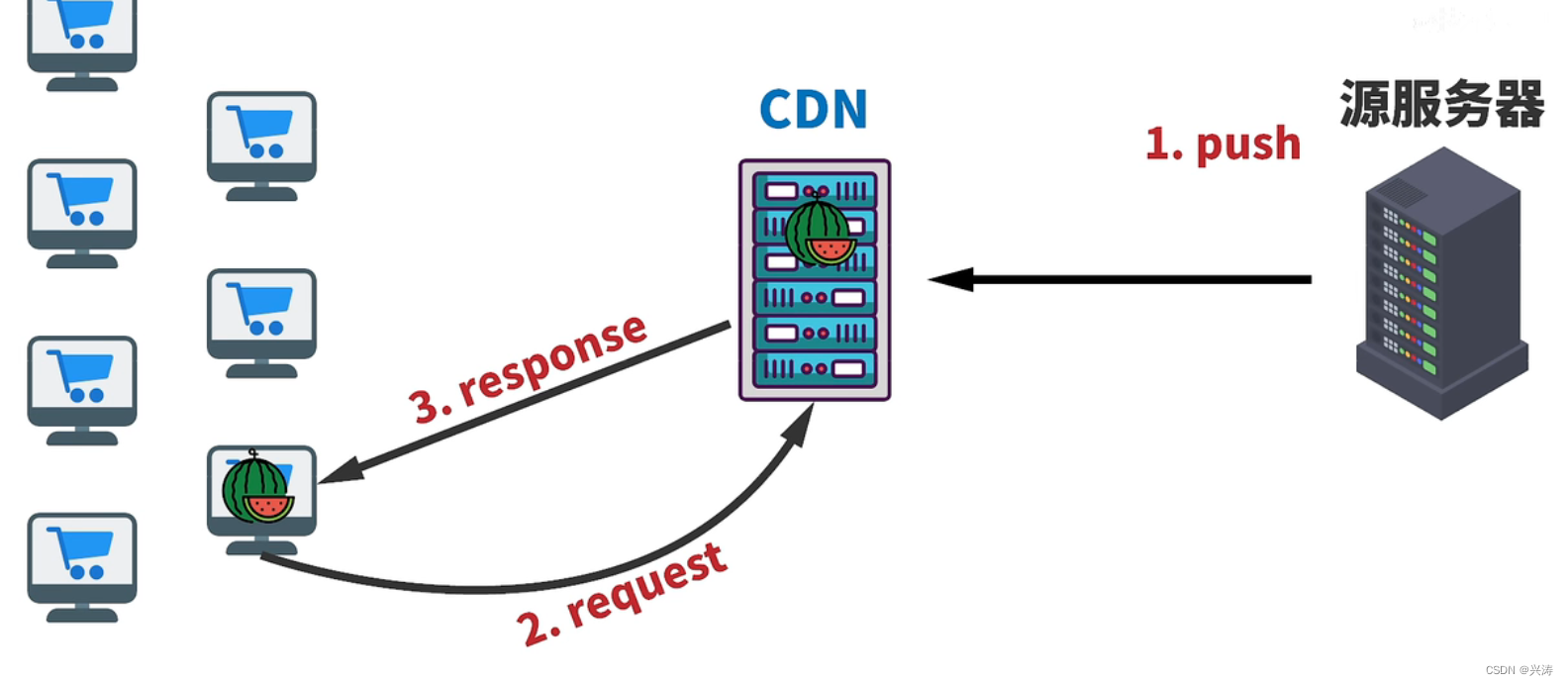

First acquaintance with CDN

PDK工艺库安装-CSMC

随机推荐

01. Project introduction of blog development project

[untitled]

指針經典筆試題

Easy to understand I2C protocol

UCF (2022 summer team competition I)

29io stream, byte output stream continue write line feed

HAC cluster modifying administrator user password

[JVM] [Chapter 17] [garbage collector]

Codeless June event 2022 codeless Explorer conference will be held soon; AI enhanced codeless tool launched

Notes, continuation, escape and other symbols

Modbus协议通信异常

JDBC calls the stored procedure with call and reports an error

03. 开发博客项目之登录

剑指 Offer II 039. 直方图最大矩形面积

【华为机试真题详解】统计射击比赛成绩

[Tang Laoshi] C -- encapsulation: classes and objects

SQLite queries the maximum value and returns the whole row of data

Codeforces Round #804 (Div. 2) Editorial(A-B)

Huawei equipment is configured with OSPF and BFD linkage

[QNX Hypervisor 2.2用户手册]6.3.3 使用共享内存(shmem)虚拟设备