Mmdetection3d loads millimeter wave radar data

2022-07-07 02:36:00 【naca yu】

Preface

mmdetection3d does not provide radar data loading; it only reads and processes LiDAR and camera data. A radar processing flow therefore has to be built by adapting the LiDAR one. The flow described here follows the ideas of FUTR3D, with some improvements.

Before we start, you need to know the following about mmdetection3d:

- In mmdetection3d, the NuScenes dataset uses the LiDAR coordinate system as its reference frame; this covers the bbox attributes and the sensor-to-LiDAR transformation matrices;

- mmdetection3d has modified the radar projection coordinate system and the other coordinate systems so that target poses from different sensors can be unified;

- In the NuScenes annotations, instance labels are expressed relative to a sensor; the instances here are labeled in LiDAR coordinates;

- RADAR data has the form [x, y, z=0]. The coordinate transformation matrix converts it directly into [x, y, z] in the LiDAR frame, where it can be used directly as a LiDAR point cloud;
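The last point can be sketched in a few lines of NumPy. This is a minimal illustration, not mmdetection3d code: the function name `radar_points_to_lidar` is hypothetical, the sample values are made up, and it assumes the convention `points_lidar = points_sensor @ R.T + T` for the stored `sensor2lidar_rotation` and `sensor2lidar_translation`, which is consistent with how `get_data_info()` inverts these matrices for the cameras.

```python
import numpy as np


def radar_points_to_lidar(points, sensor2lidar_rotation, sensor2lidar_translation):
    """Map (N, 3) radar points into the LiDAR frame (hypothetical helper)."""
    points = np.asarray(points, dtype=np.float64)
    rot = np.asarray(sensor2lidar_rotation, dtype=np.float64)
    trans = np.asarray(sensor2lidar_translation, dtype=np.float64)
    # Row-vector convention (assumed): p_lidar = p_radar @ R.T + T
    return points @ rot.T + trans


# Made-up example: a radar rotated 90 degrees about z and shifted in x and z.
rot_z90 = np.array([[0.0, -1.0, 0.0],
                    [1.0, 0.0, 0.0],
                    [0.0, 0.0, 1.0]])
radar_xyz = np.array([[1.0, 0.0, 0.0]])  # z = 0, as radar returns are planar
lidar_xyz = radar_points_to_lidar(radar_xyz, rot_z90, [1.0, 0.0, 0.5])
print(lidar_xyz)
```

Although the radar point starts with z = 0, it generally receives a non-zero z once it is expressed in the LiDAR frame, which is why it can then be handled like any other LiDAR point.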

With this background in place, let's walk through how radar data loading is implemented.

Implementation

1.1 Generate the .pkl data

- In the create_data.py file:

_fill_trainval_infos() is the mmdetection3d function responsible for generating the .pkl file, which contains all the data we need.

# Load the data transformation matrices and data paths.
# Excerpt: obtain_sensor2top() and nus_categories are defined elsewhere in the
# converter code and are not shown here.
import mmcv
import numpy as np
from pyquaternion import Quaternion

from mmdet3d.datasets import NuScenesDataset


def _fill_trainval_infos(nusc,
                         train_scenes,
                         val_scenes,
                         test=False,
                         max_sweeps=10):
    train_nusc_infos = []
    val_nusc_infos = []

    for sample in mmcv.track_iter_progress(nusc.sample):
        lidar_token = sample['data']['LIDAR_TOP']
        sd_rec = nusc.get('sample_data', sample['data']['LIDAR_TOP'])
        cs_record = nusc.get('calibrated_sensor',
                             sd_rec['calibrated_sensor_token'])
        pose_record = nusc.get('ego_pose', sd_rec['ego_pose_token'])
        # boxes is a list of Box objects, one per annotation
        lidar_path, boxes, _ = nusc.get_sample_data(lidar_token)
        mmcv.check_file_exist(lidar_path)

        info = {
            'token': sample['token'],
            'cams': dict(),
            'radars': dict(),
            'lidar2ego_translation': cs_record['translation'],
            'lidar2ego_rotation': cs_record['rotation'],
            'ego2global_translation': pose_record['translation'],
            'ego2global_rotation': pose_record['rotation'],
            'timestamp': sample['timestamp'],
        }

        # LiDAR-to-ego and ego-to-global transforms
        l2e_r = info['lidar2ego_rotation']
        l2e_t = info['lidar2ego_translation']
        e2g_r = info['ego2global_rotation']
        e2g_t = info['ego2global_translation']
        l2e_r_mat = Quaternion(l2e_r).rotation_matrix
        e2g_r_mat = Quaternion(e2g_r).rotation_matrix

        # collect the information of the 6 camera images per frame
        camera_types = [
            'CAM_FRONT',
            'CAM_FRONT_RIGHT',
            'CAM_FRONT_LEFT',
            'CAM_BACK',
            'CAM_BACK_LEFT',
            'CAM_BACK_RIGHT',
        ]
        for cam in camera_types:
            cam_token = sample['data'][cam]
            cam_path, _, cam_intrinsic = nusc.get_sample_data(cam_token)
            # camera to LIDAR_TOP transformation
            cam_info = obtain_sensor2top(nusc, cam_token, l2e_t, l2e_r_mat,
                                         e2g_t, e2g_r_mat, cam)
            cam_info.update(cam_intrinsic=cam_intrinsic)
            info['cams'].update({cam: cam_info})

        # radar loading
        radar_names = [
            'RADAR_FRONT',
            'RADAR_FRONT_LEFT',
            'RADAR_FRONT_RIGHT',
            'RADAR_BACK_LEFT',
            'RADAR_BACK_RIGHT'
        ]
        for radar_name in radar_names:
            radar_token = sample['data'][radar_name]
            radar_rec = nusc.get('sample_data', radar_token)
            sweeps = []
            # collect 5 sweeps per radar by walking the 'prev' links
            while len(sweeps) < 5:
                if not radar_rec['prev'] == '':
                    radar_path, _, radar_intrin = nusc.get_sample_data(radar_token)
                    # transformation from this radar sweep to LIDAR_TOP
                    radar_info = obtain_sensor2top(nusc, radar_token, l2e_t, l2e_r_mat,
                                                   e2g_t, e2g_r_mat, radar_name)
                    sweeps.append(radar_info)
                    radar_token = radar_rec['prev']
                    radar_rec = nusc.get('sample_data', radar_token)
                else:
                    # no earlier sweep exists: the current sweep is appended
                    # again on every iteration until 5 entries are collected
                    radar_path, _, radar_intrin = nusc.get_sample_data(radar_token)
                    radar_info = obtain_sensor2top(nusc, radar_token, l2e_t, l2e_r_mat,
                                                   e2g_t, e2g_r_mat, radar_name)
                    sweeps.append(radar_info)
            # one radar corresponds to several sweeps
            # sweeps: [sweep1_dict, sweep2_dict, ...]
            info['radars'].update({radar_name: sweeps})

        # the annotations here are expressed in the LiDAR coordinate system
        if not test:
            annotations = [
                nusc.get('sample_annotation', token)
                for token in sample['anns']
            ]
            locs = np.array([b.center for b in boxes]).reshape(-1, 3)
            dims = np.array([b.wlh for b in boxes]).reshape(-1, 3)
            rots = np.array([b.orientation.yaw_pitch_roll[0]
                             for b in boxes]).reshape(-1, 1)
            velocity = np.array(
                [nusc.box_velocity(token)[:2] for token in sample['anns']])
            # valid_flag marks whether an annotation contains any LiDAR or radar points
            valid_flag = np.array(
                [(anno['num_lidar_pts'] + anno['num_radar_pts']) > 0
                 for anno in annotations],
                dtype=bool).reshape(-1)
            # convert velocity from the global frame to the LiDAR frame
            for i in range(len(boxes)):
                velo = np.array([*velocity[i], 0.0])
                velo = velo @ np.linalg.inv(e2g_r_mat).T @ np.linalg.inv(
                    l2e_r_mat).T
                velocity[i] = velo[:2]

            names = [b.name for b in boxes]
            for i in range(len(names)):
                if names[i] in NuScenesDataset.NameMapping:
                    names[i] = NuScenesDataset.NameMapping[names[i]]
            names = np.array(names)
            # also require the class name to be one of nus_categories
            name_in_track = [_a in nus_categories for _a in names]
            name_in_track = np.array(name_in_track)
            valid_flag = np.logical_and(valid_flag, name_in_track)
            # add instance_ids
            instance_inds = [nusc.getind('instance', ann['instance_token']) for ann in annotations]
            # convert the rotation to the SECOND format: negate the yaw and
            # subtract pi / 2, then concatenate with the location and size
            # (angles are in radians)
            gt_boxes = np.concatenate([locs, dims, -rots - np.pi / 2], axis=1)
            assert len(gt_boxes) == len(
                annotations), f'{len(gt_boxes)}, {len(annotations)}'
            info['gt_boxes'] = gt_boxes
            info['gt_names'] = names
            info['gt_velocity'] = velocity.reshape(-1, 2)
            # num_lidar_pts of each gt box, used to filter out targets without points
            info['num_lidar_pts'] = np.array(
                [a['num_lidar_pts'] for a in annotations])
            # the same count for radar points
            info['num_radar_pts'] = np.array(
                [a['num_radar_pts'] for a in annotations])
            info['valid_flag'] = valid_flag
            info['instance_inds'] = instance_inds

        # split into train infos and val infos
        if sample['scene_token'] in train_scenes:
            train_nusc_infos.append(info)
        else:
            val_nusc_infos.append(info)

    return train_nusc_infos, val_nusc_infos
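The radar sweep loop is the part that differs most from the stock LiDAR code, so here is its control flow in isolation. This is a sketch under simplifying assumptions: `collect_sweep_tokens` is a hypothetical helper, and a plain dict with made-up tokens stands in for the `sample_data` table that `nusc.get()` queries. It only reproduces the traversal, not the call to `obtain_sensor2top()`.

```python
def collect_sweep_tokens(records, start_token, num_sweeps=5):
    """Walk the 'prev' links and return exactly num_sweeps tokens (hypothetical helper)."""
    tokens = []
    token = start_token
    while len(tokens) < num_sweeps:
        tokens.append(token)
        prev = records[token]['prev']
        if prev != '':
            token = prev  # step back to the earlier sweep
        # otherwise stay on the earliest sweep, which pads the list by repetition
    return tokens


# Made-up chain of three sweeps: a -> b -> c, where c is the first sweep of the scene.
records = {'a': {'prev': 'b'}, 'b': {'prev': 'c'}, 'c': {'prev': ''}}
tokens = collect_sweep_tokens(records, 'a')
print(tokens)
```

The result always has five entries. When fewer than five sweeps exist, the earliest one is repeated, so downstream code that concatenates sweeps may see duplicated points near the start of a scene.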

- After the function above has generated the info dicts, create_nuscenes_infos() saves them to .pkl files:

from os import path as osp

import mmcv


def create_nuscenes_infos(root_path,
                          info_prefix,
                          version='v1.0-trainval',
                          max_sweeps=10):
    # (the creation of nusc, train_scenes, val_scenes and test is omitted
    # in this excerpt)
    train_nusc_infos, val_nusc_infos = _fill_trainval_infos(
        nusc, train_scenes, val_scenes, test, max_sweeps=max_sweeps)

    metadata = dict(version=version)
    if test:
        print('test sample: {}'.format(len(train_nusc_infos)))
        data = dict(infos=train_nusc_infos, metadata=metadata)
        info_path = osp.join(root_path,
                             '{}_infos_test.pkl'.format(info_prefix))
        mmcv.dump(data, info_path)
    else:
        print('train sample: {}, val sample: {}'.format(
            len(train_nusc_infos), len(val_nusc_infos)))
        data = dict(infos=train_nusc_infos, metadata=metadata)
        info_path = osp.join(root_path,
                             '{}_infos_train.pkl'.format(info_prefix))
        mmcv.dump(data, info_path)
        # reuse the same dict for the validation split
        data['infos'] = val_nusc_infos
        info_val_path = osp.join(root_path,
                                 '{}_infos_val.pkl'.format(info_prefix))
        mmcv.dump(data, info_val_path)
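Before moving on, it can help to confirm that the generated file really contains the radar entries. The sketch below assumes that the .pkl written by `mmcv.dump` is a standard pickle with the `infos` / `metadata` layout shown above. The helper `summarize_radar_infos` is hypothetical, and the demo writes a tiny stand-in file with made-up values so that it runs without the NuScenes data.

```python
import os
import pickle
import tempfile


def summarize_radar_infos(info_path):
    """Return (number of samples, {radar_name: sweep count}) for the first sample."""
    with open(info_path, 'rb') as f:
        data = pickle.load(f)
    infos = data['infos']
    first = infos[0]
    sweep_counts = {name: len(sweeps) for name, sweeps in first['radars'].items()}
    return len(infos), sweep_counts


# Stand-in file with the same layout as the generated .pkl (values are made up).
fake = dict(
    infos=[dict(token='t0',
                radars={'RADAR_FRONT': [{'data_path': 'a.pcd'}] * 5})],
    metadata=dict(version='v1.0-mini'))
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, 'demo_infos_train.pkl')
    with open(demo_path, 'wb') as f:
        pickle.dump(fake, f)
    num_samples, sweep_counts = summarize_radar_infos(demo_path)

print(num_samples, sweep_counts)
```

On a real file, each of the five radar names should report five sweeps per sample, matching the loop in _fill_trainval_infos().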

1.2 Read the .pkl data and build the dataset interface

- An excerpt: get_data_info() reads the entries loaded from the .pkl file generated in the previous step and builds the input_dict dictionary, which is then passed on to the pipeline.

# Excerpt of the NuScenesDataset class; DATASETS and Custom3DDataset come from
# mmdetection3d, and np is numpy.
@DATASETS.register_module()
class NuScenesDataset(Custom3DDataset):

    def __init__(self,
                 ann_file,
                 pipeline=None,
                 data_root=None,
                 classes=None,
                 load_interval=1,
                 with_velocity=True,
                 modality=None,
                 box_type_3d='LiDAR',
                 filter_empty_gt=True,
                 test_mode=False,
                 eval_version='detection_cvpr_2019',
                 use_valid_flag=False):
        self.load_interval = load_interval
        self.use_valid_flag = use_valid_flag
        super().__init__(
            data_root=data_root,
            ann_file=ann_file,
            pipeline=pipeline,
            classes=classes,
            modality=modality,
            box_type_3d=box_type_3d,
            filter_empty_gt=filter_empty_gt,
            test_mode=test_mode)

        self.with_velocity = with_velocity
        self.eval_version = eval_version
        from nuscenes.eval.detection.config import config_factory
        self.eval_detection_configs = config_factory(self.eval_version)
        if self.modality is None:
            self.modality = dict(
                use_camera=False,
                use_lidar=True,
                use_radar=False,
                use_map=False,
                use_external=False,
            )

    def get_data_info(self, index):
        info = self.data_infos[index]
        input_dict = dict(
            sample_idx=info['token'],
            timestamp=info['timestamp'] / 1e6,
            # per-radar sweep lists generated in step 1.1
            radar=info['radars'],
        )

        if self.modality['use_camera']:
            image_paths = []
            lidar2img_rts = []
            intrinsics = []
            extrinsics = []
            for cam_type, cam_info in info['cams'].items():
                image_paths.append(cam_info['data_path'])
                # obtain the LiDAR-to-image transformation matrix
                lidar2cam_r = np.linalg.inv(cam_info['sensor2lidar_rotation'])
                lidar2cam_t = cam_info[
                    'sensor2lidar_translation'] @ lidar2cam_r.T
                lidar2cam_rt = np.eye(4)
                lidar2cam_rt[:3, :3] = lidar2cam_r.T
                lidar2cam_rt[3, :3] = -lidar2cam_t
                intrinsic = cam_info['cam_intrinsic']
                viewpad = np.eye(4)
                viewpad[:intrinsic.shape[0], :intrinsic.shape[1]] = intrinsic
                lidar2img_rt = (viewpad @ lidar2cam_rt.T)
                lidar2img_rts.append(lidar2img_rt)
                intrinsics.append(viewpad)
                extrinsics.append(lidar2cam_rt.T)

            # add the image information
            input_dict.update(
                dict(
                    img_filename=image_paths,
                    lidar2img=lidar2img_rts,
                    intrinsic=intrinsics,
                    extrinsic=extrinsics,
                ))

        if not self.test_mode:
            annos = self.get_ann_info(index)
            input_dict['ann_info'] = annos

        return input_dict
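The lidar2img construction in get_data_info() is easier to follow with a worked example. The sketch below repeats the same matrix steps in a standalone function and then projects one LiDAR-frame point to pixel coordinates. The names `build_lidar2img` and `project_to_image` are hypothetical, and the intrinsics, extrinsics and point are made-up values chosen so that the result can be checked by hand.

```python
import numpy as np


def build_lidar2img(sensor2lidar_rotation, sensor2lidar_translation, cam_intrinsic):
    """Same steps as in get_data_info(): invert camera-to-LiDAR, then apply intrinsics."""
    rot = np.asarray(sensor2lidar_rotation, dtype=np.float64)
    trans = np.asarray(sensor2lidar_translation, dtype=np.float64)
    lidar2cam_r = np.linalg.inv(rot)
    lidar2cam_t = trans @ lidar2cam_r.T
    lidar2cam_rt = np.eye(4)
    lidar2cam_rt[:3, :3] = lidar2cam_r.T
    lidar2cam_rt[3, :3] = -lidar2cam_t
    viewpad = np.eye(4)
    viewpad[:3, :3] = np.asarray(cam_intrinsic, dtype=np.float64)
    return viewpad @ lidar2cam_rt.T


def project_to_image(points_lidar, lidar2img):
    """Project (N, 3) LiDAR-frame points to (N, 2) pixel coordinates."""
    points_lidar = np.asarray(points_lidar, dtype=np.float64)
    homo = np.concatenate([points_lidar, np.ones((len(points_lidar), 1))], axis=1)
    cam = homo @ lidar2img.T
    # Divide by depth; points with depth <= 0 lie behind the camera and
    # should be filtered out by the caller.
    return cam[:, :2] / cam[:, 2:3]


# Made-up calibration: camera axes aligned with LiDAR, camera origin at z = 1.
intrinsic = np.array([[1000.0, 0.0, 800.0],
                      [0.0, 1000.0, 450.0],
                      [0.0, 0.0, 1.0]])
lidar2img = build_lidar2img(np.eye(3), [0.0, 0.0, 1.0], intrinsic)
uv = project_to_image([[1.0, 2.0, 11.0]], lidar2img)
print(uv)
```

Here the point sits at (1, 2, 10) in the camera frame, so the pixel coordinates are 1000 * 1 / 10 + 800 = 900 and 1000 * 2 / 10 + 450 = 650. Radar points that have been moved into the LiDAR frame can be projected with the same matrix.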

1.3 Build the pipeline to process the data

- This method belongs to the NuScenesDataset class from the previous step and is called from __getitem__(self, index). It calls get_data_info() to obtain the dictionary holding all data of one sample, feeds that dictionary through the pipeline, and returns the processed data for the dataloader to use.

def prepare_train_data(self, index):
    """Training data preparation.

    Args:
        index (int): Index for accessing the target data.

    Returns:
        dict: Training data dict of the corresponding index.
    """
    # Structure of the input_dict returned by get_data_info():
    # input_dict = dict(
    #     sample_idx=info['token'],
    #     timestamp=info['timestamp'] / 1e6,
    #     radar=info['radars'],
    #     ann_info=dict(
    #         gt_bboxes_3d=gt_bboxes_3d,
    #         gt_labels_3d=gt_labels_3d,
    #         gt_names=gt_names_3d)
    # )
    input_dict = self.get_data_info(index)
    if input_dict is None:
        return None
    self.pre_pipeline(input_dict)
    example = self.pipeline(input_dict)
    # drop samples that have no valid ground-truth label left
    if self.filter_empty_gt and \
            (example is None or
             ~(example['gt_labels_3d']._data != -1).any()):
        return None
    return example
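The post stops before showing a pipeline step that consumes `input_dict['radar']`, so the following is only a sketch of what such a step might look like. It is not the FUTR3D or mmdetection3d implementation. The class name `LoadRadarSweepsSketch`, the output key `radar_points` and the injected `load_points` callable are all assumptions made for illustration. In practice the loader could wrap a real point-cloud reader such as the nuScenes devkit's `RadarPointCloud.from_file`, while here a fake loader keeps the example runnable without data.

```python
import numpy as np


class LoadRadarSweepsSketch:
    """Hypothetical pipeline step: merge all radar sweeps into one LiDAR-frame array."""

    def __init__(self, load_points):
        # load_points(path) -> (N, 3) array in the radar sensor frame (assumed helper)
        self.load_points = load_points

    def __call__(self, results):
        merged = []
        for sweeps in results['radar'].values():
            for sweep in sweeps:
                pts = np.asarray(self.load_points(sweep['data_path']), dtype=np.float64)
                rot = np.asarray(sweep['sensor2lidar_rotation'], dtype=np.float64)
                trans = np.asarray(sweep['sensor2lidar_translation'], dtype=np.float64)
                # assumed convention: p_lidar = p_sensor @ R.T + T
                merged.append(pts @ rot.T + trans)
        if merged:
            results['radar_points'] = np.concatenate(merged, axis=0)
        else:
            results['radar_points'] = np.zeros((0, 3))
        return results


def fake_loader(path):
    # Stand-in for a real file reader: one made-up point per sweep.
    return np.array([[1.0, 0.0, 0.0]])


results = dict(radar={
    'RADAR_FRONT': [
        dict(data_path='sweep0.pcd', sensor2lidar_rotation=np.eye(3),
             sensor2lidar_translation=[0.0, 0.0, 1.0]),
        dict(data_path='sweep1.pcd', sensor2lidar_rotation=np.eye(3),
             sensor2lidar_translation=[0.0, 1.0, 0.0]),
    ]})
results = LoadRadarSweepsSketch(fake_loader)(results)
print(results['radar_points'])
```

Passing the loader in as a callable keeps the step independent of the file format. A real transform would usually also carry extra radar channels such as velocity, and would be registered with the pipeline registry of the mmdetection3d version in use.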

边栏推荐

- 本周 火火火火 的开源项目!

- 用全连接+softmax对图片的feature进行分类

- 差异与阵列和阵列结构和链表的区别

- How do I dump SoapClient requests for debugging- How to dump SoapClient request for debug?

- 豆瓣平均 9.x,分布式领域的 5 本神书!

- leetcode:736. LISP syntax parsing [flowery + stack + status enumaotu + slots]

- 猿桌派第三季开播在即,打开出海浪潮下的开发者新视野

- 1 -- Xintang nuc980 nuc980 porting uboot, starting from external mx25l

- 慧通编程入门课程 - 2A闯关

- MATLB|具有储能的经济调度及机会约束和鲁棒优化

猜你喜欢

随机推荐

MySQL

Douban average 9 x. Five God books in the distributed field!

postgresql 之 数据目录内部结构 简介

Stm32f4 --- PWM output

The third season of ape table school is about to launch, opening a new vision for developers under the wave of going to sea

企业中台建设新路径——低代码平台

postgresql之整體查詢大致過程

实施MES管理系统时,哪些管理点是需要注意的

go swagger使用

[paper reading | deep reading] anrl: attributed network representation learning via deep neural networks

MySQL

Application analysis of face recognition

写作系列之contribution

[Mori city] random talk on GIS data (II)

Introduction to FLIR blackfly s industrial camera

What to do when encountering slow SQL? (next)

进程管理基础

[paper reading | deep reading] rolne: improving the quality of network embedding with structural role proximity

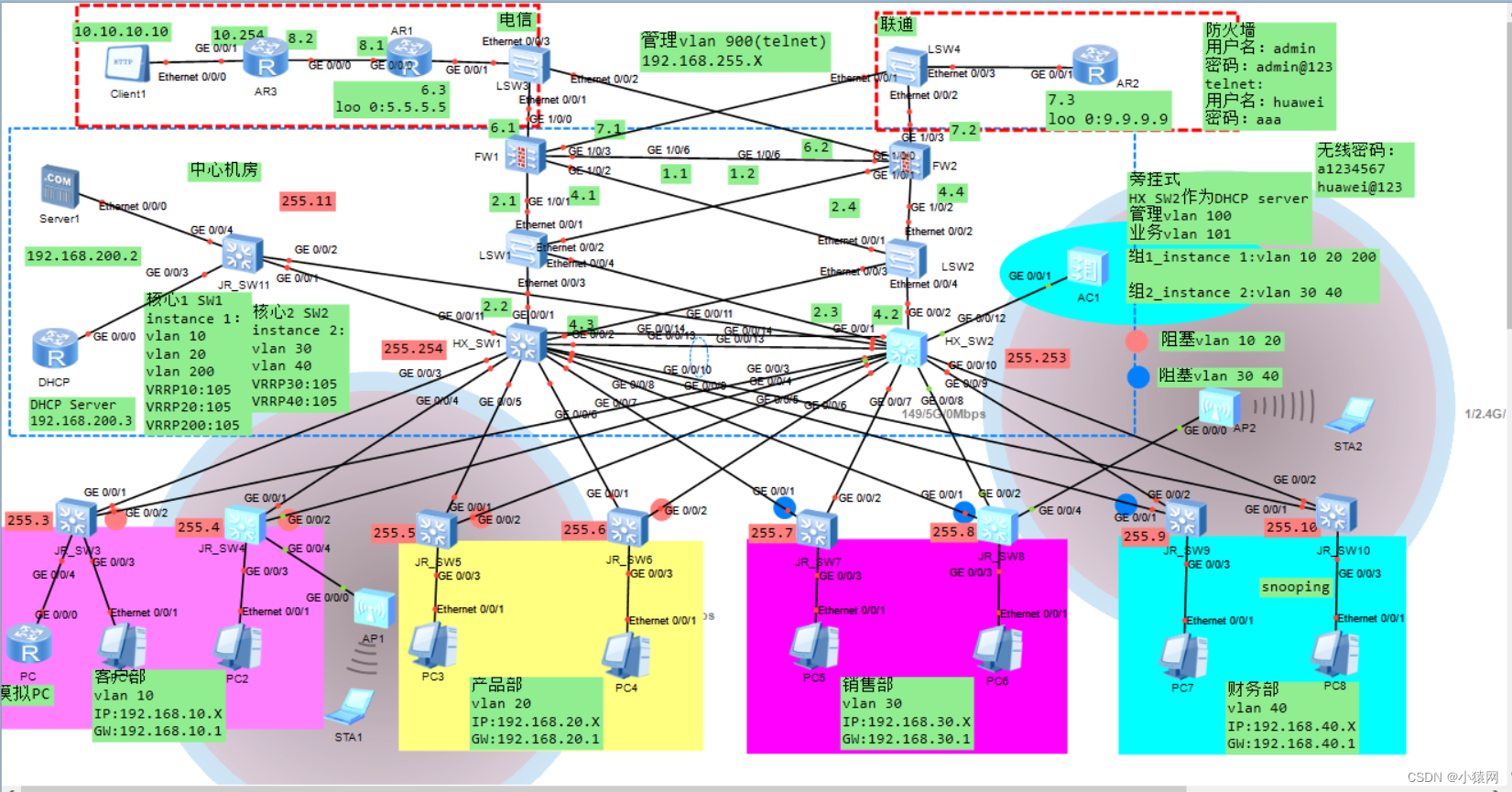

基于ensp防火墙双击热备二层网络规划与设计

The cities research center of New York University recruits master of science and postdoctoral students