当前位置:网站首页>[first release in the whole network] (tips for big tables) sometimes it takes only 1 minute for 2 hours of SQL operation

[first release in the whole network] (tips for big tables) sometimes it takes only 1 minute for 2 hours of SQL operation

2022-07-05 10:55:00 【Heapdump performance community】

Hello everyone , I am a yes.

Last article About a 5 I and DBA Of battle After that , Several friends came to ask me SQL How did you dismantle it .

Let's briefly discuss this article , Actually dismantle SQL It is because it involves the deletion of large tables .

such as , Now you need to delete a total 5 In the table of billion data 2021 Annual data , Suppose this table is called yes.

I believe your brain is 1s This one will definitely pop out of the SQL :

delete from yes where create_date > "2020-12-31" and create_date < "2022-01-01";

If you directly execute this SQL What's going to happen ?

Long business

We need to pay attention to a premise : This watch has 5 Billion of data , So it's a huge watch , So the where Conditions can involve a lot of data , So we can check the data volume from the offline data warehouse or standby database , Then we found this SQL Will delete 3 About 100 million data .

So one time delete The finished plan is not good , Because it's going to involve The problem of long affairs .

Long transactions involve locking , The lock will only be released after the transaction is completed , A lot of data is locked due to long transactions , If there are frequent DML Want to manipulate these data , Then it will cause congestion .

The connection is blocked , Business threads naturally block , That is to say, your service thread is waiting for the response of the database , Then it may affect other services , Avalanches may occur , So he GG 了 .

Long transactions may cause master-slave delays , Think about the main database that has been implemented for a long time , Only after the execution of the slave Library , It will take a long time to replay from the Library , Data may be out of sync for a long time .

There's another situation , Businesses have a special downtime window , You think you can do whatever you want , Then start to execute long affairs , And then we did 5 Hours later , I don't know what's wrong , Transaction rolled back , So waste 5 Hours , We have to start over .

Sum up , We need to avoid long transactions .

In the face of possible problems SQL How can we dismantle it ?

Demolition SQL

Let's take the above one SQL For example :

delete from yes where create_date > "2020-12-31" and create_date < "2022-01-01";

See this one SQL, If you want to split , Presumably, many friends will find it very simple , According to the date, it's over ?

delete from yes where create_date > "2020-12-31" and create_date < "2021-02-01";

delete from yes where create_date >= "2021-02-01" and create_date < "2021-03-01";

......

Of course it can , congratulations , You have split it successfully , Yes, it's that simple .

however , If create_date What if there is no index ?

If there is no index , The above table is scanned ?

The impact is not big , Without index, we will create index conditions for him , This condition is the primary key .

Let's go straight to one select min(id)... and select max(id).... Get the minimum and maximum primary key values of this table , Suppose the answer is 233333333 and 666666666.

Then we can start the operation :

delete from yes where (id >= 233333333 and id < 233433333) and create_date > "2020-12-31" and create_date < "2022-01-01";

delete from yes where (id >= 233433333 and id <233533333) and create_date > "2020-12-31" and create_date < "2022-01-01";

......

delete from yes where (id >= 666566666 and id <=666666666) and create_date > "2020-12-31" and create_date < "2022-01-01";

Of course, you can also be more precise , Get through date filtering maxId, This has little effect ( Not satisfying the conditions SQL Fast execution , It won't take much time ).

thus SQL It meets the batch operation , And it can be indexed .

If any statement fails to execute , Only a small part of the data will be rolled back , Let's check again , The impact is not big .

And split SQL Then you can Improve execution efficiency in parallel .

Of course, my previous article said , There may be lock contention in parallel , Cause individual statements to wait for timeout . But it doesn't matter , As long as the machine is in good condition , Fast execution , Waiting caused by lock competition does not necessarily timeout , If individual SQL If the timeout , Just re execute .

Sometimes you have to change your mind

Sometimes we need to change our thinking about deleting large tables , Turn deletion into insertion .

Suppose there is still one 5 Billion tables , At this point, you need to delete the inside 4.8 Billion of data , Then don't think about deleting at this time , Think about inserting .

It's simple , Delete 4.8 Billion of data , It's better to take what you want 2000W Insert into a new table , Just use the new table directly for our later business .

The two data volumes are compared , The difference in time efficiency is self-evident ?

The specific operation is also simple :

Create a new table , be known as yes_temp take yes Tabular 2000W data select into To yes_temp in take yes surface rename become yes_233 take yes_temp surface rename become yes

Civet for Prince , It's done !

There was a record table before, and that's how we operate , Just select into The data of the past month is added to the new table , Old data used to be ignored , then rename once , It's very fast ,1 It will be done in minutes .

This kind of similar operation has tools , such as pt-online-schema-change etc. , But I didn't use it , If you are interested, you can go and have a look , It's the same thing , A few more triggers , I won't go into more details here .

Last

We still have to learn more about the operation and principle of database in development , Because many database operations need you to do it yourself , Small companies don't DBA Don't say anything , We don't know about big companies DBA To what extent will you care , You still have to rely on yourself .

I looked through my previous articles , As if MySQL Most of them are related , If you are interested, you can take a look at this collection :MySQL Collection

However, there should be many articles that have not been added to this collection , I'll tidy up when I'm free .

Welcome to follow my personal public number :【yes Training strategy 】

See you here , If you are interested in what I write , Have any questions , Welcome to leave a message below , I will give you an answer for the first time , thank you !

I am a yes, From a little to a hundred million, I'll see you in the next chapter ~

边栏推荐

猜你喜欢

爬虫(9) - Scrapy框架(1) | Scrapy 异步网络爬虫框架

【广告系统】增量训练 & 特征准入/特征淘汰

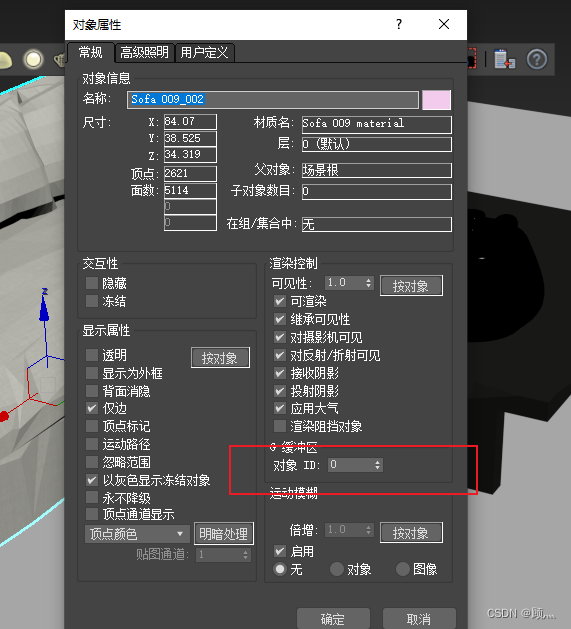

About the use of Vray 5.2 (self research notes) (II)

Review the whole process of the 5th Polkadot Hackathon entrepreneurship competition, and uncover the secrets of the winning projects!

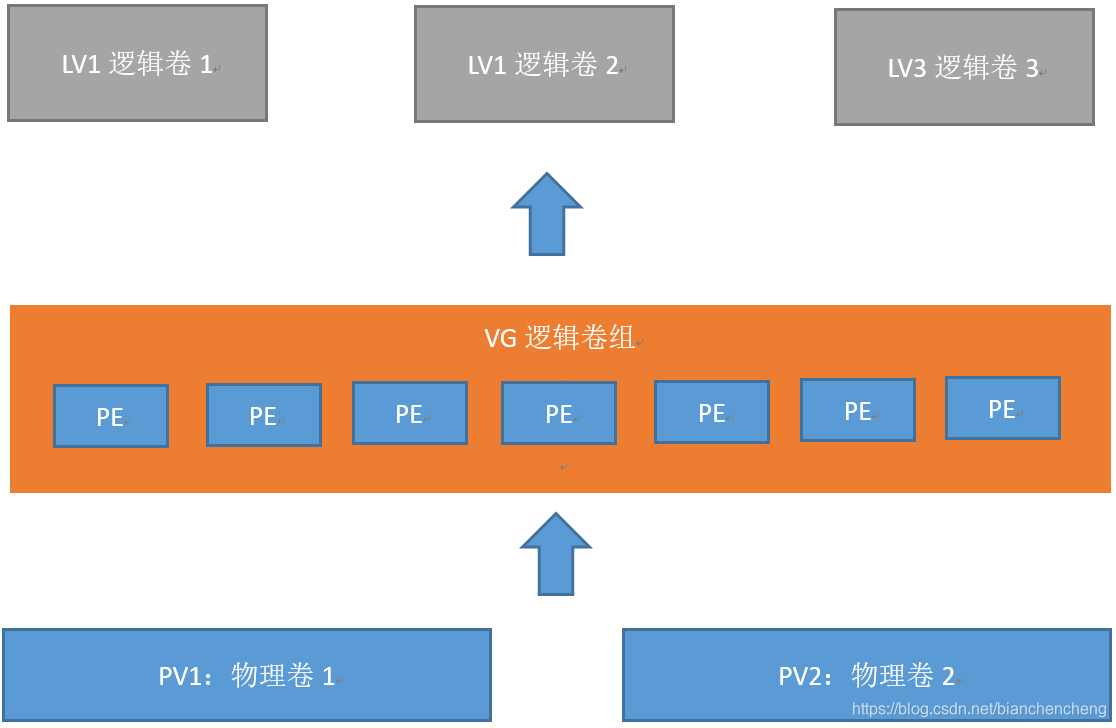

9、 Disk management

2022年危险化学品经营单位主要负责人特种作业证考试题库及答案

2022年化工自动化控制仪表考试试题及在线模拟考试

Based on shengteng AI Aibi intelligence, we launched a digital solution for bank outlets to achieve full digital coverage of information from headquarters to outlets

基于昇腾AI丨爱笔智能推出银行网点数字化解决方案,实现从总部到网点的信息数字化全覆盖

双向RNN与堆叠的双向RNN

随机推荐

Bidirectional RNN and stacked bidirectional RNN

Nuxt//

Go-3-第一个Go程序

一个可以兼容各种数据库事务的使用范例

A usage example that can be compatible with various database transactions

图片懒加载的方案

2022 chemical automation control instrument examination questions and online simulation examination

Golang应用专题 - channel

2022年T电梯修理操作证考试题及答案

分享.NET 轻量级的ORM

PWA (Progressive Web App)

Cross page communication

Bracket matching problem (STL)

flex4 和 flex3 combox 下拉框长度的解决办法

beego跨域问题解决方案-亲试成功

微信核酸检测预约小程序系统毕业设计毕设(6)开题答辩PPT

GO项目实战 — Gorm格式化时间字段

NAS and San

Go language-1-development environment configuration

Go project practice - parameter binding, type conversion