
Understanding How GPT3 Works

2022-07-07 07:48:00 【Chief prisoner】

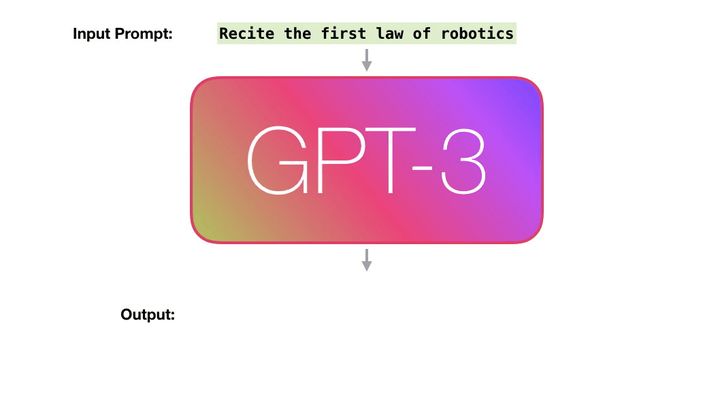

How GPT3 Works, Illustrated

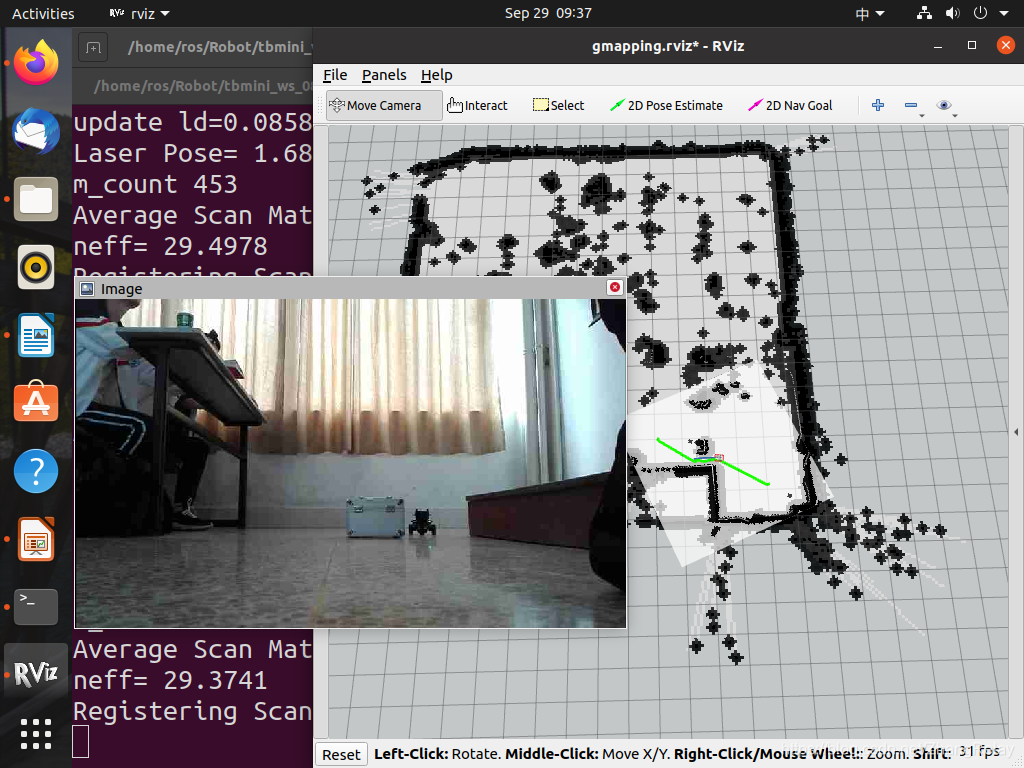

The hype around GPT3 has caused an uproar in tech circles. Large language models (such as GPT3) are starting to surprise us with their abilities. Although most businesses cannot yet safely put these models in front of customers, the models are showing sparks of cleverness that will surely accelerate automation and advance intelligent computer systems. Let's remove the aura of mystery around GPT3 and learn how it is trained and how it works.

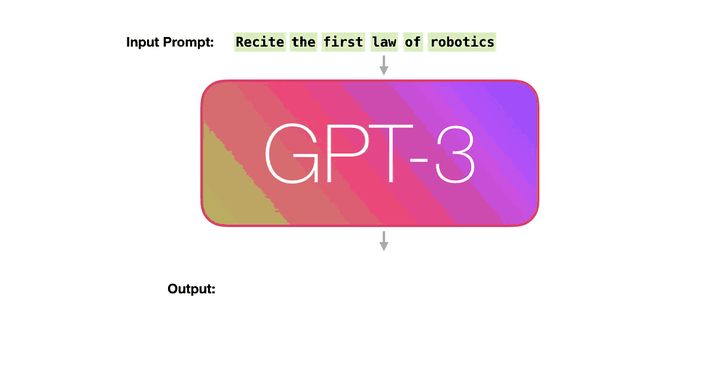

A trained language model generates text.

We can optionally pass it some text as input, which influences its output.

The output is generated from what the model "learned" during training, when it scanned vast amounts of text.

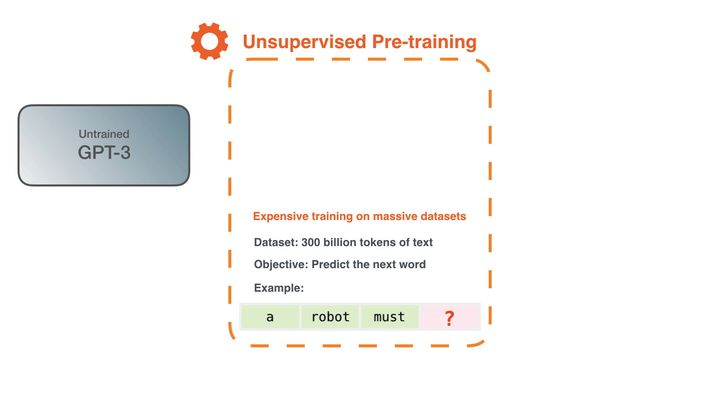

Training is the process of exposing the model to a large amount of text. That process has already been completed. All the experiments you see now come from that one trained model. It was estimated to take 355 GPU-years and cost 4.6 million dollars.

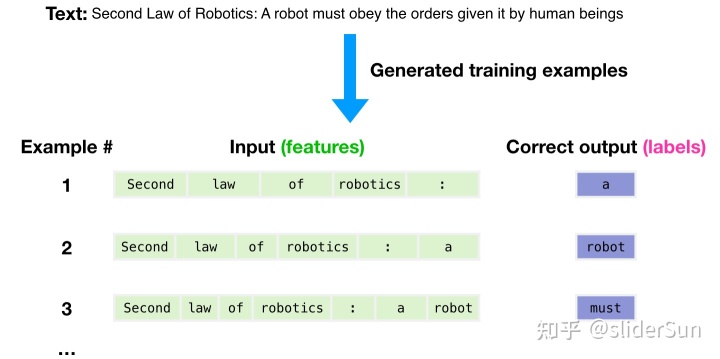

A dataset of 300 billion tokens of text is used to generate training examples for the model. For example, these are three training examples generated from the one sentence at the top.

You can see how sliding a window across all the text produces many examples.
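The sliding-window idea can be sketched in a few lines of Python. This is only an illustration: the function name `make_training_examples`, the window size, and the sample sentence are assumptions made for the example, not details of how GPT3's training data was actually prepared.

```python
def make_training_examples(tokens, window=4):
    """Slide over a token list and emit (context, next_token) pairs."""
    examples = []
    for i in range(1, len(tokens)):
        # The context is at most `window` tokens ending just before position i.
        context = tokens[max(0, i - window):i]
        examples.append((context, tokens[i]))
    return examples


# Illustrative sentence, split on spaces (real tokenizers work on sub-words).
sentence = "a robot must obey the orders given it".split()
examples = make_training_examples(sentence, window=4)

for context, target in examples[:3]:
    print(context, "->", target)
```

Each pair holds the text the model is shown and the single next word it is asked to predict.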

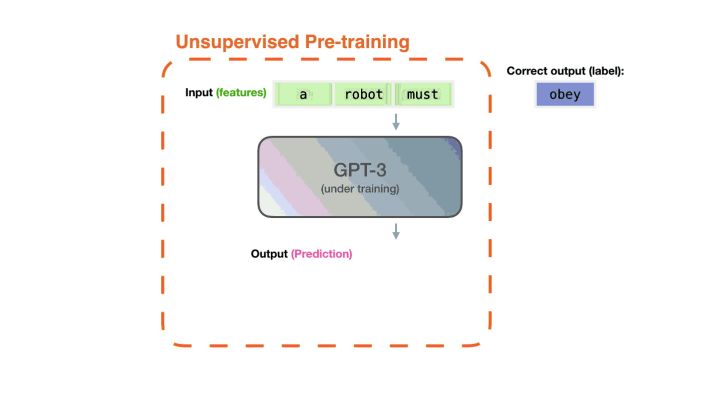

The model is presented with an example. We show it only the features and ask it to predict the next word.

The model's prediction will be wrong. We calculate the error in its prediction and update the model so that it makes a better prediction next time.

Repeat millions of times.
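The predict, measure error, update loop can be shown on a toy scale. The sketch below is not GPT3's training code: it assumes a tiny hypothetical model that keeps one row of scores per previous word, and it uses plain gradient descent on cross-entropy loss. All names (`train_bigram`, `softmax`) and the learning rate are illustrative choices.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_bigram(tokens, steps=200, lr=0.5, seed=0):
    """Toy next-word model: one row of scores per previous word."""
    vocab = sorted(set(tokens))
    index = {w: i for i, w in enumerate(vocab)}
    rng = random.Random(seed)
    # An untrained model starts with random parameters.
    weights = [[rng.uniform(-0.1, 0.1) for _ in vocab] for _ in vocab]
    pairs = [(index[a], index[b]) for a, b in zip(tokens, tokens[1:])]
    losses = []
    for _ in range(steps):
        total = 0.0
        for prev, target in pairs:
            probs = softmax(weights[prev])      # predict
            total += -math.log(probs[target])   # measure the error
            for j in range(len(vocab)):         # update the parameters
                grad = probs[j] - (1.0 if j == target else 0.0)
                weights[prev][j] -= lr * grad
        losses.append(total / len(pairs))
    return vocab, weights, losses


tokens = "a robot must obey the orders given it".split()
vocab, weights, losses = train_bigram(tokens)
print(f"average loss, first pass: {losses[0]:.3f}, last pass: {losses[-1]:.3f}")
```

The average loss falls from pass to pass, which is the "better prediction next time" effect described above, just with 64 parameters instead of billions.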

Now let's look at these same steps in a bit more detail.

GPT3 actually generates its output one token at a time (for now, assume a token is a word).
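Generating one token at a time means running the model in a loop and appending each output to the input. Here is a minimal sketch of that loop; `toy_next_token` and its lookup table are hypothetical stand-ins for the trained model, and the `<end>` stop marker is an assumption made for the example.

```python
def generate(next_token, prompt, max_new_tokens=5, stop="<end>"):
    """Generate one token per step, feeding each output back in as input."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(tokens)
        if token == stop:
            break
        tokens.append(token)
    return tokens


# Hypothetical stand-in for the trained model: a lookup on the last word only.
TOY_TABLE = {"a": "robot", "robot": "must", "must": "obey", "obey": "orders"}


def toy_next_token(tokens):
    return TOY_TABLE.get(tokens[-1], "<end>")


print(generate(toy_next_token, ["a"]))
```

A real model looks at the whole context rather than just the last word, but the outer loop has the same shape.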

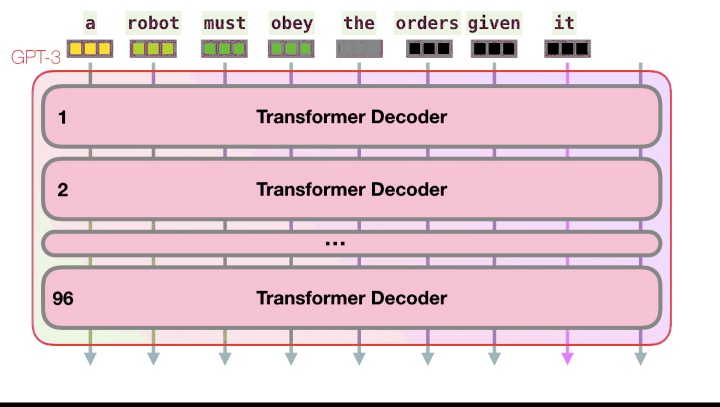

Please note: this is a description of how GPT-3 works, not a discussion of what is novel about it (which is mainly its ridiculously large scale). The architecture is a Transformer decoder model based on this paper: https://arxiv.org/pdf/1801.10198.pdf

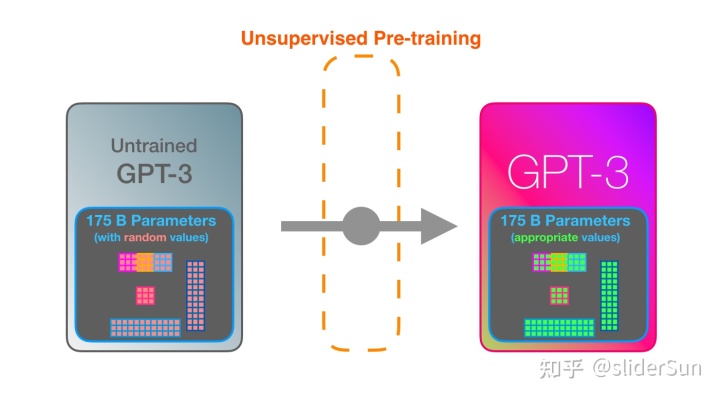

GPT3 is massive. It encodes what it learns from training in 175 billion numbers (called parameters). These numbers are used to calculate which token to generate on each run.

An untrained model starts with random parameters. Training finds values that lead to better predictions.

These numbers are part of hundreds of matrices inside the model. Prediction is mostly a lot of matrix multiplication.
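To make "parameters in matrices" concrete, here is a small sketch in plain Python. The matrix sizes, the random initialisation, and the helper names `random_matrix` and `vecmat` are assumptions chosen for illustration; they do not reflect GPT3's actual shapes.

```python
import random


def random_matrix(rows, cols, rng):
    """Parameters of an untrained model: small random numbers."""
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]


def vecmat(vector, matrix):
    """Multiply a row vector of length n by an n x m matrix."""
    return [sum(v * row[j] for v, row in zip(vector, matrix))
            for j in range(len(matrix[0]))]


rng = random.Random(0)
w1 = random_matrix(4, 8, rng)   # 4 * 8 = 32 parameters
w2 = random_matrix(8, 3, rng)   # 8 * 3 = 24 parameters

x = [1.0, 0.5, -0.5, 2.0]             # input vector
scores = vecmat(vecmat(x, w1), w2)    # two matrix multiplications in a row

n_params = 4 * 8 + 8 * 3
print(n_params, "parameters produce", len(scores), "output scores")
```

Training would adjust the 56 numbers in `w1` and `w2`; GPT3 does the same thing with 175 billion.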

An introduction to AI on YouTube shows a simple ML model with one parameter. It is a good starting point for unpacking this 175B monster.

To shed light on how these parameters are distributed and used, we need to open the model and look inside.

GPT3 is 2048 tokens wide. That is its "context window". It means the model has 2048 tracks along which tokens are processed.
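One practical consequence of a fixed context window is that longer inputs have to be cut down to fit. The sketch below assumes the simplest policy, keeping only the most recent tokens; that policy and the name `fit_to_context` are illustrative assumptions, not something the article specifies.

```python
CONTEXT_WINDOW = 2048  # GPT3's width in tokens, as stated above


def fit_to_context(tokens, window=CONTEXT_WINDOW):
    """Keep only the most recent `window` tokens (assumed truncation policy)."""
    return tokens[-window:]


long_input = list(range(3000))        # stand-in for 3000 token ids
visible = fit_to_context(long_input)
print(len(visible), visible[0])
```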

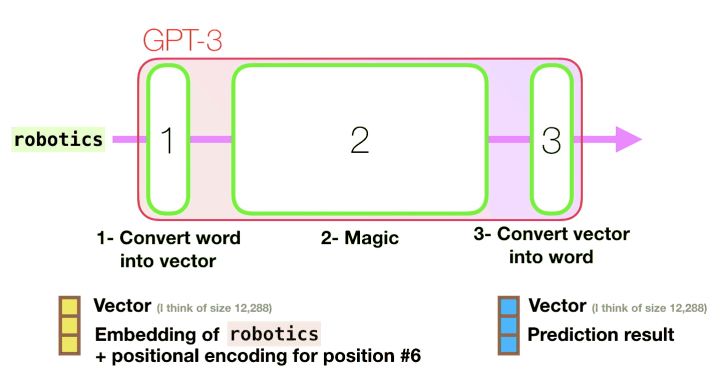

Let's follow the purple track. How does the system process the word "robotics" and produce "A"?

High-level steps:

- Convert the word to a vector (a list of numbers) that represents the word

- Compute the prediction

- Convert the resulting vector to a word
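The three steps above can be sketched end to end with a toy vocabulary. Everything here is hypothetical: the three-word vocabulary, the one-hot `EMBEDDINGS`, and the hand-written `TOY_WEIGHTS` matrix stand in for learned values, and a single matrix multiplication stands in for the whole decoder stack.

```python
# Step 1 data: each word maps to a vector (hand-picked, not learned).
EMBEDDINGS = {
    "a":     [1.0, 0.0, 0.0],
    "robot": [0.0, 1.0, 0.0],
    "must":  [0.0, 0.0, 1.0],
}

# Step 2 data: a stand-in for the model's computation.
TOY_WEIGHTS = [
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [1.0, 0.0, 0.0],
]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def embed(word):
    """Step 1: convert the word to a vector."""
    return EMBEDDINGS[word]


def predict(vector):
    """Step 2: compute the prediction (here, one vector-matrix product)."""
    return [dot(vector, [row[j] for row in TOY_WEIGHTS])
            for j in range(len(TOY_WEIGHTS[0]))]


def unembed(vector):
    """Step 3: pick the word whose embedding best matches the result."""
    return max(EMBEDDINGS, key=lambda word: dot(vector, EMBEDDINGS[word]))


def next_word(word):
    return unembed(predict(embed(word)))


print(next_word("a"), next_word("robot"))
```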

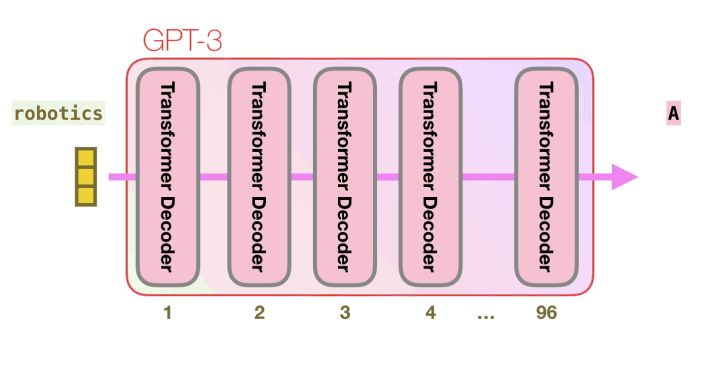

The important calculations of GPT3 take place inside its stack of 96 Transformer decoder layers.

See all these layers? This is the "depth" in "deep learning".

Each of these layers has its own 1.8B parameters for its calculations. That is where the "magic" happens. This is a high-level view of that process:

You can find a detailed explanation of everything inside the decoder in the article The Illustrated GPT2.

The difference in GPT3 is its alternating dense and sparse self-attention layers.
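The layer figures quoted above (96 layers, about 1.8B parameters each) can be sanity-checked with a common rule of thumb: a Transformer layer holds roughly 12 × d_model² weights (4 × d_model² for attention plus 8 × d_model² for the feed-forward block), ignoring biases and layer norms. The hidden size of 12288 comes from the GPT-3 paper rather than from this article, so treat the calculation as a rough estimate under that assumption.

```python
N_LAYERS = 96        # from the article
D_MODEL = 12288      # assumption: hidden size reported in the GPT-3 paper

# Rule of thumb: ~4*d^2 attention weights + ~8*d^2 feed-forward weights.
per_layer = 12 * D_MODEL ** 2
all_layers = N_LAYERS * per_layer

print(f"per layer:  {per_layer / 1e9:.2f}B")
print(f"all layers: {all_layers / 1e9:.1f}B")
```

The stack alone comes to roughly 174B by this estimate; the small remainder up to 175B would sit outside the layers (for example in the token embeddings), which this sketch does not count.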

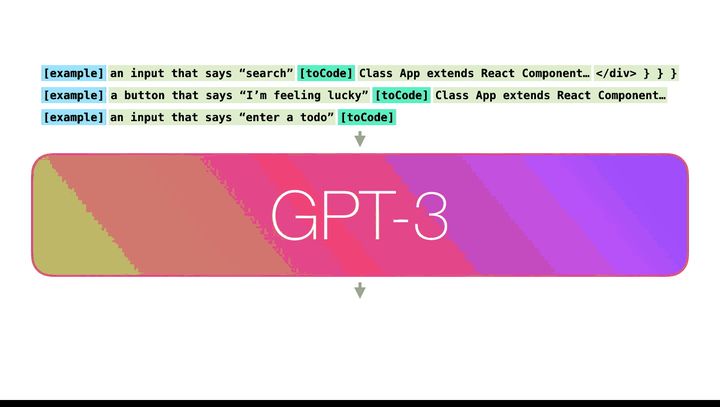

This is an X-ray of an input and a response ("Okay human") inside GPT3. Notice how every token flows through the entire layer stack. We don't care about the output for the first words. Once the input is done, we start caring about the output. We feed every output word back into the model.

In the React code generation example, the description would be the input prompt (shown in green), along with, I believe, a couple of description=>code examples. The React code would then be generated like the pink tokens here, one token after another.

My assumption is that the priming examples and the description are appended as input, with specific tokens separating the examples from the results. This is then fed into the model.
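That assumed prompt layout can be written down as a short helper. The separator strings, the function name `build_prompt`, and the sample examples are all hypothetical; the article does not say which tokens are actually used.

```python
def build_prompt(examples, description, arrow=" => ", separator="\n###\n"):
    """Join description/code examples, then leave the last code slot empty."""
    parts = [f"{desc}{arrow}{code}" for desc, code in examples]
    # The final entry has no code: the model is expected to continue from here.
    parts.append(f"{description}{arrow}")
    return separator.join(parts)


examples = [
    ("a button that says hello", "<button>hello</button>"),
    ("a large heading that says welcome", "<h1>welcome</h1>"),
]
prompt = build_prompt(examples, "a red button")
print(prompt)
```

The model would then generate the missing code token by token, continuing from the end of the prompt.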

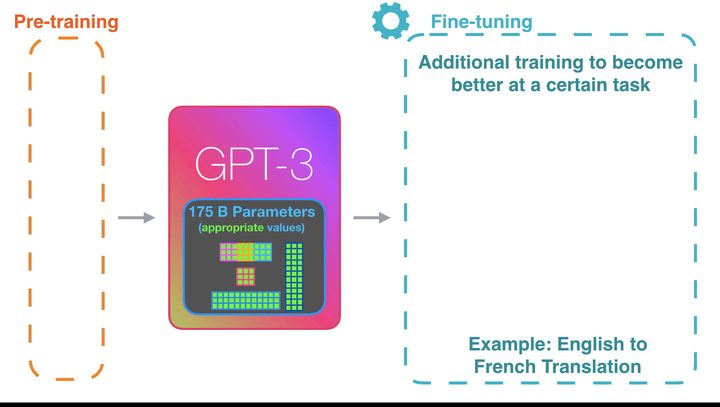

It is impressive that this works the way it does, and fine-tuning has not even been rolled out for GPT3 yet. Once it is, the possibilities will be even more amazing.

Fine-tuning actually updates the model's weights to make the model perform better on a specific task.

reference :

边栏推荐

猜你喜欢

Operation suggestions for today's spot Silver

![[2022 ciscn] replay of preliminary web topics](/img/1c/4297379fccde28f76ebe04d085c5a4.png)

[2022 ciscn] replay of preliminary web topics

@component(““)

Robot technology innovation and practice old version outline

![[guess-ctf2019] fake compressed packets](/img/a2/7da2a789eb49fa0df256ab565d5f0e.png)

[guess-ctf2019] fake compressed packets

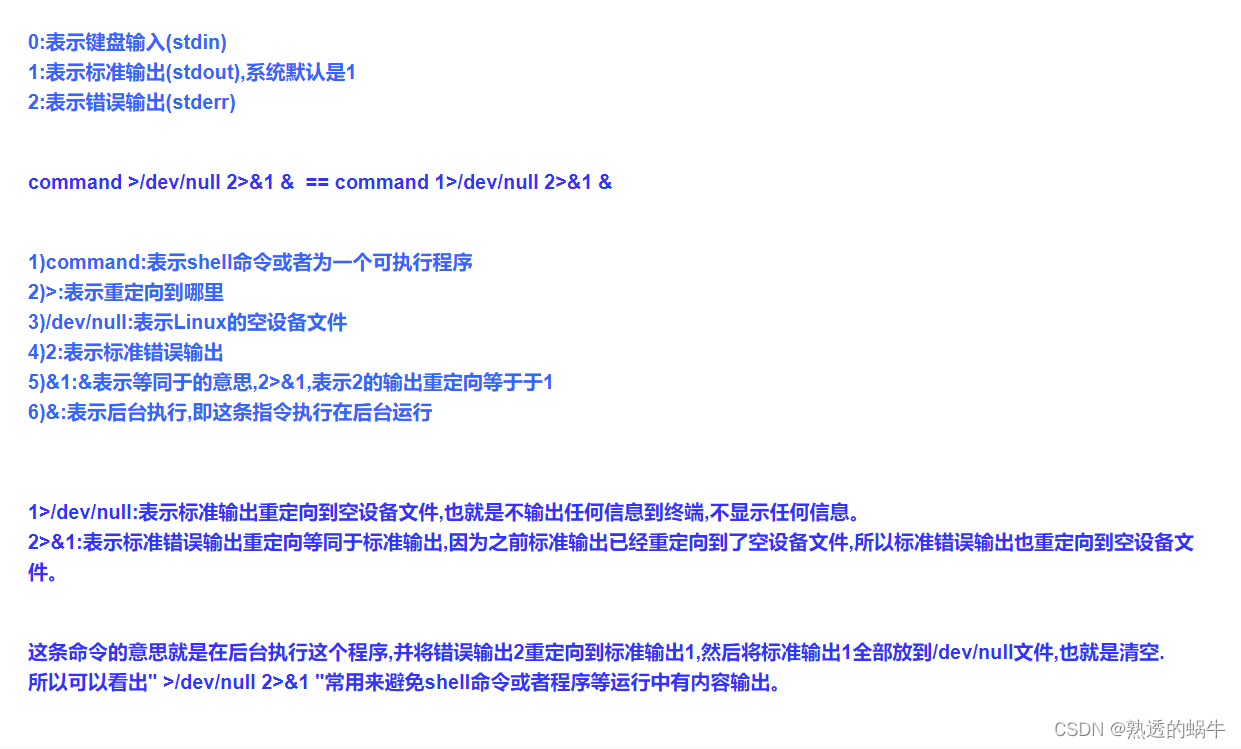

Jenkins远程构建项目超时的问题

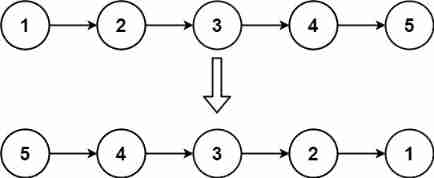

Leetcode-206. Reverse Linked List

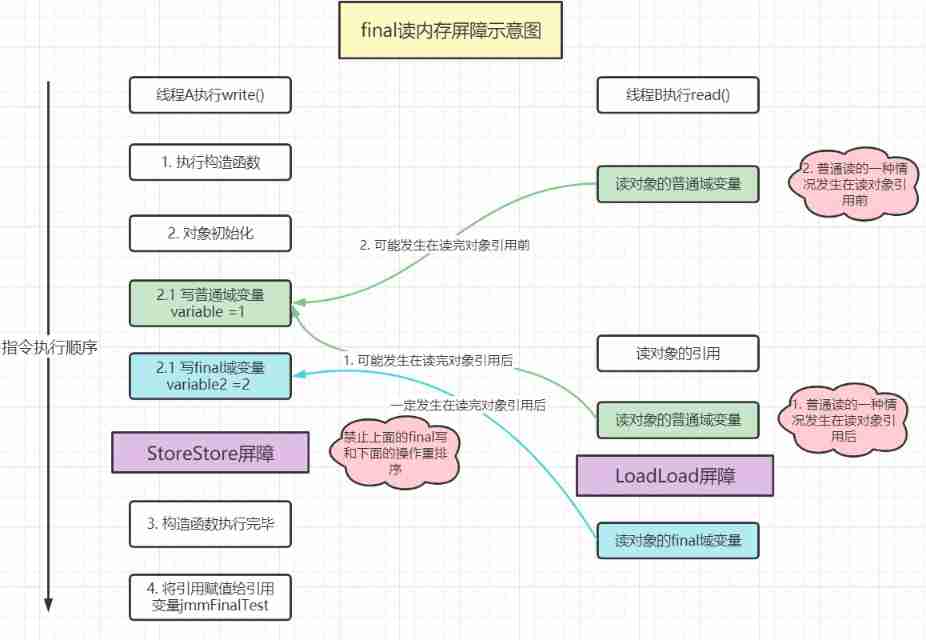

About some details of final, I have something to say - learn about final CSDN creation clock out from the memory model

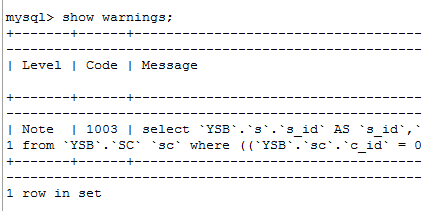

SQL优化的魅力!从 30248s 到 0.001s

Tencent's one-day life

随机推荐

Robot technology innovation and practice old version outline

[2022 CISCN]初赛 web题目复现

php导出百万数据

[webrtc] m98 Screen and Window Collection

[P2P] local packet capturing

Common validation comments

Technology cloud report: from robot to Cobot, human-computer integration is creating an era

nacos

4、 High performance go language release optimization and landing practice youth training camp notes

Jenkins远程构建项目超时的问题

Mysql高低版本切换需要修改的配置5-8(此处以aicode为例)

JSON introduction and JS parsing JSON

1141_ SiCp learning notes_ Functions abstracted as black boxes

Wx is used in wechat applet Showtoast() for interface interaction

vus. Precautions for SSR requesting data in asyndata function

1140_ SiCp learning notes_ Use Newton's method to solve the square root

pytorch 参数初始化

What is the interval in gatk4??

A concurrent rule verification implementation

Detailed explanation of uboot image generation process of Hisilicon chip (hi3516dv300)