当前位置:网站首页>Feature Engineering Study Notes

Feature Engineering Study Notes

2022-08-03 12:12:00 【Sheep baa baa baa】

Some functions that operate on features of the data

1.Encoding for strings

读取数据

import pandas as pd

vg_df =pd.read_csv(,encoding='ISO-8859-1')

vg_df[['Name','Platform','Year','Genre','Publisher']].iloc[1:7]LabelEncoder:Converts the values of different text properties to numbers.

import numpy as np

genre=np.unique(vg_df['Genre'])

from sklearn.preprocessing import LabelEncoder

gle =LabelEncoder()

gle_label =gle.fit_transform(vg_df['Genre'])

genre_mapping = {index:label for index,label in enumerate(gle.classes_)}

gle.classes_可以通过gle.classes_Output the trained classification.

poke_df =pd.read_csv(,encoding='ISO-8859-1')

poke_df.head()

gle =LabelEncoder()

generation_label =gle.fit_transform(poke_df['Generation'])

poke_df['Generation_label']=generation_label

poke_dfOneHotEncoder:独热编码,Perform numerical mapping operations on the data,One-hot encoding can expand all possible cases.

from sklearn.preprocessing import OneHotEncoder

onehot_encoder = OneHotEncoder()

gen_feature_arr = onehot_encoder.fit_transform(poke_df[['Generation']]).toarray()

gen_feature_labels = list(gle.classes_)

gen_features = pd.DataFrame(gen_feature_arr,columns =gen_feature_labels)

poke_df_ohe = pd.concat([poke_df,gen_features],axis=1)

poke_df_ohe或者可以通过pd.get_dummies进行操作

gen_ohe = pd.get_dummies(poke_df['Generation'])

pd.concat([gen_ohe,poke_df],axis=1)

gen_onehot_features = pd.get_dummies(poke_df['Generation'],prefix='one_hot')##prefitAdd prefix to

pd.concat([poke_df,gen_onehot_features],axis=1)2.Binary and polynomial features

Operates on binary features

popsong_df = pd.read_csv(,encoding='utf-8')

popsong_df.head()

watched = np.array(popsong_df['listen_count'])

watched[watched >=1]=1

popsong_df['watched']=watched

popsong_df

from sklearn.preprocessing import Binarizer

bn = Binarizer(threshold=0.9)

pd_watched = bn.transform(popsong_df['listen_count'].values.reshape(-1,1))

popsong_df['pd_watched']=pd_watched

popsong_df.head()可以使用PolynomialfeatureChanges to higher power data into new features

poke_df =pd.read_csv(,encoding='utf-8')

atk_df = poke_df[['Attack','Defense']]

atk_df.head()

from sklearn.preprocessing import PolynomialFeatures

pf = PolynomialFeatures(degree=2,interaction_only=False,include_bias=False)

res =pf.fit_transform(atk_df)3.Discretize continuous values,Divide the data into intervals

fcc_survey_df =pd.read_csv(,encoding='utf-8')

fcc_survey_df.head()

fcc_survey_df['Age_bin_round']= np.array(np.floor(np.array(fcc_survey_df['Age'])/10.))##np.floor为向下取整

fcc_survey_df[['Age','Age_bin_round']]

quantile_list=[0,.25,.5,.75,1.]

quantiles =fcc_survey_df['Income'].quantile(quantile_list)

quantiles

quantiles_label=['0~25','25~50','50~75','75~100']

fcc_survey_df['income_quantile_range']=pd.qcut(fcc_survey_df['Income'],q=quantile_list)

fcc_survey_df['income_quantile_label'] =pd.qcut(fcc_survey_df['Income'],q=quantile_list,labels = quantiles_label)

fcc_survey_df4.Operates on time series using logarithms

fcc_survey_df['income_log'] = np.log((1+fcc_survey_df['Income']))

income_log_mean = np.round(np.mean(fcc_survey_df['income_log']),2)

import datetime

import numpy as np

import pandas as pd

from dateutil.parser import parse

import pytz

time_stamps = ['2015-03-08 10:30:00.360000+00:00', '2017-07-13 15:45:05.755000-07:00',

'2012-01-20 22:30:00.254000+05:30', '2016-12-25 00:30:00.000000+10:00']

df = pd.DataFrame(time_stamps, columns=['Time'])

df

ts_objs = np.array([pd.Timestamp(item) for item in np.array(df.Time)])

df['Year'] = df['TS_obj'].apply(lambda d: d.year)

df['Month'] = df['TS_obj'].apply(lambda d: d.month)

df['Day'] = df['TS_obj'].apply(lambda d: d.day)

df['DayOfWeek'] = df['TS_obj'].apply(lambda d: d.dayofweek)

df['DayOfYear'] = df['TS_obj'].apply(lambda d: d.dayofyear)

df['WeekOfYear'] = df['TS_obj'].apply(lambda d: d.weekofyear)

df['Quarter'] = df['TS_obj'].apply(lambda d: d.quarter)

df[['Time', 'Year', 'Month', 'Day', 'Quarter',

'DayOfWeek', 'DayOfYear', 'WeekOfYear']]文本特征处理

1.建立词袋模型,进行分词操作

import pandas as pd

import numpy as np

import re

import nltk

corpus = ['The sky is blue and beautiful.',

'Love this blue and beautiful sky!',

'The quick brown fox jumps over the lazy dog.',

'The brown fox is quick and the blue dog is lazy!',

'The sky is very blue and the sky is very beautiful today',

'The dog is lazy but the brown fox is quick!'

]

labels = ['weather', 'weather', 'animals', 'animals', 'weather', 'animals']

corpus = np.array(corpus)

corpus_df = pd.DataFrame({'Document': corpus,

'Category': labels})

corpus_df = corpus_df[['Document', 'Category']]

corpus_df

#加载停用词

wpt = nltk.WordPunctTokenizer()

stop_words = nltk.corpus.stopwords.words('english')

def normalize_document(doc):

# 去掉特殊字符

doc = re.sub(r'[^a-zA-Z0-9\s]', '', doc, re.I)

# 转换成小写

doc = doc.lower()

doc = doc.strip()

# 分词

tokens = wpt.tokenize(doc)

# 去停用词

filtered_tokens = [token for token in tokens if token not in stop_words]

# 重新组合成文章

doc = ' '.join(filtered_tokens)

return doc

norm_corpus = normailized_document(corpus)

norm_corpus2.Construct methods using text features

tf-idf:Don't worry about word frequency,Also consider the importance of words

from sklearn.feature_extraction.text import TfidfVectorizer

tv = TfidfVectorizer(min_df=0.,max_df=1.,use_idf=True)

tv_matrix = tv.fit_transform(norm_corpus)

tv_matrix=tv_matrix.toarray()

vocab = tv.get_feature_names()

pd.DataFrame(np.round(tv_matrix,2),columns=vocab)Similarity feature:Convert features to branch data,Then calculate its similarity,这里需要tf-idf值

##文本Similarity feature

from sklearn.metrics.pairwise import cosine_similarity

similarity_matrix =consine_similarity(tv_matrix)特征聚类:Divide the data into heaps,Finally give each pile an actual label.

##特征聚类

from sklearn.cluster import KMeans

km = KMeans(n_cluster=2)

km.fit_transform(similarity_df)

cluster_labels -km.labels_

cluster_labels =pd.DataFrame(cluster_labels,columns=['ClusterLabel'])

pd.concat([corpus_df,cluster_labels],axis=1)Build topic models:Topic models are unsupervised methods,The input is the processed corpus,You can get the topic type and the weight of each word.

##主题模型

from sklearn.decomposition import LatentDirichletAllocation

lda =LaatentDirichletAllocation(n_topics=2,max_iter=100,random_state=42)

dt_matrix =lda.fit_transform(tv_matrix)

features =pd.DataFrame(dt_matrix,columns=['T1','T2'])

tt_matrix =lda.components_

for topic_weights in tt_matrix:

topic =[(token,weight) for token,weight in zip(vocab,topic_weights)]

topic =sorted(topic,key =lambda x :-x[1])

topic =[item for item in topic if item[1] >0.6]

print(topuc)Build a word vector model:Choose to initialize each word,The actual spatial meaning is given to each word.

##词向量模型

from gensim.models import word2vec

wpt = nltk.wordPunctTokenizer()

tokenized_corpus =[wpt.tokenized(documents) for documents in norm_corpus]

feature_size =10##词向量维度

windows_context =10##滑动窗口

min_word_count =1##最小词频

w2v_model =word2vec.WoRD2vEC(tokenized_corpus,size =feature_size,windows =window_context,min_count =min_word_count)

w2v_model.wv['sky']边栏推荐

- 类型转换、常用运算符

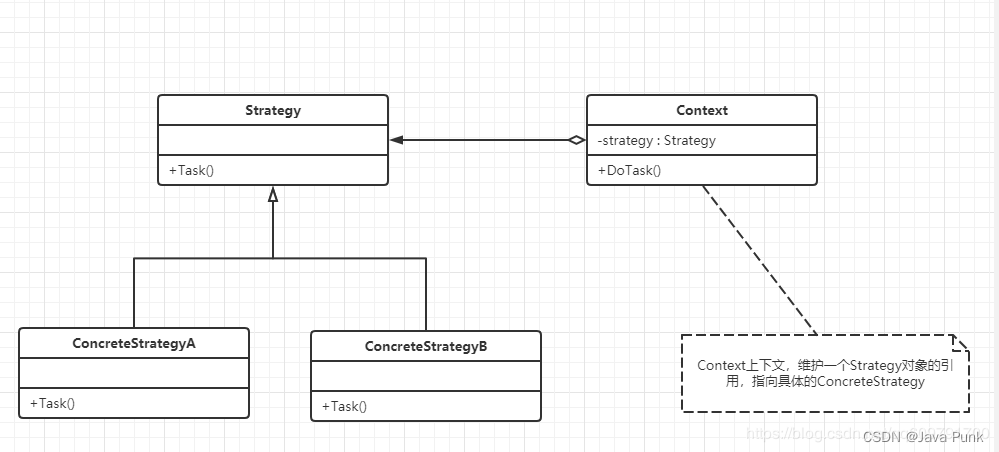

- 别再用if-else了,分享一下我使用“策略模式”的项目经验...

- After completing the interview and clearance collection of Alibaba, I successfully won the 15th Offer this year

- LeetCode-1161. 最大层内元素和

- 距LiveVideoStackCon 2022 上海站开幕还有3天!

- 深度学习跟踪DLT (deep learning tracker)

- 流式编程使用场景

- 进程内存

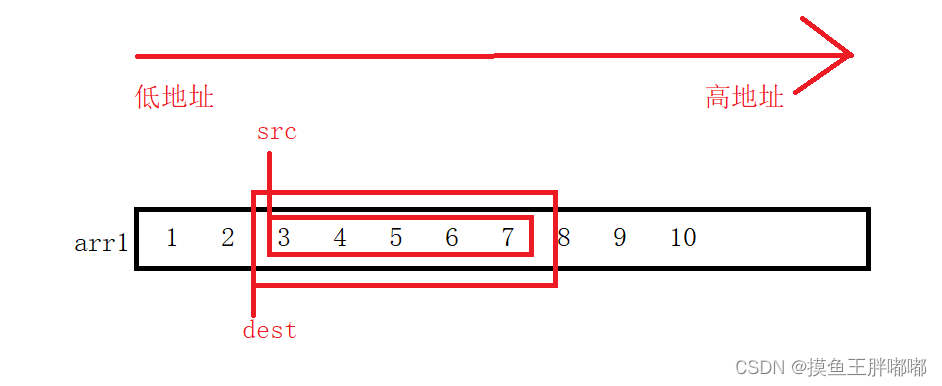

- C language advanced article: memory function

- 基于Sikuli GUI图像识别框架的PC客户端自动化测试实践

猜你喜欢

nacos app

fastposter v2.9.0 programmer must-have poster generator

如何免费获得一个市全年的气象数据?降雨量气温湿度太阳辐射等等数据

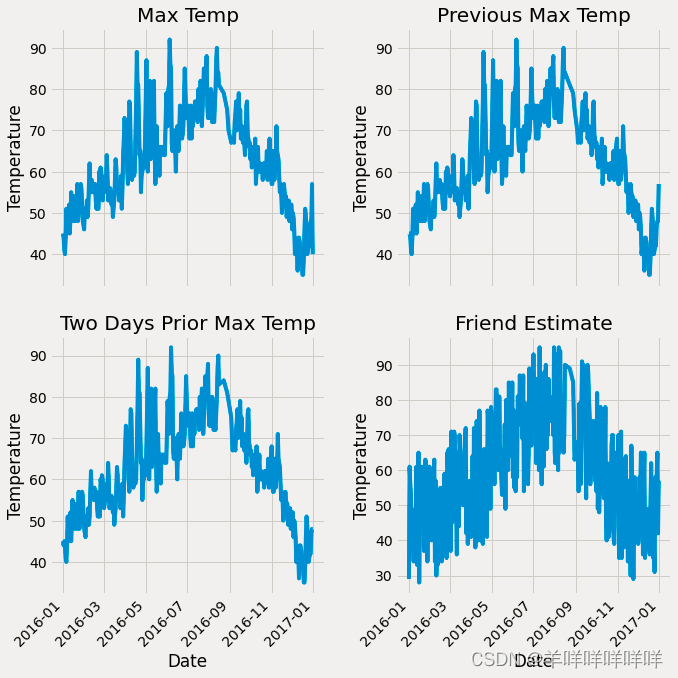

随机森林项目实战---气温预测

C language advanced article: memory function

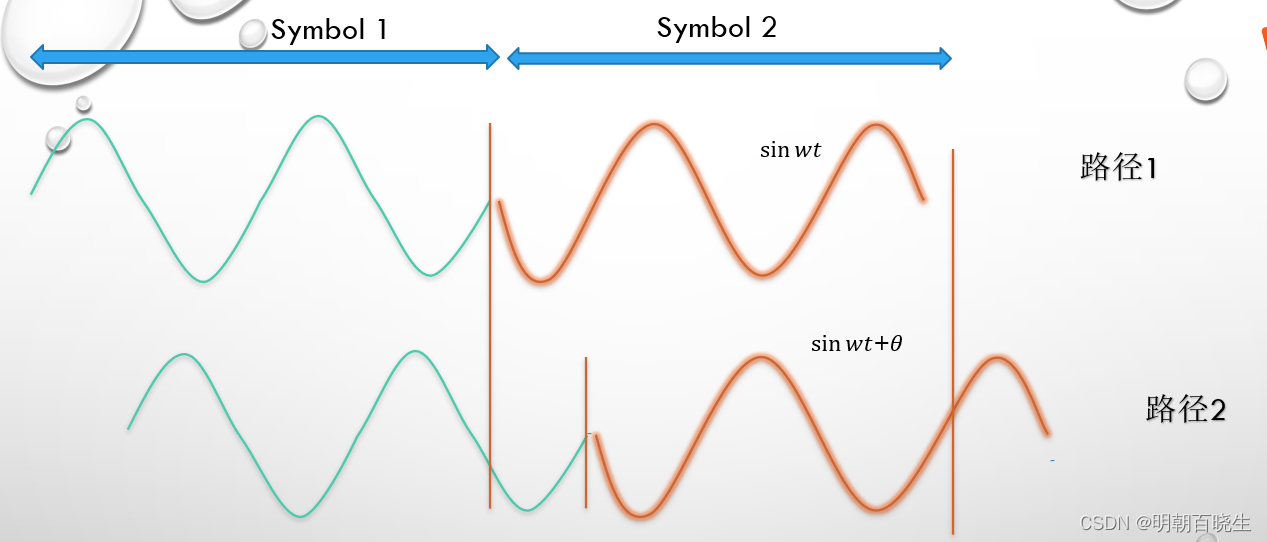

OFDM 十六讲 4 -What is a Cyclic Prefix in OFDM

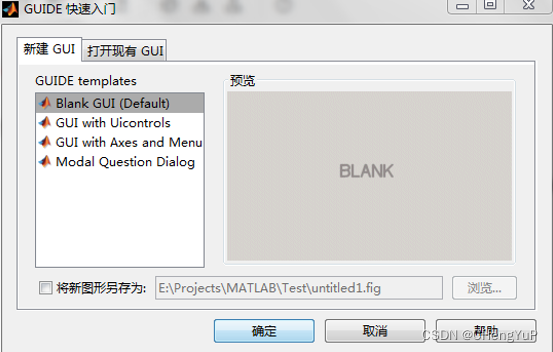

Matlab学习13-图像处理之可视化GUI程序

别再用if-else了,分享一下我使用“策略模式”的项目经验...

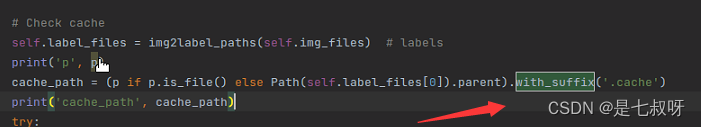

YOLOv5训练数据提示No labels found、with_suffix使用、yolov5训练时出现WARNING: Ignoring corrupted image and/or label

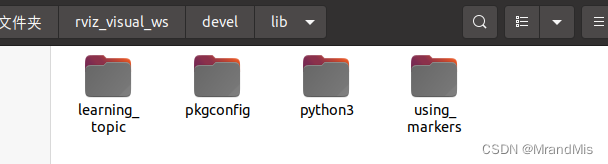

ROS中编译通过但是遇到可执行文件找不到的问题

随机推荐

苹果发布 AI 生成模型 GAUDI,文字生成 3D 场景

数据库系统原理与应用教程(076)—— MySQL 练习题:操作题 160-167(二十):综合练习

App自动化测试怎么做?实战分享App自动化测试全流程

JUC(三):锁核心类AQS ing

一个扛住 100 亿次请求的红包系统,写得太好了!!

当前页面的脚本发生错误如何解决_电脑出现当前页面脚本错误怎么办

Vs Shortcut Keys---Explore Different Programming

【HCIP持续更新】STP协议相关保护机制

TiKV & TiFlash 加速复杂业务查询丨TiFlash 应用实践

特征工程学习笔记

mysql进阶(二十四)防御SQL注入的方法总结

Matlab学习12-图像处理之图像增强

hystrix 服务熔断和服务降级

数据库系统原理与应用教程(074)—— MySQL 练习题:操作题 141-150(十八):综合练习

622. 设计循环队列

特征降维学习笔记(pca和lda)(1)

解决oracle安装在linux中jdk的冲突

flink流批一体有啥条件,数据源是从mysql批量分片读取,为啥设置成批量模式就不行

R语言拟合ARIMA模型并使用拟合模型进行预测推理、使用autoplot函数可视化ARIMA模型预测结果、可视化包含置信区间的预测结果

899. 有序队列 : 最小表示法模板题