
04 Automatic Learning Rate - Learning Notes - Li Hongyi's Deep Learning 2021

2022-06-11 23:16:00 【iioSnail】

Next: 05 Classification - Learning Notes - Li Hongyi's Deep Learning 2021

Contents of this section and related links

Common strategies for automatically adjusting the learning rate

Class notes

When training gets stuck (the loss stops decreasing), the gradient is not necessarily too small. The learning rate may instead be too large, making the parameters oscillate between the two walls of a valley so that the minimum is never reached.

The corresponding gradient plot is shown in the figure below:

[Figure: the x-axis is the number of updates, the y-axis is the gradient magnitude]
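A toy illustration of the oscillation described above, as a minimal sketch: it assumes a one-dimensional loss $f(w) = a w^2$, whose gradient is $2aw$ (the helper `gradient_descent_1d` and all numbers are hypothetical, chosen only for illustration). With $\eta = 1/a$ every step maps $w$ to $-w$, so the parameter bounces across the valley forever while the gradient magnitude stays at $2a|w_0|$ instead of shrinking.

```python
def gradient_descent_1d(a, eta, w0, steps):
    """Plain gradient descent on the toy loss f(w) = a * w**2 (gradient 2*a*w).

    Returns the (w, gradient) pairs visited, including the starting point.
    """
    w = w0
    history = [(w, 2 * a * w)]
    for _ in range(steps):
        grad = 2 * a * w
        w = w - eta * grad
        history.append((w, 2 * a * w))
    return history


# eta = 1/a: each step computes w - (1/a) * 2*a*w = -w, so w bounces between
# +w0 and -w0 and the gradient never gets smaller.
for w, g in gradient_descent_1d(a=1.0, eta=1.0, w0=1.0, steps=4):
    print(f"w = {w:+.1f}, gradient = {g:+.1f}")

# A smaller step size moves steadily toward the minimum at w = 0.
print(gradient_descent_1d(a=1.0, eta=0.1, w0=1.0, steps=50)[-1])
```

With $\eta = 0.1$ each update multiplies $w$ by $1 - 2a\eta = 0.8$, so the iterate shrinks toward the minimum instead of oscillating.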

The learning rate is adjusted automatically according to the number of iterations, the current gradient and other factors. The update rule for $\theta$ becomes:

$$\theta_i^{t+1}\leftarrow \theta_i^t - \frac{\eta}{\sigma_i^t}g_i^t$$

where $g_i^t$ is the gradient of the loss with respect to $\theta_i$ at update $t$.
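A minimal sketch of this update rule, assuming parameters, gradients and $\sigma$ values are plain Python lists of floats (`adaptive_update` is a hypothetical helper, not a library function). The example values are made up to show why dividing by $\sigma_i^t$ helps: when $\sigma_i^t$ tracks the size of each parameter's gradient, a steep direction and a flat direction end up taking steps of similar size.

```python
def adaptive_update(theta, grads, sigmas, eta):
    """One update theta_i <- theta_i - (eta / sigma_i) * g_i, applied per parameter i."""
    return [th - (eta / s) * g for th, g, s in zip(theta, grads, sigmas)]


# Hypothetical values: parameter 0 sits on a steep slope, parameter 1 on a flat one.
theta = [1.0, 1.0]
grads = [10.0, 0.1]
# If each sigma_i matches the size of that parameter's gradient, both move by about eta.
print(adaptive_update(theta, grads, sigmas=[10.0, 0.1], eta=0.1))
```

With every $\sigma_i^t = 1$ the rule reduces to plain gradient descent with learning rate $\eta$.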

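In Root Mean Square, RMSProp and Adam, the learning rate is adapted per parameter through $\sigma_i^t$; Learning Rate Decay and Warm Up instead make $\eta$ itself depend on the number of updates $t$.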

Common adjustment strategies are:

- Root Mean Square: takes the current gradient and all past gradients into account, weighting them equally

- RMSProp: focuses on the current gradient and gives less weight to past gradients

- Adam: combines RMSProp and Momentum

- Learning Rate Decay: as the number of updates grows we get closer to the target, so the learning rate is made smaller

- Warm Up: the learning rate starts small and increases with the number of iterations; after some point it decreases as the iterations continue
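Both scheduling strategies in the list above make $\eta$ a function of the update count $t$. The sketch below shows one possible choice for each, not necessarily the schedule used in the course: exponential decay for Learning Rate Decay, and linear warm-up followed by $1/\sqrt{t}$ decay for Warm Up. The function names, `decay_rate=0.96` and `warmup_steps=1000` are illustrative assumptions.

```python
import math


def decayed_lr(eta0, t, decay_rate=0.96):
    """Learning Rate Decay: shrink eta exponentially with the update count t."""
    return eta0 * decay_rate ** t


def warmup_lr(eta_peak, t, warmup_steps=1000):
    """Warm Up: grow linearly for warmup_steps updates, then decay like 1/sqrt(step)."""
    step = t + 1  # 1-based step count avoids division by zero at t = 0
    if step <= warmup_steps:
        return eta_peak * step / warmup_steps
    # Both branches equal eta_peak at step == warmup_steps, so there is no jump.
    return eta_peak * math.sqrt(warmup_steps / step)


lrs = [warmup_lr(1e-3, t, warmup_steps=100) for t in range(1000)]
print(lrs[0], lrs[99], lrs[999])
```

With `warmup_steps=100` the learning rate rises to `eta_peak` on the 100th update (`t = 99`) and decreases afterwards, which gives the rise-then-fall shape described for Warm Up.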

Root Mean Square formula:

$$\sigma_i^t=\sqrt{\frac{1}{t+1}\sum_{k=0}^{t}\left(g_i^k\right)^2}$$
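A minimal sketch that computes $\sigma_i^t$ for a single parameter from its gradient history (the helper `rms_sigmas` is hypothetical). It keeps a running sum of squared gradients, so each step costs O(1).

```python
import math


def rms_sigmas(grads):
    """sigma^t = sqrt((1 / (t + 1)) * sum_{k=0..t} (g^k)^2) for one parameter, for every t."""
    sigmas = []
    sum_sq = 0.0
    for t, g in enumerate(grads):
        sum_sq += g * g  # every past gradient keeps the same weight
        sigmas.append(math.sqrt(sum_sq / (t + 1)))
    return sigmas


print(rms_sigmas([3.0, 4.0]))
# A long run of large gradients followed by one small gradient:
print(rms_sigmas([10.0] * 99 + [0.1])[-1])
```

Because every past gradient carries the same weight, a sudden change is absorbed slowly: after 99 gradients of size 10, a gradient of 0.1 still leaves $\sigma$ near 9.95, so the step stays small even though the surface has become flat.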

RMSProp formula:

$$\sigma_i^t=\sqrt{\alpha\left(\sigma_i^{t-1}\right)^2+(1-\alpha)\left(g_i^t\right)^2}$$

where $\alpha$ is a hyperparameter to be tuned, $0<\alpha<1$.
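The same kind of sketch for RMSProp (the helper `rmsprop_sigmas` is hypothetical). The formula only defines $\sigma_i^t$ in terms of $\sigma_i^{t-1}$, so the code assumes the starting value $\sigma_i^0 = |g_i^0|$; the default `alpha=0.9` is likewise an arbitrary choice.

```python
import math


def rmsprop_sigmas(grads, alpha=0.9):
    """sigma^t = sqrt(alpha * (sigma^(t-1))^2 + (1 - alpha) * (g^t)^2) for one parameter."""
    sigmas = []
    sigma = None
    for g in grads:
        if sigma is None:
            sigma = abs(g)  # assumption: sigma^0 = sqrt((g^0)^2) = |g^0|
        else:
            # alpha weights the history, (1 - alpha) weights the newest gradient
            sigma = math.sqrt(alpha * sigma ** 2 + (1 - alpha) * g ** 2)
        sigmas.append(sigma)
    return sigmas


print(rmsprop_sigmas([10.0] * 99 + [0.1], alpha=0.1)[-1])
```

For example, after 99 gradients of size 10 followed by one of 0.1, `alpha=0.1` gives $\sigma \approx 3.16$ at the last step, because the newest gradient carries weight $1-\alpha = 0.9$; an equally weighted Root Mean Square over the same history would stay near 9.95.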

For Adam, it is recommended to use PyTorch's default parameters.

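Adam's adjustment strategy keeps a Momentum-style running average of the gradients together with an RMSProp-style running average of the squared gradients.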
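A from-scratch sketch of Adam on plain Python floats, to show how the two ingredients fit together: `m` is the Momentum-style running average of the gradients and `v` is the RMSProp-style running average of the squared gradients, with bias correction because both start at zero. The class name and its list-based interface are hypothetical and are not PyTorch's API; only the default values (`lr=1e-3`, `betas=(0.9, 0.999)`, `eps=1e-8`) are chosen to match the defaults of `torch.optim.Adam`.

```python
import math


class Adam:
    """Minimal Adam optimizer for a list of float parameters (illustrative only)."""

    def __init__(self, params, lr=1e-3, betas=(0.9, 0.999), eps=1e-8):
        self.params = list(params)
        self.lr = lr
        self.beta1, self.beta2 = betas
        self.eps = eps
        self.m = [0.0] * len(self.params)  # Momentum: running mean of gradients
        self.v = [0.0] * len(self.params)  # RMSProp-like running mean of squared gradients
        self.t = 0

    def step(self, grads):
        self.t += 1
        for i, g in enumerate(grads):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            # Bias correction: m and v start at 0, so early averages are scaled up.
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            self.params[i] -= self.lr * m_hat / (math.sqrt(v_hat) + self.eps)
        return self.params


# Usage: minimize (w - 3)^2, whose gradient is 2 * (w - 3).
opt = Adam([0.0], lr=0.1)
for _ in range(1000):
    w = opt.params[0]
    opt.step([2 * (w - 3.0)])
print(opt.params[0])
```

On the first step $\hat m / \sqrt{\hat v}$ is essentially $g/|g|$, so each parameter moves by almost exactly `lr` regardless of how large the gradient is; afterwards the step size is governed by the ratio of the two running averages.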

边栏推荐

- MySQL 8.0 decompressed version installation tutorial

- 【Day9 文献泛读】On the (a)symmetry between the perception of time and space in large-scale environments

- Analysis on the market prospect of smart home based on ZigBee protocol wireless module

- Alibaba cloud server MySQL remote connection has been disconnected

- A new product with advanced product power, the new third generation Roewe rx5 blind subscription is opened

- SDNU_ ACM_ ICPC_ 2022_ Weekly_ Practice_ 1st (supplementary question)

- Research Report on development trend and competitive strategy of global reverse osmosis membrane cleaning agent industry

- Common problems of Converged Communication published in February | Yunxin small class

- 2022年安全員-A證考題模擬考試平臺操作

- The top ten trends of 2022 industrial Internet security was officially released

猜你喜欢

How to make scripts executable anywhere

2022年安全员-B证理论题库及模拟考试

Simulated examination question bank and simulated examination of 2022 crane driver (limited to bridge crane)

Games-101 闫令琪 5-6讲 光栅化处理 (笔记整理)

Implementation scheme of iteration and combination pattern for general tree structure

Pourquoi Google Search ne peut - il pas Pager indéfiniment?

The second bullet of in-depth dialogue with the container service ack distribution: how to build a hybrid cloud unified network plane with the help of hybridnet

2022年R1快开门式压力容器操作考题及在线模拟考试

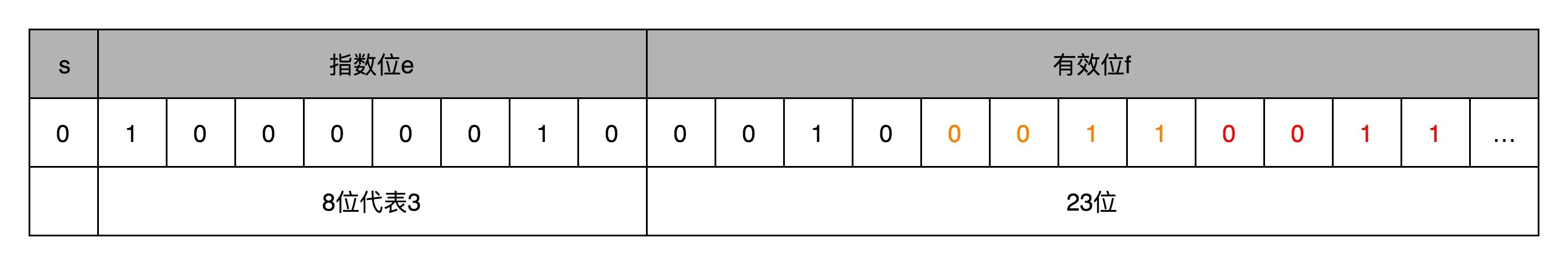

16 | floating point numbers and fixed-point numbers (Part 2): what is the use of a deep understanding of floating-point numbers?

mysql5和mysql8同时安装

随机推荐

How to completely modify the user name in win10 system and win11 system

2022年安全员-B证理论题库及模拟考试

[Day2 intensive literature reading] time in the mind: using space to think about time

Queue (C language)

Method for debugging wireless data packet capturing of Internet of things zigbee3.0 protocol e18-2g4u04b module

6. project launch

Google搜索为什么不能无限分页?

2022 high voltage electrician test question simulation test question bank and online simulation test

How to make scripts executable anywhere

【delphi】判断文件的编码方式(ANSI、Unicode、UTF8、UnicodeBIG)

Is the product stronger or weaker, and is the price unchanged or reduced? Talk about domestic BMW X5

2022 safety officer-a certificate test question simulation test platform operation

[naturallanguageprocessing] [multimodal] albef: visual language representation learning based on momentum distillation

The top ten trends of 2022 industrial Internet security was officially released

GMN of AI medicine article interpretation

栈(C语言)

Solr之基礎講解入門

Processus postgresql10

Data processing and visualization of machine learning [iris data classification | feature attribute comparison]

[day13-14 intensive literature reading] cross dimensional magnetic interactions arise from memory interference