MinIO Basics 03: Instant Upload and Chunked Upload of Large Files

2022-08-02 00:17:00 【m0_67402970】

MinIO chunked file upload

1. Front-end page

<!DOCTYPE html>

<html lang="en" xmlns:th="http://www.thymeleaf.org">

<head>

<meta charset="UTF-8">

<title>Title</title>

</head>

<body>

<script type="text/javascript" src="/js/jquery.js" th:src="@{/js/jquery.js}"></script>

<script type="text/javascript" src="/js/spark-md5.min.js" th:src="@{/js/spark-md5.min.js}"></script>

<input type="file" name="file" id="file">

<script>

const baseUrl = "http://localhost:18002";

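/**
 * Compute the file's MD5 chunk by chunk, so the whole file never has to be read into memory at once.
 * @param file the File to hash
 * @param chunkSize chunk size in bytes
 * @returns Promise that resolves to the hex MD5 string
 */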

function calculateFileMd5(file, chunkSize) {

return new Promise((resolve, reject) => {

let blobSlice = File.prototype.slice || File.prototype.mozSlice || File.prototype.webkitSlice;

let chunks = Math.ceil(file.size / chunkSize);

let currentChunk = 0;

let spark = new SparkMD5.ArrayBuffer();

let fileReader = new FileReader();

fileReader.onload = function (e) {

spark.append(e.target.result);

currentChunk++;

if (currentChunk < chunks) {

loadNext();

} else {

let md5 = spark.end();

resolve(md5);

}

};

fileReader.onerror = function (e) {

reject(e);

};

function loadNext() {

let start = currentChunk * chunkSize;

let end = start + chunkSize;

if (end > file.size) {

end = file.size;

}

fileReader.readAsArrayBuffer(blobSlice.call(file, start, end));

}

loadNext();

});

}

/**
 * Compute the file's MD5 using the default chunk size of 2097152 bytes (2 MiB).
 * @param file the File to hash
 * @returns Promise that resolves to the hex MD5 string
 */

function calculateFileMd5ByDefaultChunkSize(file) {

return calculateFileMd5(file, 2097152);

}

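/**
 * Return the file extension in lower case (the text after the last ".").
 */
function getFileType(fileName) {
    // substring() replaces the deprecated substr(); with a single argument both behave the same
    return fileName.substring(fileName.lastIndexOf(".") + 1).toLowerCase();
}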

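// Compute the file's MD5 as soon as a file is selected
document.getElementById("file").addEventListener("change", function () {
    let file = this.files[0];
    if (!file) {
        return; // the file dialog was cancelled, nothing to upload
    }
    calculateFileMd5ByDefaultChunkSize(file).then(md5 => {
        // The MD5 identifies the content; ask the server whether it already has it
        checkMd5(md5, file)
    }).catch(e => {
        // Reading the file failed
        console.error(e);
    });
});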

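/**
 * Ask the server whether a file with this MD5 has already been uploaded.
 *
 * @param md5 MD5 of the file
 * @param file the file that is about to be uploaded
 */
function checkMd5(md5, file) {
    // The back end looks the MD5 up to see whether it is already known
    $.ajax({
        url: baseUrl + "/file/check",
        type: "GET",
        data: {
            md5: md5
        },
        async: true, // asynchronous
        dataType: "json",
        success: function (msg) {
            console.log(msg);
            if (msg.status === 20000) {
                // Instant upload: the file already exists, nothing to send
                console.log("File already exists, no upload needed")
            } else if (msg.status === 40004) {
                // Not found: upload it chunk by chunk, starting with chunk 0
                console.log("File does not exist yet, uploading")
                PostFile(file, 0, md5);
            } else {
                console.log('Unknown error');
            }
        }
    })
}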

/**
 * Upload one chunk, then continue with the next one.
 * @param file the file being uploaded
 * @param i zero-based index of the chunk to send
 * @param md5 MD5 of the whole file
 */
function PostFile(file, i, md5) {
    let name = file.name, // file name
        size = file.size, // total size in bytes
        shardSize = 5 * 1024 * 1024, // chunk size: 5 MiB per chunk
        shardCount = Math.ceil(size / shardSize); // total number of chunks
    if (i >= shardCount) {
        return;
    }
    let start = i * shardSize;
    let end = start + shardSize;
    let packet = file.slice(start, end); // slice() cuts this chunk out of the file
    /* Build the multipart form for this chunk */
    let form = new FormData();
    form.append("md5", md5); // the file's MD5 identifies the upload on the server, so chunks of different files cannot get mixed up
    form.append("data", packet); // the chunk itself
    form.append("name", name);
    form.append("totalSize", size);
    form.append("total", shardCount); // total number of chunks
    form.append("index", i + 1); // 1-based number of this chunk
    $.ajax({
        url: baseUrl + "/file/upload",
        type: "POST",
        data: form,
        //timeout:"10000", // 10-second timeout
        async: true, // asynchronous
        dataType: "json",
        processData: false, // important: tell jQuery not to process the form
        contentType: false, // important: must be false so that the correct Content-Type is generated
        success: function (msg) {
            console.log(msg);
            if (msg.status === 20001) {
                /* This chunk was stored; send the next one */
                PostFile(file, i + 1, md5);
            } else if (msg.status === 50000) {
                /* This chunk failed; retry it once after 2 seconds.
                   setTimeout is used instead of setInterval, which would re-send the
                   chunk every 2 seconds forever and let the retries pile up. */
                setTimeout(function () {
                    PostFile(file, i, md5)
                }, 2000);
            } else if (msg.status === 20002) {
                /* The last chunk was stored; ask the server to merge */
                merge(shardCount, name, md5, getFileType(file.name), file.size)
                console.log("Upload succeeded");
            } else {
                console.log('Unknown error');
            }
        }
    })
}

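/**
 * Ask the server to merge the uploaded chunks.
 * @param shardCount number of chunks
 * @param fileName file name
 * @param md5 MD5 of the file
 * @param fileType file type (extension)
 * @param fileSize file size in bytes
 */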

function merge(shardCount, fileName, md5, fileType, fileSize) {

$.ajax({

url: baseUrl + "/file/merge",

type: "GET",

data: {

shardCount: shardCount,

fileName: fileName,

md5: md5,

fileType: fileType,

fileSize: fileSize

},

// timeout:"10000", // 10-second timeout
async: true, // asynchronous

dataType: "json",

success: function (msg) {

console.log(msg);

}

})

}

</script>

</body>

</html>
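The slicing arithmetic in `PostFile` is easier to check in isolation. The following is a minimal sketch, not part of the original page: `chunkRanges` is a hypothetical helper that returns the byte range of every chunk, numbered from 1 in the same way as the `index` form field.

```javascript
/**
 * Hypothetical helper (not in the original page): list the byte range of each chunk.
 * `end` is exclusive, matching the semantics of Blob.slice(start, end).
 */
function chunkRanges(fileSize, chunkSize) {
  if (!Number.isInteger(chunkSize) || chunkSize <= 0) {
    throw new RangeError("chunkSize must be a positive integer");
  }
  const ranges = [];
  for (let start = 0, index = 1; start < fileSize; start += chunkSize, index++) {
    // The last chunk is usually shorter, so clamp its end to the file size
    ranges.push({ index, start, end: Math.min(start + chunkSize, fileSize) });
  }
  return ranges;
}

// Example: a 12 MiB file cut into 5 MiB chunks
console.log(chunkRanges(12 * 1024 * 1024, 5 * 1024 * 1024));
```

For a 12 MiB file and 5 MiB chunks this yields three ranges, and the last one is only 2 MiB long.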

2. File MD5 check

/**
 * Check by MD5 whether the file has already been uploaded.
 * This is the instant-upload endpoint: on a hit the client can skip the upload.
 * The lookup uses Redis for now; it should later be stored in a database.
 *
 * @param md5 MD5 of the file
 * @return a map with the status code and, on a hit, the stored URL
 */
@GetMapping(value = "/check")
public Map<String, Object> checkFileExists(String md5) {
    Map<String, Object> resultMap = new HashMap<>();
    if (ObjectUtils.isEmpty(md5)) {
        resultMap.put("status", StatusCode.PARAM_ERROR.getCode());
        return resultMap;
    }
    // Look the MD5 up in Redis first
    String url = (String) jsonRedisTemplate.boundHashOps(MD5_KEY).get(md5);
    // Not found: the file has not been uploaded yet
    if (ObjectUtils.isEmpty(url)) {
        resultMap.put("status", StatusCode.NOT_FOUND.getCode());
        return resultMap;
    }
    // Found: the file already exists
    resultMap.put("status", StatusCode.SUCCESS.getCode());
    resultMap.put("url", url);
    return resultMap;

}
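The decision logic of this endpoint is small enough to sketch on its own. The snippet below is only an illustration in JavaScript: a `Map` stands in for the Redis hash, the function name mirrors the Java method, and the numeric codes are the ones from `StatusCode`.

```javascript
// Status codes copied from the article's StatusCode enum
const SUCCESS = 20000;
const PARAM_ERROR = 40000;
const NOT_FOUND = 40004;

/**
 * Sketch of the instant-upload lookup. `md5ToUrl` is a Map that stands in
 * for the Redis hash (an assumption made only for this example).
 */
function checkFileExists(md5ToUrl, md5) {
  if (!md5) {
    return { status: PARAM_ERROR }; // missing or empty parameter
  }
  const url = md5ToUrl.get(md5);
  if (!url) {
    return { status: NOT_FOUND }; // unknown content: the client has to upload
  }
  return { status: SUCCESS, url }; // known content: the client can skip the upload
}

// Example usage with a made-up entry
const known = new Map([["5eb63bbbe01eeaa5b662a2dbf9a0d6fa", "http://example.invalid/a.txt"]]);
console.log(checkFileExists(known, "5eb63bbbe01eeaa5b662a2dbf9a0d6fa"));
console.log(checkFileExists(known, "d41d8cd98f00b204e9800998ecf8427e"));
```

The point of keying on the MD5 is that two uploads with identical content map to the same entry, whatever the file is called.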

3. Chunked file upload

/**
 * Upload one chunk. Intended for large files that the client splits into chunks.
 */
@PostMapping(value = "/upload")
public Map<String, Object> upload(HttpServletRequest req) {
    Map<String, Object> map = new HashMap<>();
    MultipartHttpServletRequest multipartRequest = (MultipartHttpServletRequest) req;
    // The chunk data
    MultipartFile file = multipartRequest.getFile("data");
    // No chunk in the request: report failure (status 50000)
    if (file == null) {
        map.put("status", StatusCode.FAILURE.getCode());
        return map;
    }
    // 1-based number of this chunk
    int index = Integer.parseInt(multipartRequest.getParameter("index"));
    // Total number of chunks
    int total = Integer.parseInt(multipartRequest.getParameter("total"));
    // Original file name
    String fileName = multipartRequest.getParameter("name");
    String md5 = multipartRequest.getParameter("md5");
    // Create a temporary bucket named after the MD5 (nothing happens if it already exists)
    minioTemplate.makeBucket(md5);
    String objectName = String.valueOf(index);
    log.info("index: {}, total:{}, fileName:{}, md5:{}, objectName:{}", index, total, fileName, md5, objectName);
    try {
        // Store the chunk under its number
        OssFile ossFile = minioTemplate.putChunkObject(file.getInputStream(), md5, objectName);
        log.info("{} upload success {}", objectName, ossFile);
        // 20001 for every chunk except the last, 20002 for the last one
        StatusCode status = index < total
                ? StatusCode.ALONE_CHUNK_UPLOAD_SUCCESS
                : StatusCode.ALL_CHUNK_UPLOAD_SUCCESS;
        map.put("status", status.getCode());
    } catch (Exception e) {
        // Log through the logger instead of printStackTrace()
        log.error("Failed to upload chunk {}", objectName, e);
        map.put("status", StatusCode.FAILURE.getCode());
    }
    return map;

}
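The three status codes returned here (20001, 20002, 50000) drive what the browser does next. As a sketch of that client-side decision, the hypothetical pure function `nextStep` below maps a response to the next action. The retry limit and the doubling delay are my own assumptions; the original page simply retries after 2 seconds.

```javascript
// Status codes copied from the article's StatusCode enum
const CHUNK_OK = 20001;
const ALL_CHUNKS_OK = 20002;
const FAILURE = 50000;

/**
 * Hypothetical helper: decide what to do after the server answered for chunk `index`.
 * `attempt` is how many times this chunk has been tried so far (1 = first try).
 * maxAttempts and the exponential delay are illustrative assumptions.
 */
function nextStep(status, index, attempt, maxAttempts = 3, baseDelayMs = 2000) {
  if (status === CHUNK_OK) {
    return { action: "upload", index: index + 1, attempt: 1, delayMs: 0 };
  }
  if (status === ALL_CHUNKS_OK) {
    return { action: "merge" };
  }
  if (status === FAILURE && attempt < maxAttempts) {
    // Retry the same chunk, waiting 2 s, then 4 s, and so on
    return { action: "upload", index, attempt: attempt + 1, delayMs: baseDelayMs * 2 ** (attempt - 1) };
  }
  return { action: "abort" }; // unknown status, or too many failures
}

console.log(nextStep(CHUNK_OK, 0, 1));
console.log(nextStep(FAILURE, 3, 1));
console.log(nextStep(FAILURE, 3, 3));
```

Keeping the decision separate from the AJAX call makes it testable without a server, and bounding the retries avoids hammering a back end that keeps failing.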

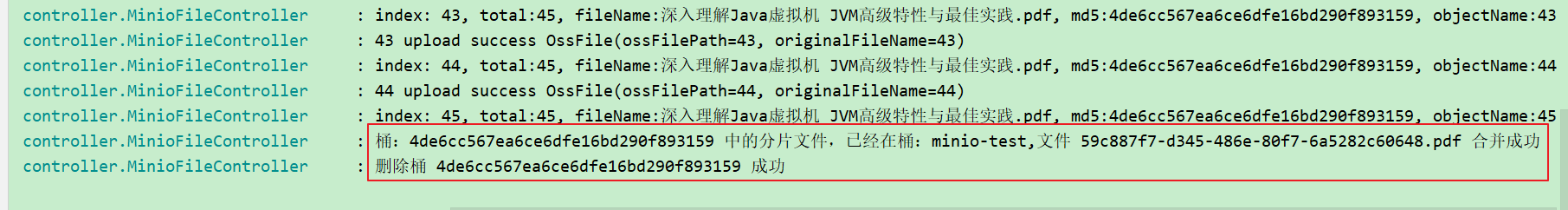

4. Merging the chunks

/**
 * Merge the uploaded chunks into the final object
 *
 * @param shardCount total number of chunks
 * @param fileName file name
 * @param md5 MD5 of the file
 * @param fileType file type
 * @param fileSize file size
 * @return a map with the status of the merge
 */
@GetMapping(value = "/merge")
public Map<String, Object> merge(Integer shardCount, String fileName, String md5, String fileType,
                                 Long fileSize) {
    Map<String, Object> retMap = new HashMap<>();
    try {
        // List the chunks stored in the temporary bucket
        List<String> objectNameList = minioTemplate.listObjectNames(md5);
        if (shardCount != objectNameList.size()) {
            // The number of chunks does not match: fail
            retMap.put("status", StatusCode.FAILURE.getCode());
        } else {
            // Merge the chunks into the target bucket under a random name
            String targetBucketName = minioConfig.getBucketName();
            String filenameExtension = StringUtils.getFilenameExtension(fileName);
            String fileNameWithoutExtension = UUID.randomUUID().toString();
            String objectName = fileNameWithoutExtension + "." + filenameExtension;
            minioTemplate.composeObject(md5, targetBucketName, objectName);
            log.info("Chunks in bucket {} merged into bucket {} as object {}", md5, targetBucketName, objectName);
            // After a successful merge the temporary bucket is no longer needed
            minioTemplate.removeBucket(md5, true);
            log.info("Removed bucket {}", md5);
            // Recompute the MD5 of the merged object
            String fileMd5 = null;
            try (InputStream inputStream = minioTemplate.getObject(targetBucketName, objectName)) {
                fileMd5 = Md5Util.calculateMd5(inputStream);
            } catch (IOException e) {
                log.error("", e);
            }
            // Detect the real file type from the content
            String type = null;
            try (InputStream inputStreamCopy = minioTemplate.getObject(targetBucketName, objectName)) {
                type = FileTypeUtil.getType(inputStreamCopy);
            } catch (IOException e) {
                log.error("", e);
            }
            // Compare both with the values sent by the front end
            if (!ObjectUtils.isEmpty(fileMd5) && !ObjectUtils.isEmpty(type) && fileMd5.equalsIgnoreCase(md5) && type.equalsIgnoreCase(fileType)) {
                // Same content, and the file extension has not been tampered with
                String url = minioTemplate.getPresignedObjectUrl(targetBucketName, objectName);
                // Store the URL in Redis for instant upload (note that a presigned URL eventually expires)
                jsonRedisTemplate.boundHashOps(MD5_KEY).put(fileMd5, url);
                // Success
                retMap.put("status", StatusCode.SUCCESS.getCode());
            } else {
                log.error("Invalid file info: chunk count: {}, file name: {}, file MD5: {}, file type: {}, file size: {}",
                        shardCount, fileName, md5, fileType, fileSize);
                // The merged object must be deleted again
                minioTemplate.deleteObject(targetBucketName, objectName);
                retMap.put("status", StatusCode.FAILURE.getCode());
            }
        }
    } catch (Exception e) {
        log.error("", e);
        // Failure
        retMap.put("status", StatusCode.FAILURE.getCode());
    }
    return retMap;

}
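The merge only produces a valid file if the chunks are combined in numeric order. Because the chunk objects are named "1", "2", ..., "10", a plain string sort is not enough. The sketch below shows the difference in JavaScript; `sortChunkNames` is a hypothetical helper used only for this illustration.

```javascript
/**
 * Hypothetical helper: sort chunk object names ("1", "2", ..., "10") numerically.
 * Returns a new array and leaves the input untouched.
 */
function sortChunkNames(names) {
  return [...names].sort((a, b) => Number.parseInt(a, 10) - Number.parseInt(b, 10));
}

const names = ["10", "2", "1", "11"];
// The default sort compares strings, so "10" and "11" land before "2"
console.log([...names].sort());
// The numeric sort gives the order in which the chunks must be merged
console.log(sortChunkNames(names));
```

With a string sort, chunk 10 would be merged right after chunk 1 and the resulting file would be corrupt as soon as an upload has ten or more chunks.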

5. Testing

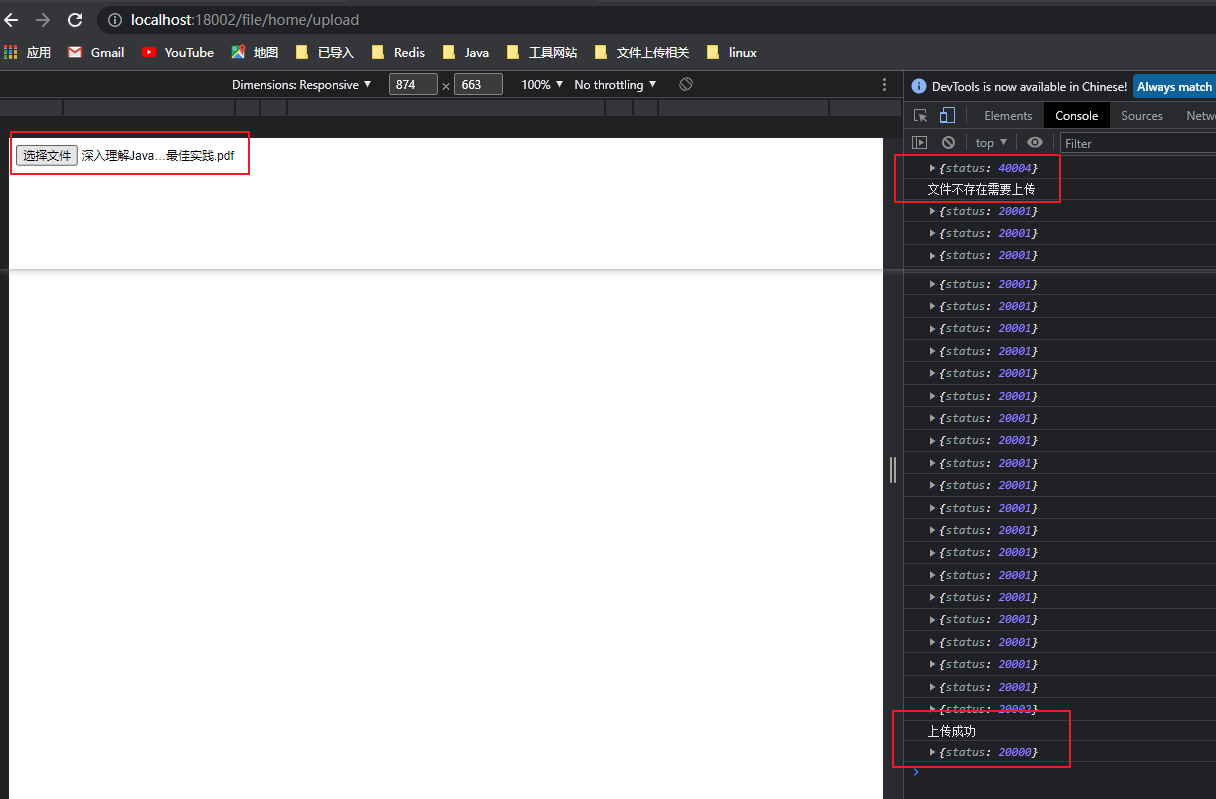

5.1. Testing chunked upload of a large file

5.1.1. Open http://localhost:18002/file/home/upload in a browser

5.1.2. Check the uploaded file in the MinIO console

http://192.168.211.132:9090/buckets/minio-test/browse

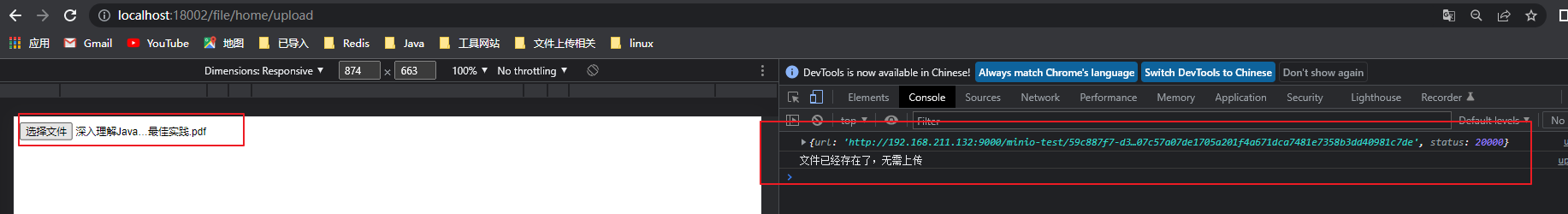

5.2. Testing instant upload

6. Complete code

6.1. pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.6.7</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<groupId>cn.lyf</groupId>

<artifactId>springboot-minio-demo2</artifactId>

<version>1.0.0-SNAPSHOT</version>

<name>springboot-minio-demo2</name>

<description>springboot-minio-demo2</description>

<properties>

<!-- JDK version -->

<java.version>1.8</java.version>

<!-- skip tests -->

<skipTests>true</skipTests>

<redisson.version>3.17.0</redisson.version>

<minio.version>8.3.9</minio.version>

<okhttp.version>4.9.1</okhttp.version>

<hutool-all.version>5.8.0.M4</hutool-all.version>

<commons-io.version>2.11.0</commons-io.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-validation</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-configuration-processor</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.datatype</groupId>

<artifactId>jackson-datatype-jsr310</artifactId>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

<exclusions>

<exclusion>

<groupId>io.lettuce</groupId>

<artifactId>lettuce-core</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>io.minio</groupId>

<artifactId>minio</artifactId>

<version>${minio.version}</version>

</dependency>

<dependency>

<groupId>com.squareup.okhttp3</groupId>

<artifactId>okhttp</artifactId>

<version>${okhttp.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/cn.hutool/hutool-all -->

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-all</artifactId>

<version>${hutool-all.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.redisson/redisson -->

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson</artifactId>

<version>${redisson.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/redis.clients/jedis -->

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-pool2</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-thymeleaf</artifactId>

</dependency>

<!-- https://mvnrepository.com/artifact/commons-io/commons-io -->

<dependency>

<groupId>commons-io</groupId>

<artifactId>commons-io</artifactId>

<version>${commons-io.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.commons/commons-lang3 -->

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<configuration>

<excludes>

<exclude>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

</exclude>

</excludes>

</configuration>

</plugin>

</plugins>

</build>

</project>

6.2. yaml

server:
  port: 18002
spring:
  application:
    name: minio-application
  servlet:
    multipart:
      max-file-size: 100MB
      max-request-size: 100MB
  redis:
    database: 0
    host: 192.168.211.132
    port: 6379
    jedis:
      pool:
        max-active: 200
        max-wait: -1
        max-idle: 10
        min-idle: 0
    timeout: 2000
  thymeleaf:
    # Template mode; supported values are HTML, XML, TEXT and JAVASCRIPT
    mode: HTML5
    # Encoding; optional
    encoding: UTF-8
    # Set to false during development so that template changes do not require a restart
    cache: false
    # Template location; templates is the default, so this is optional
    prefix: classpath:/templates/
    servlet:
      content-type: text/html
minio:
  endpoint: http://192.168.211.132:9000
  accessKey: admin
  secretKey: admin123456
  bucketName: minio-test

6.3. MinIO configuration

6.3.1. MinioConfig.java

package cn.lyf.minio.config;

import cn.lyf.minio.core.MinioTemplate;

import io.minio.MinioClient;

import lombok.Data;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

/**

* @author lyf

* @description: MinIO configuration class

* @version: v1.0

* @since 2022-04-29 14:49

*/

@Configuration

@Data

@ConfigurationProperties(value = "minio")

public class MinioConfig {

/**

* URL of the object storage service

*/

private String endpoint;

/**

* The access key works like a user ID and uniquely identifies your account.

*/

private String accessKey;

/**

* The secret key is the password of your account.

*/

private String secretKey;

/**

* bucketName is the name of the default bucket you configured

*/

private String bucketName;

/**

* Create the MinIO client used to connect to the MinIO storage service

*

* @return MinioClient

*/

@Bean(name = "minioClient")

public MinioClient initMinioClient() {

return MinioClient.builder().endpoint(endpoint).credentials(accessKey, secretKey).build();

}

/**

* Create the MinioTemplate, which wraps some basic MinioClient operations

*

* @return MinioTemplate

*/

@Bean(name = "minioTemplate")

public MinioTemplate minioTemplate() {

return new MinioTemplate(initMinioClient(), this);

}

}

6.4. Redis configuration

6.4.1. RedisConfig.java

package cn.lyf.minio.config;

import com.fasterxml.jackson.annotation.JsonAutoDetect;

import com.fasterxml.jackson.annotation.JsonTypeInfo;

import com.fasterxml.jackson.annotation.PropertyAccessor;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.fasterxml.jackson.databind.SerializationFeature;

import com.fasterxml.jackson.databind.jsontype.impl.LaissezFaireSubTypeValidator;

import com.fasterxml.jackson.datatype.jdk8.Jdk8Module;

import com.fasterxml.jackson.datatype.jsr310.JavaTimeModule;

import com.fasterxml.jackson.module.paramnames.ParameterNamesModule;

import org.redisson.Redisson;

import org.redisson.api.RedissonClient;

import org.redisson.config.Config;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import org.springframework.data.redis.connection.RedisStandaloneConfiguration;

import org.springframework.data.redis.connection.jedis.JedisConnectionFactory;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.data.redis.serializer.GenericJackson2JsonRedisSerializer;

import org.springframework.data.redis.serializer.JdkSerializationRedisSerializer;

import org.springframework.data.redis.serializer.RedisSerializer;

import org.springframework.data.redis.serializer.StringRedisSerializer;

import java.io.Serializable;

/**

* @author lyf

* @description:

* @version: v1.0

* @since 2022-04-09 14:45

*/

@Configuration

public class RedisConfig {

@Value("${spring.redis.host}")

private String redisHost;

@Value("${spring.redis.port}")

private String redisPort;

/**

* Create the connection factory for a standalone Redis server
* from a RedisStandaloneConfiguration that carries the host name and port
*
* @return JedisConnectionFactory

*/

@Bean

public JedisConnectionFactory redisConnectionFactory() {

return new JedisConnectionFactory(new RedisStandaloneConfiguration(redisHost, Integer.parseInt(redisPort)));

}

/**

* RedisTemplate with JSON serialization, so that stored values do not turn into garbled bytes

*

* @param connectionFactory connectionFactory

* @return RedisTemplate

*/

@Bean(name = "jsonRedisTemplate")

public RedisTemplate<String, Serializable> redisTemplate(JedisConnectionFactory connectionFactory) {

return getRedisTemplate(connectionFactory, genericJackson2JsonRedisSerializer());

}

/**

* Fixes:

* org.springframework.data.redis.serializer.SerializationException:

* Could not write JSON: Java 8 date/time type `java.time.LocalDateTime` not supported

*

* @return GenericJackson2JsonRedisSerializer

*/

@Bean

@Primary // this bean takes precedence when several beans of the same type exist

public GenericJackson2JsonRedisSerializer genericJackson2JsonRedisSerializer() {

ObjectMapper objectMapper = new ObjectMapper();

// Avoid conversion errors when reading cached values back

objectMapper.disable(SerializationFeature.WRITE_DATES_AS_TIMESTAMPS);

objectMapper.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);

objectMapper.activateDefaultTyping(LaissezFaireSubTypeValidator.instance,

ObjectMapper.DefaultTyping.NON_FINAL,

JsonTypeInfo.As.WRAPPER_ARRAY);

// Java 8 date/time support ---- start --- (WRITE_DATES_AS_TIMESTAMPS is already disabled above)

objectMapper.registerModule(new Jdk8Module())

.registerModule(new JavaTimeModule())

.registerModule(new ParameterNamesModule());

// --end --

return new GenericJackson2JsonRedisSerializer(objectMapper);

}

/**

* Register Redisson, the Redis-based distributed lock implementation

*

* @return RedissonClient

*/

@Bean

public RedissonClient redisson() {

Config config = new Config();

config.useSingleServer().setAddress("redis://" + redisHost + ":" + redisPort).setDatabase(0);

return Redisson.create(config);

}

/**

* RedisTemplate that uses JDK serialization

*

* @param connectionFactory connectionFactory

* @return RedisTemplate

*/

@Bean(name = "jdkRedisTemplate")

public RedisTemplate<String, Serializable> redisTemplateByJdkSerialization(JedisConnectionFactory connectionFactory) {

return getRedisTemplate(connectionFactory, new JdkSerializationRedisSerializer());

}

private RedisTemplate<String, Serializable> getRedisTemplate(JedisConnectionFactory connectionFactory,

RedisSerializer<?> redisSerializer) {

RedisTemplate<String, Serializable> redisTemplate = new RedisTemplate<>();

redisTemplate.setKeySerializer(new StringRedisSerializer());

redisTemplate.setValueSerializer(redisSerializer);

redisTemplate.setHashKeySerializer(new StringRedisSerializer());

redisTemplate.setHashValueSerializer(redisSerializer);

connectionFactory.afterPropertiesSet();

redisTemplate.setConnectionFactory(connectionFactory);

return redisTemplate;

}

}

6.5. MinioTemplate.java

package cn.lyf.minio.core;

import cn.hutool.core.date.DateUtil;

import cn.hutool.core.util.StrUtil;

import cn.lyf.minio.config.MinioConfig;

import cn.lyf.minio.entity.OssFile;

import io.minio.*;

import io.minio.http.Method;

import io.minio.messages.Bucket;

import io.minio.messages.Item;

import lombok.AllArgsConstructor;

import lombok.SneakyThrows;

import lombok.extern.slf4j.Slf4j;

import org.springframework.http.MediaType;

import org.springframework.util.ObjectUtils;

import org.springframework.util.StringUtils;

import javax.annotation.PostConstruct;

import java.io.InputStream;

import java.time.ZonedDateTime;

import java.util.*;

import java.util.concurrent.TimeUnit;

/**

* @author lyf

* @description:

* @version: v1.0

* @since 2022-05-03 13:04

*/

@Slf4j

@AllArgsConstructor

public class MinioTemplate {

/**

* MinIO client

*/

private MinioClient minioClient;

/**

* MinIO configuration

*/

private MinioConfig minioConfig;

/**

* List all buckets
*
* @return the list of buckets

*/

@SneakyThrows

public List<Bucket> listBuckets() {

return minioClient.listBuckets();

}

/**

* Check whether a bucket exists
*
* @param bucketName bucket name
* @return true if the bucket exists

*/

@SneakyThrows

public boolean bucketExists(String bucketName) {

return minioClient.bucketExists(BucketExistsArgs.builder().bucket(bucketName).build());

}

/**

* Create a bucket if it does not exist yet
*
* @param bucketName bucket name

*/

@SneakyThrows

public void makeBucket(String bucketName) {

if (!bucketExists(bucketName)) {

minioClient.makeBucket(MakeBucketArgs.builder().bucket(bucketName).build());

}

}

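/**
 * Remove an empty bucket. MinIO reports an error if the bucket still contains objects.
 *
 * @param bucketName bucket name
 */
@SneakyThrows
public void removeBucket(String bucketName) {
    // Delegate to the two-argument overload. Calling minioClient.removeBucket again
    // afterwards would fail, because the bucket has already been removed.
    removeBucket(bucketName, false);
}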

/**

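* Remove a bucket, handling empty and non-empty buckets differently:
* an empty bucket is removed directly;
* for a non-empty bucket the objects are deleted first, then the bucket is removed.
*
* @param bucketName bucket name
* @param bucketNotNull true if the bucket may still contain objects that must be deleted first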

*/

@SneakyThrows

public void removeBucket(String bucketName, boolean bucketNotNull) {

if (bucketNotNull) {

deleteBucketAllObject(bucketName);

}

minioClient.removeBucket(RemoveBucketArgs.builder().bucket(bucketName).build());

}

/**

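* Upload a file
*
* @param inputStream the data to upload; closed by this method
* @param bucketName bucket name; the configured default bucket is used when it is empty
* @param originalFileName original file name
* @return OssFile holding the generated object name and the original file name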

*/

@SneakyThrows

public OssFile putObject(InputStream inputStream, String bucketName, String originalFileName) {

String uuidFileName = generateFileInMinioName(originalFileName);

try {

if (ObjectUtils.isEmpty(bucketName)) {

bucketName = minioConfig.getBucketName();

}

minioClient.putObject(

PutObjectArgs.builder()

.bucket(bucketName)

.object(uuidFileName)

.stream(inputStream, inputStream.available(), -1)

.build());

return new OssFile(uuidFileName, originalFileName);

} finally {

if (inputStream != null) {

inputStream.close();

}

}

}

/**

* Delete all objects in a bucket
*
* @param bucketName bucket name

*/

@SneakyThrows

public void deleteBucketAllObject(String bucketName) {

List<String> list = listObjectNames(bucketName);

if (!list.isEmpty()) {

for (String objectName : list) {

deleteObject(bucketName, objectName);

}

}

}

/**

* List the names of all objects in a bucket
*
* @param bucketName bucket name

* @return objectNames

*/

@SneakyThrows

public List<String> listObjectNames(String bucketName) {

List<String> objectNameList = new ArrayList<>();

if (bucketExists(bucketName)) {

Iterable<Result<Item>> results = listObjects(bucketName, true);

for (Result<Item> result : results) {

String objectName = result.get().objectName();

objectNameList.add(objectName);

}

}

return objectNameList;

}

/**

* Delete one object
*
* @param bucketName bucket name
* @param objectName object name

*/

@SneakyThrows

public void deleteObject(String bucketName, String objectName) {

minioClient.removeObject(RemoveObjectArgs.builder()

.bucket(bucketName)

.object(objectName)

.build());

}

/**

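* Upload one chunk
*
* @param inputStream the chunk data; closed by this method
* @param bucketName bucket name
* @param objectName name under which the chunk is stored in the bucket
* @return OssFile holding the object name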

*/

@SneakyThrows

public OssFile putChunkObject(InputStream inputStream, String bucketName, String objectName) {

try {

minioClient.putObject(

PutObjectArgs.builder()

.bucket(bucketName)

.object(objectName)

.stream(inputStream, inputStream.available(), -1)

.build());

return new OssFile(objectName, objectName);

} finally {

if (inputStream != null) {

inputStream.close();

}

}

}

/**

* Return a temporary presigned URL for GET requests
*
* @param bucketName bucket name
* @param filePath path of the object in the bucket
* @return temporary presigned URL for GET requests

*/

@SneakyThrows

public String getPresignedObjectUrl(String bucketName, String filePath) {

return minioClient.getPresignedObjectUrl(

GetPresignedObjectUrlArgs.builder()

.method(Method.GET)

.bucket(bucketName)

.object(filePath)

.build());

}

/**

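* Return a temporary presigned URL for PUT requests that expires after 1 day
*
* @param bucketName bucket name
* @param filePath path of the object in the bucket
* @param queryParams extra query parameters
* @return temporary presigned URL for PUT requests that expires after 1 day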

*/

@SneakyThrows

public String getPresignedObjectUrl(String bucketName, String filePath, Map<String, String> queryParams) {

return minioClient.getPresignedObjectUrl(

GetPresignedObjectUrlArgs.builder()

.method(Method.PUT)

.bucket(bucketName)

.object(filePath)

.expiry(1, TimeUnit.DAYS)

.extraQueryParams(queryParams)

.build());

}

/**

* Fetch an object. The caller needs read permission on the object.
*
* @param bucketName bucket name
* @param objectName object path

*/

@SneakyThrows

public InputStream getObject(String bucketName, String objectName) {

return minioClient.getObject(

GetObjectArgs.builder().bucket(bucketName).object(objectName).build());

}

/**

* List the objects in a bucket
*
* @param bucketName bucket name
* @param recursive whether to list recursively
* @return the objects in the bucket

*/

@SneakyThrows

public Iterable<Result<Item>> listObjects(String bucketName, boolean recursive) {

return minioClient.listObjects(

ListObjectsArgs.builder().bucket(bucketName).recursive(recursive).build());

}

/**

* Get presigned form data for a temporary upload; the front end can use it to upload directly to MinIO
*
* @param bucketName bucket name
* @param fileName file name

* @return Map<String, String>

*/

@SneakyThrows

public Map<String, String> getPresignedPostFormData(String bucketName, String fileName) {

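// Create an upload policy for the bucket that expires after 1 day
PostPolicy policy = new PostPolicy(bucketName, ZonedDateTime.now().plusDays(1));
// Require the "key" field to equal the name of the uploaded object
policy.addEqualsCondition("key", fileName);
// Constrain Content-Type by prefix; "image/", for example, would allow only images. The intent here is to accept every type
policy.addStartsWithCondition("Content-Type", MediaType.ALL_VALUE);
// Optionally limit the upload size to between 64 KiB and 10 MiB
//policy.addContentLengthRangeCondition(64 * 1024, 10 * 1024 * 1024);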

return minioClient.getPresignedPostFormData(policy);

}

public String generateFileInMinioName(String originalFilename) {

return "files" + StrUtil.SLASH + DateUtil.format(new Date(), "yyyy-MM-dd") + StrUtil.SLASH + UUID.randomUUID() + StrUtil.UNDERLINE + originalFilename;

}

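/**
 * Create the default bucket at startup if it does not exist yet
 */
@PostConstruct
public void initDefaultBucket() {
    String defaultBucketName = minioConfig.getBucketName();
    if (bucketExists(defaultBucketName)) {
        // Pass the name as a log argument; the original logged the literal text "defaultBucketName"
        log.info("Default bucket {} already exists", defaultBucketName);
    } else {
        log.info("Creating default bucket {}", defaultBucketName);
        makeBucket(defaultBucketName);
    }
}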

/**
 * Merge chunk objects into one new object with a generated name
 *
 * @param bucketName bucket that receives the merged object
 * @param fileName original file name; only its extension is reused
 * @param sourceObjectList the chunk objects, in order
 * @return OssFile holding a presigned URL of the merged object and the original file name
 */
@SneakyThrows
public OssFile composeObject(String bucketName, String fileName, List<ComposeSource> sourceObjectList) {
    String filenameExtension = StringUtils.getFilenameExtension(fileName);
    String objectName = UUID.randomUUID() + "." + filenameExtension;
    minioClient.composeObject(ComposeObjectArgs.builder()
            .bucket(bucketName)
            .object(objectName)
            .sources(sourceObjectList)
            .build());
    // Sign the URL for the object that was actually created, not for the original file name
    String presignedObjectUrl = getPresignedObjectUrl(bucketName, objectName);
    return new OssFile(presignedObjectUrl, fileName);
}

/**

* Merge chunk objects into one new object
*
* @param bucketName bucket that receives the merged object
* @param objectName name of the merged object
* @param sourceObjectList the chunk objects, in order

* @return OssFile

*/

@SneakyThrows

public OssFile composeObject(List<ComposeSource> sourceObjectList, String bucketName, String objectName) {

minioClient.composeObject(ComposeObjectArgs.builder()

.bucket(bucketName)

.object(objectName)

.sources(sourceObjectList)

.build());

String presignedObjectUrl = getPresignedObjectUrl(bucketName, objectName);

return new OssFile(presignedObjectUrl, objectName);

}

/**

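* Merge all chunk objects of one bucket into a new object
*
* @param originBucketName bucket that holds the chunks
* @param targetBucketName bucket that receives the merged object
* @param objectName name of the merged object in the target bucket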

* @return OssFile

*/

@SneakyThrows

public OssFile composeObject(String originBucketName, String targetBucketName, String objectName) {

Iterable<Result<Item>> results = listObjects(originBucketName, true);

List<String> objectNameList = new ArrayList<>();

for (Result<Item> result : results) {

Item item = result.get();

objectNameList.add(item.objectName());

}

if (ObjectUtils.isEmpty(objectNameList)) {

throw new IllegalArgumentException("Bucket " + originBucketName + " contains no objects, please check");

}

List<ComposeSource> composeSourceList = new ArrayList<>(objectNameList.size());

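// Sort the chunk names in ascending numeric order ("2" before "10").
// Comparator.comparingInt also returns 0 for equal keys, which the original lambda never did.
objectNameList.sort(Comparator.comparingInt(Integer::parseInt));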

for (String object : objectNameList) {

composeSourceList.add(ComposeSource.builder()

.bucket(originBucketName)

.object(object)

.build());

}

return composeObject(composeSourceList, targetBucketName, objectName);

}

}
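One constraint is worth keeping in mind when choosing the chunk size. To my knowledge, MinIO's compose operation follows the S3 multipart rule that every part except the last must be at least 5 MiB, which would explain the 5 MiB `shardSize` on the front end. Treat this as an assumption and verify it against your MinIO version. The hypothetical helper below shows what such a pre-check could look like.

```javascript
// Assumed minimum part size (5 MiB), following the S3 multipart rule
const MIN_PART_SIZE = 5 * 1024 * 1024;

/**
 * Hypothetical pre-check: return the 1-based numbers of all chunks that are
 * too small to be merged. The last chunk is exempt from the minimum.
 */
function undersizedParts(partSizes) {
  const bad = [];
  partSizes.forEach((size, i) => {
    const isLast = i === partSizes.length - 1;
    if (!isLast && size < MIN_PART_SIZE) {
      bad.push(i + 1);
    }
  });
  return bad;
}

// 12 MiB file in 5 MiB chunks: fine, only the last chunk is short
console.log(undersizedParts([5242880, 5242880, 2097152]));
// 5 MiB file in 2 MiB chunks: chunks 1 and 2 are below the assumed minimum
console.log(undersizedParts([2097152, 2097152, 1048576]));
```

If the front end were changed to a smaller chunk size, a check like this would flag the problem before the merge request fails.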

6.6. OssFile.java

package cn.lyf.minio.entity;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

/**

* @author lyf

* @description:

* @version: v1.0

* @since 2022-05-03 13:40

*/

@Data

@NoArgsConstructor

@AllArgsConstructor

public class OssFile {

/**

* Path of the file in object storage

*/

private String ossFilePath;

/**

* Original file name

*/

private String originalFileName;

}

6.7. StatusCode.java

package cn.lyf.minio.entity;

import lombok.Getter;

/**

* @author lyf

* @description: status codes

* @version: v1.0

* @since 2022-05-07 9:56

*/

public enum StatusCode {

SUCCESS(20000, "Operation succeeded"),
PARAM_ERROR(40000, "Invalid parameter"),
NOT_FOUND(40004, "Resource not found"),
FAILURE(50000, "System error"),
CUSTOM_FAILURE(50001, "Custom error"),
ALONE_CHUNK_UPLOAD_SUCCESS(20001, "One chunk uploaded successfully"),
ALL_CHUNK_UPLOAD_SUCCESS(20002, "All chunks uploaded successfully");

@Getter

private final Integer code;

@Getter

private final String message;

StatusCode(Integer code, String message) {

this.code = code;

this.message = message;

}

}

6.8. Md5Util.java

package cn.lyf.minio.utils;

import lombok.extern.slf4j.Slf4j;

import java.io.BufferedInputStream;

import java.io.IOException;

import java.io.InputStream;

import java.nio.charset.StandardCharsets;

import java.security.DigestInputStream;

import java.security.MessageDigest;

import java.security.NoSuchAlgorithmException;

/**

* @author lyf

* @description: computes the MD5 of a file

* @version: v1.0

* @since 2022-04-29 20:53

*/

@Slf4j

public final class Md5Util {

private static final int BUFFER_SIZE = 8 * 1024;

private static final char[] HEX_CHARS =

{'0', '1', '2', '3', '4', '5', '6', '7', '8', '9', 'a', 'b', 'c', 'd', 'e', 'f'};

private Md5Util() {

}

/**

* Compute the MD5 of a byte array
*
* @param bytes bytes
* @return MD5 of the byte array as a hex string

*/

public static String calculateMd5(byte[] bytes) {

try {

MessageDigest md5MessageDigest = MessageDigest.getInstance("MD5");

return encodeHex(md5MessageDigest.digest(bytes));

} catch (NoSuchAlgorithmException e) {

throw new IllegalArgumentException("no md5 found");

}

}

/**

* Compute the MD5 of an input stream
*
* @param inputStream inputStream
* @return MD5 of the stream content as a hex string

*/

public static String calculateMd5(InputStream inputStream) {

try {

MessageDigest md5MessageDigest = MessageDigest.getInstance("MD5");

try (BufferedInputStream bis = new BufferedInputStream(inputStream);

DigestInputStream digestInputStream = new DigestInputStream(bis, md5MessageDigest)) {

final byte[] buffer = new byte[BUFFER_SIZE];

// Reading through the DigestInputStream feeds every byte into md5MessageDigest
while (digestInputStream.read(buffer) != -1) {
    // nothing to do here: the digest is updated as a side effect of read()
}

return encodeHex(md5MessageDigest.digest());

} catch (IOException ioException) {

log.error("", ioException);

throw new IllegalArgumentException(ioException.getMessage());

}

} catch (NoSuchAlgorithmException e) {

throw new IllegalArgumentException("no md5 found");

}

}

/**

* Compute the MD5 of a string
*
* @param input the input string

* @return md5

*/

public static String calculateMd5(String input) {

try {

// Obtain an MD5 digest instance (pass "SHA-1" instead to compute a SHA-1 digest)

MessageDigest md5MessageDigest = MessageDigest.getInstance("MD5");

byte[] inputByteArray = input.getBytes(StandardCharsets.UTF_8);

md5MessageDigest.update(inputByteArray);

// Finish the digest; the result is a 16-byte array

byte[] resultByteArray = md5MessageDigest.digest();

// Encode the byte array as a hex string and return it

return encodeHex(resultByteArray);

} catch (NoSuchAlgorithmException e) {

throw new IllegalArgumentException("MD5 algorithm not available", e);

}

}

/**

* Encodes a 16-byte MD5 digest as a lowercase hex string

*

* @param bytes bytes

* @return the MD5 value in lowercase hex

*/

private static String encodeHex(byte[] bytes) {

char[] chars = new char[32];

for (int i = 0; i < chars.length; i = i + 2) {

byte b = bytes[i / 2];

chars[i] = HEX_CHARS[(b >>> 0x4) & 0xf];

chars[i + 1] = HEX_CHARS[b & 0xf];

}

return new String(chars);

}

}
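
The streaming variant above can be checked against a known digest. The following is a minimal, standalone sketch of the same idea using only the JDK; the class name `Md5StreamDemo` and the method `md5Hex` are hypothetical and not part of the project. It wraps checked exceptions so callers do not have to declare them.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.security.DigestInputStream;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical demo class, not part of the original project.
final class Md5StreamDemo {

    private Md5StreamDemo() {
    }

    // Streams the input through a DigestInputStream and returns lowercase hex.
    static String md5Hex(InputStream in) {
        MessageDigest digest;
        try {
            digest = MessageDigest.getInstance("MD5");
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 algorithm not available", e);
        }
        try (DigestInputStream dis = new DigestInputStream(in, digest)) {
            byte[] buffer = new byte[8 * 1024];
            while (dis.read(buffer) != -1) {
                // Reading is what feeds the digest; nothing else to do here.
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        StringBuilder hex = new StringBuilder(32);
        for (byte b : digest.digest()) {
            // Mask to 0..255 so negative bytes are printed as two hex digits.
            hex.append(String.format("%02x", b & 0xff));
        }
        return hex.toString();
    }

    public static void main(String[] args) {
        byte[] data = "hello".getBytes(StandardCharsets.UTF_8);
        System.out.println(md5Hex(new ByteArrayInputStream(data)));
    }
}
```

Because the digest is updated as bytes are read, memory use stays at the buffer size regardless of file size, which is why the merge step can hash a large object directly from its stream.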

6.9、MinioFileController.java

package cn.lyf.minio.controller;

import cn.hutool.core.io.FileTypeUtil;

import cn.lyf.minio.config.MinioConfig;

import cn.lyf.minio.core.MinioTemplate;

import cn.lyf.minio.entity.OssFile;

import cn.lyf.minio.entity.StatusCode;

import cn.lyf.minio.utils.Md5Util;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.util.ObjectUtils;

import org.springframework.util.StringUtils;

import org.springframework.web.bind.annotation.*;

import org.springframework.web.multipart.MultipartFile;

import org.springframework.web.multipart.MultipartHttpServletRequest;

import org.springframework.web.servlet.ModelAndView;

import javax.annotation.Resource;

import javax.servlet.http.HttpServletRequest;

import java.io.IOException;

import java.io.InputStream;

import java.io.Serializable;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.UUID;

/**

* @author lyf

* @description:

* @version: v1.0

* @since 2022-05-03 10:39

*/

@RestController

@RequestMapping(value = "/file")

@Slf4j

@CrossOrigin // allow cross-origin requests

public class MinioFileController {

private static final String MD5_KEY = "cn:lyf:minio:demo:file:md5List";

@Autowired

private MinioTemplate minioTemplate;

@Autowired

private MinioConfig minioConfig;

@Resource(name = "jsonRedisTemplate")

private RedisTemplate<String, Serializable> jsonRedisTemplate;

@RequestMapping(value = "/home/upload")

public ModelAndView homeUpload() {

ModelAndView modelAndView = new ModelAndView();

modelAndView.setViewName("upload");

return modelAndView;

}

/**

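* Checks whether a file already exists, based on its MD5

* Currently backed by Redis; this should later be persisted to a database

* This endpoint implements instant upload: a file whose MD5 is already known is not uploaded again

*

* @param md5 MD5 of the file

* @return a map with the status code and, if the file exists, its URL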

*/

@GetMapping(value = "/check")

public Map<String, Object> checkFileExists(String md5) {

Map<String, Object> resultMap = new HashMap<>();

if (ObjectUtils.isEmpty(md5)) {

resultMap.put("status", StatusCode.PARAM_ERROR.getCode());

return resultMap;

}

// Look up the MD5 in Redis first

String url = (String) jsonRedisTemplate.boundHashOps(MD5_KEY).get(md5);

// The file does not exist

if (ObjectUtils.isEmpty(url)) {

resultMap.put("status", StatusCode.NOT_FOUND.getCode());

return resultMap;

}

resultMap.put("status", StatusCode.SUCCESS.getCode());

resultMap.put("url", url);

// The file already exists

return resultMap;

}

/**

* File upload for large files, with chunked upload built in

*/

@PostMapping(value = "/upload")

public Map<String, Object> upload(HttpServletRequest req) {

Map<String, Object> map = new HashMap<>();

MultipartHttpServletRequest multipartRequest = (MultipartHttpServletRequest) req;

// Get the chunk data

MultipartFile file = multipartRequest.getFile("data");

// No chunk data in the request: report failure (status code 50000)

if (file == null) {

map.put("status", StatusCode.FAILURE.getCode());

return map;

}

// Index of this chunk

int index = Integer.parseInt(multipartRequest.getParameter("index"));

// Total number of chunks

int total = Integer.parseInt(multipartRequest.getParameter("total"));

// File name

String fileName = multipartRequest.getParameter("name");

String md5 = multipartRequest.getParameter("md5");

// Create the bucket that holds this file's chunks (named after the MD5)

minioTemplate.makeBucket(md5);

String objectName = String.valueOf(index);

log.info("index: {}, total:{}, fileName:{}, md5:{}, objectName:{}", index, total, fileName, md5, objectName);

// For every chunk except the last, a successful upload returns status code 20001

if (index < total) {

try {

// Upload the chunk

OssFile ossFile = minioTemplate.putChunkObject(file.getInputStream(), md5, objectName);

log.info("{} upload success {}", objectName, ossFile);

// Report that this chunk was uploaded

map.put("status", StatusCode.ALONE_CHUNK_UPLOAD_SUCCESS.getCode());

return map;

} catch (Exception e) {

log.error("chunk {} upload failed", objectName, e);

map.put("status", StatusCode.FAILURE.getCode());

return map;

}

} else {

// For the last chunk, a successful upload returns status code 20002

try {

// Upload the chunk

minioTemplate.putChunkObject(file.getInputStream(), md5, objectName);

// Report that all chunks were uploaded

map.put("status", StatusCode.ALL_CHUNK_UPLOAD_SUCCESS.getCode());

return map;

} catch (Exception e) {

log.error("chunk {} upload failed", objectName, e);

map.put("status", StatusCode.FAILURE.getCode());

return map;

}

}

}

/**

* Merges the uploaded chunks into a single file

*

* @param shardCount total number of chunks

* @param fileName file name

* @param md5 MD5 of the file

* @param fileType file type

* @param fileSize file size

* @return status of the merge

*/

@GetMapping(value = "/merge")

public Map<String, Object> merge(Integer shardCount, String fileName, String md5, String fileType,

Long fileSize) {

Map<String, Object> retMap = new HashMap<>();

try {

// List the uploaded chunks

List<String> objectNameList = minioTemplate.listObjectNames(md5);

if (shardCount != objectNameList.size()) {

// Failure: the number of uploaded chunks does not match shardCount

retMap.put("status", StatusCode.FAILURE.getCode());

} else {

// Start the merge

String targetBucketName = minioConfig.getBucketName();

String filenameExtension = StringUtils.getFilenameExtension(fileName);

String fileNameWithoutExtension = UUID.randomUUID().toString();

String objectName = fileNameWithoutExtension + "." + filenameExtension;

minioTemplate.composeObject(md5, targetBucketName, objectName);

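log.info("Chunks in bucket {} were merged into bucket {} as object {}", md5, targetBucketName, objectName);

// After a successful merge, delete the temporary bucket

minioTemplate.removeBucket(md5, true);

log.info("Bucket {} deleted", md5);

// Compute the MD5 of the merged file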

String fileMd5 = null;

try (InputStream inputStream = minioTemplate.getObject(targetBucketName, objectName)) {

fileMd5 = Md5Util.calculateMd5(inputStream);

} catch (IOException e) {

log.error("", e);

}

// Detect the real file type from the content

String type = null;

try (InputStream inputStreamCopy = minioTemplate.getObject(targetBucketName, objectName)) {

type = FileTypeUtil.getType(inputStreamCopy);

} catch (IOException e) {

log.error("", e);

}

// Compare with the MD5 and file type sent by the front end

if (!ObjectUtils.isEmpty(fileMd5) && !ObjectUtils.isEmpty(type) && fileMd5.equalsIgnoreCase(md5) && type.equalsIgnoreCase(fileType)) {

// Same file, and the file extension has not been tampered with

String url = minioTemplate.getPresignedObjectUrl(targetBucketName, objectName);

// Store the URL in Redis, keyed by MD5

jsonRedisTemplate.boundHashOps(MD5_KEY).put(fileMd5, url);

// Success

retMap.put("status", StatusCode.SUCCESS.getCode());

} else {

log.error("Invalid file info: shardCount: {}, fileName: {}, md5: {}, fileType: {}, fileSize: {}",

shardCount, fileName, md5, fileType, fileSize);

// Delete the merged object

minioTemplate.deleteObject(targetBucketName, objectName);

retMap.put("status", StatusCode.FAILURE.getCode());

}

}

} catch (Exception e) {

log.error("", e);

// Failure

retMap.put("status", StatusCode.FAILURE.getCode());

}

return retMap;

}

}
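
The controller stores each chunk under the object name `String.valueOf(index)`, and S3-style listings return keys in lexicographic order, so `"10"` is listed before `"2"`. Whether `minioTemplate.composeObject` already reorders the chunks is not visible in this controller; if it does not, the names need a numeric sort before composing. A minimal sketch of that step, with a hypothetical helper class `ChunkOrder`:

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical helper, not part of the original project.
final class ChunkOrder {

    private ChunkOrder() {
    }

    // Returns a copy of the chunk object names sorted by their numeric value.
    // Assumes every name is a decimal integer, as produced by String.valueOf(index).
    static List<String> sortNumerically(List<String> names) {
        Comparator<String> byIndex = Comparator.comparingInt(Integer::parseInt);
        List<String> sorted = new ArrayList<>(names);
        sorted.sort(byIndex);
        return sorted;
    }

    public static void main(String[] args) {
        List<String> listed = List.of("1", "10", "11", "2", "3");
        System.out.println(sortNumerically(listed));
    }
}
```

An alternative that avoids the sort is to zero-pad the object names at upload time, so that lexicographic and numeric order coincide.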

6.10、Application entry class

package cn.lyf.minio;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication

public class Minio2Application {

public static void main(String[] args) {

SpringApplication.run(Minio2Application.class, args);

}

}

7、Core code of chunked upload: io.minio.MinioClient#composeObject

public ObjectWriteResponse composeObject(ComposeObjectArgs args)

throws ErrorResponseException, InsufficientDataException, InternalException,

InvalidKeyException, InvalidResponseException, IOException, NoSuchAlgorithmException,

ServerException, XmlParserException {

checkArgs(args);

args.validateSse(this.baseUrl);

List<ComposeSource> sources = args.sources();

int partCount = calculatePartCount(sources);

if (partCount == 1

&& args.sources().get(0).offset() == null

&& args.sources().get(0).length() == null) {

return copyObject(new CopyObjectArgs(args));

}

Multimap<String, String> headers = newMultimap(args.extraHeaders());

headers.putAll(args.genHeaders());

CreateMultipartUploadResponse createMultipartUploadResponse =

createMultipartUpload(

args.bucket(), args.region(), args.object(), headers, args.extraQueryParams());

String uploadId = createMultipartUploadResponse.result().uploadId();

Multimap<String, String> ssecHeaders = HashMultimap.create();

if (args.sse() != null && args.sse() instanceof ServerSideEncryptionCustomerKey) {

ssecHeaders.putAll(newMultimap(args.sse().headers()));

}

try {

int partNumber = 0;

Part[] totalParts = new Part[partCount];

for (ComposeSource src : sources) {

long size = src.objectSize();

if (src.length() != null) {

size = src.length();

} else if (src.offset() != null) {

size -= src.offset();

}

long offset = 0;

if (src.offset() != null) {

offset = src.offset();

}

headers = newMultimap(src.headers());

headers.putAll(ssecHeaders);

if (size <= ObjectWriteArgs.MAX_PART_SIZE) {

partNumber++;

if (src.length() != null) {

headers.put(

"x-amz-copy-source-range", "bytes=" + offset + "-" + (offset + src.length() - 1));

} else if (src.offset() != null) {

headers.put("x-amz-copy-source-range", "bytes=" + offset + "-" + (offset + size - 1));

}

UploadPartCopyResponse response =

uploadPartCopy(

args.bucket(), args.region(), args.object(), uploadId, partNumber, headers, null);

String eTag = response.result().etag();

totalParts[partNumber - 1] = new Part(partNumber, eTag);

continue;

}

while (size > 0) {

partNumber++;

long startBytes = offset;

long endBytes = startBytes + ObjectWriteArgs.MAX_PART_SIZE;

if (size < ObjectWriteArgs.MAX_PART_SIZE) {

endBytes = startBytes + size;

}

Multimap<String, String> headersCopy = newMultimap(headers);

headersCopy.put("x-amz-copy-source-range", "bytes=" + startBytes + "-" + endBytes);

UploadPartCopyResponse response =

uploadPartCopy(

args.bucket(),

args.region(),

args.object(),

uploadId,

partNumber,

headersCopy,

null);

String eTag = response.result().etag();

totalParts[partNumber - 1] = new Part(partNumber, eTag);

offset = startBytes;

size -= (endBytes - startBytes);

}

}

return completeMultipartUpload(

args.bucket(),

getRegion(args.bucket(), args.region()),

args.object(),

uploadId,

totalParts,

null,

null);

} catch (RuntimeException e) {

abortMultipartUpload(args.bucket(), args.region(), args.object(), uploadId, null, null);

throw e;

} catch (Exception e) {

abortMultipartUpload(args.bucket(), args.region(), args.object(), uploadId, null, null);

throw e;

}

}
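
When a source object is larger than `ObjectWriteArgs.MAX_PART_SIZE`, the method above copies it in several ranged parts through the `x-amz-copy-source-range` header. The arithmetic behind such a split can be sketched on its own. This is an illustration of the idea using inclusive HTTP byte ranges, not the SDK's code; the class `CopyRanges` and the method `split` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that illustrates range splitting; not MinIO SDK code.
final class CopyRanges {

    private CopyRanges() {
    }

    // Splits an object of objectSize bytes into consecutive, non-overlapping
    // byte ranges of at most maxPartSize bytes each. Range ends are inclusive,
    // as in the HTTP Range header.
    static List<String> split(long objectSize, long maxPartSize) {
        if (objectSize <= 0 || maxPartSize <= 0) {
            throw new IllegalArgumentException("sizes must be positive");
        }
        List<String> ranges = new ArrayList<>();
        long offset = 0;
        while (offset < objectSize) {
            long length = Math.min(maxPartSize, objectSize - offset);
            ranges.add("bytes=" + offset + "-" + (offset + length - 1));
            offset += length;
        }
        return ranges;
    }

    public static void main(String[] args) {
        // A 10-byte object with a 4-byte part limit needs three parts.
        System.out.println(split(10, 4));
    }
}
```

In the upload flow of this article each chunk is far smaller than the part limit, so every chunk maps to exactly one copied part and this splitting path is not exercised.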
