Service developers publish services based on EDAS

2022-07-04 09:59:00 【Stestack】

This document is for service developers who build projects on Aliware. It covers project environment setup, project development, and project launch, together with the instructions, operation steps, and code examples for the related Aliware service features.

Service developers

The back-end development language is Java.

RPC (HSF) is required.

The application management provided by EDAS is required (publishing and unpublishing applications, dynamic scale-out and scale-in).

The application service monitoring service is required (unified log management, runtime environment monitoring, JVM status monitoring, request trace analysis).

Version | Updated date | Update content |

V1.0.0 | 20170719 | First release |

V1.0.4 | 20170725 | Modified the Maven private repository settings.xml configuration; removed line numbers from the code |

Applying for development resources

Applying for an Alibaba Cloud sub-account

Applying for ECS

Applying for the Agent

Brief introduction to EDAS Agent

EDAS Agent (hereinafter referred to as the Agent) is a daemon installed on an ECS instance. It handles communication between the EDAS service cluster and the applications deployed on that ECS instance. At runtime it mainly takes on the following roles:

Application management: deploying, starting, and stopping applications.

Status reporting: reporting application liveness, health check status, Ali-Tomcat container status, and so on.

Information collection: for example, collecting monitoring information about the ECS instance and the container.

In addition to these application management functions, the Agent is also responsible for communication between the EDAS console and user applications. Put simply, even finding out whether a service published by an application has been released correctly and promptly on a given ECS instance requires the Agent's participation and coordination.

Note: The Agent functions described above are transparent to users. As a user, you only need to install the Agent.

Parent directory pom.xml configuration

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>1.4.0.RELEASE</version>

</parent>

<properties>

<spring-cloud-starter-pandora.version>1.0</spring-cloud-starter-pandora.version>

<spring-cloud-starter-hsf.version>1.0</spring-cloud-starter-hsf.version>

<spring-cloud-starter-sentinel.version>1.0</spring-cloud-starter-sentinel.version>

</properties>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>Camden.SR3</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<!-- API that users need to depend on -->

<dependency>

<groupId>cn.com.flaginfo.edas</groupId>

<artifactId>pandora-hsf-boot-demo-api</artifactId>

<version>0.0.1-SNAPSHOT</version>

</dependency>

<!-- Alibaba Aliware -->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-pandora</artifactId>

<version>${spring-cloud-starter-pandora.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-hsf</artifactId>

<version>${spring-cloud-starter-hsf.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-sentinel</artifactId>

<version>${spring-cloud-starter-sentinel.version}</version>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>javax.servlet-api</artifactId>

<version>3.1.0</version>

</dependency>

</dependencies>

</dependencyManagement>

Add the following dependencies to the pom.xml of the project's consumer and provider modules

<dependencies>

<!-- API that users need to depend on -->

<dependency>

<groupId>cn.com.flaginfo.edas</groupId>

<artifactId>pandora-hsf-boot-demo-api</artifactId>

</dependency>

<!-- spring dependency -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<!-- Alibaba Aliware dependencies -->

<!-- pandora-boot startup dependency -->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-pandora</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-hsf</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-sentinel</artifactId>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>javax.servlet-api</artifactId>

</dependency>

<dependency>

<groupId>com.alibaba.edas.configcenter</groupId>

<artifactId>configcenter-client</artifactId>

<version>1.0.2</version>

</dependency>

</dependencies>

<build>

<plugins>

<!-- This plug-in builds the fat JAR and deliberately excludes the taobao-hsf.sar JAR. In the production environment that JAR is specified through a -D parameter at deployment time, so there is no need to package it, which avoids wasting resources -->

<plugin>

<groupId>com.taobao.pandora</groupId>

<artifactId>pandora-boot-maven-plugin</artifactId>

<version>2.1.6.3</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>repackage</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

application.properties configuration

# Service name

spring.application.name=pandora-hsf-boot-demo-consumer

# Port number

server.port=8082

Startup code

Add the following code to the Spring Boot startup class of the project's consumer and provider modules

@SpringBootApplication

// Import the HSF configuration file and the rate-limiting and degradation configuration

@ImportResource(locations = { "classpath:hsf-beans.xml","classpath*:sentinel-tracer.xml"})

public class HSFBootDemoConsumerApplication {

public static void main(String[] args) {

// Start Pandora Boot, which loads the Pandora container

PandoraBootstrap.run(args);

SpringApplication.run(HSFBootDemoConsumerApplication.class, args);

// Mark the service as started and make the thread wait. Usually called on the last line of the main function.

PandoraBootstrap.markStartupAndWait();

}

}

Service publishing

Defining the interface

HSF services are implemented on top of interfaces. Once the interface is defined, the producer implements it to provide the concrete service, and the consumer subscribes to the service through the same interface.

Define a service interface ItemService in the api project. This interface provides two methods, getItemById and getItemByName.

public interface ItemService {

Item getItemById(long id);

Item getItemByName(String name);

}

The producer implements the interface

Create the implementation class ItemServiceImpl in the provider project.

public class ItemServiceImpl implements ItemService {

@Override

public Item getItemById( long id ) {

Item car = new Item();

car.setItemId( 1L );

car.setItemName( "Test Item GetItemById" );

return car;

}

@Override

public Item getItemByName( String name ) {

Item car = new Item();

car.setItemId( 1L );

car.setItemName( "Test Item GetItemByName" );

return car;

}

}
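
The Item class returned by these methods is not shown in this document. A minimal sketch, assuming it carries only the itemId and itemName fields implied by the setters above, and assuming it implements Serializable so that it can travel across the RPC boundary, might look like this. Everything beyond the two setter names is an assumption made for illustration.

```java
import java.io.Serializable;

// Hypothetical sketch of the Item entity. The field names are inferred from the
// setItemId/setItemName calls in ItemServiceImpl; the rest is an assumption.
class Item implements Serializable {

    private static final long serialVersionUID = 1L;

    // Wrapper types rather than primitives, in line with the POJO rules in this document
    private Long itemId;
    private String itemName;

    public Long getItemId() { return itemId; }

    public void setItemId(Long itemId) { this.itemId = itemId; }

    public String getItemName() { return itemName; }

    public void setItemName(String itemName) { this.itemName = itemName; }

    @Override
    public String toString() {
        return "Item{itemId=" + itemId + ", itemName='" + itemName + "'}";
    }
}

class ItemDemo {
    public static void main(String[] args) {
        Item item = new Item();
        item.setItemId(1L);
        item.setItemName("Test Item GetItemById");
        System.out.println(item);
    }
}
```

Overriding toString() keeps the consumer-side log output readable when the returned object is printed.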

Producer configuration

Add the Spring configuration file config/hsf-provider-beans.xml under the resources directory:

<?xml version="1.0" encoding="UTF-8"?>

Producer configuration attribute list

In addition to the attributes mentioned above, the HSF producer supports the following configuration options:

Attribute | Description |

interface | interface: required [String]. The interface that the service exposes. |

version | version: optional [String]. The version of the service; the default is 1.0.0. |

group | serviceGroup: optional [String]. The group the service belongs to, so that service configuration can be managed by group; the default is HSF. |

clientTimeout | Applies to all methods in the interface. However, if the client configures a timeout for a method through the MethodSpecial attribute, that method uses the client-side timeout. Other methods are not affected and still use the server-side configuration. |

serializeType | serializeType: optional [String(hessian|java)]. The serialization type; the default is hessian. |

corePoolSize | Sets a dedicated core thread pool size for this service, carved out of the shared thread pool. |

maxPoolSize | Sets a dedicated maximum thread pool size for this service, carved out of the shared thread pool. |

enableTXC | Enables the distributed transaction service TXC. |

ref | ref: required [ref]. The ID of the Spring bean to be published as an HSF service. |

methodSpecials | methodSpecials: optional. Configures the timeout (in ms) for individual methods, so that methods in the interface can use different timeouts. This setting takes priority over the clientTimeout setting above, but has lower priority than the client-side methodSpecials configuration. |

Service subscription

Consumer configuration

Add the Spring configuration file config/hsf-consumer-beans.xml under the resources directory:

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:hsf="http://www.taobao.com/hsf"

xmlns="http://www.springframework.org/schema/beans"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-2.5.xsd

http://www.taobao.com/hsf

http://www.taobao.com/hsf/hsf.xsd" default-autowire="byName">

<!-- Example of consuming a service -->

<hsf:consumer id="item" interface="cn.com.flaginfo.edas.demo.itemcenter.ItemService"

version="1.0.0" group="test">

</hsf:consumer>

</beans>

Create a DemoController class in the consumer project to test the call.

@Controller

@RequestMapping("demo")

public class DemoController {

@Autowired

private ItemService itemService;

@ResponseBody

@RequestMapping(method = RequestMethod.GET)

public String testItem() {

Item item = itemService.getItemById(10);

System.out.println("getItemById=" + item);

return item.toString();

}

}

Consumer configuration attribute list

In addition to the attributes shown in the sample code (interface, group, version), the attributes in the following table can also be configured:

Attribute | Description |

interface | interface: required [String]. The interface of the service to be called. |

version | version: optional [String]. The version of the service to be called; the default is 1.0.0. |

group | group: optional [String]. The group of the service to be called, so that service configuration can be managed by group; the default is HSF. Configuring it is recommended. |

methodSpecials | methodSpecials: optional. Configures the timeout (in ms) for individual methods, so that methods in the interface can use different timeouts. This setting takes priority over the server-side timeout configuration. |

target | Mainly used in unit test environments and in development environments where hsf.runmode=0. In the runtime environment this attribute has no effect; the target service address pushed by the configuration center is used instead. |

connectionNum | connectionNum: optional. The number of connections to the server; the default is 1. For small payloads that need low latency, a larger value can improve TPS. |

clientTimeout | Sets the timeout (in ms) for all methods in the interface on the client side. Timeout settings take priority from high to low as follows: client MethodSpecial, client interface level, server MethodSpecial, server interface level. |

asyncallMethods | asyncallMethods: optional [List]. The names of the methods to call asynchronously on this service, together with the asynchronous call mode. The default is an empty collection, meaning all methods are called synchronously. |

maxWaitTimeForCsAddress | When subscribing to the service, the thread blocks for up to the time specified by this parameter while waiting for the address push, which avoids calls failing because the address list is still empty. If the address has not been pushed when the time expires, the thread stops waiting and continues with the rest of initialization. |
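
The timeout priority described for clientTimeout can be easier to follow as code. The sketch below only models the documented order (client method level, client interface level, server method level, server interface level). It is not HSF's internal implementation, and the class and method names are hypothetical.

```java
import java.util.OptionalInt;

// Illustrative only: models the documented priority order, not HSF internals.
class TimeoutResolver {

    /**
     * Returns the first configured timeout (in ms) in priority order:
     * client method level, client interface level, server method level, server interface level.
     * A null argument means "not configured at that level".
     */
    static OptionalInt effectiveTimeout(Integer clientMethod, Integer clientInterface,
                                        Integer serverMethod, Integer serverInterface) {
        Integer[] byPriority = { clientMethod, clientInterface, serverMethod, serverInterface };
        for (Integer candidate : byPriority) {
            if (candidate != null) {
                return OptionalInt.of(candidate);
            }
        }
        // Nothing configured at any level; whatever default the framework uses would apply
        return OptionalInt.empty();
    }

    public static void main(String[] args) {
        // The server sets 3000 ms for the interface, the client overrides one method to 500 ms
        System.out.println(effectiveTimeout(500, null, null, 3000));
        // Only server-side settings exist: the server method-level value wins
        System.out.println(effectiveTimeout(null, null, 1000, 3000));
    }
}
```

Returning an empty OptionalInt instead of a hard-coded number avoids guessing the framework default, which this document does not state.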

Configuration center

Add dependency

<dependency>

<groupId>com.alibaba.edas.configcenter</groupId>

<artifactId>configcenter-client</artifactId>

<version>1.0.2</version>

</dependency>

Sample code

public class ConfigCenter {

// Configuration value / switch

private static String config = "";

// Register a listener on the configuration during initialization

private static void initConfig() {

ConfigService.addListener("hsfDemoConsumer", "hsfConfigTest",

new ConfigChangeListener() {

public void receiveConfigInfo(String configInfo) {

try {

// When the configuration is updated, take the new value immediately

config = configInfo;

System.out.println(configInfo);

} catch (Exception e) {

e.printStackTrace();

}

}

});

}

// Expose the configuration value through a getter

public static String getConfig() {

return config;

}

}
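
The sample above writes the pushed value into a plain static field from the listener callback, while other threads read it. The following is a self-contained sketch of the same listener pattern that runs without the EDAS client. ChangeListener and FakeConfigService are hypothetical stand-ins for ConfigChangeListener and ConfigService, and the use of AtomicReference is a suggested way to make updates visible across threads, not something the original sample does.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical stand-in for the client's listener callback interface.
interface ChangeListener {
    void receiveConfigInfo(String configInfo);
}

// Hypothetical stand-in for the config service: it only keeps listeners and pushes values to them.
class FakeConfigService {
    private final List<ChangeListener> listeners = new ArrayList<>();

    void addListener(ChangeListener listener) {
        listeners.add(listener);
    }

    void publish(String value) {
        for (ChangeListener listener : listeners) {
            listener.receiveConfigInfo(value);
        }
    }
}

class ConfigHolder {
    // AtomicReference makes the latest pushed value visible to all reader threads
    private final AtomicReference<String> config = new AtomicReference<>("");

    ConfigHolder(FakeConfigService service) {
        // Register the listener at construction time so it cannot be forgotten
        service.addListener(config::set);
    }

    String getConfig() {
        return config.get();
    }
}

class ConfigHolderDemo {
    public static void main(String[] args) {
        FakeConfigService service = new FakeConfigService();
        ConfigHolder holder = new ConfigHolder(service);
        service.publish("switch=on");
        System.out.println(holder.getConfig());
    }
}
```

Registering the listener in the constructor also sidesteps a pitfall of the sample, where the private initConfig() method has to be called from somewhere before any update can arrive.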

Unit testing

Add dependency

<dependency>

<groupId>com.alibaba.hsf</groupId>

<artifactId>LightApi</artifactId>

<version>1.0.0</version>

</dependency>

<dependency>

<groupId>org.mockito</groupId>

<artifactId>mockito-all</artifactId>

</dependency>

<dependency>

<groupId>com.taobao.pandora</groupId>

<artifactId>pandora-boot-test</artifactId>

<version>2.1.6.3</version>

</dependency>

Sample code

@RunWith(PandoraBootRunner.class)

@DelegateTo(SpringJUnit4ClassRunner.class)

// Load the classes needed for the test: the Spring Boot startup class must be included, and this test class must be added as well

@SpringBootTest(classes = { HSFBootDemoProviderApplication.class, VersionInfoTest.class })

@Component

public class VersionInfoTest {

// When @HSFConsumer is used here, this class must be listed in the classes loaded by @SpringBootTest so that the object is injected through it; otherwise, with generic invocation, a class cast exception is thrown

@HSFConsumer(generic = true, serviceGroup = "test" ,serviceVersion = "1.0.0")

private VersionInfoService versionInfoService;

@Autowired

ApplicationService applicationService;

@Test

public void testInvoke() {

System.out.println(applicationService.getApplication());

System.out.println("#####:"+ versionInfoService.getVersionInfo());

TestCase.assertEquals("V1.0.0", versionInfoService.getVersionInfo());

}

@Test

public void testGenericInvoke() {

GenericService service = (GenericService) versionInfoService;

Object result = service.$invoke("getVersionInfo", new String[] {}, new Object[] {});

System.out.println("####:"+result);

TestCase.assertEquals("V1.0.0", result);

}

@Test

public void testMock() {

VersionInfoService mock = Mockito.mock(VersionInfoService.class, AdditionalAnswers.delegatesTo(versionInfoService));

Mockito.when(mock.getVersionInfo()).thenReturn("V1.9.beta");

TestCase.assertEquals("V1.9.beta", mock.getVersionInfo());

}

}

Actuator monitoring

Add dependency

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

Add the following configuration to application.properties

# Show detailed information on the health endpoint

endpoints.health.sensitive=false

# The port number must be 7002; otherwise an error is reported

management.port=7002

Usage

Send an HTTP GET request directly to the endpoint to get the returned information.

For example: http://127.0.0.1:7002/health

Note: Pandora Boot redefines the Actuator startup port and fixes it at 7002, so running several projects at once in the development environment causes a port-in-use error.
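
For a quick check from code rather than a browser, the GET request can be sketched with the JDK's HttpURLConnection. This assumes the application is running locally with management.port=7002 as configured above; the class and method names are hypothetical.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

class HealthCheckClient {

    /** Builds the health endpoint URL for the given host and management port. */
    static String healthUrl(String host, int port) {
        return "http://" + host + ":" + port + "/health";
    }

    /** Sends a GET request to the given URL and returns the response body as text. */
    static String fetch(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setRequestMethod("GET");
        conn.setConnectTimeout(2000);
        conn.setReadTimeout(2000);
        // getInputStream() throws IOException for 4xx/5xx responses,
        // so a non-2xx health status surfaces as an exception here
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            StringBuilder body = new StringBuilder();
            String line;
            while ((line = reader.readLine()) != null) {
                body.append(line);
            }
            return body.toString();
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumes a local application with management.port=7002
        System.out.println(fetch(healthUrl("127.0.0.1", 7002)));
    }
}
```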

Deployment and operation

Local deployment

In the IDE, start the application directly through the main method.

Package a FatJar locally and start it with the java command.

Startup with the taobao-hsf.sar dependency excluded (specify the SAR location with -D)

#java -jar -Dpandora.location=/Users/Jason/.m2/repository/com/taobao/pandora/taobao-hsf.sar/3.2/taobao-hsf.sar-3.2.jar pandora-hsf-boot-demo-provider-0.0.1-SNAPSHOT.jar

Note: -Dpandora.location specifies the SAR location, which can be either an unzipped directory or a JAR package.

For example: java -jar -Dpandora.location=/path/to/your/taobao-hsf.sar your_app.jar

Note: the path given to -Dpandora.location must be a full path.
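
Because a relative path is an easy mistake here, a start-up script or a small pre-flight class can check the value first. The sketch below is a hypothetical helper, not part of Pandora Boot; it only reads the same system property that -D sets and tests whether it is an absolute path.

```java
import java.nio.file.Paths;

class PandoraLocationCheck {

    /** Returns true when the given location is written as a full (absolute) path. */
    static boolean isFullPath(String location) {
        return location != null && !location.isEmpty() && Paths.get(location).isAbsolute();
    }

    public static void main(String[] args) {
        // Reads the same system property that is passed on the command line with -D
        String location = System.getProperty("pandora.location");
        if (isFullPath(location)) {
            System.out.println("pandora.location is a full path: " + location);
        } else {
            System.err.println("pandora.location should be a full path, got: " + location);
        }
    }
}
```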

Startup without excluding the taobao-hsf.sar dependency (set through the plug-in): in the pandora-boot-maven-plugin plug-in, set excludeSar to false (the default is true). The SAR package is then included automatically when packaging.

<plugin>

<groupId>com.taobao.pandora</groupId>

<artifactId>pandora-boot-maven-plugin</artifactId>

<version>2.1.6.3</version>

<configuration>

<excludeSar>false</excludeSar>

</configuration>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>repackage</goal>

</goals>

</execution>

</executions>

</plugin>

Start directly

java -jar pandora-hsf-boot-demo-provider-0.0.1-SNAPSHOT.jar

In application management, the application must be created with a container that supports FatJar. Select Deploy application and upload the FatJar to deploy it. After that, the application accepts only FatJar uploads by default, and WAR is no longer supported.

Development specifications

Project structure specification

This structure suits projects that provide only RPC producer interface services, for example a comment service or a check-in service.

Project example:

Project name: General comments

Project abbreviation: common-comment

Directory structure example:

Description of the core directory structure:

Directory name | Directory description |

common-comment | Parent directory |

common-comment-api | RPC interface definition directory |

common-comment-common | Common class directory |

common-comment-service | RPC interface implementation directory (the producer) |

Package structure example:

Description of the core package structure:

Module name | Package name | Package description |

common-comment-api | api.domain | Package for service-related entity classes (BO classes) |

common-comment-api | api.service | Package for RPC producer interfaces |

common-comment-service | dao | Package for database ORM mapping interfaces |

common-comment-service | dao.impl | Package for database ORM implementation classes |

common-comment-service | domain | Package for entity classes that map to database tables (DO classes) |

common-comment-service | service.impl | Package for RPC producer interface implementation classes |

common-comment-common | code | Package for return-value code enum classes |

common-comment-common | domain | Package for the generic return-value result wrapper classes |

Note: for ease of typesetting, the Package name column omits the package prefix. In actual projects the prefix cn.com.flaginfo.<project name> must be included (the project name in lowercase with no connecting symbols).
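
As a worked example of the prefix rule, the sketch below derives the full package name from a project name and a package name taken from the table. It assumes that "connecting symbols" means hyphens; the helper class is hypothetical and exists only to make the rule concrete.

```java
import java.util.Locale;

class PackageNaming {

    private static final String PREFIX = "cn.com.flaginfo.";

    /** Builds the base package: fixed prefix plus the project name in lowercase with hyphens removed. */
    static String basePackage(String projectName) {
        String normalized = projectName.toLowerCase(Locale.ROOT).replace("-", "");
        return PREFIX + normalized;
    }

    /** Appends a package name from the table (for example "api.service") to the base package. */
    static String fullPackage(String projectName, String tablePackage) {
        return basePackage(projectName) + "." + tablePackage;
    }

    public static void main(String[] args) {
        // prints cn.com.flaginfo.commoncomment.api.service
        System.out.println(fullPackage("common-comment", "api.service"));
    }
}
```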

This structure suits projects with many business functions and web features, for example a Party building project.

Project example:

Project name: Jinhua Fire

Project abbreviation: jhxf

Directory structure example:

Directory structure description:

【Mandatory】XXX-admin-web: front-end code and view control layer code (controller) for the PC side.

【Mandatory】XXX-app-web: front-end code and view control layer code (controller) for the mobile side.

【Recommended】XXX-service: the business processing module, which can be split into multiple service modules according to business and function calls.

For example: for a function with relatively high resource consumption (CPU, memory, threads, disk I/O, and so on) or with bursty high load, it is recommended to split it out into its own service that provides RPC. When server resources are tight, it then does not affect the normal use of other functions, and the servers can be scaled out and in dynamically more easily.

【Mandatory】Service project naming: spell out the project's actual function in full English words, all lowercase, separated by the hyphen “-”.

For example: common-comment

【Mandatory】Business project naming: use the project location plus the project name in lowercase Chinese pinyin. If the pinyin is too long, abbreviations may be used, separated by the hyphen “-”.

For example: jhxf

【Mandatory】Project modules are named <project abbreviation>-<module name>.

For example: jhxf-service, common-comment-api

RPC interface and implementation class naming

【Mandatory】RPC interfaces for services and businesses are defined in the api.service layer and named XXXXService.

【Mandatory】Interface implementation classes are placed in the service.impl layer of their own business service module and named XXXXServiceImpl.

GroupId naming

DataId naming

POJO class definition specification

【Mandatory】All attributes of POJO classes (DO, BO, VO) must use wrapper data types.

【Mandatory】The return values and parameters of RPC methods must use wrapper data types.

【Mandatory】Boolean variables in POJO classes must not be prefixed with is; otherwise some frameworks fail to parse them and cause serialization errors.

For example: a property defined as the primitive type boolean isDeleted; has the accessor method isDeleted(). When the RPC framework resolves the property name back from that method, it “assumes” the property is named deleted, cannot find it, and throws an exception.
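
The mismatch can be reproduced with the JDK's own JavaBeans introspection, which derives property names from accessor methods in the same way many serialization frameworks do. The sketch below is illustrative: BadPojo and GoodPojo are hypothetical classes, and java.beans.Introspector stands in for whatever parsing a particular RPC framework performs.

```java
import java.beans.BeanInfo;
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.util.ArrayList;
import java.util.List;

class BooleanPropertyDemo {

    // Anti-pattern from the rule above: a primitive boolean field prefixed with "is"
    public static class BadPojo {
        private boolean isDeleted;

        public boolean isDeleted() { return isDeleted; }

        public void setDeleted(boolean deleted) { this.isDeleted = deleted; }
    }

    // Preferred form: wrapper type, no "is" prefix on the field
    public static class GoodPojo {
        private Boolean deleted;

        public Boolean getDeleted() { return deleted; }

        public void setDeleted(Boolean deleted) { this.deleted = deleted; }
    }

    /** Lists the property names that JavaBeans introspection derives from the accessors. */
    static List<String> propertyNames(Class<?> type) {
        try {
            BeanInfo info = Introspector.getBeanInfo(type, Object.class);
            List<String> names = new ArrayList<>();
            for (PropertyDescriptor descriptor : info.getPropertyDescriptors()) {
                names.add(descriptor.getName());
            }
            return names;
        } catch (IntrospectionException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Reports whether the class declares a field with exactly this name. */
    static boolean hasField(Class<?> type, String name) {
        try {
            type.getDeclaredField(name);
            return true;
        } catch (NoSuchFieldException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        for (Class<?> type : new Class<?>[] { BadPojo.class, GoodPojo.class }) {
            List<String> names = propertyNames(type);
            System.out.println(type.getSimpleName() + " properties=" + names
                    + ", field named 'deleted' exists=" + hasField(type, "deleted"));
        }
    }
}
```

For BadPojo the derived property name is deleted while the field is called isDeleted, so any framework that maps the property name back to a field comes up empty; GoodPojo has no such gap.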

HSF usage specification

【Recommended】XML is currently the recommended way to configure HSF. XML configuration is convenient for unified configuration management, makes the configured interfaces easier to understand at a glance, and offers more flexible and richer configuration attributes than annotations.

Producer (RPC service provider)

【Mandatory】<hsf:provider> must configure the group and version parameters.

For example:

<hsf:provider id="versionInfoService"

interface="cn.com.flaginfo.edas.demo.service.VersionInfoService" group="test" version="1.0.0" ref="versionInfoServiceImpl" />

Consumer (RPC service caller)

【Mandatory】<hsf:consumer> must configure the group and version parameters.

For example:

<hsf:consumer id="applicationService" version="1.0.0" group="test"

interface="cn.com.flaginfo.edas.demo.service.ApplicationService"/>

【Recommended】When an RPC consumer uses asynchronous invocation, use it only after thorough testing against the business scenario. The asynchrony only covers the call to the RPC interface; fetching the result is synchronous and blocks the current thread until the interface returns the result.
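
The same behaviour can be observed with the JDK's CompletableFuture, used here as an analogy rather than as the HSF asynchronous API. In this sketch the remote call is simulated with a sleep and all names are hypothetical. Submitting the call returns almost immediately, while fetching the result blocks for roughly the duration of the call.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

class AsyncCallDemo {

    // Hypothetical slow remote call, simulated with a 300 ms sleep
    static String slowRemoteCall() {
        try {
            TimeUnit.MILLISECONDS.sleep(300);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "V1.0.0";
    }

    /** Returns { milliseconds spent submitting the call, milliseconds spent waiting for the result }. */
    static long[] measure() {
        long start = System.nanoTime();
        CompletableFuture<String> future = CompletableFuture.supplyAsync(AsyncCallDemo::slowRemoteCall);
        long submitted = System.nanoTime();
        future.join(); // blocks the current thread until the result is available
        long done = System.nanoTime();
        return new long[] { (submitted - start) / 1_000_000, (done - submitted) / 1_000_000 };
    }

    public static void main(String[] args) {
        long[] timings = measure();
        System.out.println("submit took " + timings[0] + " ms, waiting for the result took " + timings[1] + " ms");
    }
}
```

Asynchronous invocation therefore pays off only when the caller has other useful work to do between submitting the call and fetching its result.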

【Recommended】When an RPC consumer uses generic invocation, make sure the configured id matches the field name in the code so that the field is injected correctly.

<hsf:consumer id="genericTestService" version="1.0.0" group="test" interface="cn.com.flaginfo.edas.demo.service.GenericTestService" generic="true"/>

Java code:

@Autowired

private GenericService genericTestService;

【Mandatory】When an RPC consumer uses generic invocation and the interface method has no parameters, pass empty array objects to the $invoke method; do not pass null.

String version = (String)genericTestService.$invoke("getGenericTest", new String[]{}, new Object[]{});

Exception handling specification

【Mandatory】Open API interfaces exposed over RESTful/HTTP must catch exceptions and use “error codes”.

【Mandatory】Service classes and RPC producer interface implementation classes that are called across projects must catch exceptions and return a unified Result that wraps the isSuccess() method, an “error code”, and a “short error message”.

Note: the reasons for returning a Result from RPC methods are:

1) If exceptions are thrown as the return mechanism and the caller does not catch them, a runtime error occurs.

2) If no stack information is attached and a custom exception is simply created with new and given an error message of your own wording, it does little to help the caller solve the problem. If stack information is attached, then because the RPC framework executes the method by reflection, uncaught exceptions are wrapped in other exceptions, so the exception defined by the business ends up nested inside two other exceptions. When calls fail frequently, a large amount of exception stack information is printed, and the performance cost of serializing and transmitting that data is also a problem.
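
A minimal sketch of this rule follows: the producer method catches every exception and converts it into an error code, and the caller branches on isSuccess() instead of catching exceptions. The Result class here is a compact hypothetical stand-in for the unified wrapper, and the error codes 1001 and 9999 are invented for the example.

```java
import java.io.Serializable;

class ResultDemo {

    // Minimal, hypothetical result wrapper with the three parts the rule names
    static final class Result<T> implements Serializable {
        private static final long serialVersionUID = 1L;

        private final boolean success;
        private final String resCode;
        private final String resMsg;
        private final T data;

        private Result(boolean success, String resCode, String resMsg, T data) {
            this.success = success;
            this.resCode = resCode;
            this.resMsg = resMsg;
            this.data = data;
        }

        static <T> Result<T> ok(T data) {
            return new Result<>(true, "0", "ok", data);
        }

        static <T> Result<T> fail(String resCode, String resMsg) {
            return new Result<>(false, resCode, resMsg, null);
        }

        boolean isSuccess() { return success; }

        String getResCode() { return resCode; }

        String getResMsg() { return resMsg; }

        T getData() { return data; }
    }

    // Hypothetical producer method: no exception escapes, every failure becomes an error code
    static Result<Integer> parseQuantity(String raw) {
        try {
            return Result.ok(Integer.valueOf(raw.trim()));
        } catch (NumberFormatException e) {
            return Result.fail("1001", "quantity is not a number");
        } catch (Exception e) {
            return Result.fail("9999", "internal error");
        }
    }

    public static void main(String[] args) {
        for (String input : new String[] { "42", "abc", null }) {
            Result<Integer> result = parseQuantity(input);
            if (result.isSuccess()) {
                System.out.println("ok: " + result.getData());
            } else {
                System.out.println("failed: " + result.getResCode() + " " + result.getResMsg());
            }
        }
    }
}
```

Only a short code and message cross the wire on failure, which avoids the nested stack traces and serialization overhead described in point 2.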

Logging specification

【Mandatory】Applications must not use the APIs of logging systems (Log4j, Logback) directly. Instead, use the API of the logging facade SLF4J. A facade-pattern logging framework makes it easier to maintain and to keep log handling consistent across classes.

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

private static final Logger logger = LoggerFactory.getLogger(Abc.class);

【Mandatory】Log output at the trace/debug/info levels must use the conditional output form.

For example:

if (logger.isDebugEnabled()) {

logger.debug("Processing trade with userId: " + userId + " order : " + order );

}

Note: with logger.debug("Processing trade with userId: " + userId + " order : " + order); and a log level of warn, the log line is not printed, but the string concatenation is still performed. If order is an object, its toString() method is executed, wasting system resources on work whose result is never logged.
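
The wasted work can be measured directly. The sketch below uses java.util.logging from the JDK as a stand-in for SLF4J so that it runs without extra dependencies (Level.FINE plays the role of debug, and isLoggable the role of isDebugEnabled). The Order class and the call counter are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.logging.Level;
import java.util.logging.Logger;

class LogGuardDemo {

    static final AtomicInteger TO_STRING_CALLS = new AtomicInteger();

    // Hypothetical business object that counts how often it is converted to text
    static class Order {
        @Override
        public String toString() {
            TO_STRING_CALLS.incrementAndGet();
            return "Order#1";
        }
    }

    /** Returns { toString() calls without the guard, toString() calls with the guard }. */
    static int[] countToStringCalls() {
        Logger logger = Logger.getLogger("LogGuardDemo");
        logger.setLevel(Level.WARNING); // FINE (debug-level) messages are dropped
        Order order = new Order();

        TO_STRING_CALLS.set(0);
        // The message is never printed, but the concatenation still runs before the call
        logger.fine("Processing trade with order : " + order);
        int unguarded = TO_STRING_CALLS.get();

        TO_STRING_CALLS.set(0);
        if (logger.isLoggable(Level.FINE)) {
            logger.fine("Processing trade with order : " + order);
        }
        int guarded = TO_STRING_CALLS.get();

        return new int[] { unguarded, guarded };
    }

    public static void main(String[] args) {
        int[] calls = countToStringCalls();
        // prints: without guard: 1, with guard: 0
        System.out.println("without guard: " + calls[0] + ", with guard: " + calls[1]);
    }
}
```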

【Recommended】Log carefully. Do not output debug logs in the production environment; output info logs selectively. If you use warn to record business behavior right after launch, watch the log volume to avoid filling up the server disk, and remember to delete these observation logs in time.

Note: outputting a large number of useless logs does not help system performance and makes it harder to locate errors quickly.

【Recommended】The warn log level can be used to record invalid user input parameters, so that there is something to go on when users complain. Pay attention to the log level: the error level should record only system logic errors, exceptions, and other important errors. Unless necessary, do not log at the error level in scenarios that are not exceptions or errors.

【Mandatory】The return value of HTTP interfaces is uniformly wrapped as HttpResult, so that the caller can parse the returned result and handle the return status in a uniform way.

【Mandatory】All RPC interfaces must have a return value, uniformly wrapped as ServiceResult, so that the caller can parse the returned result and handle exception information in a uniform way. When there is nothing to return, declare the return type as ServiceResult<Void>.

【Mandatory】When a consumer calls an RPC interface, it must check the value of isSuccess() on the returned ServiceResult<T> and handle any error information it carries.

HttpResult

import java.util.HashMap;

import java.util.Map;

/**

* Wrapper for the objects returned by HTTP interfaces

*/

public class HttpResult {

/**

* Wraps an error result

* @param resCode return code

* @param resMsg return message

*/

public Map<String, Object> onFailure(String resCode, String resMsg) {

Map<String, Object> result = new HashMap<String, Object>(2);

result.put("resCode", resCode);

result.put("resMsg", resMsg);

return result;

}

/**

* Wraps a successful result

* @param data data to return

*/

public Map<String, Object> onSuccess(Map<String, Object> data) {

Map<String, Object> result = new HashMap<String, Object>();

result.put("resCode", "0");

result.put("resMsg", "ok");

if (data != null) {

result.putAll(data);

}

return result;

}

}

ServiceResult

import java.io.Serializable;

/**

* Wrapper for the objects returned by the service layer

*/

public class ServiceResult<T> implements Serializable{

/**

* Whether the request succeeded

*/

private boolean success = false;

/**

* Return value code

*/

private String resCode;

/**

* Return message

*/

private String resMsg;

/**

* Return results

*/

private T result;

private ServiceResult() {

}

/**

* Wraps a successful result

* @param result the result to return

* @param <T> type of the result

* @return the wrapped successful result

*/

public static <T> ServiceResult<T> onSuccess(T result) {

ServiceResult<T> serviceResult = new ServiceResult<T>();

serviceResult.success = true;

serviceResult.result = result;

serviceResult.resCode = "0";

serviceResult.resMsg = "ok";

return serviceResult;

}

/**

* Wraps an error result

* @param resCode error code

* @param resMsg error message

* @return the wrapped error result

*/

public static <T> ServiceResult<T> onFailure(String resCode, String resMsg) {

ServiceResult<T> item = new ServiceResult<T>();

item.success = false;

item.resCode = resCode;

item.resMsg = resMsg;

return item;

}

/**

* Wraps an error result with the default message

* @param resCode error code

* @return the wrapped error result

*/

public static <T> ServiceResult<T> onFailure(String resCode) {

ServiceResult<T> item = new ServiceResult<T>();

item.success = false;

item.resCode = resCode;

item.resMsg = "failure";

return item;

}

public boolean hasResult() {

return result != null;

}

public boolean isSuccess() {

return success;

}

public T getResult() {

return result;

}

public String getResCode() {

return resCode;

}

public String getResMsg() {

return resMsg;

}

@Override

public String toString() {

return "ServiceResult{" +

"success=" + success +

", resCode='" + resCode + '\'' +

", resMsg='" + resMsg + '\'' +

", result=" + result +

'}';

}

}

Launch and deployment instructions

Login address: https://signin.aliyun.com/flaginfo/login.htm

EDAS console entry

Select 《Enterprise Distributed Application Service EDAS》 in the list on the left of the page, or the 《Enterprise Distributed Application Service EDAS》 label at the bottom middle of the page, to enter the EDAS console.

EDAS console

This page is referred to as the EDAS console. EDAS resource management, application management, configuration management, performance monitoring, task scheduling, and trace analysis are all integrated here.

Click Application management → Create application, enter the application information, and then click Next.

Field description:

Application runtime environment: the version of the container (Ali-Tomcat) that runs the application; the default is the latest version.

Application name: the name of the application (this service's name); it must be unique within the primary account.

Application region: select East China 1 (at present all of our EDAS servers are in East China 1).

Application health check: EDAS visits this URL periodically and determines whether the application is alive from the response status. If it is not configured, EDAS does not perform health checks on the application, but this does not affect the normal operation of the application.

Remarks: descriptive information about the application.

Set the application type and network type

Field description:

Application type: select General application.

Network type: select Classic network.

Instance: select the instance on which the application is to be deployed.

Click to create the application (after this step the application container is created; next, upload the WAR package or JAR package).

Click Application management → Manage (in the operations column of the application to be deployed) → Deploy application, upload the JAR package, enter the version information, and then click Deploy application.

Deployment parameter description:

File upload method:

Upload WAR or JAR package: after selecting this option, click Select file to the right of the upload field below, open the local folder, and select the WAR or JAR package to deploy.

WAR or JAR package address: after selecting this option, enter in the text box to the right of the address field below a URL where the WAR or JAR package is permanently stored and accessible.

Use historical version: after selecting this option, choose the version to use in the historical version drop-down box below.

Publish target group: the group to which this application version is published.

Version: the application version identifies the deployment package used for a given release. It helps users tell apart the deployment package of each release and makes it possible to trace a specific release precisely when rolling back.

Note: when deploying an application you can enter a version number or a text description. Using a timestamp as the version number is not recommended.

Version description: a description of this WAR/JAR package version.

Historical version (applies only when the file upload method is Use historical version): select the historical version to use in the drop-down box.

After the application has been created and deployed, click Start application in the upper right corner of the application details page to start it.

After the application starts, a "Started successfully" prompt appears in the upper right corner of the page, and the task status of the instances in the application is shown as Running.

In the left navigation bar, click Run logs → Real-time logs, then double-click catalina.out to view the startup log.

Log in to the EDAS console.

In the left navigation bar, click Application management.

In the application list, click the application name.

In the left navigation bar of the application page, select Application monitoring → Monitoring dashboard.

Explanation: the dashboard covers CPU, load, memory, disk, and network. Hover over a point on the horizontal axis of a monitoring chart to view the data and status at that point in time.

EDAS collects data from the machines on which the application runs and, based on the analysis results, provides single-machine and cluster views of five main indicators: CPU, memory, load, network, and disk. All monitoring data is aggregated and processed per application.

Note: there is a delay between data collection and analysis, so EDAS cannot provide a fully real-time monitoring view. The current delay is 2 minutes.

The steps to view the cluster or single-machine statistics are:

Log in to the EDAS console, click Application management, and click the specific application in the application list.

Select Application monitoring → Basic monitoring to open the basic monitoring page.

By default the basic monitoring page shows grouped data for the last half hour. You can also view grouped or single-machine data for other time intervals by selecting a time interval and the single-machine data tab.

Select the monitoring data type

The monitored data types are grouped data and single-machine data.

These two data types monitor the same set of indicators from different dimensions:

CPU statistics: the CPU usage, which is the sum of the user and sys usage. The grouped-data chart shows the average usage of all machines in the application group.

Memory statistics: the total size of physical memory and the amount actually used. The grouped-data chart shows the total size and total used size across all machines in the application group.

Load statistics: the 1 min load field of the system load. The grouped-data chart shows the average 1 min load of all machines in the application group.

Network speed statistics: the read and write speed of the network cards. If the machine has several network cards, this is the sum of the read and write speeds of all cards whose names start with "eth". The grouped-data chart shows the average across all machines in the application group.

Disk statistics: the total size and used size of all disks attached to the machine. The grouped-data chart shows the total size and total used size across all machines in the application group.

Disk read/write speed statistics: the sum of the read and write speeds of all disks attached to the machine. The grouped-data chart shows the average of this value across all machines in the application group.

Disk read/write count statistics: the sum of the read and write operations per second (IOPS) of all disks attached to the machine. The grouped-data chart shows the average of this value across all machines in the application group.
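The difference between averaged and summed indicators in grouped data can be sketched as follows. This is only an illustration of the rules listed above (CPU and load are averaged, memory is summed); the class, field, and method names are hypothetical and do not reflect how EDAS stores or computes its data.

```java
import java.util.Arrays;
import java.util.List;

public class GroupMetricsSketch {

    // One machine's sample at a point in time; all field names are hypothetical.
    public static final class MachineSample {
        public final double cpuUserPct;
        public final double cpuSysPct;
        public final double load1Min;
        public final long memTotalMb;
        public final long memUsedMb;

        public MachineSample(double cpuUserPct, double cpuSysPct, double load1Min,
                             long memTotalMb, long memUsedMb) {
            this.cpuUserPct = cpuUserPct;
            this.cpuSysPct = cpuSysPct;
            this.load1Min = load1Min;
            this.memTotalMb = memTotalMb;
            this.memUsedMb = memUsedMb;
        }

        // Single-machine CPU usage is the sum of user and sys usage.
        public double cpuPct() {
            return cpuUserPct + cpuSysPct;
        }
    }

    // Grouped CPU usage: average over all machines in the group.
    public static double groupCpuPct(List<MachineSample> samples) {
        return samples.stream().mapToDouble(MachineSample::cpuPct).average().orElse(0.0);
    }

    // Grouped load: average 1 min load over all machines in the group.
    public static double groupLoad1Min(List<MachineSample> samples) {
        return samples.stream().mapToDouble(s -> s.load1Min).average().orElse(0.0);
    }

    // Grouped memory: totals are summed, not averaged.
    public static long groupMemTotalMb(List<MachineSample> samples) {
        return samples.stream().mapToLong(s -> s.memTotalMb).sum();
    }

    public static long groupMemUsedMb(List<MachineSample> samples) {
        return samples.stream().mapToLong(s -> s.memUsedMb).sum();
    }

    public static void main(String[] args) {
        List<MachineSample> group = Arrays.asList(
                new MachineSample(10.0, 5.0, 1.0, 8192, 4096),
                new MachineSample(20.0, 5.0, 3.0, 16384, 8192));
        System.out.println("group CPU %:      " + groupCpuPct(group));
        System.out.println("group 1 min load: " + groupLoad1Min(group));
        System.out.println("group memory MB:  " + groupMemUsedMb(group) + " / " + groupMemTotalMb(group));
    }
}
```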

Set the time interval

The available time intervals are half an hour, six hours, one day, and one week.

Half an hour: monitoring data for the half hour before the current time. This is the default when you open the basic monitoring page. Data points are 1 minute apart, which is the finest query granularity EDAS provides.

Six hours: monitoring data for the 6 hours before the current time. Data points are 5 minutes apart.

One day: monitoring data for the 24 hours before the current time. Data points are 15 minutes apart.

One week: monitoring data for the 7 days before the current time. Data points are 1 hour apart. This is the longest statistical period EDAS provides.

Explanation:

The start time and end time on the page define the time span of the current view. When you set one of them, the other is adjusted automatically. For example, if you choose half an hour and set the end time to May 20, 2016 12:00:00, the start time is automatically set to May 20, 2016 11:30:00.

After the settings are changed, the monitoring data is updated automatically for the selected time interval.
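The relationship between the interval, the spacing of data points, and the automatically adjusted start time can be sketched as below. The enum and method names are hypothetical; only the four intervals, their point spacing, and the example times come from the description above.

```java
import java.time.Duration;
import java.time.LocalDateTime;

public class MonitorWindowSketch {

    // The four intervals and the spacing of their data points.
    public enum Window {
        HALF_HOUR(Duration.ofMinutes(30), Duration.ofMinutes(1)),
        SIX_HOURS(Duration.ofHours(6), Duration.ofMinutes(5)),
        ONE_DAY(Duration.ofHours(24), Duration.ofMinutes(15)),
        ONE_WEEK(Duration.ofDays(7), Duration.ofHours(1));

        public final Duration span;
        public final Duration step;

        Window(Duration span, Duration step) {
            this.span = span;
            this.step = step;
        }

        // Given the chosen end time, the start time is the end time minus the span.
        public LocalDateTime startFor(LocalDateTime end) {
            return end.minus(span);
        }

        // Number of data points that fit in the window at this spacing.
        public long points() {
            return span.toMinutes() / step.toMinutes();
        }
    }

    public static void main(String[] args) {
        LocalDateTime end = LocalDateTime.of(2016, 5, 20, 12, 0, 0);
        for (Window w : Window.values()) {
            System.out.println(w + ": start=" + w.startFor(end) + ", points=" + w.points());
        }
    }
}
```

With these spacings the four windows hold 30, 72, 96, and 168 points respectively, so longer windows trade resolution for a chart of similar density.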

By collecting and analyzing the log points embedded in the various network-call middleware, EDAS can reconstruct the call chain across systems for a single request. This helps sort out an application's request entry points, service call sources, and dependencies, and it is also very useful for analyzing system call bottlenecks, estimating link capacity, and quickly locating exceptions.
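The idea of turning scattered log points into call chains and dependencies can be sketched roughly as follows. This is a generic illustration, not the EDAS log format or algorithm: the `LogPoint` fields, the service names, and the helper methods are all assumptions.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

public class CallChainSketch {

    // One log point written by middleware for a single call (hypothetical fields).
    public static final class LogPoint {
        public final String traceId;
        public final String caller;
        public final String callee;
        public final long startMs;

        public LogPoint(String traceId, String caller, String callee, long startMs) {
            this.traceId = traceId;
            this.caller = caller;
            this.callee = callee;
            this.startMs = startMs;
        }
    }

    // Groups log points that share a trace id and orders each group by start time,
    // giving the sequence of calls made while serving one request.
    public static Map<String, List<LogPoint>> byTrace(List<LogPoint> points) {
        Map<String, List<LogPoint>> chains = new LinkedHashMap<>();
        for (LogPoint p : points) {
            chains.computeIfAbsent(p.traceId, k -> new ArrayList<>()).add(p);
        }
        for (List<LogPoint> chain : chains.values()) {
            chain.sort(Comparator.comparingLong((LogPoint p) -> p.startMs));
        }
        return chains;
    }

    // Collapses all log points into distinct "caller -> callee" dependency edges.
    public static Set<String> dependencies(List<LogPoint> points) {
        Set<String> edges = new TreeSet<>();
        for (LogPoint p : points) {
            edges.add(p.caller + " -> " + p.callee);
        }
        return edges;
    }

    public static void main(String[] args) {
        List<LogPoint> points = Arrays.asList(
                new LogPoint("t1", "order-service", "stock-service", 20L),
                new LogPoint("t1", "web", "order-service", 10L),
                new LogPoint("t2", "web", "order-service", 30L));
        byTrace(points).forEach((traceId, chain) ->
                System.out.println(traceId + ": " + chain.size() + " call(s)"));
        System.out.println(dependencies(points));
    }
}
```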

Log in to the EDAS console.

In the left navigation bar, click Application management.

In the application list, click the application name.

In the left navigation bar of the application page, select Application monitoring > Service monitoring.

The service monitoring page contains seven tabs:

Application summary: shows the overall status of the services provided and the services called.

My entrance: shows the list of HTTP entry points provided.

Provided RPC service: shows the call status of the RPC services the current application provides.

RPC call source: shows which applications call the RPC services the current application provides.

RPC call dependency: shows which application services the current application calls.

Access database: shows the requests made to the database.

Message type: MQ messages and similar.

The application summary tab of the default group is shown by default.

(Optional) Set the monitoring conditions and click Update to refresh the monitoring data.

Latest: the data for the current time is shown by default. Click the drop-down arrow to choose a time manually.

Order by: sorted by QPS by default. Click the drop-down arrow to sort by elapsed time or by errors/s (the average error QPS within one minute).

Number of results: 10 results are shown by default. Click the drop-down arrow to set the number displayed; the options include 1, 5, 30, 50, 100, and unlimited.

Display: a tile chart is shown by default. You can also choose multiple charts or a table as the presentation form.
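The "order by" and "number of results" conditions amount to a sort followed by a limit. The sketch below shows that combination on made-up statistics; the `ServiceStat` fields, the `SortBy` values, and the convention that a limit of 0 or less means unlimited are assumptions for illustration only.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

public class ServiceRankingSketch {

    // Per-service statistics for one time point (hypothetical fields).
    public static final class ServiceStat {
        public final String name;
        public final double qps;
        public final double avgCostMs;
        public final double errorsPerSec;

        public ServiceStat(String name, double qps, double avgCostMs, double errorsPerSec) {
            this.name = name;
            this.qps = qps;
            this.avgCostMs = avgCostMs;
            this.errorsPerSec = errorsPerSec;
        }
    }

    public enum SortBy { QPS, COST, ERRORS }

    // Sorts in descending order of the chosen indicator and keeps the first `limit` entries.
    // A limit of 0 or less is treated as "unlimited" in this sketch.
    public static List<ServiceStat> top(List<ServiceStat> stats, SortBy sortBy, int limit) {
        Comparator<ServiceStat> order;
        switch (sortBy) {
            case COST:
                order = Comparator.comparingDouble((ServiceStat s) -> s.avgCostMs);
                break;
            case ERRORS:
                order = Comparator.comparingDouble((ServiceStat s) -> s.errorsPerSec);
                break;
            default:
                order = Comparator.comparingDouble((ServiceStat s) -> s.qps);
        }
        return stats.stream()
                .sorted(order.reversed())
                .limit(limit > 0 ? limit : Long.MAX_VALUE)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<ServiceStat> stats = Arrays.asList(
                new ServiceStat("a", 50.0, 12.0, 0.1),
                new ServiceStat("b", 80.0, 30.0, 0.0),
                new ServiceStat("c", 20.0, 45.0, 0.5));
        for (ServiceStat s : top(stats, SortBy.QPS, 2)) {
            System.out.println(s.name + " qps=" + s.qps);
        }
    }
}
```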

Click a specific indicator in a column of the monitoring chart to open the custom query page and view the monitoring data for that indicator.

In the indicator area you can choose different indicators to monitor different combinations of data.

While monitoring services you can follow the call relationships between this application and the applications it depends on, and you can also view the detailed call link.

In the monitoring chart, click View call chain to jump to the Link analysis > Call chain query page.

On the call chain query page you can view the call links between the application and the services it calls or is called by.

Click a TraceId to jump to the call chain details page, which shows the details of the call chain.

Monitoring drill-down applications

The service monitoring page lets you query the call links related to the application, and you can also drill down into the applications it depends on or is depended on by.

In the Provided RPC service, RPC call source, or RPC call dependency tab, click the Source application, Called service, or Calling service button to the right of Drill down above the monitoring chart to open the monitoring page of the drill-down application.

Monitor the data of the drill-down application.

Full-link stress testing solution

https://www.aliyun.com/product/apsara-stack/doc?spm=5176.12021097.1218927.3.16e56015283E54

边栏推荐

- 对于程序员来说,伤害力度最大的话。。。

- mmclassification 标注文件生成

- Baidu R & D suffered Waterloo on three sides: I was stunned by the interviewer's set of combination punches on the spot

- Write a jison parser from scratch (1/10):jison, not JSON

- Solution to null JSON after serialization in golang

- Some summaries of the third anniversary of joining Ping An in China

- Svg image quoted from CodeChina

- MySQL transaction mvcc principle

- Deadlock in channel

- Luogu deep foundation part 1 Introduction to language Chapter 4 loop structure programming (2022.02.14)

猜你喜欢

Some summaries of the third anniversary of joining Ping An in China

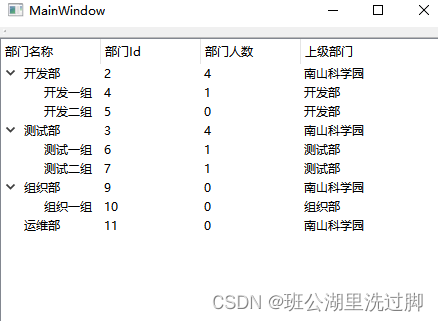

QTreeView+自定义Model实现示例

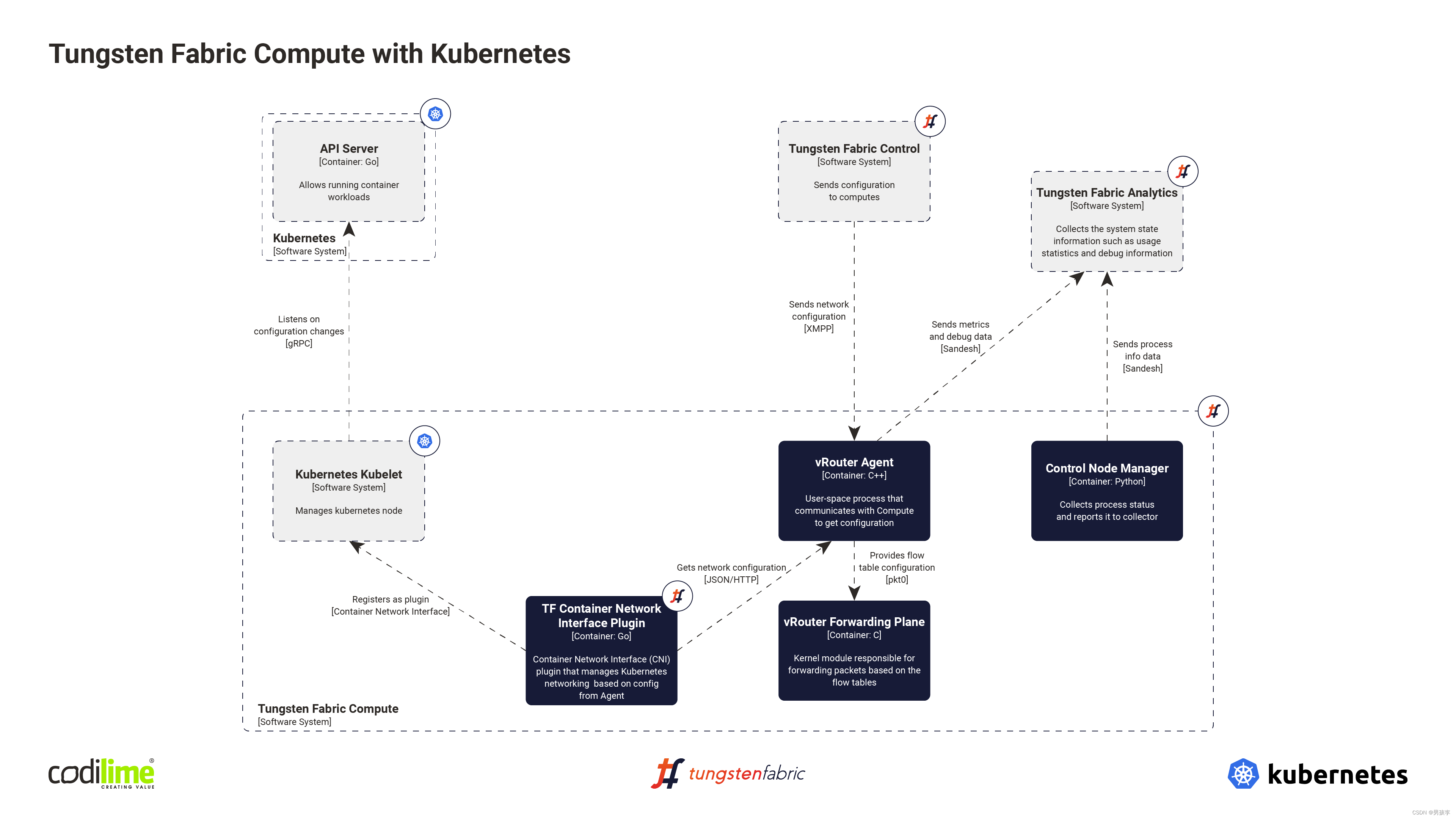

Fabric of kubernetes CNI plug-in

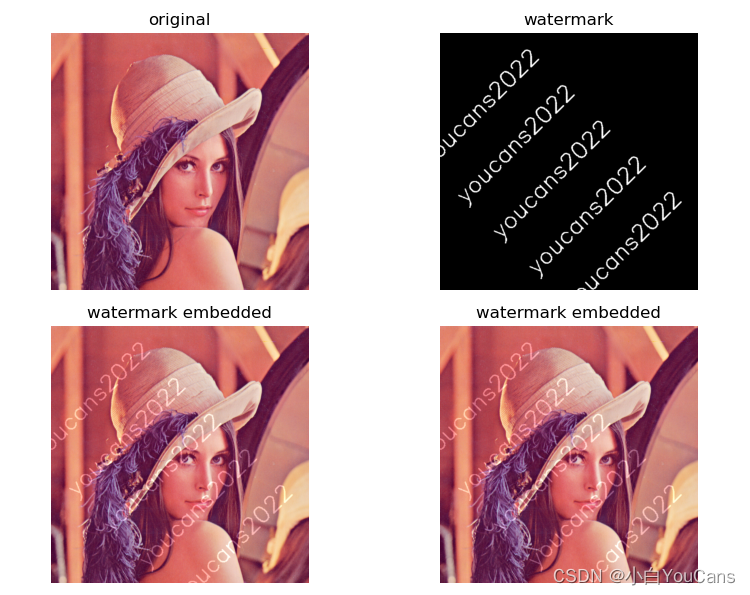

【OpenCV 例程200篇】218. 多行倾斜文字水印

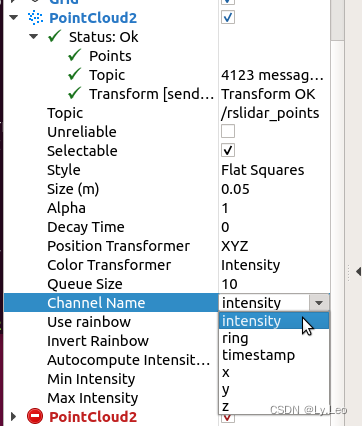

Pcl:: fromrosmsg alarm failed to find match for field 'intensity'

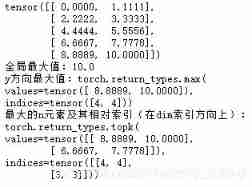

Hands on deep learning (III) -- Torch Operation (sorting out documents in detail)

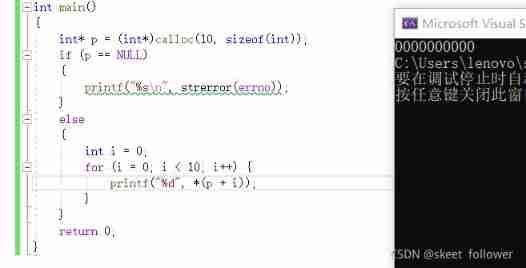

Dynamic memory management

用数据告诉你高考最难的省份是哪里!

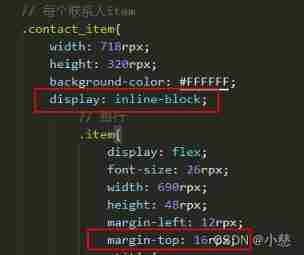

The child container margin top acts on the parent container

Summary of reasons for web side automation test failure

随机推荐

Launpad | 基礎知識

C语言指针经典面试题——第一弹

Custom type: structure, enumeration, union

原生div具有编辑能力

JDBC and MySQL database

Daughter love in lunch box

Hands on deep learning (43) -- machine translation and its data construction

Whether a person is reliable or not, closed loop is very important

C语言指针面试题——第二弹

浅谈Multus CNI

How to teach yourself to learn programming

Exercise 8-7 string sorting (20 points)

Deadlock in channel

Golang type comparison

Web端自动化测试失败原因汇总

libmysqlclient.so.20: cannot open shared object file: No such file or directory

AUTOSAR from getting started to mastering 100 lectures (106) - SOA in domain controllers

Summary of small program performance optimization practice

Pcl:: fromrosmsg alarm failed to find match for field 'intensity'

Write a jison parser from scratch (3/10): a good beginning is half the success -- "politics" (Aristotle)