
[Code Practice] [Stereo Matching Series] Classic AD-Census: (6) Multi-Step Disparity Optimization

2022-07-05 08:53:00 【Li Yingsong~】

Long time no see, everyone!

Download the complete source code here: https://github.com/ethan-li-coding/AD-Census

You are welcome to join the discussion in the GitHub project!

In the previous hands-on article, we walked through the code for the scanline optimization step of AD-Census.

After scanline optimization, the disparity map has largely taken shape. Let's review:

[Figure: disparity maps after scanline optimization]

Anyone familiar with stereo matching knows that a disparity optimization stage usually follows, to remove erroneous disparities and fill in the holes. AD-Census is no exception: it achieves this in 4 small steps:

- Outlier Detection

- Iterative Region Voting

- Proper Interpolation

- Depth Discontinuity Adjustment

We won't repeat the theory here; please see the earlier post:

[Theory] [Stereo Matching Series] Classic AD-Census: (4) Multi-Step Disparity Optimization

This article sticks closely to the code and aims to explain it clearly. Let's get started.


Code implementation

Class design

Member functions

We use a multi-step optimizer class, MultiStepRefiner, to implement this functionality. The class is implemented in the files multistep_refiner.h and multistep_refiner.cpp.

/** * \brief Multistep optimizer */

class MultiStepRefiner

{

public:

MultiStepRefiner();

~MultiStepRefiner();

};

To run the algorithm we need to allocate some memory for data and results; the Initialize member function takes care of this:

/** * \brief Initialization * \param width Image width * \param height Image height * \return true: initialization succeeded */

bool Initialize(const sint32& width, const sint32& height);

The algorithm needs input data and parameters, so we provide the member functions SetData and SetParam for that purpose:

/** * \brief Set the data for multi-step optimization * \param img_left // Left image data, three channels * \param cost // Cost data * \param cross_arms // Cross arm data * \param disp_left // Left disparity data * \param disp_right // Right disparity data */

void SetData(const uint8* img_left, float32* cost,const CrossArm* cross_arms, float32* disp_left, float32* disp_right);

/** * \brief Set the parameters for multi-step optimization * \param min_disparity // Minimum disparity * \param max_disparity // Maximum disparity * \param irv_ts // Iterative Region Voting parameter ts * \param irv_th // Iterative Region Voting parameter th * \param lrcheck_thres // Left-right consistency check threshold * \param do_lr_check // Whether to run the left-right consistency check * \param do_region_voting // Whether to run region voting * \param do_interpolating // Whether to run interpolation filling * \param do_discontinuity_adjustment // Whether to run depth discontinuity adjustment */

void SetParam(const sint32& min_disparity, const sint32& max_disparity, const sint32& irv_ts, const float32& irv_th, const float32& lrcheck_thres,

const bool& do_lr_check, const bool& do_region_voting, const bool& do_interpolating, const bool& do_discontinuity_adjustment);

Finally, we need a main function that performs the optimization:

/** \brief Multi-step disparity optimization */

void Refine();

That is the full list of the class's public functions; calling them in order completes the whole optimization.

We also know that Refine actually consists of four small steps, each an independent algorithm module, so we add four private member functions, one per step.

//------ 4 small disparity-optimization steps ------//

/** \brief Outlier detection */

void OutlierDetection();

/** \brief Iterative region voting */

void IterativeRegionVoting();

/** \brief Interpolation filling */

void ProperInterpolation();

/** \brief Depth discontinuity adjustment */

void DepthDiscontinuityAdjustment();

Last come the class's member variables: image data, cost data, disparity data, algorithm parameters and so on. They need no individual explanation.

/** \brief Image size */

sint32 width_;

sint32 height_;

/** \brief Left image data ( Three channels ) */

const uint8* img_left_;

/** \brief Cost data */

float32* cost_;

/** \brief Cross arm data */

const CrossArm* cross_arms_;

/** \brief Left disparity data */

float32* disp_left_;

/** \brief Right disparity data */

float32* disp_right_;

/** \brief Left view edge data */

vector<uint8> vec_edge_left_;

/** \brief Minimum disparity */

sint32 min_disparity_;

/** \brief Maximum disparity */

sint32 max_disparity_;

/** \brief Iterative Region Voting parameter ts */

sint32 irv_ts_;

/** \brief Iterative Region Voting parameter th */

float32 irv_th_;

/** \brief Left-right consistency check threshold */

float32 lrcheck_thres_;

/** \brief Whether to check the left and right consistency */

bool do_lr_check_;

/** \brief Whether to run region voting */

bool do_region_voting_;

/** \brief Whether to do interpolation filling */

bool do_interpolating_;

/** \brief Whether to make discontinuous area adjustment */

bool do_discontinuity_adjustment_;

/** \brief Occlusion pixel set */

vector<pair<int, int>> occlusions_;

/** \brief Mismatched area pixel set */

vector<pair<int, int>> mismatches_;

Class implementation

First, let's look at the three non-algorithmic helper functions Initialize, SetData and SetParam. Their code is fairly simple; they just prepare for the algorithm itself.

Initialize comes first. It only sets up the edge data: vec_edge_left_ is an array the same size as the image that stores an edge flag for every pixel, and it supplies the edge information used in the depth discontinuity adjustment step.

bool MultiStepRefiner::Initialize(const sint32& width, const sint32& height)

{

width_ = width;

height_ = height;

if (width_ <= 0 || height_ <= 0) {

return false;

}

// Initialize edge data

vec_edge_left_.clear();

vec_edge_left_.resize(width*height);

return true;

}

Next is SetData, which passes in the image data, cost data, cross arm data and the left and right disparity maps.

void MultiStepRefiner::SetData(const uint8* img_left, float32* cost,const CrossArm* cross_arms, float32* disp_left, float32* disp_right)

{

img_left_ = img_left;

cost_ = cost;

cross_arms_ = cross_arms;

disp_left_ = disp_left;

disp_right_= disp_right;

}

Then comes SetParam, which assigns all of the algorithm's parameters.

void MultiStepRefiner::SetParam(const sint32& min_disparity, const sint32& max_disparity, const sint32& irv_ts, const float32& irv_th, const float32& lrcheck_thres,

const bool& do_lr_check, const bool& do_region_voting, const bool& do_interpolating, const bool& do_discontinuity_adjustment)

{

min_disparity_ = min_disparity;

max_disparity_ = max_disparity;

irv_ts_ = irv_ts;

irv_th_ = irv_th;

lrcheck_thres_ = lrcheck_thres;

do_lr_check_ = do_lr_check;

do_region_voting_ = do_region_voting;

do_interpolating_ = do_interpolating;

do_discontinuity_adjustment_ = do_discontinuity_adjustment;

}

With the data and parameters assigned, what remains is the implementation of each optimization sub-step. Let's go through them one by one.

1. Outlier Detection

Outlier detection may sound unfamiliar, but it is simply a left-right consistency check, which has been discussed many times and is nothing new.

During outlier detection we split the outliers into occluded points and non-occluded (mismatched) points, exactly as in SGM. An occluded pixel $p$ has two properties:

(1) The disparity of $p$ is close to that of the surrounding background pixels.

(2) $p$ is invisible in the right image because of occlusion, so it gets matched to a foreground pixel in the right image. A foreground pixel's disparity is necessarily larger than a background pixel's, i.e. larger than the disparity of $p$.

For a more detailed explanation, please see this post:

[Code Practice] [Stereo Matching Series] Classic SGM: (6) Disparity Filling

Now let's look at the code:

void MultiStepRefiner::OutlierDetection()

{

const sint32 width = width_;

const sint32 height = height_;

const float32& threshold = lrcheck_thres_;

// Occlusion area pixels and mismatching area pixels

auto& occlusions = occlusions_;

auto& mismatches = mismatches_;

occlusions.clear();

mismatches.clear();

// --- Left and right consistency check

for (sint32 y = 0; y < height; y++) {

for (sint32 x = 0; x < width; x++) {

// Disparity in the left image

auto& disp = disp_left_[y * width + x];

if (disp == Invalid_Float) {

mismatches.emplace_back(x, y);

continue;

}

// Use the disparity to find the corresponding pixel in the right image

const auto col_right = lround(x - disp);

if (col_right >= 0 && col_right < width) {

// Disparity of the corresponding pixel in the right image

const auto& disp_r = disp_right_[y * width + col_right];

// Check whether the two disparities agree (difference within the threshold)

if (abs(disp - disp_r) > threshold) {

// Distinguish occluded pixels from mismatched pixels:

// use the right-image disparity to find the matching pixel in the left image and read its disparity disp_rl

// if(disp_rl > disp)

// pixel in occlusions

// else

// pixel in mismatches

const sint32 col_rl = lround(col_right + disp_r);

// Column 0 is a valid column, so the lower bound is inclusive

if (col_rl >= 0 && col_rl < width) {

const auto& disp_l = disp_left_[y * width + col_rl];

if (disp_l > disp) {

occlusions.emplace_back(x, y);

}

else {

mismatches.emplace_back(x, y);

}

}

else {

mismatches.emplace_back(x, y);

}

// Make the parallax value invalid

disp = Invalid_Float;

}

}

else {

// The disparity points outside the right image, so no corresponding pixel exists

disp = Invalid_Float;

mismatches.emplace_back(x, y);

}

}

}

}

We run the consistency check on every disparity in the left view. Pixels that fail it have their disparity set to the invalid value and are stored separately as mismatched pixels or occluded pixels, so that the later interpolation step can treat the two groups differently.
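The classification rule can be tried out on a single scanline. The sketch below is a simplified, self-contained version of the check, not the project's code: `ClassifyPixel`, `PixelLabel` and `kInvalid` are hypothetical names introduced for illustration, the disparity rows are plain vectors, and an invalid right disparity is treated as a mismatch (an assumption of this sketch).

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

// Hypothetical stand-in for the project's Invalid_Float marker.
const float kInvalid = std::numeric_limits<float>::infinity();

enum class PixelLabel { Consistent, Occlusion, Mismatch };

// Classify pixel x of one scanline from its left and right disparity rows.
PixelLabel ClassifyPixel(const std::vector<float>& disp_left,
                         const std::vector<float>& disp_right,
                         int x, float threshold)
{
    const int width = static_cast<int>(disp_left.size());
    assert(x >= 0 && x < width);

    const float d = disp_left[x];
    if (d == kInvalid) return PixelLabel::Mismatch;

    // Corresponding column in the right image.
    const int col_right = static_cast<int>(std::lround(x - d));
    if (col_right < 0 || col_right >= width) return PixelLabel::Mismatch;

    const float d_r = disp_right[col_right];
    if (d_r == kInvalid) return PixelLabel::Mismatch;  // assumption of this sketch
    if (std::fabs(d - d_r) <= threshold) return PixelLabel::Consistent;

    // Map the right pixel back into the left image and compare disparities:
    // a larger disparity there means a foreground pixel hides x.
    const int col_rl = static_cast<int>(std::lround(col_right + d_r));
    if (col_rl >= 0 && col_rl < width && disp_left[col_rl] > d) {
        return PixelLabel::Occlusion;
    }
    return PixelLabel::Mismatch;
}
```

For example, with a left row `{1,1,1,1,3,3,3,3}` the background pixel at x = 3 lands on a right pixel that belongs to the foreground (disparity 3), and mapping back reaches a left pixel with the larger disparity, so it is labeled as occluded.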

2. Iterative Region Voting

For an invalid pixel $p$, take all reliable pixels in its cross-based support region and build the histogram $H_p$ of their disparities over the range $[0, d_{max}]$ (each histogram value is the number of votes a disparity receives). The disparity with the most votes is denoted $d_p^*$, and the number of reliable pixels is denoted $S_p$. If there are enough reliable pixels and the winning disparity has a large enough share of the votes, $d_p^*$ is assigned to $p$. Both notions of "enough" are controlled by thresholds:

$$S_p > \tau_s, \qquad \frac{H_p(d_p^*)}{S_p} > \tau_H$$

where $\tau_s$ and $\tau_H$ are two preset thresholds.

That is the theory of region voting. In practice, for each invalid pixel $p$ we compute the disparity histogram of all reliable pixels in its cross-based support region and pick the most frequent disparity $d_p^*$. If its share of the total exceeds the threshold and the support region contains enough reliable pixels, we assign $d_p^*$ to $p$.

This operation is repeated several times; the algorithm recommends 5 iterations.

So let's look at the code :

void MultiStepRefiner::IterativeRegionVoting()

{

const sint32 width = width_;

const auto disp_range = max_disparity_ - min_disparity_;

if(disp_range <= 0) {

return;

}

const auto arms = cross_arms_;

// Histogram

vector<sint32> histogram(disp_range,0);

// Iterate 5 times

const sint32 num_iters = 5;

for (sint32 it = 0; it < num_iters; it++) {

for (sint32 k = 0; k < 2; k++) {

auto& trg_pixels = (k == 0) ? mismatches_ : occlusions_;

for (auto& pix : trg_pixels) {

const sint32& x = pix.first;

const sint32& y = pix.second;

auto& disp = disp_left_[y * width + x];

if(disp != Invalid_Float) {

continue;

}

// init histogram

memset(&histogram[0], 0, disp_range * sizeof(sint32));

// Compute the disparity histogram of the support region

// Get the arms

auto& arm = arms[y * width + x];

// Traverse the support region and accumulate the disparity histogram

for (sint32 t = -arm.top; t <= arm.bottom; t++) {

const sint32& yt = y + t;

auto& arm2 = arms[yt * width_ + x];

for (sint32 s = -arm2.left; s <= arm2.right; s++) {

const auto& d = disp_left_[yt * width + x + s];

if (d != Invalid_Float) {

const auto di = lround(d);

histogram[di - min_disparity_]++;

}

}

}

// Find the disparity at the histogram peak

sint32 best_disp = 0, count = 0;

sint32 max_ht = 0;

for (sint32 d = 0; d < disp_range; d++) {

const auto& h = histogram[d];

if (max_ht < h) {

max_ht = h;

best_disp = d;

}

count += h;

}

if (max_ht > 0) {

if (count > irv_ts_ && max_ht * 1.0f / count > irv_th_) {

disp = best_disp + min_disparity_;

}

}

}

// Delete filled pixels

for (auto it = trg_pixels.begin(); it != trg_pixels.end();) {

const sint32 x = it->first;

const sint32 y = it->second;

if(disp_left_[y * width + x]!=Invalid_Float) {

it = trg_pixels.erase(it);

}

else {

++it;

}

}

}

}

}
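The voting rule for a single pixel can be isolated as follows. This is a minimal sketch under simplifying assumptions, not the function above: `VoteDisparity` is a hypothetical helper, and the reliable disparities of the support region are passed in as a plain vector instead of being gathered through the cross arms. Disparities outside the range are skipped, which the function above does not do.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Vote among the reliable disparities of one support region.
// Returns true and writes the winner to `voted` when both thresholds pass:
//   S_p > tau_s  and  H_p(d*) / S_p > tau_h
bool VoteDisparity(const std::vector<float>& reliable_disps,
                   int min_disparity, int max_disparity,
                   int tau_s, float tau_h, float& voted)
{
    const int disp_range = max_disparity - min_disparity;
    assert(disp_range > 0);
    std::vector<int> histogram(disp_range, 0);

    int count = 0;  // S_p: number of reliable pixels that voted
    for (const float d : reliable_disps) {
        const int bin = static_cast<int>(std::lround(d)) - min_disparity;
        if (bin < 0 || bin >= disp_range) continue;  // skip out-of-range values
        ++histogram[bin];
        ++count;
    }

    // Find the histogram peak H_p(d*).
    int best_bin = 0, max_votes = 0;
    for (int b = 0; b < disp_range; ++b) {
        if (histogram[b] > max_votes) {
            max_votes = histogram[b];
            best_bin = b;
        }
    }
    if (max_votes == 0) return false;

    if (count > tau_s && static_cast<float>(max_votes) / count > tau_h) {
        voted = static_cast<float>(best_bin + min_disparity);
        return true;
    }
    return false;
}
```

With the six votes `{10, 10, 10, 10, 12, 11}`, disparity 10 holds 4 of 6 votes (about 0.67). It is accepted for thresholds such as 5 and 0.4, and rejected when either threshold is raised above what the region provides.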

3. Proper Interpolation

This step is essentially disparity filling. The consistency check has split the invalid disparities into occluded pixels and mismatched pixels. For an invalid pixel $p$, we first search along 16 directions in its neighborhood for reliable disparity values. For an occluded pixel, we pick the minimum of those reliable disparities, because an occluded pixel most likely belongs to the background, and background disparities tend to be small. For a mismatched pixel, we pick the disparity of the pixel whose color is closest to that of $p$, because pixels with similar colors tend to have similar disparities (the search distance should be limited here, since the assumption is likely to fail for pixels that are too far away).

void MultiStepRefiner::ProperInterpolation()

{

const sint32 width = width_;

const sint32 height = height_;

const float32 pi = 3.1415926f;

// Maximum search distance; there is no need to search pixels that are too far away

const sint32 max_search_length = std::max(abs(max_disparity_), abs(min_disparity_));

std::vector<pair<sint32, float32>> disp_collects;

for (sint32 k = 0; k < 2; k++) {

auto& trg_pixels = (k == 0) ? mismatches_ : occlusions_;

if (trg_pixels.empty()) {

continue;

}

std::vector<float32> fill_disps(trg_pixels.size());

// Traverse the pixels to be processed

for (auto n = 0u; n < trg_pixels.size(); n++) {

auto& pix = trg_pixels[n];

const sint32 x = pix.first;

const sint32 y = pix.second;

// Collect the first valid disparity encountered in each of the 16 directions

disp_collects.clear();

double ang = 0.0;

for (sint32 s = 0; s < 16; s++) {

const auto sina = sin(ang);

const auto cosa = cos(ang);

for (sint32 m = 1; m < max_search_length; m++) {

const sint32 yy = lround(y + m * sina);

const sint32 xx = lround(x + m * cosa);

if (yy < 0 || yy >= height || xx < 0 || xx >= width) {

break;

}

const auto& d = disp_left_[yy * width + xx];

if (d != Invalid_Float) {

disp_collects.emplace_back(yy * width * 3 + 3 * xx, d);

break;

}

}

// Step by 2*pi/16 so that the 16 directions cover the full circle

ang += pi / 8;

}

if (disp_collects.empty()) {

continue;

}

// For a mismatched pixel, pick the disparity of the candidate with the closest color

// For an occluded pixel, pick the minimum disparity

if (k == 0) {

sint32 min_dist = 9999;

float32 d = 0.0f;

const auto color = ADColor(img_left_[y*width * 3 + 3 * x], img_left_[y*width * 3 + 3 * x + 1], img_left_[y*width * 3 + 3 * x + 2]);

for (auto& dc : disp_collects) {

const auto color2 = ADColor(img_left_[dc.first], img_left_[dc.first + 1], img_left_[dc.first + 2]);

const auto dist = abs(color.r - color2.r) + abs(color.g - color2.g) + abs(color.b - color2.b);

if (min_dist > dist) {

min_dist = dist;

d = dc.second;

}

}

fill_disps[n] = d;

}

else {

float32 min_disp = Large_Float;

for (auto& dc : disp_collects) {

min_disp = std::min(min_disp, dc.second);

}

fill_disps[n] = min_disp;

}

}

for (auto n = 0u; n < trg_pixels.size(); n++) {

auto& pix = trg_pixels[n];

const sint32 x = pix.first;

const sint32 y = pix.second;

disp_left_[y * width + x] = fill_disps[n];

}

}

}
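The two selection rules can be separated from the directional search. The sketch below assumes the candidates (the first valid pixel found along each direction) have already been collected; `Rgb`, `Candidate`, `FillOccluded` and `FillMismatched` are hypothetical names used only for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdlib>
#include <limits>
#include <vector>

struct Rgb { int r, g, b; };                  // hypothetical color type
struct Candidate { Rgb color; float disp; };  // first valid pixel along one direction

// Occluded pixel: take the smallest candidate disparity (most likely background).
float FillOccluded(const std::vector<Candidate>& cands)
{
    assert(!cands.empty());
    float min_disp = cands.front().disp;
    for (const auto& c : cands) {
        min_disp = std::min(min_disp, c.disp);
    }
    return min_disp;
}

// Mismatched pixel: take the disparity of the candidate whose color is
// closest to the pixel's own color (L1 distance over the three channels).
float FillMismatched(const Rgb& p, const std::vector<Candidate>& cands)
{
    assert(!cands.empty());
    int best_dist = std::numeric_limits<int>::max();
    float best_disp = cands.front().disp;
    for (const auto& c : cands) {
        const int dist = std::abs(p.r - c.color.r) +
                         std::abs(p.g - c.color.g) +
                         std::abs(p.b - c.color.b);
        if (dist < best_dist) {
            best_dist = dist;
            best_disp = c.disp;
        }
    }
    return best_disp;
}
```

Given three candidates with disparities 5, 2 and 7, an occluded pixel receives 2, while a mismatched pixel receives the disparity of whichever candidate looks most like it, regardless of how large that disparity is.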

4. Depth Discontinuity Adjustment

The purpose of this step is to further refine the disparities in depth-discontinuity regions.

First, edge detection is run on the disparity map. For a pixel $p$ on an edge, denote its disparity by $D(p)$ and the disparities of its left and right neighbors $p_1$ and $p_2$ by $D_L(p_1)$ and $D_L(p_2)$. If assigning either $D_L(p_1)$ or $D_L(p_2)$ to $p$ gives a matching cost smaller than the original matching cost $C_2(p, D(p))$, then $D(p)$ is replaced with that disparity.

In other words, we fine-tune the disparities of edge pixels, choosing between the left and right neighbors' disparities whichever lowers the cost.

The implementation is therefore fairly simple: run edge detection, check whether the disparity of the left or right neighbor gives a smaller matching cost, and replace if so. For edge detection we use the Sobel operator, which is just two simple 2D convolutions (other edge detectors can be used instead).

void MultiStepRefiner::EdgeDetect(uint8* edge_mask, const float32* disp_ptr, const sint32& width, const sint32& height, const float32 threshold)

{

memset(edge_mask, 0, width*height * sizeof(uint8));

// sobel operator

for (int y = 1; y < height - 1; y++) {

for (int x = 1; x < width - 1; x++) {

const auto grad_x = (-disp_ptr[(y - 1) * width + x - 1] + disp_ptr[(y - 1) * width + x + 1]) +

(-2 * disp_ptr[y * width + x - 1] + 2 * disp_ptr[y * width + x + 1]) +

(-disp_ptr[(y + 1) * width + x - 1] + disp_ptr[(y + 1) * width + x + 1]);

const auto grad_y = (-disp_ptr[(y - 1) * width + x - 1] - 2 * disp_ptr[(y - 1) * width + x] - disp_ptr[(y - 1) * width + x + 1]) +

(disp_ptr[(y + 1) * width + x - 1] + 2 * disp_ptr[(y + 1) * width + x] + disp_ptr[(y + 1) * width + x + 1]);

const auto grad = abs(grad_x) + abs(grad_y);

if (grad > threshold) {

edge_mask[y*width + x] = 1;

}

}

}

}
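To get a feel for the threshold, consider an idealized vertical step edge. Assume (purely for illustration) that the disparity equals $a$ in column $x-1$ and $b$ in column $x+1$ on all three rows of the 3x3 window. The two Sobel responses computed in EdgeDetect then reduce to:

$$\text{grad}_x = (b - a) + 2(b - a) + (b - a) = 4(b - a), \qquad \text{grad}_y = 0$$

So the tested quantity is $|\text{grad}_x| + |\text{grad}_y| = 4|b - a|$. With a threshold of 5, a disparity jump of 1 gives 4 and is not marked as an edge, while a jump of 2 gives 8 and is marked.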

void MultiStepRefiner::DepthDiscontinuityAdjustment()

{

const sint32 width = width_;

const sint32 height = height_;

const auto disp_range = max_disparity_ - min_disparity_;

if (disp_range <= 0) {

return;

}

// Edge detection on the disparity map

// Any edge detector works; the Sobel operator is used here

const float32 edge_thres = 5.0f;

EdgeDetect(&vec_edge_left_[0], disp_left_, width, height, edge_thres);

// Adjust the disparity of edge pixels

for (sint32 y = 0; y < height; y++) {

for (sint32 x = 1; x < width - 1; x++) {

const auto& e_label = vec_edge_left_[y*width + x];

if (e_label == 1) {

const auto disp_ptr = disp_left_ + y*width;

float32& d = disp_ptr[x];

if (d != Invalid_Float) {

const sint32 di = lround(d);

const auto cost_ptr = cost_ + y*width*disp_range + x*disp_range;

// The cost volume is indexed by (disparity - min_disparity_)

float32 c0 = cost_ptr[di - min_disparity_];

// Read the disparity and cost of the left and right neighbors

// and keep the disparity with the lowest cost

for (int k = 0; k<2; k++) {

const sint32 x2 = (k == 0) ? x - 1 : x + 1;

const float32& d2 = disp_ptr[x2];

if (d2 != Invalid_Float) {

// Round only after the validity check, so an invalid value is never rounded

const sint32 d2i = lround(d2);

const auto& c = (k == 0) ? cost_ptr[-disp_range + d2i - min_disparity_] : cost_ptr[disp_range + d2i - min_disparity_];

if (c < c0) {

d = d2;

c0 = c;

}

}

}

}

}

}

}

}
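The replacement rule for one edge pixel can also be written on its own. The sketch below follows the rule as worded in the description, evaluating the cost of $p$ itself at each neighbor's disparity; that reading is an assumption, and the function above instead reads each neighbor's own cost entry. `AdjustEdgeDisparity` is a hypothetical helper, and `cost_p` stands for the cost slice of a single pixel.

```cpp
#include <cassert>
#include <cmath>
#include <initializer_list>
#include <vector>

// cost_p: matching costs of pixel p, indexed by (d - min_disparity).
// Returns the disparity among {d_p, d_left, d_right} with the lowest cost at p;
// ties keep the current disparity d_p.
float AdjustEdgeDisparity(const std::vector<float>& cost_p, int min_disparity,
                          float d_p, float d_left, float d_right)
{
    const int range = static_cast<int>(cost_p.size());
    auto cost_at = [&](float d) {
        const int idx = static_cast<int>(std::lround(d)) - min_disparity;
        assert(idx >= 0 && idx < range);
        return cost_p[idx];
    };

    float best_d = d_p;
    float best_c = cost_at(d_p);
    for (const float d : {d_left, d_right}) {
        const float c = cost_at(d);
        if (c < best_c) {
            best_c = c;
            best_d = d;
        }
    }
    return best_d;
}
```

For a cost slice `{5, 4, 3, 2, 9, 9}` and a current disparity of 1 (cost 4), a left neighbor with disparity 3 (cost 2) wins, while a right neighbor with disparity 4 (cost 9) never would.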

Those are all the sub-steps. Note that the paper also has a sub-pixel refinement step. Some readers have asked why it does not appear in this code: I placed sub-pixel refinement in the disparity computation, implemented in ComputeDisparity of the main ADCensusStereo class.
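Since ComputeDisparity is not shown in this article, here is only a rough idea of what sub-pixel refinement usually looks like. The sketch assumes the common three-point parabola fit through the costs at $d-1$, $d$ and $d+1$; `SubPixelDisparity` is a hypothetical name, and the project's actual implementation may differ.

```cpp
#include <cassert>

// c_prev = C(d-1), c_best = C(d), c_next = C(d+1), where d is the integer
// disparity with the lowest cost. Returns the minimum of the fitted parabola.
float SubPixelDisparity(int d, float c_prev, float c_best, float c_next)
{
    assert(c_best <= c_prev && c_best <= c_next);
    const float denom = c_prev + c_next - 2.0f * c_best;
    if (denom <= 0.0f) {
        // Flat cost curve: no meaningful refinement, keep the integer value.
        return static_cast<float>(d);
    }
    return d + (c_prev - c_next) / (2.0f * denom);
}
```

When the two neighboring costs are equal the result stays at $d$; when the cost at $d+1$ is lower than at $d-1$, the result moves toward $d+1$ by at most half a pixel.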

Finally, the Refine member function runs the four sub-steps in sequence to complete the multi-step disparity optimization. The code is as follows:

void MultiStepRefiner::Refine()

{

if (width_ <= 0 || height_ <= 0 ||

disp_left_ == nullptr || disp_right_ == nullptr ||

cost_ == nullptr || cross_arms_ == nullptr) {

return;

}

// step1: outlier detection

if (do_lr_check_) {

OutlierDetection();

}

// step2: iterative region voting

if (do_region_voting_) {

IterativeRegionVoting();

}

// step3: proper interpolation

if (do_interpolating_) {

ProperInterpolation();

}

// step4: discontinuities adjustment

if (do_discontinuity_adjustment_) {

DepthDiscontinuityAdjustment();

}

// median filter

adcensus_util::MedianFilter(disp_left_, disp_left_, width_, height_, 3);

}

A median filter follows at the end. It is a routine operation: although it is not one of the four steps above, adding it does no harm.
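For readers who have not written one, a 3x3 median filter can be sketched as below. This is not the project's adcensus_util::MedianFilter; `MedianFilter3x3` is a hypothetical stand-in that works on a row-major float vector and clips the window at the image border (one of several possible border policies).

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// 3x3 median filter over a row-major float image.
// Windows that overlap the border are clipped to the image.
std::vector<float> MedianFilter3x3(const std::vector<float>& in, int width, int height)
{
    assert(width > 0 && height > 0);
    assert(in.size() == static_cast<std::size_t>(width) * static_cast<std::size_t>(height));

    std::vector<float> out(in.size());
    std::vector<float> window;
    window.reserve(9);

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            window.clear();
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int yy = y + dy;
                    const int xx = x + dx;
                    if (yy >= 0 && yy < height && xx >= 0 && xx < width) {
                        window.push_back(in[yy * width + xx]);
                    }
                }
            }
            // Partially sort so the middle element is the median.
            auto mid = window.begin() + window.size() / 2;
            std::nth_element(window.begin(), mid, window.end());
            out[y * width + x] = *mid;
        }
    }
    return out;
}
```

A single outlier in an otherwise constant patch is removed, which is why the filter is a cheap way to clean up isolated disparity spikes left over after the four steps.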

Experiments

On the Cone data, we run four groups of experiments corresponding to the four sub-steps:

- Outlier Detection(1)

- Outlier Detection + Iterative Region Voting(1+2)

- Outlier Detection + Iterative Region Voting + Proper Interpolation(1+2+3)

- Outlier Detection + Iterative Region Voting + Proper Interpolation + Depth Discontinuity Adjustment(1+2+3+4)

First, here again are the results from the previous article, i.e. the output of the scanline optimization step:

[Figure: disparity maps after scanline optimization]

The results of the multi-step disparity optimization are as follows:

[Figure: disparity maps after multi-step disparity optimization]

As you can see, steps 1 and 3 affect the disparity map the most: one removes noise through the consistency check and the other fills in disparities. The other two steps change the disparity map very little; they are icing on the cake, a fine-tuning of the disparities. If efficiency is the priority, these two steps can reasonably be dropped.

That wraps up our AD-Census code practice series. I hope it was helpful.

Download the complete AD-Census source code here: https://github.com/ethan-li-coding/AD-Census

You are welcome to join the discussion in the GitHub project. If you think the code quality is good, please give it a star in the upper right corner. Thanks!

Previous posts:

Code practice series

[Code Practice] [Stereo Matching Series] Classic AD-Census: (1) Framework

[Code Practice] [Stereo Matching Series] Classic AD-Census: (2) Main Class

[Code Practice] [Stereo Matching Series] Classic AD-Census: (3) Cost Computation

[Code Practice] [Stereo Matching Series] Classic AD-Census: (4) Cross-Based Cost Aggregation

[Code Practice] [Stereo Matching Series] Classic AD-Census: (5) Scanline Optimization

About the author:

Ethan Li, Li Yingsong (Zhihu: Li Yingsong)

Ph.D. in Photogrammetry and Remote Sensing, Wuhan University

Main research areas: stereo matching, 3D reconstruction

2019: won the first prize for Scientific and Technological Progress in Surveying and Mapping (provincial and ministerial level)

Loves 3D, loves sharing, loves open source

GitHub: https://github.com/ethan-li-coding (follows and stars welcome)


Feel free to get in touch!

Follow the blog so you don't lose track. Thanks!

Blog home page: https://ethanli.blog.csdn.net

边栏推荐

猜你喜欢

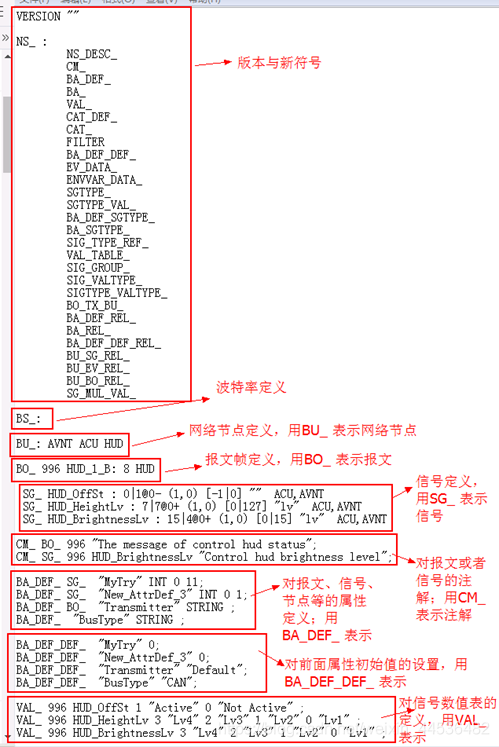

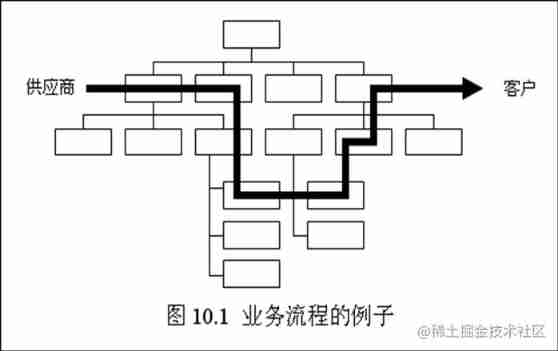

Business modeling of software model | object modeling

It cold knowledge (updating ing~)

C# LINQ源码分析之Count

AUTOSAR从入门到精通100讲(103)-dbc文件的格式以及创建详解

Business modeling | process of software model

Guess riddles (9)

![[daiy4] copy of JZ35 complex linked list](/img/bc/ce90bb3cb6f52605255f1d6d6894b0.png)

[daiy4] copy of JZ35 complex linked list

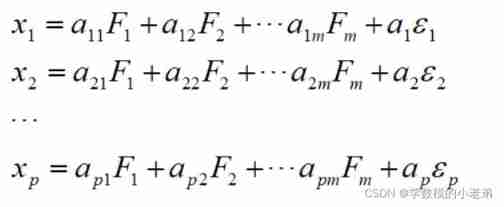

Mathematical modeling: factor analysis

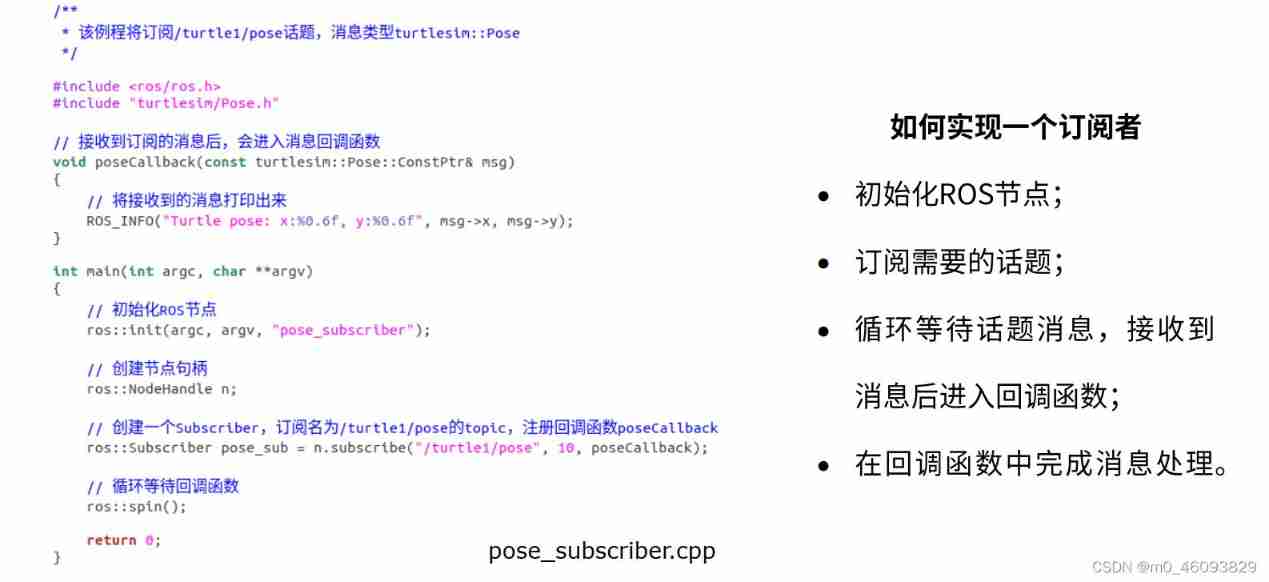

Programming implementation of subscriber node of ROS learning 3 subscriber

Halcon color recognition_ fuses. hdev:classify fuses by color

随机推荐

Search data in geo database

[formation quotidienne - Tencent Selection 50] 557. Inverser le mot III dans la chaîne

Warning: retrying occurs during PIP installation

Business modeling of software model | overview

Return of missing persons

How many checks does kubedm series-01-preflight have

Run菜单解析

Latex improve

Programming implementation of ROS learning 6 -service node

我从技术到产品经理的几点体会

Dynamic dimensions required for input: input, but no shapes were provided. Automatically overriding

MPSoC QSPI flash upgrade method

Chris LATTNER, the father of llvm: why should we rebuild AI infrastructure software

图解八道经典指针笔试题

golang 基础 ——map、数组、切片 存放不同类型的数据

资源变现小程序添加折扣充值和折扣影票插件

ABC#237 C

Illustrated network: what is gateway load balancing protocol GLBP?

ROS learning 1- create workspaces and function packs

Halcon shape_ trans