Faster-ILOD and maskrcnn_benchmark: training on a custom VOC dataset, with a summary of problems

2022-07-02 07:34:00 【chenf0】

I. Training on a self-annotated VOC dataset

I used Faster-ILOD to train on a dataset I annotated myself, which had already been converted to VOC format.

I had previously trained on the standard VOC2007 dataset without problems; for that setup, see my earlier post "Faster-ILOD, maskrcnn_benchmark installation process and problems encountered".

The main files to modify are:

1. maskrcnn_benchmark/config/paths_catalog.py

Change the paths to the directory where your dataset is located:

```python
"voc_2007_train": {
    "data_dir": "/data/taxi_data/VOC2007_all",
    "split": "train"
},
"voc_2007_val": {
    "data_dir": "/data/taxi_data/VOC2007_all",
    "split": "val"
},
"voc_2007_test": {
    "data_dir": "/data/taxi_data/VOC2007_all",
    "split": "test"
},
```
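Before training, it may help to confirm that each data_dir has the VOC layout the dataset loader reads from. A minimal sketch, assuming the standard VOC structure (Annotations/, JPEGImages/, ImageSets/Main/<split>.txt); check_voc_layout is a hypothetical helper, not part of maskrcnn_benchmark:

```python
import os


def check_voc_layout(data_dir, split):
    """Return a list of problems found in a VOC-style dataset directory (hypothetical helper)."""
    problems = []
    # Directories assumed to exist in a standard VOC layout
    for sub in ("Annotations", "JPEGImages", os.path.join("ImageSets", "Main")):
        if not os.path.isdir(os.path.join(data_dir, sub)):
            problems.append("missing directory: " + sub)
    split_file = os.path.join(data_dir, "ImageSets", "Main", split + ".txt")
    if not os.path.isfile(split_file):
        problems.append("missing split file: " + split + ".txt")
        return problems
    # Every image id listed in the split should have an annotation file
    with open(split_file) as f:
        ids = [line.strip() for line in f if line.strip()]
    for img_id in ids:
        if not os.path.isfile(os.path.join(data_dir, "Annotations", img_id + ".xml")):
            problems.append("missing annotation: " + img_id + ".xml")
    return problems
```

For example, check_voc_layout("/data/taxi_data/VOC2007_all", "train") should return an empty list when the split file and all of its annotations are in place.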

2. maskrcnn_benchmark/data/voc.py

Change CLASSES to the categories in your dataset. I mainly detect taxis and private cars:

```python
# CLASSES = ("__background__ ", "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow",
#            "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor")
CLASSES = ("__background__ ", "Taxi", "Other Car")
```

3. configs/e2e_faster_rcnn_R_50_C4_1x.yaml

Change the number of classes. I trained both classes in a single step (no old classes), so NUM_CLASSES is 3: the two object classes plus the background.

```yaml
NUM_CLASSES: 3 # total classes, including background
NAME_OLD_CLASSES: []
#NAME_NEW_CLASSES: ["aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog",
#  "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor"]
# NAME_EXCLUDED_CLASSES: [ ]
NAME_NEW_CLASSES: ["Taxi", "Other Car"]
NAME_EXCLUDED_CLASSES: []
```
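Because the class names must match between voc.py and the YAML file, a quick consistency check can catch typos such as "OtherCar" versus "Other Car". A hedged sketch for the single-step setup used here, assuming NUM_CLASSES counts the background and that every foreground class should appear in one of the NAME_* lists; check_class_config is hypothetical:

```python
def check_class_config(voc_classes, num_classes, old, new, excluded):
    """Sanity-check class settings for a single-step run (hypothetical helper)."""
    errors = []
    foreground = list(voc_classes[1:])  # drop the background entry
    # Assumption: NUM_CLASSES = foreground classes + background
    if num_classes != len(voc_classes):
        errors.append("NUM_CLASSES=%d but voc.py lists %d classes (incl. background)"
                      % (num_classes, len(voc_classes)))
    listed = list(old) + list(new) + list(excluded)
    # Names in the YAML must match CLASSES exactly (case and spaces included)
    for name in listed:
        if name not in foreground:
            errors.append("unknown class name in config: %r" % name)
    for name in foreground:
        if name not in listed:
            errors.append("class from voc.py not listed in config: %r" % name)
    return errors
```

With the values above, check_class_config(CLASSES, 3, [], ["Taxi", "Other Car"], []) returns an empty list.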

4. Run training

```shell
python tools/train_first_step.py --config-file="./configs/e2e_faster_rcnn_R_50_C4_1x.yaml"
```

II. Problems encountered

1. Missing per-class files such as Taxi_train.txt

Because Faster-ILOD does incremental learning, it processes the data class by class. The official VOC download already contains per-class txt files for train, test and so on, but converting a self-annotated dataset to VOC format only produces train.txt, test.txt, trainval.txt and val.txt. The per-class txt files are missing, so they have to be generated.

Looking at these txt files, each line has two columns: the image name and a single number, which is 1, -1 or 0.

-1 means the class does not appear in the image.

1 means the class appears in the image.

0 marks a difficult sample (the class appears only as objects marked difficult).

(This is my understanding; corrections are welcome.)
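The two-column format can be read with a small parser. A sketch, assuming one image id and one label per line; parse_class_line and LABEL_MEANING are hypothetical names, and the meanings follow the list above. Calling split() with no argument treats any run of spaces as a single separator, so the column spacing does not matter:

```python
# Meanings of the second column, as described above
LABEL_MEANING = {-1: "absent", 1: "present", 0: "difficult only"}


def parse_class_line(line):
    """Split one line of a per-class file into (image_id, label)."""
    img_id, label = line.split()  # any whitespace run separates the columns
    return img_id, int(label)
```

For example, parse_class_line("000007  1") returns ("000007", 1).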

Using the xml annotations together with train.txt, test.txt and val.txt, I generated the per-class files with the code below.

When generating the files I only wrote the names of images that contain the class. Where this caused errors while reading the data, I changed the reading code (see the problems below); alternatively, you can change the generation code so the files match the format of the original dataset.

```python
import os

dir_path = "D:/achenf/data/jiaotong_data/1taxi_train_data/VOC/VOC2007/"
# Split to process: train / test / val
split = "train"
# Categories contained in the dataset
classes = ['Taxi', 'Other Car']
# Category to generate the file for: change to 'Taxi' for the other file
target_class = 'Other Car'

# Output file, e.g. by_classes/Other Car_train.txt
os.makedirs(dir_path + "by_classes/", exist_ok=True)
txt_path = dir_path + "by_classes/" + target_class + "_" + split + ".txt"

with open(dir_path + "ImageSets/Main/" + split + ".txt") as f, open(txt_path, 'w') as fp_w:
    for line in f:
        n = line.strip()
        if not n:
            continue
        xmlpath = dir_path + "Annotations/" + n + '.xml'
        with open(xmlpath) as fp:
            # Strip indentation so tabs vs. spaces in the xml do not matter
            xmllines = [row.strip() for row in fp]
        # Line indices where each <object> block starts and ends
        ind_start = [i for i, row in enumerate(xmllines) if row == "<object>"]
        ind_end = [i for i, row in enumerate(xmllines) if row == "</object>"]
        # Keep the object blocks whose <name> (the line after <object>) is a known class
        blocks = []
        for s, e in zip(ind_start, ind_end):
            if any(c in xmllines[s + 1] for c in classes):
                blocks.append(xmllines[s:e + 1])
        # Write the image name if any block belongs to the target class
        if any("<name>" + target_class + "</name>" in b for b in blocks):
            fp_w.write(n + " " + "1" + "\n")
```

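Running this once per class and split produces files such as by_classes/Other Car_train.txt, each listing the images that contain the class.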
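As an alternative that stays closer to the original dataset, the files can list every image with 1, -1 or 0, the same convention as the official per-class files. A sketch using xml.etree.ElementTree, assuming each <object> has a <name> child and an optional <difficult> child; class_label and write_class_files are hypothetical helpers, and out_dir would be the by_classes folder used above:

```python
import os
import xml.etree.ElementTree as ET


def class_label(xml_path, class_name):
    """1 if class_name has a non-difficult object, 0 if only difficult ones, -1 if absent."""
    root = ET.parse(xml_path).getroot()
    found = False
    for obj in root.iter("object"):
        if obj.findtext("name", "").strip() != class_name:
            continue
        found = True
        # A missing <difficult> tag is treated as "not difficult"
        if obj.findtext("difficult", "0").strip() != "1":
            return 1
    return 0 if found else -1


def write_class_files(voc_dir, classes, split, out_dir):
    """Write <class>_<split>.txt for every class, covering every image in the split."""
    with open(os.path.join(voc_dir, "ImageSets", "Main", split + ".txt")) as f:
        ids = [line.strip() for line in f if line.strip()]
    os.makedirs(out_dir, exist_ok=True)
    for cls in classes:
        with open(os.path.join(out_dir, "%s_%s.txt" % (cls, split)), "w") as out:
            for img_id in ids:
                label = class_label(os.path.join(voc_dir, "Annotations", img_id + ".xml"), cls)
                # "%2d" pads 1 and 0 to width 2, giving the two-space layout of the official files
                out.write("%s %2d\n" % (img_id, label))
```

Because the padding matches the official files, the loading loop shown under problem 2 should in principle not need to be modified.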

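2. IndexError (list index out of range) at x[2] == '0'

When each line is split on a single space, a line with the value 1 in the original dataset's txt file becomes ['000000', '', '1'], because the official files pad positive entries with two spaces so that they align with -1 entries. A line in the file I generated becomes ['000000', '1'], so x[2] does not exist. Since my data has no difficult samples, I simply commented out those lines: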

```python
for i in range(len(buff)):
    x = buff[i]
    x = x.split(' ')
    if x[1] == '-1':
        pass
    # elif x[2] == '0':  # include difficult level object
    #     if self.is_train:
    #         pass
    #     else:
    #         img_ids_per_category.append(x[0])
    #         self.ids.append(x[0])
    else:
        img_ids_per_category.append(x[0])
        self.ids.append(x[0])
```
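Commenting out the branch works here because this dataset has no difficult samples. An alternative that keeps the difficult handling is to split on any whitespace, so the official two-space padding and the single space in my generated files parse alike. Simply changing split(' ') to split() in the loop above is not enough, since x[2] would then never exist, so the checks have to be restructured. A sketch; select_image_ids is a hypothetical stand-alone version of that loop that returns the ids instead of appending to self.ids:

```python
def select_image_ids(lines, is_train):
    """Collect image ids from a per-class file, mirroring the loop above."""
    ids = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        img_id, label = line.split()  # works for "000001  1" and "000001 1"
        if label == '-1':
            continue  # class absent from this image
        if label == '0' and is_train:
            continue  # difficult-only image: skipped during training
        ids.append(img_id)
    return ids
```

With lines = ["000001  1", "000002 -1", "000003 1", "000004  0"], training keeps 000001 and 000003, while evaluation also keeps 000004.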

My xml files also have no difficult tag, so the line difficult = int(obj.find("difficult").text) == 1 fails as well (obj.find returns None, which has no .text). I therefore set difficult to False directly:

```python
for obj in target.iter("object"):
    difficult = False
    # difficult = int(obj.find("difficult").text) == 1
    if not self.keep_difficult and difficult:
        continue
```
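Hard-coding difficult = False ignores the tag even in annotations that do have it. A variant that falls back to False only when the tag is missing or empty could look like this; is_difficult is a hypothetical helper, and the call site would become difficult = is_difficult(obj):

```python
def is_difficult(obj):
    """Read <difficult> if present; a missing or empty tag counts as not difficult."""
    text = obj.findtext("difficult")  # None when the tag does not exist
    return text is not None and text.strip() == "1"
```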

3. ValueError: invalid literal for int() with base 10: '0.0'

The error is raised on the line below. The box coordinates in my xml files are strings such as '0.0', and int() cannot parse a string containing a decimal point, so I first convert to float and then to int:

```python
# before
bndbox = tuple(map(lambda x: x - TO_REMOVE, list(map(int, box))))
# after
bndbox = tuple(map(lambda x: x - TO_REMOVE, list(map(int, map(float, box)))))
```

That fixed it.

Something new I learned: map() can convert data types in bulk.

See: "Python3: batch-convert data types with map(), e.g. str to float"
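A small stand-alone check of the fix, with made-up coordinate strings. TO_REMOVE is assumed to be 1, as in maskrcnn_benchmark's VOC loader, which subtracts it to make pixel indexes 0-based; parse_bndbox is a hypothetical wrapper around the fixed line:

```python
TO_REMOVE = 1  # assumed value; subtracted to make pixel indexes 0-based


def parse_bndbox(box):
    """Convert coordinate strings such as '0.0' to 0-based ints."""
    # int('0.0') raises ValueError, so parse as float first, then truncate with int
    return tuple(map(lambda x: x - TO_REMOVE, map(int, map(float, box))))
```

For example, parse_bndbox(["0.0", "12.5", "100", "48.9"]) gives (-1, 11, 99, 47). Note that int() truncates toward zero, so 12.5 becomes 12; round() would be the alternative if the fractional parts matter for your annotations.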

4. PyTorch error: CUDA error: device-side assert triggered

Reference: https://blog.csdn.net/veritasalice/article/details/111917185
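I have not confirmed which cause applied here. One commonly cited cause of this error in detection training is a class index outside the range the classification head was built for (NUM_CLASSES). Running with the environment variable CUDA_LAUNCH_BLOCKING=1 makes the traceback point at the operation that actually failed. As a pre-flight check, a hypothetical helper like find_bad_labels can scan the annotations for object names that are not in CLASSES or whose index is too large, assuming labels are the positions of the names in CLASSES:

```python
import os
import xml.etree.ElementTree as ET


def find_bad_labels(ann_dir, classes, num_classes):
    """List (file, name, problem) for objects whose label would be invalid (hypothetical helper)."""
    # Assumption: a label is the position of the object name in CLASSES
    class_to_ind = {c.strip(): i for i, c in enumerate(classes)}
    bad = []
    for fname in sorted(os.listdir(ann_dir)):
        if not fname.endswith(".xml"):
            continue
        root = ET.parse(os.path.join(ann_dir, fname)).getroot()
        for obj in root.iter("object"):
            name = obj.findtext("name", "").strip()
            if name not in class_to_ind:
                bad.append((fname, name, "not in CLASSES"))
            elif class_to_ind[name] >= num_classes:
                bad.append((fname, name, "index >= NUM_CLASSES"))
    return bad
```

An empty result for the Annotations folder means every object name maps to a valid index.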

边栏推荐

- RMAN incremental recovery example (1) - without unbacked archive logs

- Oracle 11.2.0.3 handles the problem of continuous growth of sysaux table space without downtime

- 【信息检索导论】第一章 布尔检索

- PointNet原理证明与理解

- 解决万恶的open failed: ENOENT (No such file or directory)/(Operation not permitted)

- Alpha Beta Pruning in Adversarial Search

- 【信息检索导论】第六章 词项权重及向量空间模型

- Oracle 11g sysaux table space full processing and the difference between move and shrink

- 华为机试题-20190417

- Play online games with mame32k

猜你喜欢

生成模型与判别模型的区别与理解

Oracle 11g uses ords+pljson to implement JSON_ Table effect

![[introduction to information retrieval] Chapter 1 Boolean retrieval](/img/78/df4bcefd3307d7cdd25a9ee345f244.png)

[introduction to information retrieval] Chapter 1 Boolean retrieval

深度学习分类优化实战

SSM student achievement information management system

中年人的认知科普

Faster-ILOD、maskrcnn_benchmark训练coco数据集及问题汇总

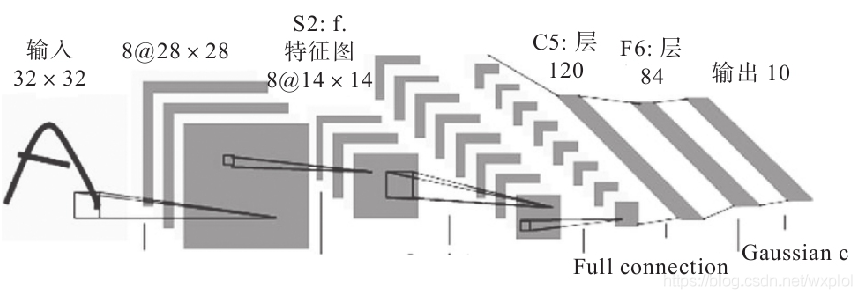

常见CNN网络创新点

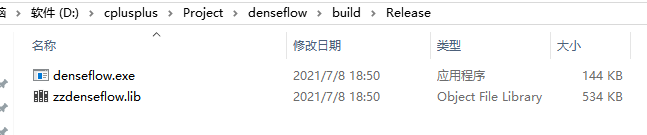

win10+vs2017+denseflow编译

![[introduction to information retrieval] Chapter II vocabulary dictionary and inverted record table](/img/3f/09f040baf11ccab82f0fc7cf1e1d20.png)

[introduction to information retrieval] Chapter II vocabulary dictionary and inverted record table

随机推荐

SSM second hand trading website

Faster-ILOD、maskrcnn_benchmark训练coco数据集及问题汇总

Oracle 11g sysaux table space full processing and the difference between move and shrink

Calculate the total in the tree structure data in PHP

Conversion of numerical amount into capital figures in PHP

MySQL组合索引加不加ID

【Torch】解决tensor参数有梯度,weight不更新的若干思路

矩阵的Jordan分解实例

点云数据理解(PointNet实现第3步)

Faster-ILOD、maskrcnn_benchmark训练自己的voc数据集及问题汇总

[torch] some ideas to solve the problem that the tensor parameters have gradients and the weight is not updated

ssm超市订单管理系统

SSM二手交易网站

ssm垃圾分类管理系统

自然辩证辨析题整理

Oracle 11.2.0.3 handles the problem of continuous growth of sysaux table space without downtime

Error in running test pyspark in idea2020

MySQL has no collation factor of order by

Conda 创建,复制,分享虚拟环境

Point cloud data understanding (step 3 of pointnet Implementation)