[AI practice] Building an air quality prediction model with xgboost XGBRegressor (I)

2022-07-03 03:15:00 【szZack】

1、xgboost.XGBRegressor in detail

The full parameter reference for xgboost.XGBRegressor is available at https://xgboost.readthedocs.io/en/latest/python/python_api.html?highlight=XGBRegressor#xgboost.XGBRegressor

The XGBRegressor class:

class xgboost.XGBRegressor(*, objective='reg:squarederror', **kwargs)

Core parameters include:

n_estimators (int) – Number of gradient boosted trees. Equivalent to number of boosting rounds.

max_depth (Optional[int]) – Maximum tree depth for base learners.

learning_rate (Optional[float]) – Boosting learning rate (xgb’s “eta”)

verbosity (Optional[int]) – The degree of verbosity. Valid values are 0 (silent) - 3 (debug).

tree_method (Optional[str]) – Specify which tree method to use. Defaults to auto. If this parameter is set to default, XGBoost will choose the most conservative option available. It’s recommended to study this option in the tree method section of the parameters documentation.

n_jobs (Optional[int]) – Number of parallel threads used to run xgboost. When used with other Scikit-Learn algorithms like grid search, you may choose which algorithm to parallelize and balance the threads. Creating thread contention will significantly slow down both algorithms.

gamma (Optional[float]) – (min_split_loss) Minimum loss reduction required to make a further partition on a leaf node of the tree.

min_child_weight (Optional[float]) – Minimum sum of instance weight(hessian) needed in a child.

subsample (Optional[float]) – Subsample ratio of the training instance.

colsample_bytree (Optional[float]) – Subsample ratio of columns when constructing each tree.

scale_pos_weight (Optional[float]) – Balancing of positive and negative weights.

2、Building an air quality prediction model with xgboost.XGBRegressor

2.1 Dependencies

import os
import sys
import time
import math
import warnings

import numpy as np
import pandas as pd
import joblib
import xgboost as xgb
from sklearn.model_selection import train_test_split
from sklearn.model_selection import KFold  # k-fold cross-validation
from sklearn.model_selection import GridSearchCV  # grid search
from sklearn.metrics import make_scorer
from sklearn.metrics import r2_score
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.multioutput import MultiOutputRegressor
from sklearn.preprocessing import MinMaxScaler

warnings.filterwarnings("ignore", category=FutureWarning, module="sklearn", lineno=193)

2.2 Build an air quality prediction model

Model

xgboost.XGBRegressor serves as the base model. It is wrapped in MultiOutputRegressor so that the model predicts multiple time steps at once (multi-target regression). The core code of the model is as follows:

def fit_model(self, x, y, learning_rate=0.05,
              n_estimators=500,
              max_depth=7,
              min_child_weight=1,
              gamma=0.0,
              subsample=0.8,
              colsample_bytree=0.8,
              scale_pos_weight=0.8):
    model = xgb.XGBRegressor(learning_rate=learning_rate,
                             n_estimators=n_estimators,
                             max_depth=max_depth,
                             min_child_weight=min_child_weight,
                             gamma=gamma,
                             subsample=subsample,
                             colsample_bytree=colsample_bytree,
                             scale_pos_weight=scale_pos_weight,
                             seed=42,
                             tree_method='gpu_hist',
                             gpu_id=2)
    multioutput = MultiOutputRegressor(model).fit(x, y)
    return multioutput

- Input x

shape is (N, W, 24)

where N is the number of days of data, W is the feature dimension, and 24 is the number of hours of input data

- Output y

shape is (N, 24)

where N is the number of days of data and 24 is the number of hours of output data
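The article does not show how these arrays are built, so the following is only a sketch of one way to produce the shapes described above. The function name `make_windows`, its parameters, and the one-day stride (`step=24`) are hypothetical. It also flattens x to two dimensions, which scikit-learn estimators such as MultiOutputRegressor expect for their input.

```python
import numpy as np

def make_windows(features, target, n_input=24, n_output=24, step=24):
    """Slice hourly series into (x, y) pairs (hypothetical helper).

    features: array of shape (T, W), one row per hour
    target:   array of shape (T,), the factor to forecast
    Returns x of shape (N, W, n_input) and y of shape (N, n_output).
    """
    xs, ys = [], []
    last_start = len(features) - n_input - n_output
    for start in range(0, last_start + 1, step):
        xs.append(features[start:start + n_input].T)  # (W, n_input)
        ys.append(target[start + n_input:start + n_input + n_output])
    return np.asarray(xs), np.asarray(ys)

# 10 days of hourly data with W = 3 features (synthetic, for illustration).
T, W = 240, 3
features = np.arange(T * W, dtype=float).reshape(T, W)
target = features[:, 0]

x, y = make_windows(features, target)
x_flat = x.reshape(len(x), -1)  # (N, W * n_input), 2-D input for scikit-learn
print(x.shape, y.shape, x_flat.shape)
```

With 240 hours of data and a 24-hour stride, this yields 9 samples, since each sample needs 24 input hours followed by 24 output hours.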

2.3 Core code

# Air quality model based on XGBRegressor
class AQXGB():
    def __init__(self, factor, n_input, n_output, version):
        self.n_input = n_input
        self.n_output = n_output
        self.version = version
        self.factor = factor  # air quality factor to forecast, e.g. O3
        if not os.path.exists('./ml_data/'):  # directory for saved machine learning artifacts
            os.mkdir('./ml_data/')

    def train(self, train_data_path, test_data_path):
        x, y = self.load_data(self.version, 'train', train_data_path, self.n_input, self.n_output)
        train_x, test_x, train_y, test_y = train_test_split(x, y, test_size=0.2, random_state=2022)
        model = self.fit_model(train_x, train_y)
        pre_y = model.predict(test_x)
        # Compute the coefficient of determination (R^2) on the held-out split
        r2 = self.performance_metric(test_y, pre_y)
        print('test_r2 = ', r2)
        # Evaluate again on the separate test file
        x, y = self.load_data(self.version, 'test', test_data_path, self.n_input, self.n_output)
        pre_y = model.predict(x)
        r2 = self.performance_metric(y, pre_y)
        print('val_r2 = ', r2)
        # Save the model
        joblib.dump(model, './ml_data/xgb_%s_%d_%d_%s.model' % (self.factor, self.n_input, self.n_output, self.version))

    def performance_metric(self, y_true, y_predict):
        # Select the evaluation function as needed
        # R^2
        score = r2_score(y_true, y_predict)
        # RMSE (square root of the mean squared error)
        MSE = np.mean((y_predict - y_true) ** 2)
        print('RMSE: ', MSE ** 0.5)
        # MAE
        MAE = np.mean(np.abs(y_predict - y_true))
        print('MAE: ', MAE)
        # SMAPE
        SMAPE = self.smape(y_true, y_predict)
        print('SMAPE: ', SMAPE)
        return score

    def smape(self, A, F):
        # A: actual values, F: forecast values
        A = A.reshape(-1)
        F = F.reshape(-1)
        return 1.0 / len(A) * np.sum(2 * np.abs(F - A) / (np.abs(A) + np.abs(F)))

    def fit_model(self, x, y, learning_rate=0.05,
                  n_estimators=500,
                  max_depth=7,
                  min_child_weight=1,
                  gamma=0.0,
                  subsample=0.8,
                  colsample_bytree=0.8,
                  scale_pos_weight=0.8):
        model = xgb.XGBRegressor(learning_rate=learning_rate,
                                 n_estimators=n_estimators,
                                 max_depth=max_depth,
                                 min_child_weight=min_child_weight,
                                 gamma=gamma,
                                 subsample=subsample,
                                 colsample_bytree=colsample_bytree,
                                 scale_pos_weight=scale_pos_weight,
                                 seed=42,
                                 tree_method='gpu_hist',
                                 gpu_id=2)
        multioutput = MultiOutputRegressor(model).fit(x, y)
        return multioutput
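To make the three error metrics in performance_metric concrete, here is a small worked example on two hand-picked values. The function `smape_safe` and its `eps` term are assumptions added for illustration: the SMAPE formula in the class divides by |A| + |F|, which is zero when both the actual and the forecast value are zero, so a tiny constant is added to the denominator here.

```python
import numpy as np

def smape_safe(y_true, y_pred, eps=1e-8):
    """SMAPE as in the class above, plus a small eps (an assumption) in the
    denominator so that pairs where both values are 0 do not produce NaN."""
    a = np.asarray(y_true, dtype=float).reshape(-1)
    f = np.asarray(y_pred, dtype=float).reshape(-1)
    return float(np.mean(2.0 * np.abs(f - a) / (np.abs(a) + np.abs(f) + eps)))

y_true = np.array([[100.0, 200.0]])
y_pred = np.array([[110.0, 180.0]])

rmse = float(np.sqrt(np.mean((y_pred - y_true) ** 2)))  # sqrt(250), about 15.81
mae = float(np.mean(np.abs(y_pred - y_true)))           # 15.0
smape = smape_safe(y_true, y_pred)                      # about 0.1003
print(rmse, mae, smape)
print(smape_safe(np.zeros(2), np.zeros(2)))             # 0.0 instead of NaN
```

The errors are 10 and -20, so the mean squared error is (100 + 400) / 2 = 250 and the mean absolute error is (10 + 20) / 2 = 15.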

2.4 Model training

Training code

if __name__ == "__main__":
    if len(sys.argv) == 7:
        # Train the model
        # python3 src/train_xgb_model.py data/train_data.csv data/test_data.csv O3 24 24 v2
        aq_model = AQXGB(sys.argv[3], int(sys.argv[4]), int(sys.argv[5]), sys.argv[6])
        aq_model.train(sys.argv[1], sys.argv[2])

Training script

The input is the feature data for the past 24 hours, and the output is the O3 forecast for the next 24 hours:

python3 src/train_xgb_model.py data/train_data.csv data/test_data.csv O3 24 24 v2
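The six positional arguments read from sys.argv above could also be declared with argparse, which adds type conversion and a usage message. This is an optional sketch, not the article's code; the function name `parse_args` and the help texts are assumptions.

```python
import argparse

def parse_args(argv=None):
    # Same six positional arguments as the sys.argv version, in the same order.
    parser = argparse.ArgumentParser(description="Train the XGBoost air quality model")
    parser.add_argument("train_data_path")
    parser.add_argument("test_data_path")
    parser.add_argument("factor", help="air quality factor to forecast, e.g. O3")
    parser.add_argument("n_input", type=int, help="hours of history used as input")
    parser.add_argument("n_output", type=int, help="hours to forecast")
    parser.add_argument("version", help="data/model version tag, e.g. v2")
    return parser.parse_args(argv)

args = parse_args(["data/train_data.csv", "data/test_data.csv", "O3", "24", "24", "v2"])
print(args.factor, args.n_input, args.n_output, args.version)
```

Passing `argv=None` makes argparse read the real command line, so the same function works both in the script and in tests.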

2.5 Data format

- Data format

csv file

- Example

air_pressure,CO,humidity,AQI,monitoring_time,NO2,O3,PM10,PM25,SO2,station_number,air_temperature,wind_direction,wind_speed,longitude,latitude,station_type_name
1013.0,0.3,59.0,69.0,2019-02-01 00:00:00,15.0,80.0,88.0,26.0,8.0,xxx monitoring station,-0.4,205.8,1.1,116.97810856433719,36.61655020673796,shik
1013.0,0.3,58.0,68.0,2019-02-01 01:00:00,15.0,80.0,86.0,26.0,8.0,xxx monitoring station,-0.5,179.4,1.0,116.97810856433719,36.61655020673796,shik
1012.0,0.3,62.0,72.0,2019-02-01 02:00:00,15.0,80.0,94.0,26.0,8.0,xxx monitoring station,-0.9,175.7,0.8,116.97810856433719,36.61655020673796,shik
1011.0,0.3,64.0,76.0,2019-02-01 03:00:00,15.0,80.0,102.0,26.0,8.0,xxx monitoring station,-1.0,166.9,0.9,116.97810856433719,36.61655020673796,shik
1011.0,0.3,65.0,80.0,2019-02-01 04:00:00,15.0,80.0,110.0,26.0,8.0,xxx monitoring station,-0.8,191.1,0.9,116.97810856433719,36.61655020673796,shik
1011.0,0.3,66.0,84.0,2019-02-01 05:00:00,15.0,80.0,117.0,26.0,8.0,xxx monitoring station,-1.1,211.4,1.0,116.97810856433719,36.61655020673796,shik
1011.0,0.3,68.0,85.0,2019-02-01 06:00:00,15.0,80.0,119.0,26.0,8.0,xxx monitoring station,-1.4,137.3,1.3,116.97810856433719,36.61655020673796,shik
1011.0,0.3,68.0,65.75,2019-02-01 07:00:00,15.0,80.0,130.6,26.0,8.0,xxx monitoring station,-1.3,147.0,1.5,116.97810856433719,36.61655020673796,shik
1011.0,0.3,58.0,46.5,2019-02-01 08:00:00,15.0,80.0,142.2,26.0,8.0,xxx monitoring station,0.7,157.0,1.4,116.97810856433719,36.61655020673796,shik
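A file in this format can be read with pandas. The sketch below parses two rows in the format shown above from an in-memory string and extracts a numeric feature matrix and the O3 target. Which columns serve as model features is an assumption here, since the article does not list them, and the station name is shortened to `xxx`.

```python
import io
import pandas as pd

# Two rows in the example format above, with the station name shortened.
csv_text = """air_pressure,CO,humidity,AQI,monitoring_time,NO2,O3,PM10,PM25,SO2,station_number,air_temperature,wind_direction,wind_speed,longitude,latitude,station_type_name
1013.0,0.3,59.0,69.0,2019-02-01 00:00:00,15.0,80.0,88.0,26.0,8.0,xxx,-0.4,205.8,1.1,116.97810856433719,36.61655020673796,shik
1013.0,0.3,58.0,68.0,2019-02-01 01:00:00,15.0,80.0,86.0,26.0,8.0,xxx,-0.5,179.4,1.0,116.97810856433719,36.61655020673796,shik
"""

df = pd.read_csv(io.StringIO(csv_text), parse_dates=["monitoring_time"])
df = df.sort_values("monitoring_time").reset_index(drop=True)

# The feature selection below is an assumption, for illustration only.
feature_cols = ["air_pressure", "CO", "humidity", "NO2", "O3", "PM10", "PM25",
                "SO2", "air_temperature", "wind_direction", "wind_speed"]
features = df[feature_cols].to_numpy(dtype=float)  # shape (T, W)
target = df["O3"].to_numpy(dtype=float)            # shape (T,)
print(features.shape, target.shape)
```

For a real file, `io.StringIO(csv_text)` would be replaced by the path, for example `data/train_data.csv` from the training command.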

3、Other references

[AI practice] Accelerating XGBRegressor training: training XGBRegressor in seconds on a GPU

[AI practice] Parameter tuning for xgb.XGBRegressor multi-target regression with MultiOutputRegressor (1)

边栏推荐

- Idea set method call ignore case

- com. fasterxml. jackson. databind. Exc.invalidformatexception problem

- MySQL practice 45 [SQL query and update execution process]

- Reset or clear NET MemoryStream - Reset or Clear . NET MemoryStream

- 二维数组中的元素求其存储地址

- What happens between entering the URL and displaying the page?

- Three. JS local environment setup

- Docker install MySQL

- open file in 'w' mode: IOError: [Errno 2] No such file or directory

- MySQL practice 45 lecture [row lock]

猜你喜欢

随机推荐

labelimg生成的xml文件转换为voc格式

LVGL使用心得

为什么线程崩溃不会导致 JVM 崩溃

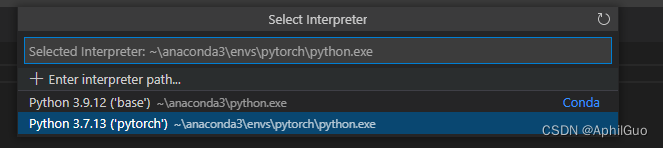

Vs Code configure virtual environment

ComponentScan和ComponentScans的区别

idea 加载不了应用市场解决办法(亲测)

Limit of one question per day

docker安装redis

从输入URL到页面展示这中间发生了什么?

VS 2019 配置tensorRT生成engine

[error record] the parameter 'can't have a value of' null 'because of its type, but the im

The process of connecting MySQL with docker

Use of check boxes: select all, deselect all, and select some

[C language] MD5 encryption for account password

模糊查询时报错Parameter index out of range (1 > number of parameters, which is 0)

Converts a timestamp to a time in the specified format

TCP handshake three times and wave four times. Why does TCP need handshake three times and wave four times? TCP connection establishes a failure processing mechanism

How do you adjust the scope of activerecord Association in rails 3- How do you scope ActiveRecord associations in Rails 3?

[pyg] understand the messagepassing process, GCN demo details

Yolov5 project based on QT