当前位置:网站首页>Principle and steps of principal component analysis (PCA)

Principle and steps of principal component analysis (PCA)

2022-06-13 02:30:00 【Eight dental shoes】

PCA Background

In many fields of data analysis and processing , There are often many complex variables , There is usually a correlation between variables , It is extremely difficult to extract information that can reflect the characteristics of things from a large number of variables , The analysis of single variables is not comprehensive , And lose information , Wrong conclusion . Principal component analysis (PCA) Is to reduce the dimension through mathematics , Find out the data analysis method that can most determine the principal component of data characteristics , Use fewer comprehensive indicators , Reveal the simple structure hidden behind multi-dimensional complex data variables , Get more scientific and effective data information .

PCA Dimension reduction

PCA The main idea of dimensionality reduction is to reduce n Dimension features map to k D on , this k Dimension is a new orthogonal feature, also known as the main component , It's in the original n Based on the characteristics of dimension, we reconstruct k Whitman's sign .

For the selection of principal component coordinate axis , There are two requirements : First, they should be orthogonal to each other , That is, the basis vector is linearly independent ; The second is to choose the direction with the largest variance , So that the data can be scattered on the coordinate axis , Easy to observe . The first axis is the direction with the largest variance in the original data , The second coordinate axis is selected to maximize the variance in the plane orthogonal to the first coordinate axis , The third axis is the same as 1,2 The one with the largest variance in the plane with orthogonal axes . In this way, we can get n One of these axes . We choose from these axes k Coordinate axis with maximum information , The dimension reduction processing is realized .

Specific implementation method

1. Covariance matrix

For a set of data we get , The sample mean can be calculated

Sample variance :

Covariance between two samples :

When the covariance is zero 0 when , explain X and Y Variables are linearly independent .

A matrix consisting of column vectors of all samples X, according to  The covariance matrix between two data can be obtained :

The covariance matrix between two data can be obtained :

From the knowledge of matrix , The covariance matrix is symmetric , The diagonal is the variance of the sample , The other is the covariance between two variables . We can use the diagonalization of the symmetric matrix to convert the covariance into 0, Realize linear independence between variables , And at this time, the largest... Is selected on the diagonal K One variance is enough .

2. Eigenvalues and eigenvectors

The eigenvalues and eigenvectors are calculated according to the covariance matrix , From linear algebra we know , If a vector v It's a matrix A Eigenvector of , It must be expressed in the following form :

Thus make use of |λE - A| = 0 The eigenvalue of the matrix can be solved , An eigenvalue corresponds to a set of eigenvectors , The eigenvectors are orthogonal to each other . Arrange the eigenvectors corresponding to their eigenvalues from large to small , Choose the biggest k Vector unitization , It can be used as PCA Required for transformation k Base vectors , And the variance is the largest .

It can be proved mathematically , The eigenvalue λ That is, the variance of the data after dimensionality reduction , The concrete proof can refer to the optimization of Lagrange multiplier method , I won't go into details here .

3. Basis vector transformation

X It's a mxn Matrix , Each column vector represents the data at a sampling point .Y Represents the new dataset representation after conversion . P Is a linear transformation between them , That is, to calculate the inner product of a vector . Can be expressed as :

Y=PX

P It is the transformation matrix composed of base vectors , After linear transformation ,X The coordinates of the new base vector are converted to the coordinates in the space determined by the new base vector Y, Geometrically ,Y yes X Projection in new space .

After the base vector transformation , That is, the dimensionality reduction of data is completed .

The example analysis

Take such a set of two-dimensional data as an example

We have to reduce it to one dimension , The mean value of two sets of row vectors is zero , Therefore, zero mean centring is not required .

Find the covariance matrix :

Then the eigenvalues and eigenvectors are calculated according to the obtained covariance matrix , The eigenvalue obtained from the solution is :

The corresponding eigenvector is :

The eigenvector is unitized to obtain :

So the base vector transformation matrix is :

The use of P And matrix X Do the vector inner product , The reduced dimension matrix is obtained Y:

N Dimension data matrix can also be deduced in this way , Get in k After the reduced dimension vector , Usually take 2-3 Dimension is the principal component coordinate , It can reflect most of the characteristic information .

Matlab The code is as follows :

clear;

clc;

k = 1;

A = [-1 -1 0 2 0;

-2 0 0 1 1 ]’

[rows cols] = size(A)

covA = cov(A) % Find the covariance matrix of the sample

[vec val] = eigs(covA) % The eigenvalues of the covariance matrix D And eigenvectors V

meanA = mean(A) % Sample mean mean

SCORE2 = (A - tempA)*vec % drop to 1 The principal components obtained after dimension

pcaData2 = SCORE2(:,1:k) % Take the first set of data

figure;

scatter(pcaData2(:,1));

边栏推荐

- Paper reading - jukebox: a generic model for music

- Impossible d'afficher le contenu de la base de données après que l'idée a utilisé le pool de connexion c3p0 pour se connecter à la base de données SQL

- Barrykay electronics rushes to the scientific innovation board: it is planned to raise 360million yuan. Mr. and Mrs. Wang Binhua are the major shareholders

- [reading papers] deep learning face representation from predicting 10000 classes. deepID

- Armv8-m learning notes - getting started

- Several articles on norms

- [dest0g3 520 orientation] dest0g3, which has been a long time since we got WP_ heap

- Understand CRF

- Huawei equipment configures private IP routing FRR

- Think: when do I need to disable mmu/i-cache/d-cache?

猜你喜欢

After idea uses c3p0 connection pool to connect to SQL database, database content cannot be displayed

04 route jump and carry parameters

Thinking back from the eight queens' question

Configuring virtual private network FRR for Huawei equipment

Mbedtls migration experience

Queuing theory, game theory, analytic hierarchy process

Paipai loan parent company Xinye quarterly report diagram: revenue of RMB 2.4 billion, net profit of RMB 530million, a year-on-year decrease of 10%

Laravel permission export

Solution of depth learning for 3D anisotropic images

Impossible d'afficher le contenu de la base de données après que l'idée a utilisé le pool de connexion c3p0 pour se connecter à la base de données SQL

随机推荐

About the fact that I gave up the course of "Guyue private room course ROS manipulator development from introduction to actual combat" halfway

04 route jump and carry parameters

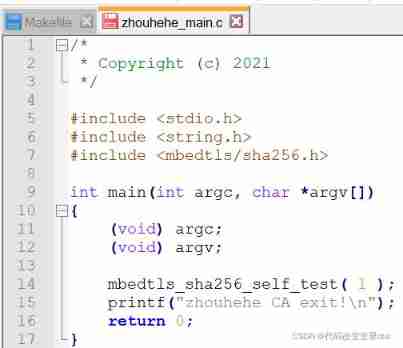

Mbedtls migration experience

Paper reading - beat tracking by dynamic programming

Armv8-m (Cortex-M) TrustZone summary and introduction

Automatic differential reference

Share three stories about CMDB

OpenCVSharpSample05Wpf

1、 Set up Django automation platform (realize one click SQL execution)

[keras learning]fit_ Generator analysis and complete examples

05 tabbar navigation bar function

ROS learning-8 pit for custom action programming

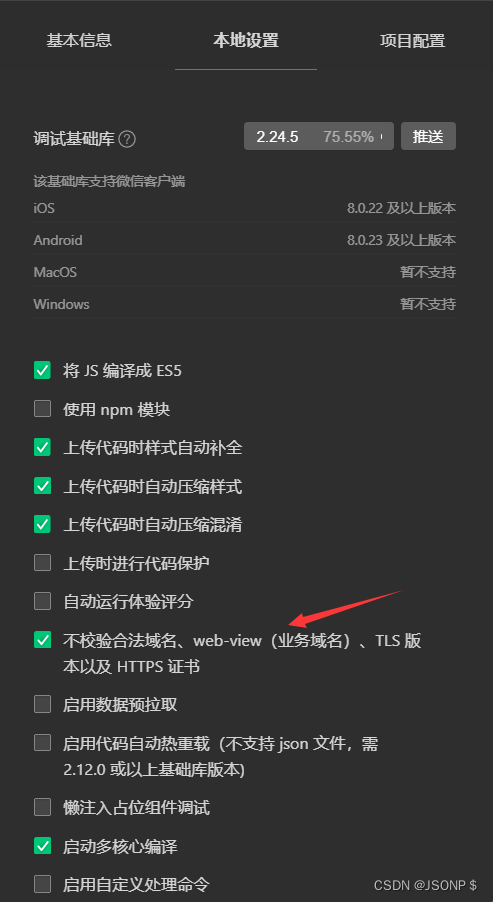

02 optimize the default structure of wechat developer tools

Opencvshare4 and vs2019 configuration

Microsoft Pinyin opens U / V input mode

Swiper horizontal rotation grid

A real-time target detection model Yolo

Leetcode 93 recovery IP address

CCF 201409-1: adjacent number pairs (100 points + problem solving ideas)

Understand HMM